Mark Pfeiffer

AMZ Driverless: The Full Autonomous Racing System

May 13, 2019

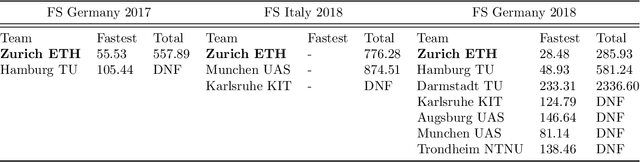

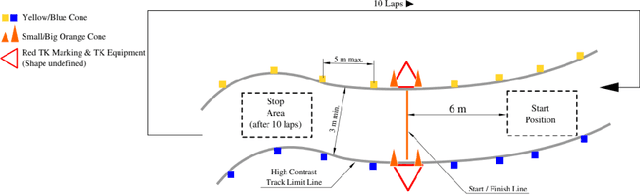

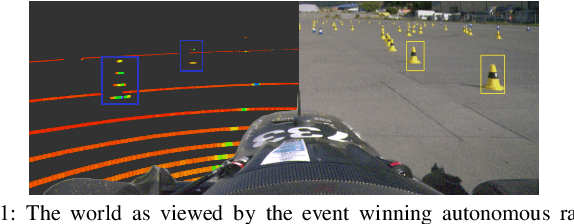

Abstract: This paper presents the algorithms and system architecture of an autonomous racecar. The introduced vehicle is powered by a software stack designed for robustness, reliability, and extensibility. To race autonomously around a previously unknown track, the proposed solution combines state-of-the-art techniques from different fields of robotics. Specifically, perception, estimation, and control are incorporated into one high-performance autonomous racecar. This complex robotic system, developed by AMZ Driverless and ETH Zurich, finished 1st overall at each competition we attended: Formula Student Germany 2017, Formula Student Italy 2018 and Formula Student Germany 2018. We discuss the findings and learnings from these competitions and present an experimental evaluation of each module of our solution.

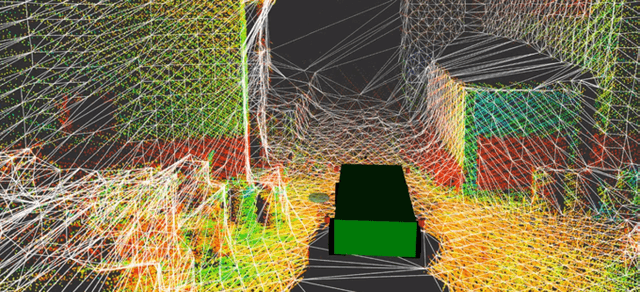

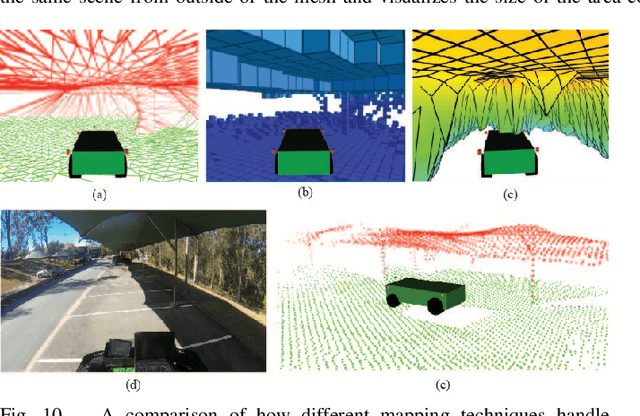

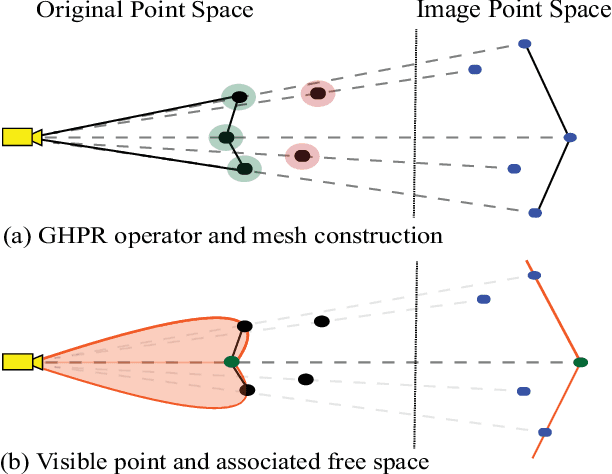

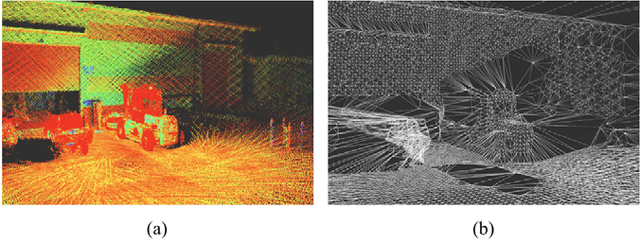

OVPC Mesh: 3D Free-space Representation for Local Ground Vehicle Navigation

Nov 26, 2018

Abstract: This paper presents a novel approach for local 3D environment representation for autonomous unmanned ground vehicle (UGV) navigation called On Visible Point Clouds Mesh (OVPC Mesh). Our approach represents the surroundings of the robot as a watertight 3D mesh generated from local point cloud data in order to represent the free space around the robot. It is a conservative estimate of the free space and provides a desirable trade-off between representation precision and computational efficiency, without having to discretize the environment into a fixed grid size. Our experiments analyze the usability of the approach for UGV navigation in rough terrain, both in simulation and in a fully integrated real-world system. Additionally, we compare our approach to well-known state-of-the-art solutions, such as Octomap and Elevation Mapping, and show that OVPC Mesh can provide reliable 3D information for trajectory planning while fulfilling real-time constraints.

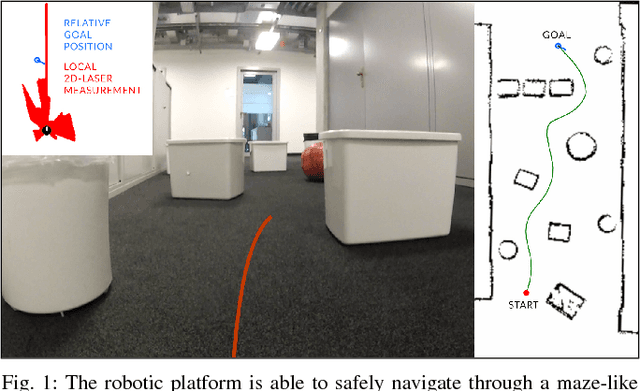

From Perception to Decision: A Data-driven Approach to End-to-end Motion Planning for Autonomous Ground Robots

Nov 06, 2018

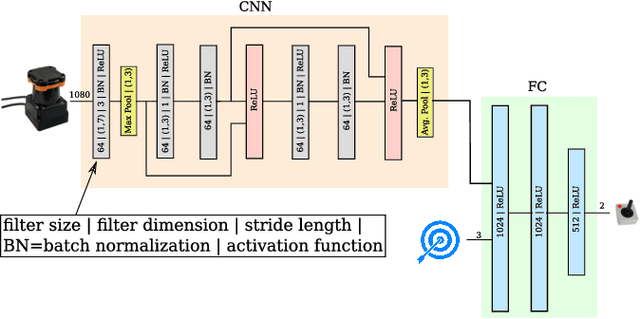

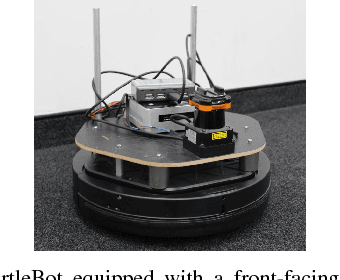

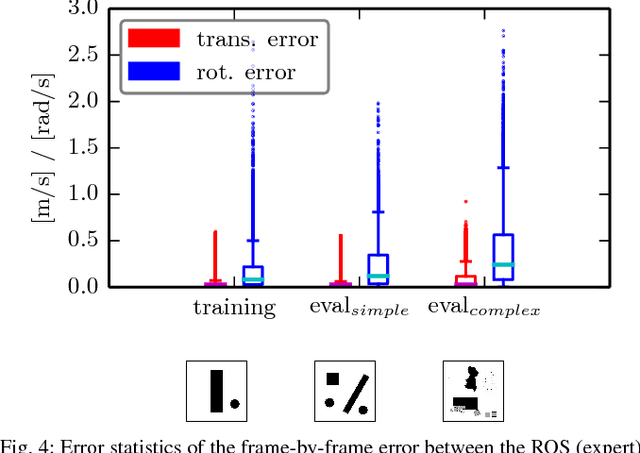

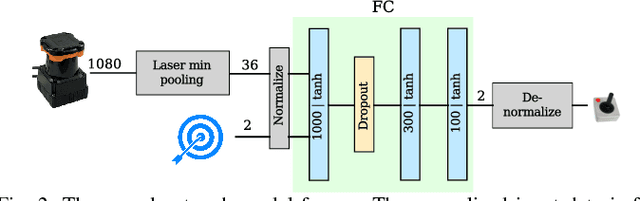

Abstract: Learning from demonstration for motion planning is an ongoing research topic. In this paper we present a model that is able to learn the complex mapping from raw 2D laser range findings and a target position to the required steering commands for the robot. To the best of our knowledge, this work presents the first approach that learns a target-oriented end-to-end navigation model for a robotic platform. The supervised model training is based on expert demonstrations generated in simulation with an existing motion planner. We demonstrate that the learned navigation model is directly transferable to previously unseen virtual and, more interestingly, real-world environments. It can safely navigate the robot through obstacle-cluttered environments to reach the provided targets. We present an extensive qualitative and quantitative evaluation of the neural network-based motion planner, and compare it to a grid-based global approach, both in simulation and in real-world experiments.

Redundant Perception and State Estimation for Reliable Autonomous Racing

Sep 26, 2018

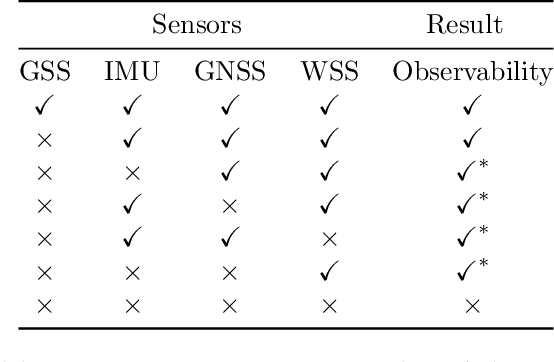

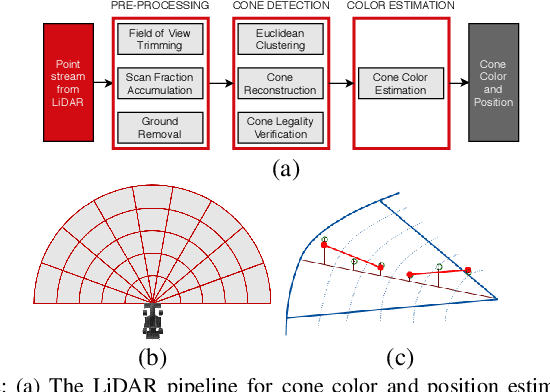

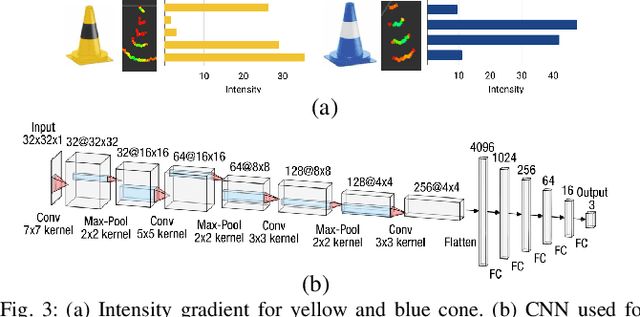

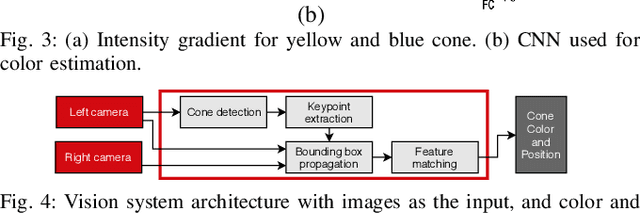

Abstract: In autonomous racing, vehicles operate close to the limits of handling and a sensor failure can have critical consequences. To limit the impact of such failures, this paper presents the redundant perception and state estimation approaches developed for an autonomous race car. Redundancy in perception is achieved by estimating the color and position of the track-delimiting objects using two sensor modalities independently. Specifically, learning-based approaches are used to generate color and pose estimates from LiDAR and camera data respectively. The redundant perception inputs are fused by a particle-filter-based SLAM algorithm that operates in real time. Velocity is estimated using slip dynamics, with reliability being ensured through a probabilistic failure detection algorithm. The sub-modules are extensively evaluated in real-world racing conditions using the autonomous race car "gotthard driverless", achieving lateral accelerations up to 1.7 g and a top speed of 90 km/h.

Reinforced Imitation: Sample Efficient Deep Reinforcement Learning for Map-less Navigation by Leveraging Prior Demonstrations

Aug 31, 2018

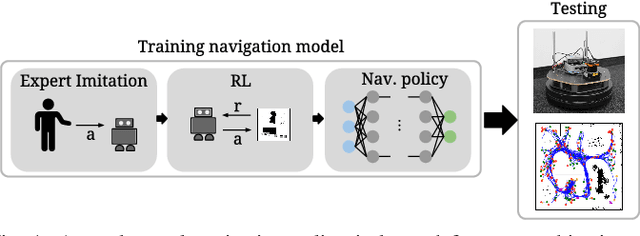

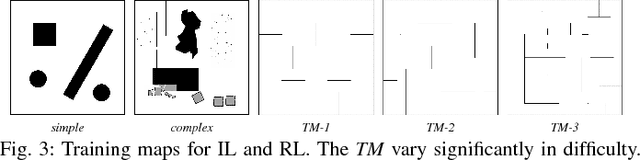

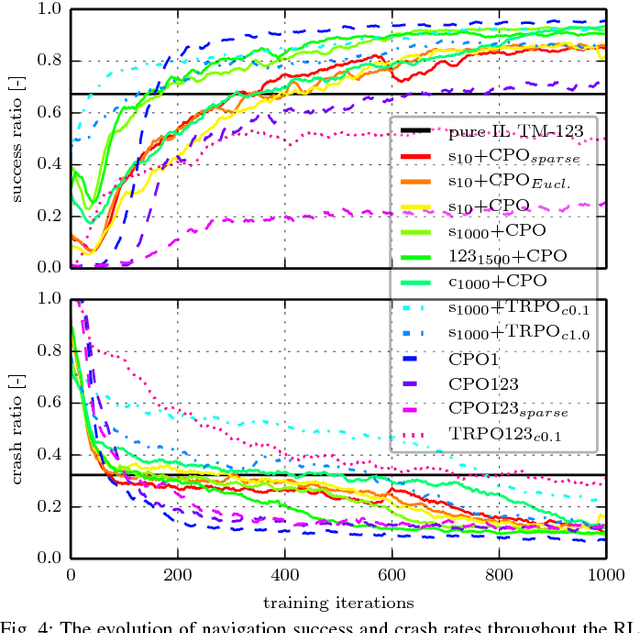

Abstract: This work presents a case study of a learning-based approach for target-driven map-less navigation. The underlying navigation model is an end-to-end neural network which is trained using a combination of expert demonstrations, imitation learning (IL) and reinforcement learning (RL). While RL and IL suffer from a large sample complexity and the distribution mismatch problem, respectively, we show that leveraging prior expert demonstrations for pre-training can reduce the training time to reach at least the same level of performance compared to plain RL by a factor of 5. We present a thorough evaluation of different combinations of expert demonstrations, different RL algorithms and reward functions, both in simulation and on a real robotic platform. Our results show that the final model outperforms both standalone approaches in the number of successful navigation tasks. In addition, the RL reward function can be significantly simplified when using pre-training, e.g. by using a sparse reward only. The learned navigation policy is able to generalize to unseen and real-world environments.
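To make the "sparse reward only" idea concrete, here is a minimal sketch of what such a reward could look like for target-driven navigation. The function name `sparse_reward`, the tolerance value, and the choice to reward only target arrival are illustrative assumptions, not details taken from the paper.

```python
import math


def sparse_reward(robot_xy, target_xy, goal_tolerance=0.3):
    """Return (reward, done) for one step of a navigation episode.

    Hypothetical sketch: the agent receives a reward of 1.0 only when it
    is within `goal_tolerance` meters of the target, and 0.0 otherwise.
    No distance-based shaping term is used.
    """
    reached = math.dist(robot_xy, target_xy) <= goal_tolerance
    return (1.0, True) if reached else (0.0, False)
```

A dense alternative would add a term proportional to the progress toward the target at every step; the abstract suggests that pre-training from demonstrations can make such shaping unnecessary.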

Dynamic Objects Segmentation for Visual Localization in Urban Environments

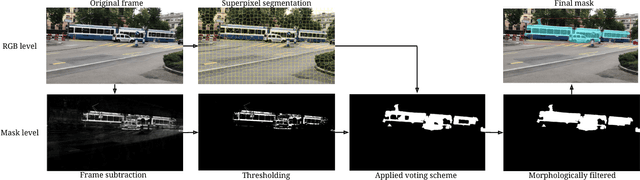

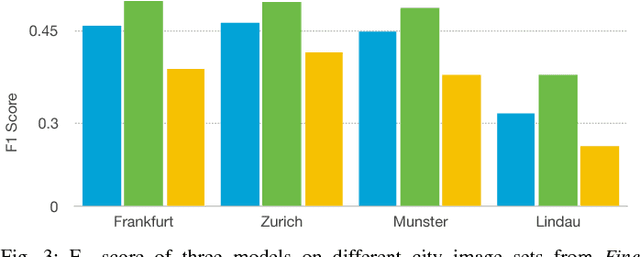

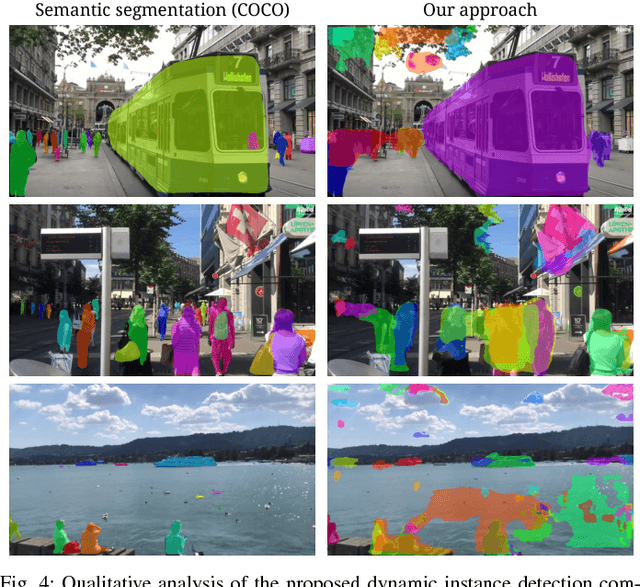

Jul 09, 2018

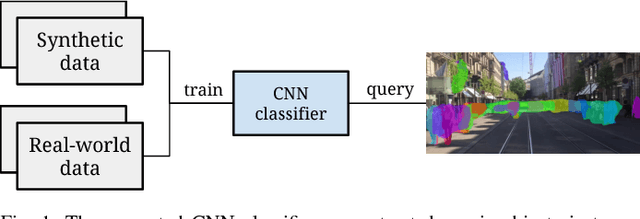

Abstract: Visual localization and mapping is a crucial capability to address many challenges in mobile robotics. It constitutes a robust, accurate and cost-effective approach for local and global pose estimation within prior maps. Yet, in highly dynamic environments, like crowded city streets, problems arise as major parts of the image can be covered by dynamic objects. Consequently, visual odometry pipelines often diverge and the localization systems malfunction as detected features are not consistent with the precomputed 3D model. In this work, we present an approach to automatically detect dynamic object instances to improve the robustness of vision-based localization and mapping in crowded environments. By training a convolutional neural network model with a combination of synthetic and real-world data, dynamic object instance masks are learned in a semi-supervised way. The real-world data can be collected with a standard camera and requires minimal further post-processing. Our experiments show that a wide range of dynamic objects can be reliably detected using the presented method. Promising performance is demonstrated on our own and on publicly available datasets, which also shows the generalization capabilities of this approach.

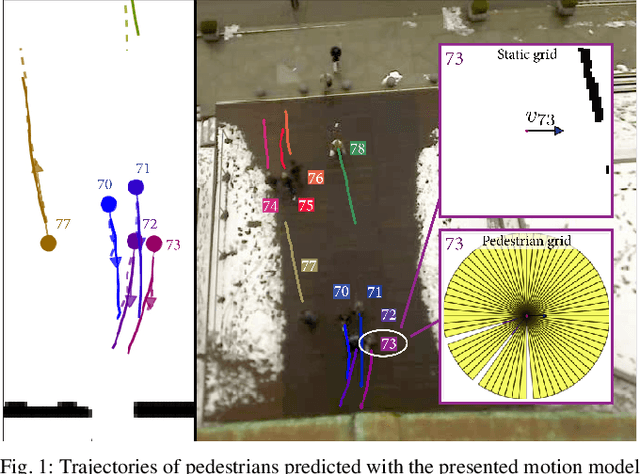

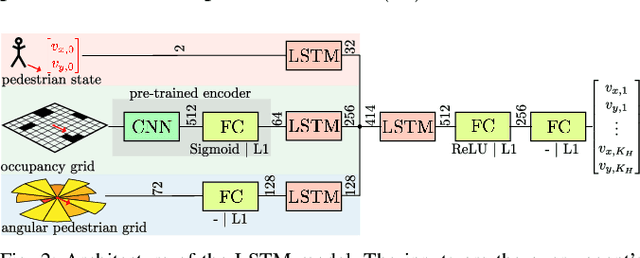

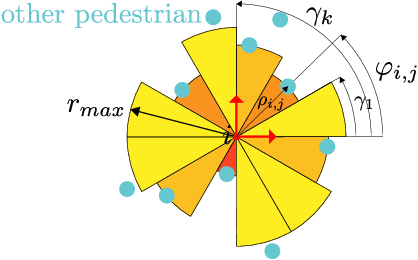

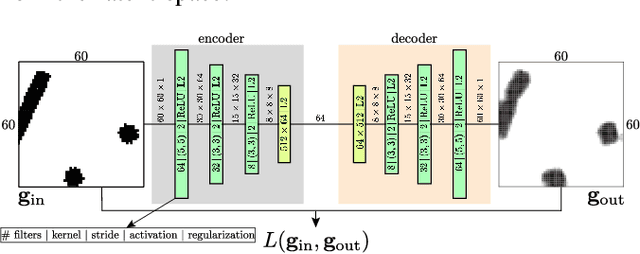

A Data-driven Model for Interaction-aware Pedestrian Motion Prediction in Object Cluttered Environments

Feb 26, 2018

Abstract: This paper reports on a data-driven, interaction-aware motion prediction approach for pedestrians in environments cluttered with static obstacles. When navigating in such workspaces shared with humans, robots need accurate motion predictions of the surrounding pedestrians. Human navigation behavior is mostly influenced by the surrounding pedestrians and by the static obstacles in their vicinity. In this paper we introduce a new model based on Long Short-Term Memory (LSTM) neural networks, which is able to learn human motion behavior from demonstrated data. To the best of our knowledge, this is the first approach using LSTMs that incorporates both static obstacles and surrounding pedestrians for trajectory forecasting. As part of the model, we introduce a new way of encoding surrounding pedestrians based on a 1D grid in polar angle space. We evaluate the benefit of interaction-aware motion prediction and the added value of incorporating static obstacles on both simulation and real-world datasets by comparing with state-of-the-art approaches. The results show that our new approach outperforms the other approaches while being very computationally efficient, and that taking into account static obstacles for motion predictions significantly improves the prediction accuracy, especially in cluttered environments.
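The 1D grid in polar angle space can be pictured with a small sketch. The abstract does not specify what each angular cell stores, so the version below assumes each cell holds the distance to the closest pedestrian in that direction, capped at a maximum range; `polar_angle_grid`, `num_bins`, and `max_range` are hypothetical names and defaults.

```python
import math


def polar_angle_grid(ego_xy, ego_heading, others_xy, num_bins=36, max_range=6.0):
    """Encode surrounding pedestrians as a 1D grid over polar angle.

    Assumption for illustration: each of the `num_bins` angular cells
    stores the distance to the closest pedestrian falling into it, and
    empty cells are filled with `max_range`.
    """
    grid = [max_range] * num_bins
    bin_width = 2.0 * math.pi / num_bins
    for ox, oy in others_xy:
        dx, dy = ox - ego_xy[0], oy - ego_xy[1]
        dist = math.hypot(dx, dy)
        if dist >= max_range:
            continue  # too far away to influence the encoding
        # Angle relative to the ego heading, wrapped to [0, 2*pi].
        angle = (math.atan2(dy, dx) - ego_heading) % (2.0 * math.pi)
        # Clamp guards against the wrapped angle landing exactly on 2*pi.
        idx = min(int(angle / bin_width), num_bins - 1)
        grid[idx] = min(grid[idx], dist)
    return grid
```

The output has a fixed length regardless of how many pedestrians are nearby, which is what makes this kind of encoding convenient as a network input.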

Cone Detection using a Combination of LiDAR and Vision-based Machine Learning

Nov 06, 2017

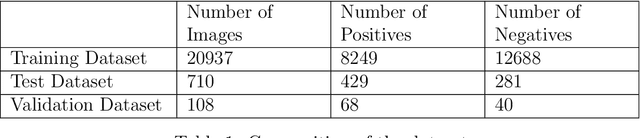

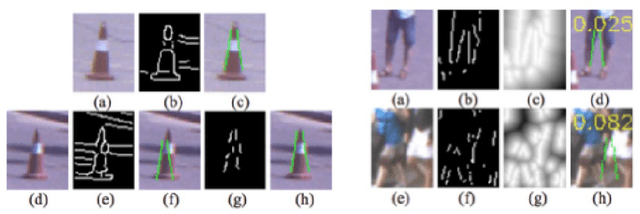

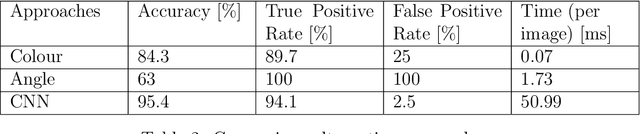

Abstract: The classification and position estimation of objects are becoming increasingly relevant as robotics expands into diverse areas of society. In this bachelor thesis, we developed a cone detection algorithm for an autonomous car using a LiDAR sensor and a colour camera. By evaluating simple constraints, the LiDAR detection algorithm preselects cone candidates in three-dimensional space. The candidates are projected into the image plane of the colour camera and an image candidate is cropped out. A convolutional neural network classifies the image candidates as cone or not a cone. With the fusion of the precise position estimation of the LiDAR sensor and the high classification accuracy of a neural network, a reliable cone detection algorithm was implemented. Furthermore, a path planning algorithm generates a path around the detected cones. The final system detects cones even at higher velocities and has the potential to drive fully autonomously around the cones.
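The projection-and-crop step can be sketched with a standard pinhole camera model. This is an illustration under assumptions, not the thesis implementation: the candidate is assumed to be already expressed in the camera frame (z pointing forward), lens distortion is ignored, and `project_to_crop`, the intrinsics `fx, fy, cx, cy`, and `half_size_m` are hypothetical names.

```python
def project_to_crop(point_cam, fx, fy, cx, cy, image_size, half_size_m=0.2):
    """Project a 3D cone candidate and return a pixel crop box.

    Returns (left, top, right, bottom) clipped to the image, or None if
    the candidate lies behind the camera or outside the image.
    """
    x, y, z = point_cam
    if z <= 0.0:
        return None  # behind the camera, cannot be seen
    # Pinhole projection of the candidate centre.
    u = fx * x / z + cx
    v = fy * y / z + cy
    # Apparent half-size in pixels shrinks with distance.
    half_px = fx * half_size_m / z
    width, height = image_size
    left = max(0, int(round(u - half_px)))
    top = max(0, int(round(v - half_px)))
    right = min(width, int(round(u + half_px)))
    bottom = min(height, int(round(v + half_px)))
    if left >= right or top >= bottom:
        return None  # crop falls entirely outside the image
    return (left, top, right, bottom)
```

Each returned box would then be cut out of the camera image and passed to the classifier as one image candidate.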

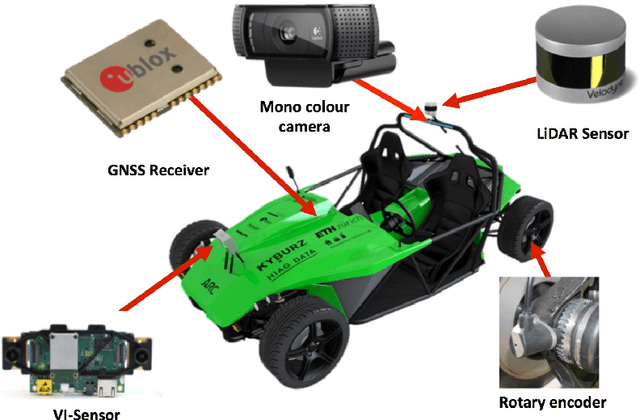

Autonomous Electric Race Car Design

Nov 01, 2017

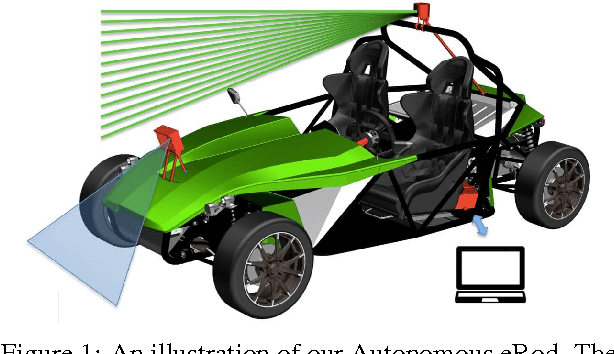

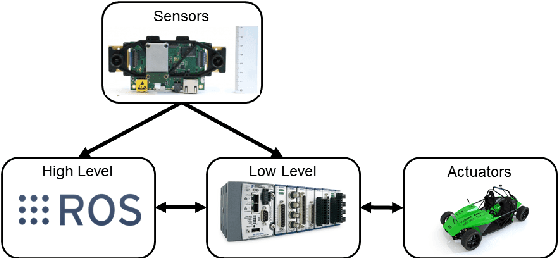

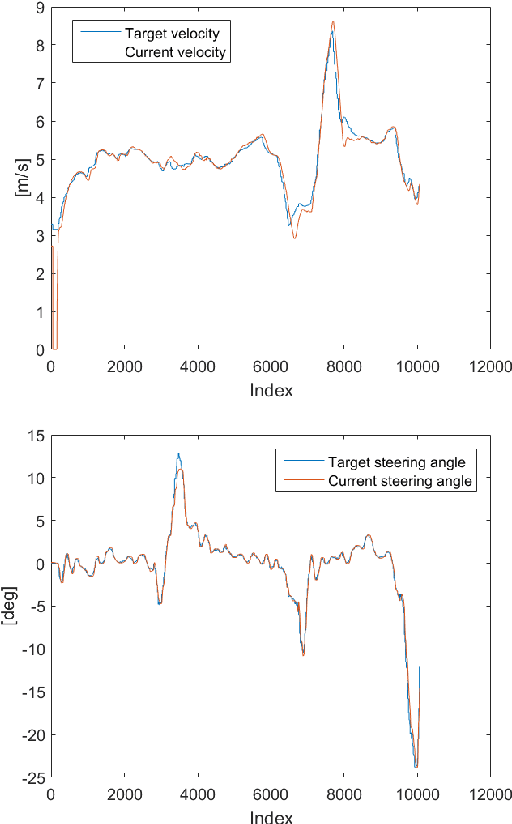

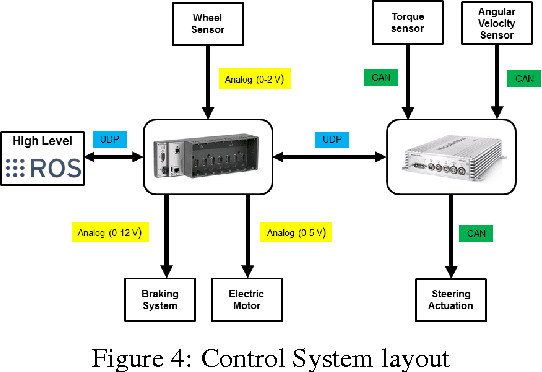

Abstract: Autonomous driving and electric vehicles are currently very active research and development areas. In this paper we present the conversion of a standard Kyburz eRod into an autonomous vehicle that can be operated in challenging environments such as Swiss mountain passes. The overall hardware and software architectures are described in detail with a special emphasis on the sensor requirements for autonomous vehicles operating in partially structured environments. Furthermore, the design process itself and the finalized system architecture are presented. The work shows state-of-the-art results in localization and controls for self-driving high-performance electric vehicles. Test results of the overall system are presented, which show the importance of generalizable state estimation algorithms to handle a plethora of conditions.
