Paulo Borges

Deformable Cluster Manipulation via Whole-Arm Policy Learning

Jul 22, 2025

Abstract:Manipulating clusters of deformable objects presents a substantial challenge with widespread applicability, but requires contact-rich whole-arm interactions. A potential solution must address the limited capacity for realistic model synthesis, high uncertainty in perception, and the lack of efficient spatial abstractions, among others. We propose a novel framework for learning model-free policies integrating two modalities: 3D point clouds and proprioceptive touch indicators, emphasising manipulation with full body contact awareness, going beyond traditional end-effector modes. Our reinforcement learning framework leverages a distributional state representation, aided by kernel mean embeddings, to achieve improved training efficiency and real-time inference. Furthermore, we propose a novel context-agnostic occlusion heuristic to clear deformables from a target region for exposure tasks. We deploy the framework in a power line clearance scenario and observe that the agent generates creative strategies leveraging multiple arm links for de-occlusion. Finally, we perform zero-shot sim-to-real policy transfer, allowing the arm to clear real branches with unknown occlusion patterns, unseen topology, and uncertain dynamics.
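As a rough illustration of how a kernel mean embedding can turn a variable-size point cloud into a fixed-length state vector, the sketch below averages random Fourier features (an approximation of an RBF kernel) over all points. The function names, feature count, and lengthscale are assumptions made for this example and are not taken from the paper.

```python
import math
import random


def make_rff(dim_in, num_features, lengthscale, seed=0):
    """Draw random Fourier feature parameters approximating an RBF kernel.

    Hypothetical helper: the kernel choice and its parameters are assumptions.
    """
    rng = random.Random(seed)
    weights = [[rng.gauss(0.0, 1.0 / lengthscale) for _ in range(dim_in)]
               for _ in range(num_features)]
    phases = [rng.uniform(0.0, 2.0 * math.pi) for _ in range(num_features)]
    return weights, phases


def embed_point_cloud(points, weights, phases):
    """Empirical kernel mean embedding: average the feature map over the points."""
    num_features = len(weights)
    scale = math.sqrt(2.0 / num_features)
    embedding = [0.0] * num_features
    for p in points:
        for j in range(num_features):
            proj = sum(w * x for w, x in zip(weights[j], p)) + phases[j]
            embedding[j] += scale * math.cos(proj)
    return [e / len(points) for e in embedding]


weights, phases = make_rff(dim_in=3, num_features=16, lengthscale=0.5, seed=1)
cloud = [(0.0, 0.0, 0.0), (1.0, 0.5, 0.2), (0.3, 0.3, 0.9)]
state = embed_point_cloud(cloud, weights, phases)  # always 16 numbers
```

The embedding has the same length for any number of points and does not depend on their order, which is what makes it convenient as a policy input.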

Online 6DoF Pose Estimation in Forests using Cross-View Factor Graph Optimisation and Deep Learned Re-localisation

Sep 25, 2024

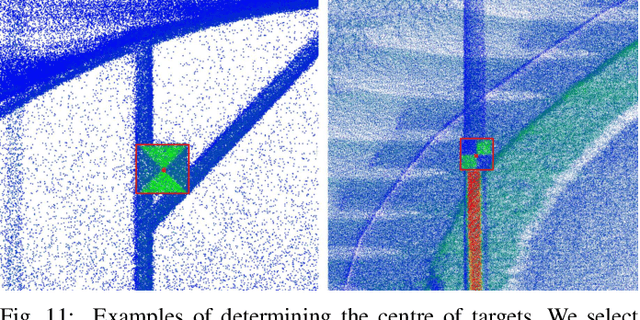

Abstract:This paper presents a novel approach for robust global localisation and 6DoF pose estimation of ground robots in forest environments by leveraging cross-view factor graph optimisation and deep-learned re-localisation. The proposed method addresses the challenges of aligning aerial and ground data for pose estimation, which is crucial for accurate point-to-point navigation in GPS-denied environments. By integrating information from both perspectives into a factor graph framework, our approach effectively estimates the robot's global position and orientation. We validate the performance of our method through extensive experiments in diverse forest scenarios, demonstrating its superiority over existing baselines in terms of accuracy and robustness in these challenging environments. Experimental results show that our proposed localisation system can achieve drift-free localisation with bounded positioning errors, ensuring reliable and safe robot navigation under canopies.

Under-Canopy Navigation using Aerial Lidar Maps

Apr 05, 2024

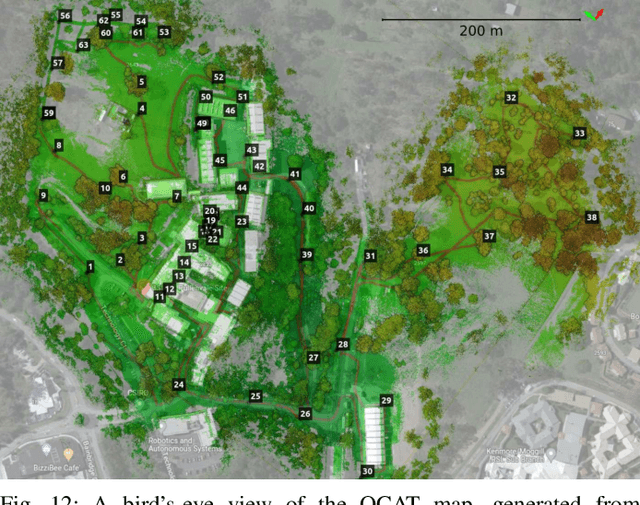

Abstract:Autonomous navigation in unstructured natural environments poses a significant challenge. In goal navigation tasks without prior information, the limited look-ahead of onboard sensors utilised by robots compromises path efficiency. We propose a novel approach that leverages an above-the-canopy aerial map for improved ground robot navigation. Our system utilises aerial lidar scans to create a 3D probabilistic occupancy map, uniquely incorporating the uncertainty in the aerial vehicle's trajectory for improved accuracy. Novel path planning cost functions are introduced, combining path length with obstruction risk estimated from the probabilistic map. The D-Star Lite algorithm then calculates an optimal (minimum-cost) path to the goal. This system also allows for dynamic replanning upon encountering unforeseen obstacles on the ground. Extensive experiments and ablation studies in simulated and real forests demonstrate the effectiveness of our system.
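To make the idea of combining path length with obstruction risk concrete, here is a minimal sketch on a 2D grid. It uses plain Dijkstra search instead of D-Star Lite (so it does not support incremental replanning), and the additive cost `1 + risk_weight * risk` is an assumed form, not the cost function from the paper.

```python
import heapq


def plan_path(risk, start, goal, risk_weight=5.0):
    """Minimum-cost path on a 4-connected grid.

    Each move costs 1 (path length) plus risk_weight times the obstruction
    risk of the cell being entered. Both the grid and the weighting are
    hypothetical stand-ins for a probabilistic occupancy map.
    """
    rows, cols = len(risk), len(risk[0])
    best = {start: 0.0}
    parent = {}
    frontier = [(0.0, start)]
    while frontier:
        cost, cell = heapq.heappop(frontier)
        if cell == goal:
            break
        if cost > best[cell]:
            continue  # stale queue entry
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if 0 <= nr < rows and 0 <= nc < cols:
                new_cost = cost + 1.0 + risk_weight * risk[nr][nc]
                if new_cost < best.get(nxt, float("inf")):
                    best[nxt] = new_cost
                    parent[nxt] = cell
                    heapq.heappush(frontier, (new_cost, nxt))
    if goal not in best:
        return None, float("inf")
    path = [goal]
    while path[-1] != start:
        path.append(parent[path[-1]])
    path.reverse()
    return path, best[goal]


risk_map = [[0.0, 0.0, 0.0],
            [0.0, 0.9, 0.0],
            [0.0, 0.0, 0.0]]
path, cost = plan_path(risk_map, start=(1, 0), goal=(1, 2))
```

With the risky centre cell, the planner prefers a longer detour; setting `risk_weight=0.0` recovers the shortest path straight through it.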

Multi-Agent 3D Map Reconstruction and Change Detection in Microgravity with Free-Flying Robots

Nov 05, 2023

Abstract:Assistive free-flyer robots autonomously caring for future crewed outposts, such as NASA's Astrobee robots on the International Space Station (ISS), must be able to detect day-to-day interior changes to track inventory, detect and diagnose faults, and monitor the outpost status. This work presents a framework for multi-agent cooperative mapping and change detection to enable robotic maintenance of space outposts. One agent is used to reconstruct a 3D model of the environment from sequences of images and corresponding depth information. Another agent is used to periodically scan the environment for inconsistencies against the 3D model. Change detection is validated after completing the surveys using real image and pose data collected by Astrobee robots in a ground testing environment and from microgravity aboard the ISS. This work outlines the objectives, requirements, and algorithmic modules for the multi-agent reconstruction system, including recommendations for its use by assistive free-flyers aboard future microgravity outposts.

Path Signatures for Diversity in Probabilistic Trajectory Optimisation

Aug 08, 2023

Abstract:Motion planning can be cast as a trajectory optimisation problem where a cost is minimised as a function of the trajectory being generated. In complex environments with several obstacles and complicated geometry, this optimisation problem is usually difficult to solve and prone to local minima. However, recent advancements in computing hardware allow for parallel trajectory optimisation where multiple solutions are obtained simultaneously, each initialised from a different starting point. Unfortunately, without a strategy preventing two solutions from collapsing onto each other, naive parallel optimisation can suffer from mode collapse, diminishing the efficiency of the approach and the likelihood of finding a global solution. In this paper we leverage recent advances in the theory of rough paths to devise an algorithm for parallel trajectory optimisation that promotes diversity over the range of solutions, therefore avoiding mode collapse and achieving better global properties. Our approach builds on path signatures and Hilbert space representations of trajectories, and connects parallel variational inference for trajectory estimation with diversity-promoting kernels. We empirically demonstrate that this strategy achieves lower average costs than competing alternatives on a range of problems, from 2D navigation to robotic manipulators operating in cluttered environments.
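For readers unfamiliar with path signatures, the following sketch computes the first two signature levels of a piecewise-linear trajectory using Chen's identity. It is only meant to show why signatures can tell apart trajectories that share the same endpoints; the function name is hypothetical and nothing here reproduces the paper's algorithm.

```python
def signature_depth2(path):
    """First two signature levels of a piecewise-linear path.

    level1[i] is the total displacement along axis i.
    level2[i][j] is the iterated integral of dx_i then dx_j.
    """
    dim = len(path[0])
    level1 = [0.0] * dim
    level2 = [[0.0] * dim for _ in range(dim)]
    for prev, curr in zip(path, path[1:]):
        delta = [c - p for p, c in zip(prev, curr)]
        # Chen's identity for appending one straight segment.
        for i in range(dim):
            for j in range(dim):
                level2[i][j] += level1[i] * delta[j] + 0.5 * delta[i] * delta[j]
        for i in range(dim):
            level1[i] += delta[i]
    return level1, level2


# Two routes between the same endpoints, going around opposite corners.
right_then_up = [(0.0, 0.0), (1.0, 0.0), (1.0, 1.0)]
up_then_right = [(0.0, 0.0), (0.0, 1.0), (1.0, 1.0)]
s1_a, s2_a = signature_depth2(right_then_up)
s1_b, s2_b = signature_depth2(up_then_right)
```

Both routes have the same level-1 term (the displacement), but their level-2 terms differ, so a kernel built on signatures can register them as distinct solutions.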

Learning to Simulate Tree-Branch Dynamics for Manipulation

Jun 06, 2023

Abstract:We propose to use a simulation-driven inverse inference approach to model the joint dynamics of tree branches under manipulation. Learning branch dynamics and gaining the ability to manipulate deformable vegetation can help with occlusion-prone tasks, such as fruit picking in dense foliage, as well as moving overhanging vines and branches for navigation in dense vegetation. The underlying deformable tree geometry is encapsulated as coarse spring abstractions executed on parallel, non-differentiable simulators. The implicit statistical model defined by the simulator, reference trajectories obtained by actively probing the ground truth, and the Bayesian formalism together guide the spring parameter posterior density estimation. Our non-parametric inference algorithm, based on Stein Variational Gradient Descent, incorporates biologically motivated assumptions into the inference process as neural-network-driven learnt joint priors; moreover, it leverages the finite difference scheme for gradient approximations. Real and simulated experiments confirm that our model can predict deformation trajectories and quantify the estimation uncertainty, and that it performs better when benchmarked against other inference algorithms, particularly those from the Monte Carlo family. The model displays strong robustness properties in the presence of heteroscedastic sensor noise; furthermore, it can generalise to unseen grasp locations.
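The combination of Stein Variational Gradient Descent with finite-difference gradients can be sketched in one dimension as follows. The black-box `log_prob` stands in for a non-differentiable simulator likelihood; the fixed RBF bandwidth, step size, and toy Gaussian target are assumptions chosen for illustration, not the paper's settings.

```python
import math


def finite_diff_grad(log_prob, x, step=1e-4):
    """Central-difference estimate of d/dx log_prob(x) for a black-box density."""
    return (log_prob(x + step) - log_prob(x - step)) / (2.0 * step)


def svgd_step(particles, log_prob, step_size=0.1, bandwidth=1.0):
    """One SVGD update of scalar particles with a fixed-bandwidth RBF kernel."""
    n = len(particles)
    grads = [finite_diff_grad(log_prob, x) for x in particles]
    updated = []
    for xi in particles:
        phi = 0.0
        for xj, gj in zip(particles, grads):
            k = math.exp(-((xj - xi) ** 2) / (2.0 * bandwidth ** 2))
            # Attraction towards high density plus repulsion between particles.
            phi += k * gj + k * (xi - xj) / bandwidth ** 2
        updated.append(xi + step_size * phi / n)
    return updated


def toy_log_prob(x):
    """Unnormalised log density of a Gaussian with mean 2 and unit variance."""
    return -0.5 * (x - 2.0) ** 2


particles = [-1.0, -0.5, 0.0, 0.5, 1.0]
for _ in range(500):
    particles = svgd_step(particles, toy_log_prob)
```

The repulsion term keeps the particles spread around the mode instead of collapsing onto it, which is what allows the particle set to represent posterior uncertainty.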

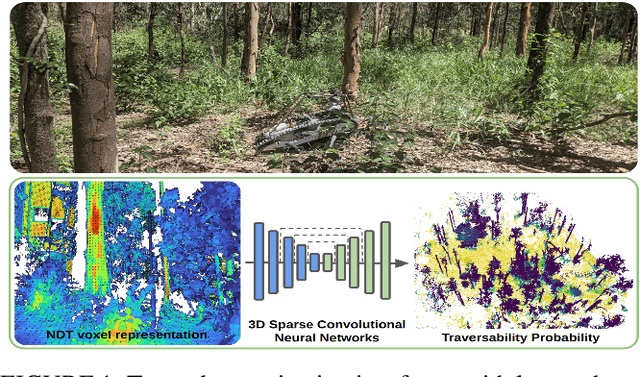

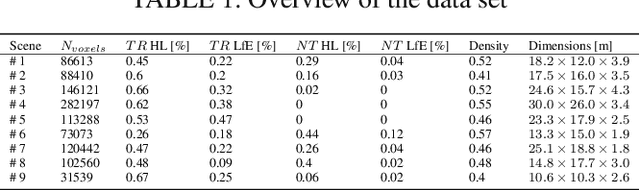

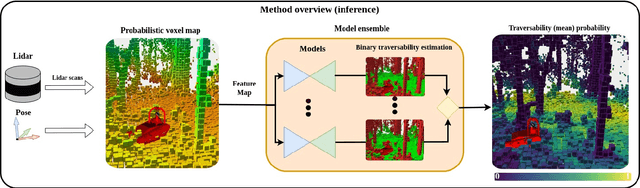

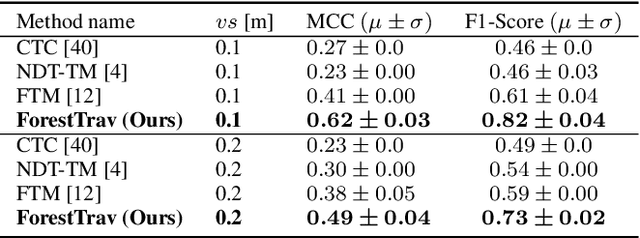

ForestTrav: Accurate, Efficient and Deployable Forest Traversability Estimation for Autonomous Ground Vehicles

May 22, 2023

Abstract:Autonomous navigation in unstructured vegetated environments remains an open challenge. To successfully operate in these settings, ground vehicles must assess the traversability of the environment and determine which vegetation is pliable enough to push through. In this work, we propose a novel method that combines a high-fidelity and feature-rich 3D voxel representation while leveraging the structural context and sparseness of sparse convolutional neural networks (SCNNs) to assess traversability estimation (TE) in densely vegetated environments. The proposed method is thoroughly evaluated on an accurately labeled real-world data set that we provide to the community. It is shown to outperform state-of-the-art methods by a significant margin (0.59 vs. 0.39 MCC score at 0.1m voxel resolution) in challenging scenes and to generalize to unseen environments. In addition, the method is economical in the amount of training data and training time required: a model is trained in minutes on a desktop computer. We show that by exploiting the context of the environment, our method can use different feature combinations with only limited performance variations. For example, our approach can be used with lidar-only features, whilst still assessing complex vegetated environments accurately, which was not demonstrated previously in the literature in such environments. In addition, we propose an approach to assess a traversability estimator's sensitivity to information quality and show our method's sensitivity is low.
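The MCC score quoted above is the Matthews correlation coefficient, which ranges from -1 to 1 and stays informative when classes are imbalanced. A minimal sketch of the standard formula follows; the confusion-matrix counts are made up for illustration and are not results from the paper.

```python
import math


def mcc(tp, fp, fn, tn):
    """Matthews correlation coefficient from binary confusion-matrix counts."""
    denom = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    if denom == 0:
        return 0.0  # common convention when any marginal count is zero
    return (tp * tn - fp * fn) / denom


# Hypothetical voxel counts: traversable predicted vs. labelled.
score = mcc(tp=80, fp=20, fn=10, tn=90)
```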

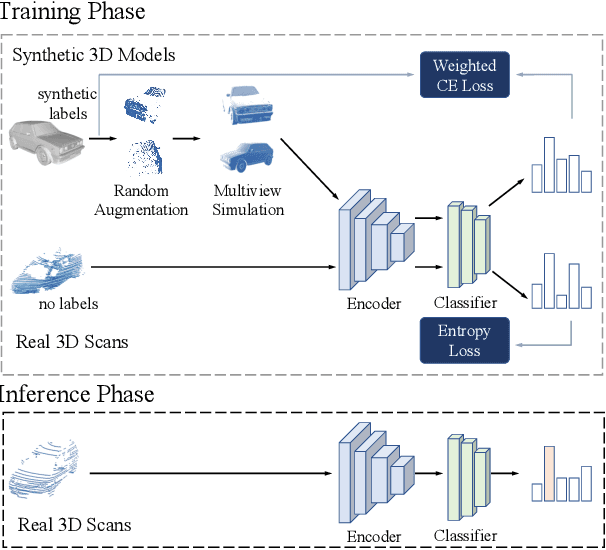

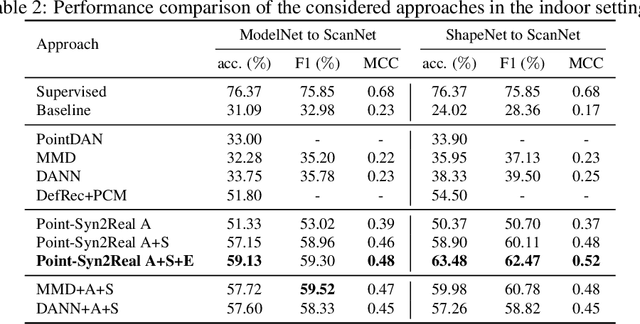

Point-Syn2Real: Semi-Supervised Synthetic-to-Real Cross-Domain Learning for Object Classification in 3D Point Clouds

Oct 31, 2022

Abstract:Object classification using LiDAR 3D point cloud data is critical for modern applications such as autonomous driving. However, labeling point cloud data is labor-intensive as it requires human annotators to visualize and inspect the 3D data from different perspectives. In this paper, we propose a semi-supervised cross-domain learning approach that does not rely on manual annotations of point clouds and performs similarly to fully-supervised approaches. We utilize available 3D object models to train classifiers that can generalize to real-world point clouds. We simulate the acquisition of point clouds by sampling 3D object models from multiple viewpoints and with arbitrary partial occlusions. We then augment the resulting set of point clouds through random rotations and added Gaussian noise to better emulate real-world scenarios. We then train point cloud encoding models, e.g., DGCNN, PointNet++, on the synthesized and augmented datasets and evaluate their cross-domain classification performance on corresponding real-world datasets. We also introduce Point-Syn2Real, a new benchmark dataset for cross-domain learning on point clouds. The results of our extensive experiments with this dataset demonstrate that the proposed cross-domain learning approach for point clouds outperforms the related baseline and state-of-the-art approaches in both indoor and outdoor settings in terms of cross-domain generalizability. The code and data will be available upon publishing.
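The augmentation step described above (random rotations plus Gaussian noise) might look like the sketch below. Rotating only about the vertical axis and the noise level of 0.01 are assumptions for this example; the abstract does not specify either.

```python
import math
import random


def augment(points, noise_std=0.01, rng=None):
    """Rotate a point cloud by a random yaw angle, then jitter every coordinate.

    Hypothetical helper: the rotation axis and noise_std are assumed values.
    """
    rng = rng or random.Random()
    angle = rng.uniform(0.0, 2.0 * math.pi)
    c, s = math.cos(angle), math.sin(angle)
    augmented = []
    for x, y, z in points:
        rx = c * x - s * y  # rotation about the z axis
        ry = s * x + c * y
        augmented.append((
            rx + rng.gauss(0.0, noise_std),
            ry + rng.gauss(0.0, noise_std),
            z + rng.gauss(0.0, noise_std),
        ))
    return augmented


synthetic_cloud = [(1.0, 0.0, 0.5), (0.0, 2.0, 1.0), (-1.0, -1.0, 0.0)]
noisy_cloud = augment(synthetic_cloud, noise_std=0.01, rng=random.Random(42))
```

Passing a seeded `random.Random` makes the augmentation reproducible, which helps when comparing encoders on the same synthetic set.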

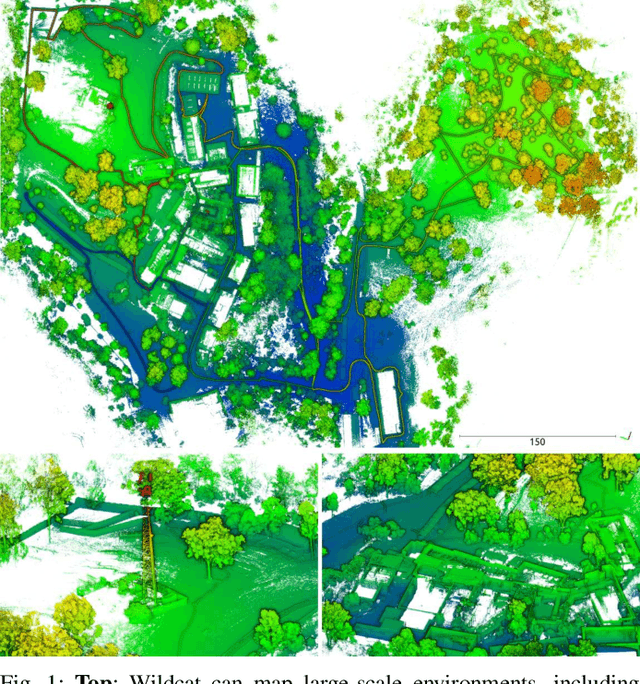

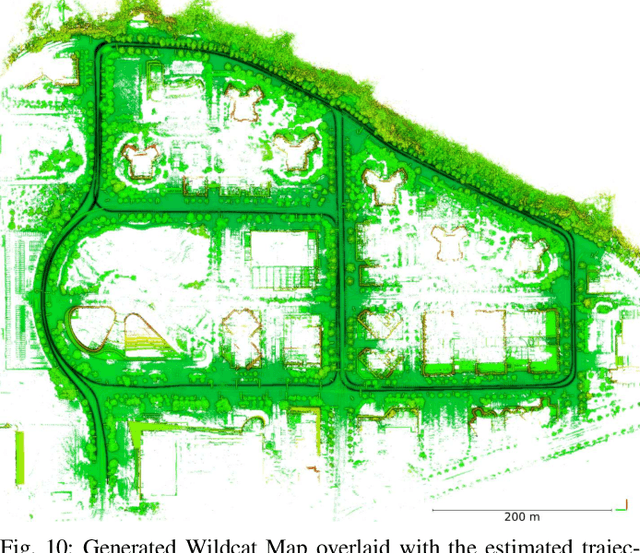

Wildcat: Online Continuous-Time 3D Lidar-Inertial SLAM

May 25, 2022

Abstract:We present Wildcat, a novel online 3D lidar-inertial SLAM system with exceptional versatility and robustness. At its core, Wildcat combines a robust real-time lidar-inertial odometry module, utilising a continuous-time trajectory representation, with an efficient pose-graph optimisation module that seamlessly supports both the single- and multi-agent settings. The robustness of Wildcat was recently demonstrated in the DARPA Subterranean Challenge where it outperformed other SLAM systems across various types of sensing-degraded and perceptually challenging environments. In this paper, we extensively evaluate Wildcat in a diverse set of new and publicly available real-world datasets and showcase its superior robustness and versatility over two existing state-of-the-art lidar-inertial SLAM systems.

Dual Online Stein Variational Inference for Control and Dynamics

Mar 23, 2021

Abstract:Model predictive control (MPC) schemes have a proven track record for delivering aggressive and robust performance in many challenging control tasks, coping with nonlinear system dynamics, constraints, and observational noise. Despite their success, these methods often rely on simple control distributions, which can limit their performance in highly uncertain and complex environments. MPC frameworks must be able to accommodate changing distributions over system parameters, based on the most recent measurements. In this paper, we devise an implicit variational inference algorithm able to estimate distributions over model parameters and control inputs on-the-fly. The method incorporates Stein Variational gradient descent to approximate the target distributions as a collection of particles, and performs updates based on a Bayesian formulation. This enables the approximation of complex multi-modal posterior distributions, typically occurring in challenging and realistic robot navigation tasks. We demonstrate our approach on both simulated and real-world experiments requiring real-time execution in the face of dynamically changing environments.
