Brian Coltin

DLO-Splatting: Tracking Deformable Linear Objects Using 3D Gaussian Splatting

May 13, 2025

Abstract:This work presents DLO-Splatting, an algorithm for estimating the 3D shape of Deformable Linear Objects (DLOs) from multi-view RGB images and gripper state information through prediction-update filtering. The DLO-Splatting algorithm uses a position-based dynamics model with shape smoothness and rigidity dampening corrections to predict the object shape. Optimization with a 3D Gaussian Splatting-based rendering loss iteratively renders and refines the prediction to align it with the visual observations in the update step. Initial experiments demonstrate promising results in a knot tying scenario, which is challenging for existing vision-only methods.
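
To make the prediction step more concrete, the following is a minimal sketch of a generic position-based dynamics (PBD) step for a chain of particles with one particle held by a gripper. It is not the authors' model: the smoothness and rigidity dampening corrections and the Gaussian Splatting update are omitted, and the function name `pbd_step`, its parameters, and the numeric values are assumptions chosen for illustration.

```python
import math

def pbd_step(positions, velocities, rest_length, pinned,
             dt=0.01, iterations=50, gravity=(0.0, 0.0, -9.81)):
    """One generic PBD step for a chain of 3D particles (illustrative only).

    positions, velocities: lists of [x, y, z] per particle.
    pinned: dict mapping particle index -> fixed [x, y, z] (e.g. a gripper pose).
    """
    # 1) Predict: integrate velocity and gravity; pinned particles follow the gripper.
    predicted = []
    for i, (p, v) in enumerate(zip(positions, velocities)):
        if i in pinned:
            predicted.append(list(pinned[i]))
        else:
            predicted.append([p[k] + dt * (v[k] + dt * gravity[k]) for k in range(3)])

    # 2) Project: iteratively restore the rest length between neighbouring particles.
    for _ in range(iterations):
        for i in range(len(predicted) - 1):
            a, b = predicted[i], predicted[i + 1]
            delta = [b[k] - a[k] for k in range(3)]
            dist = math.sqrt(sum(d * d for d in delta))
            w_a = 0.0 if i in pinned else 1.0        # inverse-mass weights
            w_b = 0.0 if (i + 1) in pinned else 1.0
            if dist == 0.0 or w_a + w_b == 0.0:
                continue
            corr = (dist - rest_length) / (dist * (w_a + w_b))
            for k in range(3):
                a[k] += w_a * corr * delta[k]
                b[k] -= w_b * corr * delta[k]

    # 3) Update velocities from the corrected positions.
    new_velocities = [[(q[k] - p[k]) / dt for k in range(3)]
                      for p, q in zip(positions, predicted)]
    return predicted, new_velocities


# Hypothetical three-particle chain, first particle held at the origin.
chain = [[0.0, 0.0, 0.0], [0.1, 0.0, 0.0], [0.2, 0.0, 0.0]]
rest = [[0.0, 0.0, 0.0] for _ in chain]
new_pos, new_vel = pbd_step(chain, rest, rest_length=0.1, pinned={0: [0.0, 0.0, 0.0]})
```

In a prediction-update filter of the kind the abstract describes, the output of such a step would serve as the predicted shape that the rendering-based update then refines.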

Deformable Cargo Transport in Microgravity with Astrobee

May 02, 2025

Abstract:We present pyastrobee: a simulation environment and control stack for Astrobee in Python, with an emphasis on cargo manipulation and transport tasks. We also demonstrate preliminary success from a sampling-based MPC controller, using reduced-order models of NASA's cargo transfer bag (CTB) to control a high-order deformable finite element model. Our code is open-source, fully documented, and available at https://danielpmorton.github.io/pyastrobee

Semantic Masking and Visual Feature Matching for Robust Localization

Nov 04, 2024

Abstract:We are interested in long-term deployments of autonomous robots to aid astronauts with maintenance and monitoring operations in settings such as the International Space Station. Unfortunately, such environments tend to be highly dynamic and unstructured, and their frequent reconfiguration poses a challenge for robust long-term localization of robots. Many state-of-the-art visual feature-based localization algorithms are not robust towards spatial scene changes, and SLAM algorithms, while promising, cannot run within the low-compute budget available to space robots. To address this gap, we present a computationally efficient semantic masking approach for visual feature matching that improves the accuracy and robustness of visual localization systems during long-term deployment in changing environments. Our method introduces a lightweight check that enforces matches to be within long-term static objects and have consistent semantic classes. We evaluate this approach using both map-based relocalization and relative pose estimation and show that it improves Absolute Trajectory Error (ATE) and correct match ratios on the publicly available Astrobee dataset. While this approach was originally developed for microgravity robotic freeflyers, it can be applied to any visual feature matching pipeline to improve robustness.
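
The lightweight check described above can be sketched as a filter over candidate feature matches. This is only an illustration under stated assumptions: the function name, the data layout (one semantic label per keypoint), and the class names are hypothetical and are not taken from the paper.

```python
from typing import List, Sequence, Set, Tuple

Match = Tuple[int, int]  # (keypoint index in query image, keypoint index in map image)

def filter_matches_by_semantics(matches: Sequence[Match],
                                query_labels: Sequence[str],
                                map_labels: Sequence[str],
                                static_classes: Set[str]) -> List[Match]:
    """Keep only matches whose two keypoints share a semantic class
    and whose class is considered long-term static."""
    kept = []
    for q_idx, m_idx in matches:
        q_cls = query_labels[q_idx]
        m_cls = map_labels[m_idx]
        if q_cls == m_cls and q_cls in static_classes:
            kept.append((q_idx, m_idx))
    return kept


# Hypothetical labels: cargo bags move around, handrails and vents do not.
candidate_matches = [(0, 0), (1, 1), (2, 2)]
query_labels = ["handrail", "cargo_bag", "handrail"]
map_labels = ["handrail", "cargo_bag", "vent"]
static = {"handrail", "vent"}

filtered = filter_matches_by_semantics(candidate_matches, query_labels, map_labels, static)
```

Here only the first match survives: the second lies on a movable object and the third pairs keypoints from different classes. The surviving matches would then go to whatever pose estimator the pipeline already uses.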

Unsupervised Change Detection for Space Habitats Using 3D Point Clouds

Dec 04, 2023

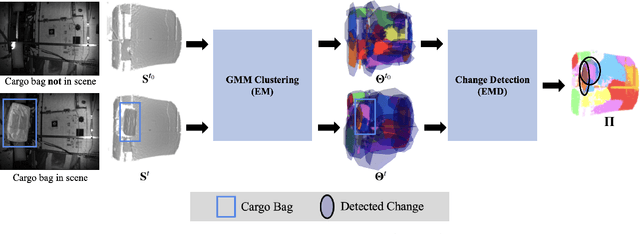

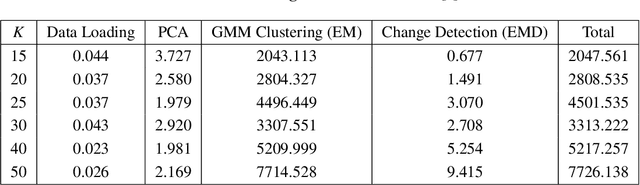

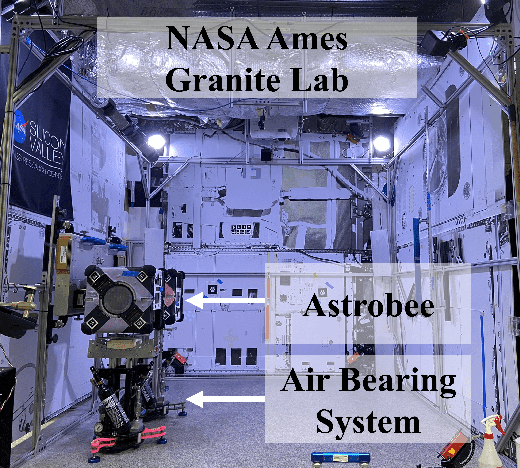

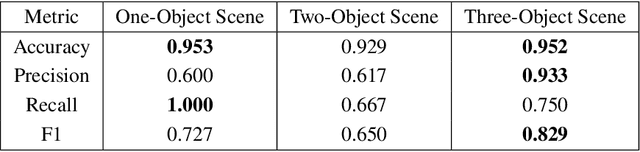

Abstract:This work presents an algorithm for scene change detection from point clouds to enable autonomous robotic caretaking in future space habitats. Autonomous robotic systems will help maintain future deep-space habitats, such as the Gateway space station, which will be uncrewed for extended periods. Existing scene analysis software used on the International Space Station (ISS) relies on manually-labeled images for detecting changes. In contrast, the algorithm presented in this work uses raw, unlabeled point clouds as inputs. The algorithm first applies modified Expectation-Maximization Gaussian Mixture Model (GMM) clustering to two input point clouds. It then performs change detection by comparing the GMMs using the Earth Mover's Distance. The algorithm is validated quantitatively and qualitatively using a test dataset collected by an Astrobee robot in the NASA Ames Granite Lab comprising single frame depth images taken directly by Astrobee and full-scene reconstructed maps built with RGB-D and pose data from Astrobee. The runtimes of the approach are also analyzed in depth. The source code is publicly released to promote further development.

Multi-Agent 3D Map Reconstruction and Change Detection in Microgravity with Free-Flying Robots

Nov 05, 2023

Abstract:Assistive free-flyer robots autonomously caring for future crewed outposts -- such as NASA's Astrobee robots on the International Space Station (ISS) -- must be able to detect day-to-day interior changes to track inventory, detect and diagnose faults, and monitor the outpost status. This work presents a framework for multi-agent cooperative mapping and change detection to enable robotic maintenance of space outposts. One agent is used to reconstruct a 3D model of the environment from sequences of images and corresponding depth information. Another agent is used to periodically scan the environment for inconsistencies against the 3D model. Change detection is validated after completing the surveys using real image and pose data collected by Astrobee robots in a ground testing environment and from microgravity aboard the ISS. This work outlines the objectives, requirements, and algorithmic modules for the multi-agent reconstruction system, including recommendations for its use by assistive free-flyers aboard future microgravity outposts.

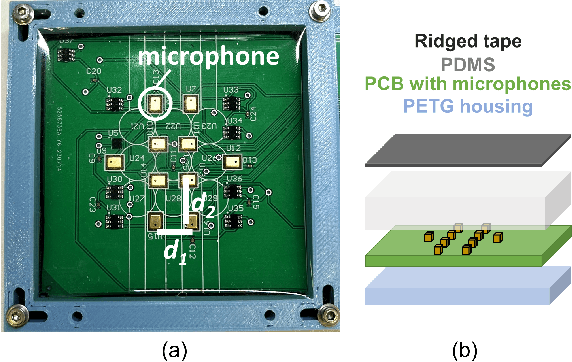

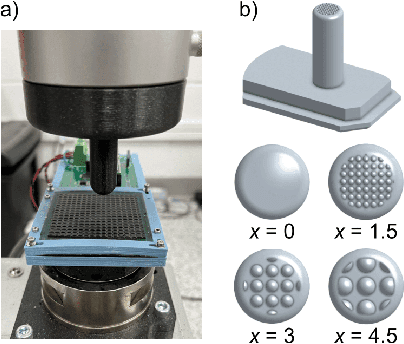

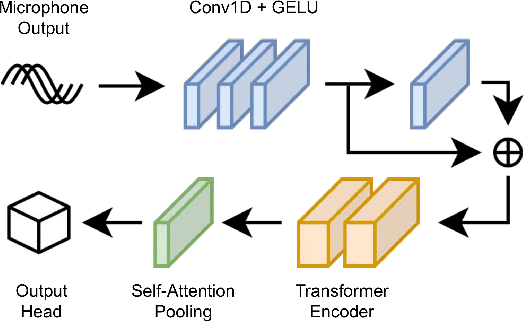

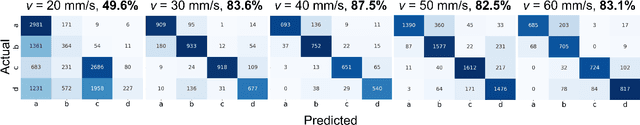

An Investigation of Multi-feature Extraction and Super-resolution with Fast Microphone Arrays

Sep 30, 2023

Abstract:In this work, we use MEMS microphones as vibration sensors to simultaneously classify texture and estimate contact position and velocity. Vibration sensors are an important facet of both human and robotic tactile sensing, providing fast detection of contact and onset of slip. Microphones are an attractive option for implementing vibration sensing as they offer a fast response and can be sampled quickly, are affordable, and occupy a very small footprint. Our prototype sensor uses only a sparse array of distributed MEMS microphones (8-9 mm spacing) embedded under an elastomer. We use transformer-based architectures for data analysis, taking advantage of the microphones' high sampling rate to run our models on time-series data as opposed to individual snapshots. This approach allows us to obtain 77.3% average accuracy on 4-class texture classification (84.2% when excluding the slowest drag velocity), 1.5 mm median error on contact localization, and 4.5 mm/s median error on contact velocity. We show that the learned texture and localization models are robust to varying velocity and generalize to unseen velocities. We also report that our sensor provides fast contact detection, an important advantage of fast transducers. This investigation illustrates the capabilities one can achieve with a MEMS microphone array alone, leaving valuable sensor real estate available for integration with complementary tactile sensing modalities.

LunarNav: Crater-based Localization for Long-range Autonomous Lunar Rover Navigation

Jan 03, 2023

Abstract:The Artemis program requires robotic and crewed lunar rovers for resource prospecting and exploitation, construction and maintenance of facilities, and human exploration. These rovers must support navigation for tens of kilometers (km) from base camps. A lunar science rover mission concept, Endurance-A, has been recommended by the new Decadal Survey as the highest priority medium-class mission of the Lunar Discovery and Exploration Program, and would be required to traverse approximately 2000 km in the South Pole-Aitken (SPA) Basin, with individual drives of several kilometers between stops for downlink. These rover mission scenarios require functionality that provides onboard, autonomous, global position knowledge (aka absolute localization). However, planetary rovers have no onboard global localization capability to date; they have only used relative localization, by integrating combinations of wheel odometry, visual odometry, and inertial measurements during each drive to track position relative to the start of each drive. In this work, we summarize recent developments from the LunarNav project, where we have developed algorithms and software to enable lunar rovers to estimate their global position and heading on the Moon with a goal performance of position error less than 5 meters (m) and heading error less than 3 degrees, 3-sigma, in sunlit areas. This will be achieved autonomously onboard by detecting craters in the vicinity of the rover and matching them to a database of known craters mapped from orbit. The overall technical framework consists of three main elements: 1) crater detection, 2) crater matching, and 3) state estimation. In previous work, we developed crater detection algorithms for three different sensing modalities. Our results suggest that rover localization with an error less than 5 m is highly probable during daytime operations.

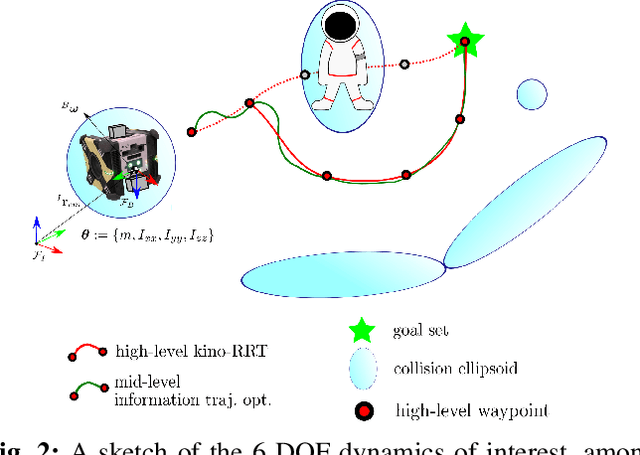

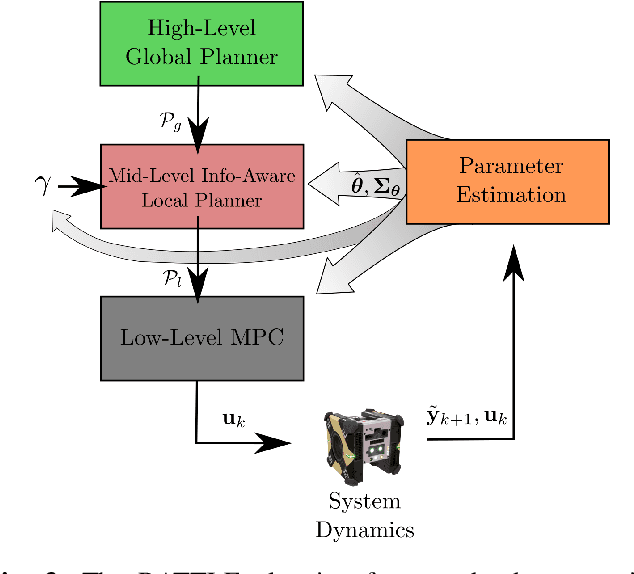

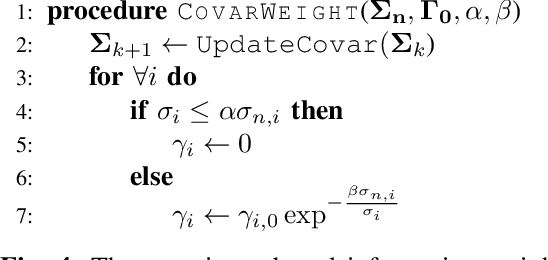

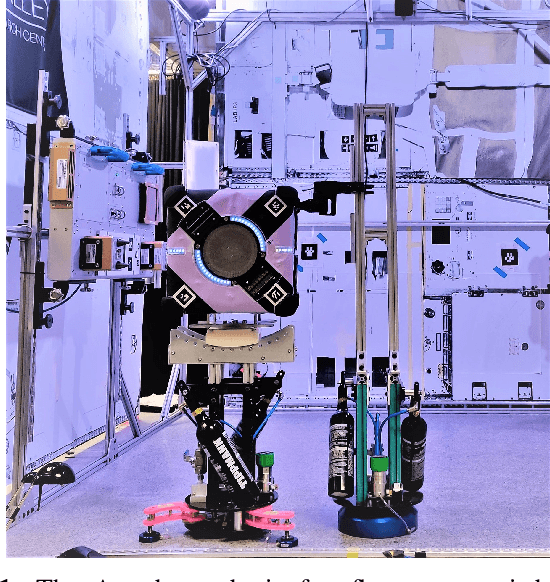

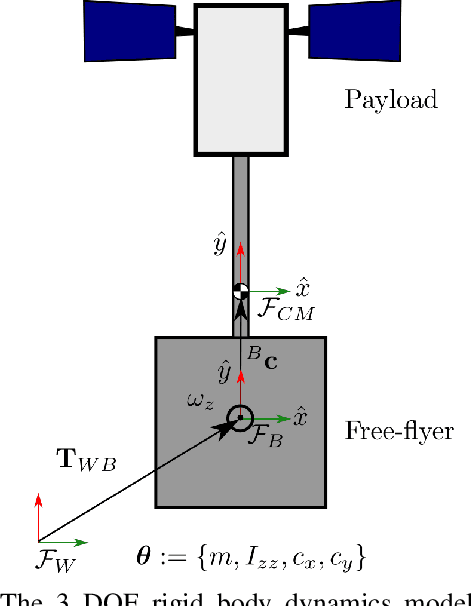

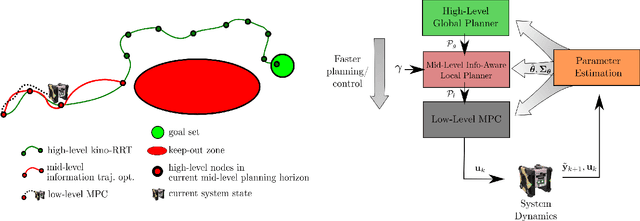

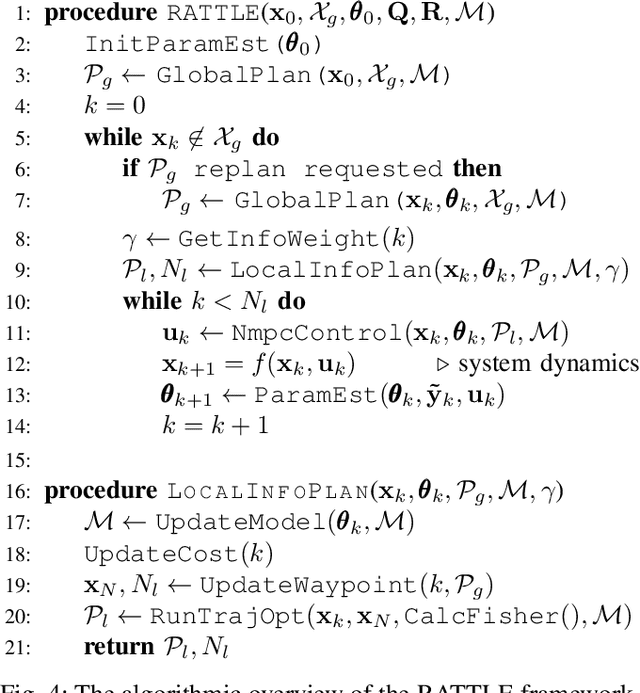

The RATTLE Motion Planning Algorithm for Robust Online Parametric Model Improvement with On-Orbit Validation

Mar 03, 2022

Abstract:Certain forms of uncertainty that robotic systems encounter can be explicitly learned within the context of a known model, like parametric model uncertainties such as mass and moments of inertia. Quantifying such parametric uncertainty is important for more accurate prediction of the system behavior, leading to safe and precise task execution. In tandem, providing a form of robustness guarantee against prevailing uncertainty levels like environmental disturbances and current model knowledge is also desirable. To that end, the authors' previously proposed RATTLE algorithm, a framework for online information-aware motion planning, is outlined and extended to enhance its applicability to real robotic systems. RATTLE provides a clear tradeoff between information-seeking motion and traditional goal-achieving motion and features online-updateable models. Additionally, online-updateable low-level control robustness guarantees and a new method for automatic adjustment of information content down to a specified estimation precision are proposed. Results of extensive experimentation in microgravity using the Astrobee robots aboard the International Space Station and practical implementation details are presented, demonstrating RATTLE's capabilities for real-time, robust, online-updateable, and model information-seeking motion planning under parametric uncertainty.

Online Information-Aware Motion Planning with Inertial Parameter Learning for Robotic Free-Flyers

Dec 11, 2021

Abstract:Space free-flyers like the Astrobee robots currently operating aboard the International Space Station must operate with inherent system uncertainties. Parametric uncertainties like mass and moment of inertia are especially important to quantify in these safety-critical space systems and can change in scenarios such as on-orbit cargo movement, where unknown grappled payloads significantly change the system dynamics. Cautiously learning these uncertainties en route can potentially avoid time- and fuel-consuming pure system identification maneuvers. Recognizing this, this work proposes RATTLE, an online information-aware motion planning algorithm that explicitly weights parametric model-learning coupled with real-time replanning capability that can take advantage of improved system models. The method consists of a two-tiered (global and local) planner, a low-level model predictive controller, and an online parameter estimator that produces estimates of the robot's inertial properties for more informed control and replanning on-the-fly; all levels of the planning and control feature online update-able models. Simulation results of RATTLE for the Astrobee free-flyer grappling an uncertain payload are presented alongside results of a hardware demonstration showcasing the ability to explicitly encourage model parametric learning while achieving otherwise useful motion.
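
As a rough illustration of what an online inertial parameter estimator can look like, the sketch below applies scalar recursive least squares to the model force = mass × acceleration. The abstract does not specify which estimator RATTLE uses, so the technique shown, the function name `rls_mass_update`, and all numeric values are assumptions.

```python
def rls_mass_update(mass_est, cov, accel, force, meas_var=1e-4):
    """One scalar recursive-least-squares update for the model
    force = accel * mass + noise (illustrative, not the RATTLE estimator)."""
    gain = cov * accel / (accel * accel * cov + meas_var)
    mass_est = mass_est + gain * (force - accel * mass_est)
    cov = (1.0 - gain * accel) * cov
    return mass_est, cov


# Hypothetical scenario: the robot grapples a payload and the true total mass
# becomes 12.0 kg, while the estimator starts from a prior of 9.0 kg.
true_mass = 12.0
mass_est, cov = 9.0, 100.0
for accel in [0.1, 0.2, -0.15, 0.3]:      # commanded accelerations in m/s^2
    force = true_mass * accel             # noise-free measurement for the demo
    mass_est, cov = rls_mass_update(mass_est, cov, accel, force)
```

Larger accelerations make the mass more observable and shrink the covariance faster. Weighting that kind of excitation against progress toward the goal is the tradeoff an information-aware planner has to make.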
