Richard Linares

Autonomous Reasoning for Spacecraft Control: A Large Language Model Framework with Group Relative Policy Optimization

Jan 07, 2026

Abstract: This paper presents a learning-based guidance-and-control approach that couples a reasoning-enabled Large Language Model (LLM) with Group Relative Policy Optimization (GRPO). A two-stage procedure consisting of Supervised Fine-Tuning (SFT) to learn formatting and control primitives, followed by GRPO for interaction-driven policy improvement, trains controllers for each environment. The framework is demonstrated on four control problems spanning a gradient of dynamical complexity, from canonical linear systems through nonlinear oscillatory dynamics to three-dimensional spacecraft attitude control with gyroscopic coupling and thrust constraints. Results demonstrate that an LLM with explicit reasoning, optimized via GRPO, can synthesize feasible stabilizing policies under consistent training settings across both linear and nonlinear systems. The two-stage training methodology enables models to generate control sequences while providing human-readable explanations of their decision-making process. This work establishes a foundation for applying GRPO-based reasoning to autonomous control systems, with potential applications in aerospace and other safety-critical domains.
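The group-relative idea behind GRPO can be illustrated with a minimal sketch: several candidate outputs are sampled for the same input, and each one's reward is normalized against the mean and standard deviation of its own group. The reward values and the function name below are hypothetical, and the use of the population standard deviation is an assumption; the abstract does not describe the paper's reward design or normalization details.

```python
from statistics import mean, pstdev

def group_relative_advantages(rewards, eps=1e-8):
    """Normalize each reward against its own sampling group (GRPO-style)."""
    mu = mean(rewards)
    sigma = pstdev(rewards)  # population std over the group (an assumption)
    return [(r - mu) / (sigma + eps) for r in rewards]

# Hypothetical rewards for four control sequences sampled for one state.
rewards = [-4.0, -1.0, -2.5, -0.5]
advantages = group_relative_advantages(rewards)
```

Samples that score above the group mean receive a positive advantage and are reinforced; no separate value network is needed to form the baseline.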

Tiny Recursive Control: Iterative Reasoning for Efficient Optimal Control

Dec 18, 2025

Abstract: Neural network controllers increasingly demand millions of parameters, and language model approaches push into the billions. For embedded aerospace systems with strict power and latency constraints, this scaling is prohibitive. We present Tiny Recursive Control (TRC), a neural architecture based on a counterintuitive principle: capacity can emerge from iteration depth rather than parameter count. TRC applies compact networks (approximately 1.5M parameters) repeatedly through a two-level hierarchical latent structure, refining control sequences by simulating trajectories and correcting based on tracking error. Because the same weights process every refinement step, adding iterations increases computation without increasing memory. We evaluate TRC on nonlinear control problems including oscillator stabilization and powered descent with fuel constraints. Across these domains, TRC achieves near-optimal control costs while requiring only millisecond-scale inference on GPU and under 10 MB memory, two orders of magnitude smaller than language model baselines. These results demonstrate that recursive reasoning, previously confined to discrete tasks, transfers effectively to continuous control synthesis.
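The simulate-then-correct loop described above can be sketched on a toy problem. This is not the TRC architecture: the learned compact network is replaced here by a fixed gradient-style correction on a double integrator, and all names, step sizes, and dimensions are assumptions chosen for illustration. What the sketch does show is that the same update rule, with the same parameters, is reused on every pass, so extra iterations add computation but no memory.

```python
def simulate(u, dt=0.1, x0=1.0, v0=0.0):
    """Roll out a double integrator; return terminal (position, velocity)."""
    x, v = x0, v0
    for uk in u:
        x, v = x + dt * v, v + dt * uk
    return x, v

def terminal_sensitivity(n, dt=0.1):
    """Exact d(terminal state)/d(u_k) for the linear rollout above."""
    dx = [dt * dt * (n - 1 - k) for k in range(n)]
    dv = [dt] * n
    return dx, dv

def refine(u, step=2.0, dt=0.1):
    """One refinement pass: simulate, measure error to the origin, correct u."""
    ex, ev = simulate(u, dt)  # target is (0, 0), so the error is the terminal state
    dx, dv = terminal_sensitivity(len(u), dt)
    return [uk - step * (dx[k] * ex + dv[k] * ev) for k, uk in enumerate(u)]

def error_norm(u):
    ex, ev = simulate(u)
    return (ex * ex + ev * ev) ** 0.5

u = [0.0] * 20
initial_error = error_norm(u)
for _ in range(100):  # same rule, same parameters, every pass
    u = refine(u)
final_error = error_norm(u)
```

The control sequence `u` is the only state that changes between passes, which is the sense in which depth comes from iteration rather than from additional parameters.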

Multi-Phase Spacecraft Trajectory Optimization via Transformer-Based Reinforcement Learning

Nov 14, 2025

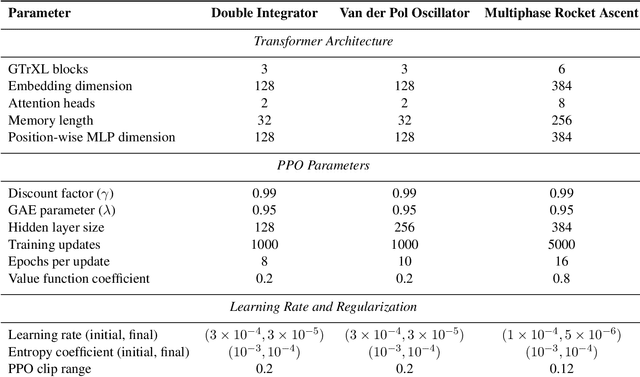

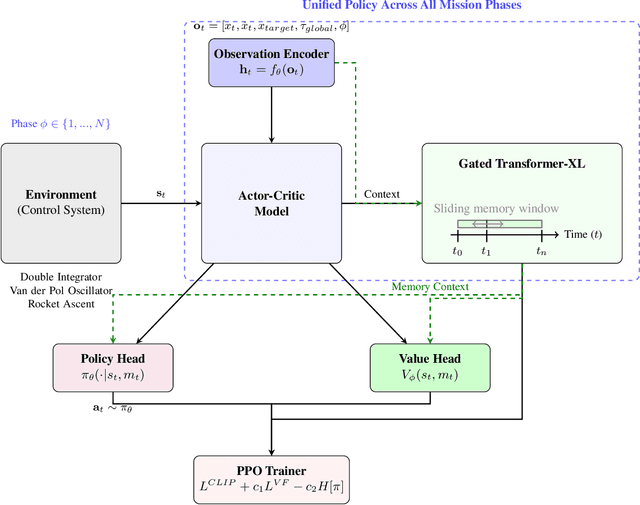

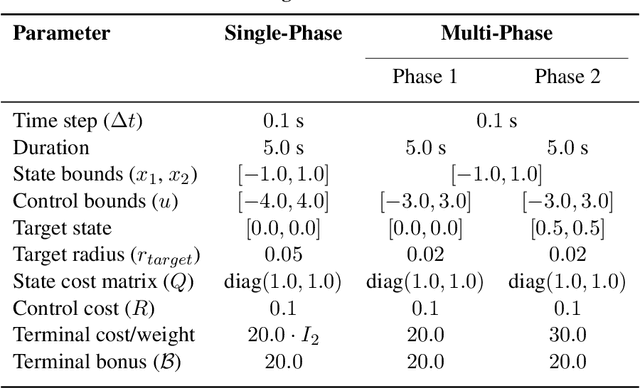

Abstract: Autonomous spacecraft control for mission phases such as launch, ascent, stage separation, and orbit insertion remains a critical challenge due to the need for adaptive policies that generalize across dynamically distinct regimes. While reinforcement learning (RL) has shown promise in individual astrodynamics tasks, existing approaches often require separate policies for distinct mission phases, limiting adaptability and increasing operational complexity. This work introduces a transformer-based RL framework that unifies multi-phase trajectory optimization through a single policy architecture, leveraging the transformer's inherent capacity to model extended temporal contexts. Building on proximal policy optimization (PPO), our framework replaces conventional recurrent networks with a transformer encoder-decoder structure, enabling the agent to maintain coherent memory across mission phases spanning seconds to minutes during critical operations. By integrating a Gated Transformer-XL (GTrXL) architecture, the framework eliminates manual phase transitions while maintaining stability in control decisions. We validate our approach progressively: first demonstrating near-optimal performance on single-phase benchmarks (double integrator and Van der Pol oscillator), then extending to multiphase waypoint navigation variants, and finally tackling a complex multiphase rocket ascent problem that includes atmospheric flight, stage separation, and vacuum operations. Results demonstrate that the transformer-based framework not only matches analytical solutions in simple cases but also effectively learns coherent control policies across dynamically distinct regimes, establishing a foundation for scalable autonomous mission planning that reduces reliance on phase-specific controllers while maintaining compatibility with safety-critical verification protocols.

Action Chunking with Transformers for Image-Based Spacecraft Guidance and Control

Sep 04, 2025

Abstract: We present an imitation learning approach for spacecraft guidance, navigation, and control (GNC) that achieves high performance from limited data. Using only 100 expert demonstrations, equivalent to 6,300 environment interactions, our method, which implements Action Chunking with Transformers (ACT), learns a control policy that maps visual and state observations to thrust and torque commands. ACT generates smoother, more consistent trajectories than a meta-reinforcement learning (meta-RL) baseline trained with 40 million interactions. We evaluate ACT on a rendezvous task: in-orbit docking with the International Space Station (ISS). We show that our approach achieves greater accuracy, smoother control, and greater sample efficiency.

Large Language Models as Autonomous Spacecraft Operators in Kerbal Space Program

May 26, 2025

Abstract: A growing trend is the use of Large Language Models (LLMs) as autonomous agents that take actions based on the content of user text prompts. We intend to apply these concepts to the field of control in space, enabling LLMs to play a significant role in the decision-making process for autonomous satellite operations. As a first step towards this goal, we have developed a pure LLM-based solution for the Kerbal Space Program Differential Games (KSPDG) challenge, a public software design competition where participants create autonomous agents for maneuvering satellites involved in non-cooperative space operations, running on the KSP game engine. Our approach leverages prompt engineering, few-shot prompting, and fine-tuning techniques to create an effective LLM-based agent that ranked 2nd in the competition. To the best of our knowledge, this work pioneers the integration of LLM agents into space research. The project comprises several open repositories to facilitate replication and further research. The codebase is accessible on GitHub (https://github.com/ARCLab-MIT/kspdg), while the trained models and datasets are available on Hugging Face (https://huggingface.co/OhhTuRnz). Additionally, experiment tracking and detailed results can be reviewed on Weights & Biases (https://wandb.ai/carrusk/huggingface).

Fine-Tuned Language Models as Space Systems Controllers

Jan 28, 2025

Abstract: Large language models (LLMs), or foundation models (FMs), are pretrained transformers that coherently complete sentences auto-regressively. In this paper, we show that LLMs can control simplified space systems after some additional training, called fine-tuning. We look at relatively small language models, ranging between 7 and 13 billion parameters. We focus on four problems: a three-dimensional spring toy problem, low-thrust orbit transfer, low-thrust cislunar control, and powered descent guidance. The fine-tuned LLMs are capable of controlling systems by generating sufficiently accurate outputs that are multi-dimensional vectors with up to 10 significant digits. We show that for several problems the amount of data required to perform fine-tuning is smaller than what is generally required of traditional deep neural networks (DNNs), and that fine-tuned LLMs are good at generalizing outside of the training dataset. Further, the same LLM can be fine-tuned with data from different problems, with only minor performance degradation with respect to LLMs trained for a single application. This work is intended as a first step towards the development of a general space systems controller.

Visual Language Models as Operator Agents in the Space Domain

Jan 14, 2025

Abstract: This paper explores the application of Vision-Language Models (VLMs) as operator agents in the space domain, focusing on both software and hardware operational paradigms. Building on advances in Large Language Models (LLMs) and their multimodal extensions, we investigate how VLMs can enhance autonomous control and decision-making in space missions. In the software context, we employ VLMs within the Kerbal Space Program Differential Games (KSPDG) simulation environment, enabling the agent to interpret visual screenshots of the graphical user interface to perform complex orbital maneuvers. In the hardware context, we integrate VLMs with robotic systems equipped with cameras to inspect and diagnose physical space objects, such as satellites. Our results demonstrate that VLMs can effectively process visual and textual data to generate contextually appropriate actions, competing with traditional methods and non-multimodal LLMs in simulation tasks, and showing promise in real-world applications.

Tight Constraint Prediction of Six-Degree-of-Freedom Transformer-based Powered Descent Guidance

Jan 01, 2025

Abstract: This work introduces Transformer-based Successive Convexification (T-SCvx), an extension of Transformer-based Powered Descent Guidance (T-PDG), generalizable for efficient six-degree-of-freedom (DoF) fuel-optimal powered descent trajectory generation. Our approach significantly enhances the sample efficiency and solution quality for nonconvex powered descent guidance by employing a rotation-invariant transformation of the sampled dataset. T-PDG was previously applied to the 3-DoF minimum-fuel powered descent guidance problem, improving solution times by up to an order of magnitude compared to lossless convexification (LCvx). By learning to predict the set of tight or active constraints at the optimal control problem's solution, T-SCvx creates the minimal reduced-size problem initialized with only the tight constraints, then uses the solution of this reduced problem to warm-start the direct optimization solver. 6-DoF powered descent guidance is known to be challenging to solve quickly and reliably due to the nonlinear and non-convex nature of the problem, the discretization scheme heavily influencing solution validity, and reference trajectory initialization determining algorithm convergence or divergence. Our contributions in this work address these challenges by extending T-PDG to learn the set of tight constraints for the successive convexification (SCvx) formulation of the 6-DoF powered descent guidance problem. In addition to reducing the problem size, feasible and locally optimal reference trajectories are also learned to facilitate convergence from the initial guess. T-SCvx enables onboard computation of real-time guidance trajectories, demonstrated by a 6-DoF Mars powered landing application problem.
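The reason a reduced problem built from only the tight constraints can stand in for the full one is easiest to see on a toy case. The sketch below uses a one-dimensional problem, minimizing (x - c)^2 subject to a list of upper bounds, which is an assumption made for illustration and has nothing to do with the 6-DoF formulation. In the paper the tight set is predicted by a transformer; here it is simply read off the known solution as a stand-in.

```python
def solve_bounded(c, upper_bounds):
    """Minimize (x - c)^2 subject to x <= b for every b in upper_bounds."""
    return min([c] + list(upper_bounds))

def tight_set(x, upper_bounds, tol=1e-9):
    """Indices of constraints that hold with equality at x."""
    return [i for i, b in enumerate(upper_bounds) if abs(b - x) <= tol]

# Hypothetical problem data: target c and three upper bounds.
c = 3.0
bounds = [5.0, 2.0, 4.0]

x_full = solve_bounded(c, bounds)                 # all constraints
active = tight_set(x_full, bounds)                # stand-in for the prediction
x_reduced = solve_bounded(c, [bounds[i] for i in active])  # tight ones only
```

Dropping the inactive bounds leaves the optimum unchanged, so a correct prediction of the tight set yields a smaller problem with the same solution.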

Diffusion Policies for Generative Modeling of Spacecraft Trajectories

Jan 01, 2025

Abstract: Machine learning has demonstrated remarkable promise for solving the trajectory generation problem and in paving the way for online use of trajectory optimization for resource-constrained spacecraft. However, a key shortcoming in current machine learning-based methods for trajectory generation is that they require large datasets, and even small changes to the original trajectory design requirements necessitate retraining new models to learn the parameter-to-solution mapping. In this work, we leverage compositional diffusion modeling to efficiently adapt to out-of-distribution data and problem variations in a few-shot framework for 6 degree-of-freedom (DoF) powered descent trajectory generation. Unlike traditional deep learning methods that can only learn the underlying structure of one specific trajectory optimization problem, diffusion models are a powerful generative modeling framework that represents the solution as a probability density function (PDF), which allows for the composition of PDFs encompassing a variety of trajectory design specifications and constraints. We demonstrate the capability of compositional diffusion models for inference-time 6 DoF minimum-fuel landing site selection and composable constraint representations. Using these samples as initial guesses for 6 DoF powered descent guidance enables dynamically feasible and computationally efficient trajectory generation.
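One common way to compose PDFs in generative modeling is to multiply them, which corresponds to adding their score functions (gradients of the log-density). The abstract does not state which composition operator the paper uses, so the product form below is an assumption, shown on one-dimensional Gaussians where the result is available in closed form. The function names are hypothetical.

```python
def compose_gaussians(mu1, var1, mu2, var2):
    """Mean and variance of the normalized product of two 1D Gaussians."""
    precision = 1.0 / var1 + 1.0 / var2
    var = 1.0 / precision
    mu = var * (mu1 / var1 + mu2 / var2)
    return mu, var

def gaussian_score(x, mu, var):
    """Gradient of the log-density of N(mu, var) evaluated at x."""
    return -(x - mu) / var

# Two hypothetical "specifications", each expressed as a density over x.
mu, var = compose_gaussians(0.0, 1.0, 2.0, 1.0)

# The score of the composed density equals the sum of the individual scores.
x = 0.3
summed = gaussian_score(x, 0.0, 1.0) + gaussian_score(x, 2.0, 1.0)
composed = gaussian_score(x, mu, var)
```

Because scores add under this operator, separately trained models can be combined at inference time without retraining.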

DreamSat: Towards a General 3D Model for Novel View Synthesis of Space Objects

Oct 07, 2024

Abstract: Novel view synthesis (NVS) makes it possible to generate new images of a scene or convert a set of 2D images into a comprehensive 3D model. In the context of Space Domain Awareness, since space is becoming increasingly congested, NVS can accurately map space objects and debris, improving the safety and efficiency of space operations. Similarly, in Rendezvous and Proximity Operations missions, 3D models can provide details about a target object's shape, size, and orientation, allowing for better planning and prediction of the target's behavior. In this work, we explore the generalization abilities of these reconstruction techniques, aiming to avoid the necessity of retraining for each new scene. We present DreamSat, a novel approach to 3D spacecraft reconstruction from single-view images, built by fine-tuning Zero123 XL, a state-of-the-art single-view reconstruction model, on a dataset of 190 high-quality spacecraft models and integrating it into the DreamGaussian framework. We demonstrate consistent improvements in reconstruction quality across multiple metrics, including Contrastive Language-Image Pretraining (CLIP) score (+0.33%), Peak Signal-to-Noise Ratio (PSNR) (+2.53%), Structural Similarity Index (SSIM) (+2.38%), and Learned Perceptual Image Patch Similarity (LPIPS) (+0.16%) on a test set of 30 previously unseen spacecraft images. Our method addresses the lack of domain-specific 3D reconstruction tools in the space industry by leveraging state-of-the-art diffusion models and 3D Gaussian splatting techniques. This approach maintains the efficiency of the DreamGaussian framework while enhancing the accuracy and detail of spacecraft reconstructions. The code for this work can be accessed on GitHub (https://github.com/ARCLab-MIT/space-nvs).
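Of the metrics listed above, PSNR has a simple closed form: 10·log10(MAX² / MSE), where MAX is the largest possible pixel value and MSE is the mean squared error between the two images. The sketch below computes it over flat pixel lists. The helper for relative change is an assumption about how a percentage gain might be derived; the abstract does not say whether its percentages are relative or absolute differences.

```python
import math

def psnr(reference, estimate, max_value=255.0):
    """Peak signal-to-noise ratio in dB between two equal-length pixel lists."""
    if len(reference) != len(estimate):
        raise ValueError("images must have the same number of pixels")
    mse = sum((a - b) ** 2 for a, b in zip(reference, estimate)) / len(reference)
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(max_value ** 2 / mse)

def relative_change_percent(baseline, new):
    """Percentage change of `new` relative to `baseline` (an assumed convention)."""
    return 100.0 * (new - baseline) / baseline

# Hypothetical 4-pixel grayscale images.
reference = [10, 200, 30, 120]
estimate = [12, 198, 33, 119]
score_db = psnr(reference, estimate)
```

Higher PSNR means the reconstruction is closer to the reference.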
