Victor Rodriguez-Fernandez

Can LLMs Do Rocket Science? Exploring the Limits of Complex Reasoning with GTOC 12

Feb 03, 2026

Abstract: Large Language Models (LLMs) have demonstrated remarkable proficiency in code generation and general reasoning, yet their capacity for autonomous multi-stage planning in high-dimensional, physically constrained environments remains an open research question. This study investigates the limits of current AI agents by evaluating them against the 12th Global Trajectory Optimization Competition (GTOC 12), a complex astrodynamics challenge requiring the design of a large-scale asteroid mining campaign. We adapt the MLE-Bench framework to the domain of orbital mechanics and deploy an AIDE-based agent architecture to autonomously generate and refine mission solutions. To assess performance beyond binary validity, we employ an "LLM-as-a-Judge" methodology, utilizing a rubric developed by domain experts to evaluate strategic viability across five structural categories. A comparative analysis of models ranging from GPT-4-Turbo to reasoning-enhanced architectures such as Gemini 2.5 Pro and o3 reveals a significant trend: the average strategic viability score has nearly doubled in the last two years (rising from 9.3 to 17.2 out of 26). However, we identify a critical capability gap between strategy and execution. While advanced models demonstrate sophisticated conceptual understanding, correctly framing objective functions and mission architectures, they consistently fail at implementation due to physical unit inconsistencies, boundary condition errors, and inefficient debugging loops. We conclude that, while current LLMs often demonstrate sufficient knowledge and intelligence to tackle space science tasks, they remain limited by an implementation barrier, functioning as powerful domain facilitators rather than fully autonomous engineers.

* Extended version of the paper presented at AIAA SciTech 2026 Forum. Includes further experiments, corrections, and a new appendix

Multi-Phase Spacecraft Trajectory Optimization via Transformer-Based Reinforcement Learning

Nov 14, 2025

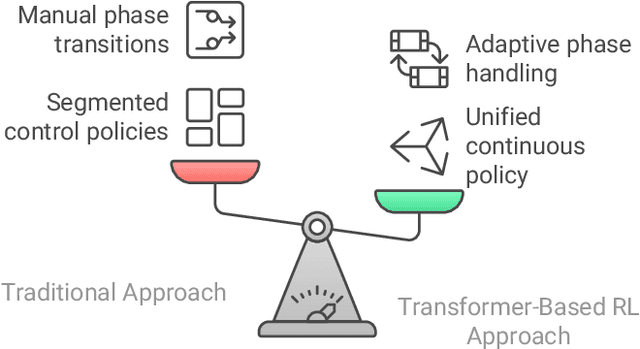

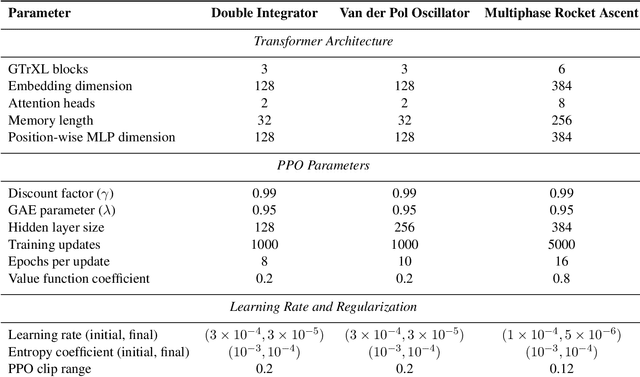

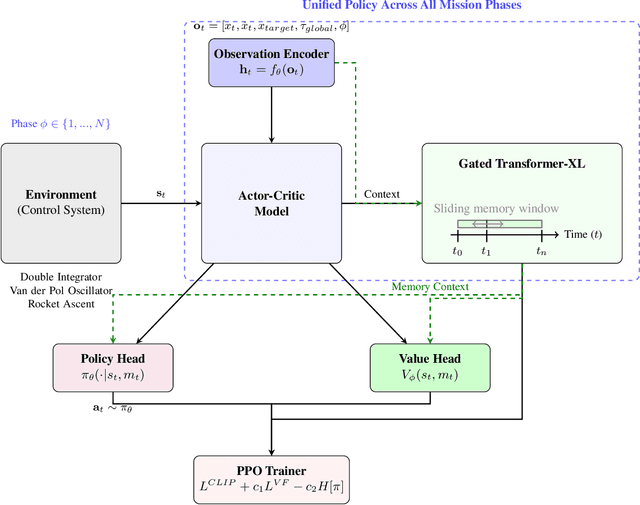

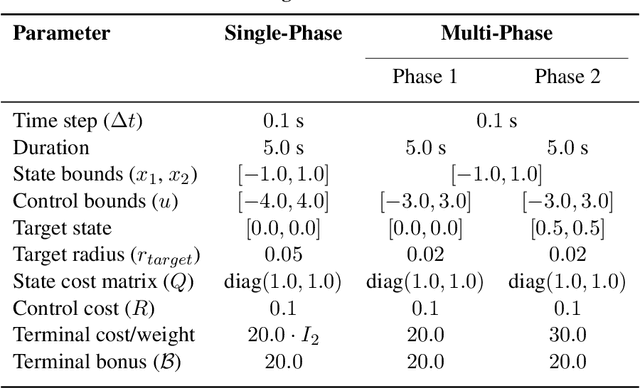

Abstract: Autonomous spacecraft control for mission phases such as launch, ascent, stage separation, and orbit insertion remains a critical challenge due to the need for adaptive policies that generalize across dynamically distinct regimes. While reinforcement learning (RL) has shown promise in individual astrodynamics tasks, existing approaches often require separate policies for distinct mission phases, limiting adaptability and increasing operational complexity. This work introduces a transformer-based RL framework that unifies multi-phase trajectory optimization through a single policy architecture, leveraging the transformer's inherent capacity to model extended temporal contexts. Building on proximal policy optimization (PPO), our framework replaces conventional recurrent networks with a transformer encoder-decoder structure, enabling the agent to maintain coherent memory across mission phases spanning seconds to minutes during critical operations. By integrating a Gated Transformer-XL (GTrXL) architecture, the framework eliminates manual phase transitions while maintaining stability in control decisions. We validate our approach progressively: first demonstrating near-optimal performance on single-phase benchmarks (double integrator and Van der Pol oscillator), then extending to multiphase waypoint navigation variants, and finally tackling a complex multiphase rocket ascent problem that includes atmospheric flight, stage separation, and vacuum operations. Results demonstrate that the transformer-based framework not only matches analytical solutions in simple cases but also effectively learns coherent control policies across dynamically distinct regimes, establishing a foundation for scalable autonomous mission planning that reduces reliance on phase-specific controllers while maintaining compatibility with safety-critical verification protocols.

Large Language Models as Autonomous Spacecraft Operators in Kerbal Space Program

May 26, 2025

Abstract: Recent trends are emerging in the use of Large Language Models (LLMs) as autonomous agents that take actions based on the content of the user text prompts. We intend to apply these concepts to the field of control in space, enabling LLMs to play a significant role in the decision-making process for autonomous satellite operations. As a first step towards this goal, we have developed a pure LLM-based solution for the Kerbal Space Program Differential Games (KSPDG) challenge, a public software design competition where participants create autonomous agents for maneuvering satellites involved in non-cooperative space operations, running on the KSP game engine. Our approach leverages prompt engineering, few-shot prompting, and fine-tuning techniques to create an effective LLM-based agent that ranked 2nd in the competition. To the best of our knowledge, this work pioneers the integration of LLM agents into space research. The project comprises several open repositories to facilitate replication and further research. The codebase is accessible on GitHub (https://github.com/ARCLab-MIT/kspdg), while the trained models and datasets are available on Hugging Face (https://huggingface.co/OhhTuRnz). Additionally, experiment tracking and detailed results can be reviewed on Weights & Biases (https://wandb.ai/carrusk/huggingface).

Decoding Latent Spaces: Assessing the Interpretability of Time Series Foundation Models for Visual Analytics

Apr 26, 2025

Abstract: The present study explores the interpretability of latent spaces produced by time series foundation models, focusing on their potential for visual analysis tasks. Specifically, we evaluate the MOMENT family of models, a set of transformer-based, pre-trained architectures for multivariate time series tasks such as imputation, prediction, classification, and anomaly detection. We evaluate the capacity of these models on five datasets to capture the underlying structures in time series data within their latent space projection and validate whether fine-tuning improves the clarity of the resulting embedding spaces. Notable performance improvements in terms of loss reduction were observed after fine-tuning. Visual analysis shows limited improvement in the interpretability of the embeddings, requiring further work. Results suggest that, although Time Series Foundation Models such as MOMENT are robust, their latent spaces may require additional methodological refinements to be adequately interpreted, such as alternative projection techniques, loss functions, or data preprocessing strategies. Despite the limitations of MOMENT, foundation models offer a substantial reduction in execution time and thus a significant advance for interactive visual analytics.

Fine-Tuned Language Models as Space Systems Controllers

Jan 28, 2025

Abstract: Large language models (LLMs), or foundation models (FMs), are pretrained transformers that coherently complete sentences auto-regressively. In this paper, we show that LLMs can control simplified space systems after some additional training, called fine-tuning. We look at relatively small language models, ranging between 7 and 13 billion parameters. We focus on four problems: a three-dimensional spring toy problem, low-thrust orbit transfer, low-thrust cislunar control, and powered descent guidance. The fine-tuned LLMs are capable of controlling systems by generating sufficiently accurate outputs that are multi-dimensional vectors with up to 10 significant digits. We show that for several problems the amount of data required to perform fine-tuning is smaller than what is generally required of traditional deep neural networks (DNNs), and that fine-tuned LLMs are good at generalizing outside of the training dataset. Further, the same LLM can be fine-tuned with data from different problems, with only minor performance degradation with respect to LLMs trained for a single application. This work is intended as a first step towards the development of a general space systems controller.

Visual Language Models as Operator Agents in the Space Domain

Jan 14, 2025

Abstract: This paper explores the application of Vision-Language Models (VLMs) as operator agents in the space domain, focusing on both software and hardware operational paradigms. Building on advances in Large Language Models (LLMs) and their multimodal extensions, we investigate how VLMs can enhance autonomous control and decision-making in space missions. In the software context, we employ VLMs within the Kerbal Space Program Differential Games (KSPDG) simulation environment, enabling the agent to interpret visual screenshots of the graphical user interface to perform complex orbital maneuvers. In the hardware context, we integrate VLMs with robotic systems equipped with cameras to inspect and diagnose physical space objects, such as satellites. Our results demonstrate that VLMs can effectively process visual and textual data to generate contextually appropriate actions, competing with traditional methods and non-multimodal LLMs in simulation tasks, and showing promise in real-world applications.

DreamSat: Towards a General 3D Model for Novel View Synthesis of Space Objects

Oct 07, 2024

Abstract: Novel view synthesis (NVS) enables the generation of new images of a scene or the conversion of a set of 2D images into a comprehensive 3D model. In the context of Space Domain Awareness, since space is becoming increasingly congested, NVS can accurately map space objects and debris, improving the safety and efficiency of space operations. Similarly, in Rendezvous and Proximity Operations missions, 3D models can provide details about a target object's shape, size, and orientation, allowing for better planning and prediction of the target's behavior. In this work, we explore the generalization abilities of these reconstruction techniques, aiming to avoid the need to retrain for each new scene. We present DreamSat, a novel approach to 3D spacecraft reconstruction from single-view images, which fine-tunes Zero123 XL, a state-of-the-art single-view reconstruction model, on a high-quality dataset of 190 spacecraft models and integrates it into the DreamGaussian framework. We demonstrate consistent improvements in reconstruction quality across multiple metrics, including Contrastive Language-Image Pretraining (CLIP) score (+0.33%), Peak Signal-to-Noise Ratio (PSNR) (+2.53%), Structural Similarity Index (SSIM) (+2.38%), and Learned Perceptual Image Patch Similarity (LPIPS) (+0.16%) on a test set of 30 previously unseen spacecraft images. Our method addresses the lack of domain-specific 3D reconstruction tools in the space industry by leveraging state-of-the-art diffusion models and 3D Gaussian splatting techniques. This approach maintains the efficiency of the DreamGaussian framework while enhancing the accuracy and detail of spacecraft reconstructions. The code for this work can be accessed on GitHub (https://github.com/ARCLab-MIT/space-nvs).
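
Of the metrics listed above, PSNR is the simplest to state. The following is a minimal sketch of the standard definition, 10·log10(MAX² / MSE), over flattened pixel lists. The function name `psnr` and the 8-bit default `max_value` are illustrative assumptions, not taken from the DreamSat code.

```python
import math


def psnr(reference, candidate, max_value=255.0):
    """Peak Signal-to-Noise Ratio in dB between two flattened images.

    `reference` and `candidate` are equal-length sequences of pixel values.
    `max_value` is the largest possible pixel value (assumed 8-bit here).
    """
    if len(reference) != len(candidate) or len(reference) == 0:
        raise ValueError("images must be non-empty and of equal length")
    # Mean squared error over all pixels.
    mse = sum((r - c) ** 2 for r, c in zip(reference, candidate)) / len(reference)
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(max_value ** 2 / mse)
```

A higher PSNR indicates a rendered view closer to the reference image.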

Fine-tuning LLMs for Autonomous Spacecraft Control: A Case Study Using Kerbal Space Program

Aug 16, 2024

Abstract: Recent trends are emerging in the use of Large Language Models (LLMs) as autonomous agents that take actions based on the content of the user text prompt. This study explores the use of fine-tuned LLMs for autonomous spacecraft control, using the Kerbal Space Program Differential Games suite (KSPDG) as a testing environment. Traditional Reinforcement Learning (RL) approaches face limitations in this domain due to insufficient simulation capabilities and data. By leveraging LLMs, specifically fine-tuning models like GPT-3.5 and LLaMA, we demonstrate how these models can effectively control spacecraft using language-based inputs and outputs. Our approach integrates real-time mission telemetry into textual prompts processed by the LLM, which then generates control actions via an agent. The results open a discussion about the potential of LLMs for space operations beyond their nominal use for text-related tasks. Future work aims to expand this methodology to other space control tasks and evaluate the performance of different LLM families. The code is available at this URL: https://github.com/ARCLab-MIT/kspdg.
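
The telemetry-to-prompt loop described above can be pictured with a small sketch. Everything here is hypothetical: the telemetry keys, the prompt wording, the reply format, and the function names `telemetry_to_prompt` and `parse_action` are assumptions for illustration and do not reproduce the actual KSPDG agent or its API.

```python
def telemetry_to_prompt(telemetry: dict) -> str:
    """Render a telemetry snapshot as a plain-text prompt (hypothetical format)."""
    lines = ["You are controlling a pursuer spacecraft. Current telemetry:"]
    # Sort keys so the prompt layout is stable from step to step.
    for key in sorted(telemetry):
        lines.append(f"- {key}: {telemetry[key]:.2f}")
    lines.append('Reply with throttle commands as "forward,right,down" in [-1, 1].')
    return "\n".join(lines)


def parse_action(reply: str) -> list[float]:
    """Parse a comma-separated model reply into three clamped throttle values."""
    values = [float(token) for token in reply.strip().split(",")]
    if len(values) != 3:
        raise ValueError("expected three throttle components")
    # Clamp each component to the assumed valid range [-1, 1].
    return [max(-1.0, min(1.0, v)) for v in values]
```

In such a loop, the prompt would be sent to the fine-tuned model at each step and the parsed reply forwarded to the simulator; clamping guards against out-of-range outputs.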

Exploring Scalability in Large-Scale Time Series in DeepVATS framework

Aug 08, 2024

Abstract: Visual analytics is essential for studying large time series due to its ability to reveal trends, anomalies, and insights. DeepVATS is a tool that merges Deep Learning (Deep) with Visual Analytics (VA) for the analysis of large time series data (TS). It has three interconnected modules. The Deep Learning module, developed in R, manages the loading of datasets and Deep Learning models from and to the Storage module. This module also supports model training and the acquisition of the embeddings from the latent space of the trained model. The Storage module operates using the Weights and Biases system. Subsequently, these embeddings can be analyzed in the Visual Analytics module. This module, based on an R Shiny application, allows the adjustment of the parameters related to the projection and clustering of the embedding space. Once these parameters are set, interactive plots representing both the embeddings and the time series are shown. This paper introduces the tool and examines its scalability through log analytics. The evolution of the execution time is examined as the length of the time series is varied. This is achieved by resampling a large data series into smaller subsets and logging the main execution and rendering times for later analysis of scalability.
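
The scalability procedure described above (resample, run, log the time) can be sketched in a few lines. This is an illustration only: it is written in Python rather than the tool's R, it assumes stride-based downsampling as the resampling method, and the names `downsample`, `time_stage`, and `scalability_log` are hypothetical.

```python
import time


def downsample(series, factor):
    """Keep every `factor`-th sample (an assumed resampling scheme)."""
    return series[::factor]


def time_stage(stage, data):
    """Run `stage(data)` and return (result, elapsed seconds)."""
    start = time.perf_counter()
    result = stage(data)
    return result, time.perf_counter() - start


def scalability_log(series, factors, stage):
    """Time `stage` on progressively smaller subsets and collect log entries."""
    log = []
    for factor in factors:
        subset = downsample(series, factor)
        _, elapsed = time_stage(stage, subset)
        log.append({"length": len(subset), "seconds": elapsed})
    return log
```

For example, `scalability_log(list(range(1_000_000)), [1, 10, 100], sum)` yields one entry per subset length, which can then be plotted as execution time against series length.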

Generative Design of Periodic Orbits in the Restricted Three-Body Problem

Aug 07, 2024

Abstract: The Three-Body Problem has fascinated scientists for centuries and has been crucial in the design of modern space missions. Recent developments in Generative Artificial Intelligence hold transformative promise for addressing this longstanding problem. This work investigates the use of a Variational Autoencoder (VAE) and its internal representation to generate periodic orbits. We utilize a comprehensive dataset of periodic orbits in the Circular Restricted Three-Body Problem (CR3BP) to train deep-learning architectures that capture key orbital characteristics, and we set up physical evaluation metrics for the generated trajectories. Through this investigation, we seek to enhance the understanding of how Generative AI can improve space mission planning and astrodynamics research, leading to novel, data-driven approaches in the field.
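
The abstract does not say which physical evaluation metrics are used. One plausible candidate, offered here purely as an assumption, is the drift of the Jacobi constant along a generated trajectory, since this quantity is conserved along any true CR3BP solution. The sketch uses the standard nondimensional rotating frame with the primaries at (-mu, 0, 0) and (1 - mu, 0, 0); the function names are illustrative.

```python
import math


def jacobi_constant(state, mu):
    """Jacobi constant of a CR3BP state (x, y, z, vx, vy, vz) in the rotating frame."""
    x, y, z, vx, vy, vz = state
    r1 = math.sqrt((x + mu) ** 2 + y ** 2 + z ** 2)      # distance to larger primary
    r2 = math.sqrt((x - 1 + mu) ** 2 + y ** 2 + z ** 2)  # distance to smaller primary
    speed_sq = vx * vx + vy * vy + vz * vz
    return x * x + y * y + 2 * (1 - mu) / r1 + 2 * mu / r2 - speed_sq


def jacobi_drift(trajectory, mu):
    """Spread of the Jacobi constant over a trajectory (0 for an exact solution)."""
    values = [jacobi_constant(state, mu) for state in trajectory]
    return max(values) - min(values)
```

A generated orbit with a small `jacobi_drift` is at least energetically consistent with the CR3BP dynamics; a large drift flags a physically implausible sample.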
