Rodrigo Ventura

Conditional Denoising Model as a Physical Surrogate Model

Jan 28, 2026

Abstract: Surrogate modeling for complex physical systems faces a trade-off between data-fitting accuracy and physical consistency. Physics-consistent approaches typically either treat physical laws as soft constraints within the loss function, a strategy that often fails to guarantee strict adherence to the governing equations, or rely on post-processing corrections that do not intrinsically learn the underlying solution geometry. To address these limitations, we introduce the Conditional Denoising Model (CDM), a generative model designed to learn the geometry of the physical manifold itself. By training the network to restore clean states from noisy ones, the model learns a vector field that points continuously toward the valid solution subspace. We introduce a time-independent formulation that turns inference into a deterministic fixed-point iteration, effectively projecting noisy approximations onto the equilibrium manifold. Validated on a low-temperature plasma physics and chemistry benchmark, the CDM achieves higher parameter and data efficiency than physics-consistent baselines. Crucially, we demonstrate that the denoising objective acts as a powerful implicit regularizer: despite never seeing the governing equations during training, the model adheres to physical constraints more strictly than baselines trained with explicit physics losses.
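
The fixed-point inference step can be illustrated with a toy example. This is not the CDM: `toy_denoiser` is a hypothetical, hand-written stand-in for the trained time-independent network, and the unit circle is an assumed stand-in for the equilibrium manifold. Each denoiser call moves a state part of the way toward the manifold, so iterating x_{k+1} = D(x_k) until the update vanishes acts as a projection.

```python
import math

def toy_denoiser(x, y, step=0.5):
    """Hypothetical stand-in for a trained time-independent denoiser.

    The assumed 'valid solution subspace' is the unit circle; the map moves a
    state a fraction `step` of the way toward its radial projection.
    """
    r = math.hypot(x, y)
    if r == 0.0:
        return 1.0, 0.0  # arbitrary manifold point for the degenerate origin
    px, py = x / r, y / r  # closest point on the unit circle
    return x + step * (px - x), y + step * (py - y)

def fixed_point_project(x, y, denoiser=toy_denoiser, tol=1e-10, max_iter=200):
    """Iterate x_{k+1} = D(x_k) until the update norm drops below `tol`."""
    for _ in range(max_iter):
        nx, ny = denoiser(x, y)
        if math.hypot(nx - x, ny - y) < tol:
            return nx, ny
        x, y = nx, ny
    return x, y

# A "noisy approximation" off the manifold is pulled onto it.
px, py = fixed_point_project(2.0, 1.0)
print(round(math.hypot(px, py), 6))  # → 1.0
```

Because the toy map halves the distance to the circle on every call, the iteration converges geometrically and keeps the input's direction; a learned denoiser carries no such guarantee, which is why the sketch keeps both a tolerance and an iteration cap.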

FLORA: Efficient Synthetic Data Generation for Object Detection in Low-Data Regimes via finetuning Flux LoRA

Aug 29, 2025

Abstract: Recent advances in diffusion-based generative models have demonstrated significant potential for augmenting scarce datasets for object detection tasks. Nevertheless, most recent models rely on resource-intensive full fine-tuning of large-scale diffusion models, requiring enterprise-grade GPUs (e.g., NVIDIA V100) and thousands of synthetic images. To address these limitations, we propose Flux LoRA Augmentation (FLORA), a lightweight synthetic data generation pipeline. Our approach uses the Flux 1.1 Dev diffusion model, fine-tuned exclusively through Low-Rank Adaptation (LoRA). This dramatically reduces computational requirements, enabling synthetic dataset generation on a consumer-grade GPU (e.g., NVIDIA RTX 4090). We empirically evaluate our approach on seven diverse object detection datasets. Our results show that training object detectors with just 500 synthetic images generated by our approach yields better detection performance than training on 5000 synthetic images from the ODGEN baseline, with improvements of up to 21.3% in mAP@.50:.95. FLORA thus surpasses state-of-the-art performance with far greater efficiency, achieving superior results with only 10% of the data and a fraction of the computational cost. This indicates that a quality- and efficiency-focused approach is more effective than brute-force generation, making advanced synthetic data creation more practical and accessible for real-world scenarios.
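
For readers unfamiliar with LoRA, the core idea is that the pretrained weight W (d x k) stays frozen and only a low-rank update (alpha / r) * B A is trained, with B of shape d x r and A of shape r x k. The plain-Python sketch below shows that forward pass and the resulting trainable-parameter count; the 4096 x 4096 layer and rank 16 are hypothetical sizes for illustration, not FLORA's configuration.

```python
def matvec(m, v):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(mij * vj for mij, vj in zip(row, v)) for row in m]

def lora_forward(w, a, b, x, alpha, r):
    """Compute h = W x + (alpha / r) * B (A x).

    w: frozen d x k pretrained weight; a: trainable r x k; b: trainable d x r.
    The full d x k update B A is never formed explicitly.
    """
    base = matvec(w, x)
    update = matvec(b, matvec(a, x))
    scale = alpha / r
    return [h0 + scale * h1 for h0, h1 in zip(base, update)]

def trainable_params(d, k, r):
    """Trainable parameter counts: full fine-tuning of W vs. LoRA factors A and B."""
    return d * k, r * (d + k)

# Tiny example: identity base weight with a rank-1 adapter.
w = [[1.0, 0.0], [0.0, 1.0]]
a = [[1.0, 1.0]]    # r x k = 1 x 2
b = [[1.0], [0.0]]  # d x r = 2 x 1
print(lora_forward(w, a, b, [2.0, 3.0], alpha=2.0, r=1))  # → [12.0, 3.0]
print(trainable_params(4096, 4096, 16))                   # → (16777216, 131072)
```

In the original LoRA formulation B is initialised to zero, so the adapted layer starts out identical to the pretrained one, and only A and B need gradients and optimizer state.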

CAD2DMD-SET: Synthetic Generation Tool of Digital Measurement Device CAD Model Datasets for fine-tuning Large Vision-Language Models

Aug 29, 2025

Abstract: Recent advancements in Large Vision-Language Models (LVLMs) have demonstrated impressive capabilities across various multimodal tasks. However, they still struggle with seemingly trivial tasks such as reading values from Digital Measurement Devices (DMDs), particularly in real-world conditions involving clutter, occlusions, extreme viewpoints, and motion blur, which are common in head-mounted cameras and Augmented Reality (AR) applications. Motivated by these limitations, this work introduces CAD2DMD-SET, a synthetic data generation tool designed to support visual question answering (VQA) tasks involving DMDs. By leveraging 3D CAD models, advanced rendering, and high-fidelity image composition, our tool produces diverse, VQA-labelled synthetic DMD datasets suitable for fine-tuning LVLMs. Additionally, we present DMDBench, a curated validation set of 1,000 annotated real-world images designed to evaluate model performance under practical constraints. We benchmarked three state-of-the-art LVLMs using Average Normalised Levenshtein Similarity (ANLS) and then fine-tuned LoRA adapters of these models on the dataset generated by CAD2DMD-SET, obtaining substantial improvements: InternVL showed a 200% score increase without degrading on other tasks. This demonstrates that the CAD2DMD-SET training dataset substantially improves the robustness and performance of LVLMs under the challenging conditions described above. The CAD2DMD-SET tool is expected to be released as open source once the final version of this manuscript is prepared, allowing the community to add different measurement devices and generate their own datasets.
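
ANLS scores each answer by its normalised edit distance to the closest ground-truth answer and zeroes out matches that are too far off. The sketch below follows the common ST-VQA/DocVQA convention with threshold tau = 0.5 and lowercased, whitespace-stripped strings; whether DMDBench uses exactly this threshold and normalisation is an assumption.

```python
def levenshtein(s, t):
    """Edit distance with unit-cost insertions, deletions and substitutions."""
    prev = list(range(len(t) + 1))
    for i, cs in enumerate(s, start=1):
        cur = [i]
        for j, ct in enumerate(t, start=1):
            cur.append(min(prev[j] + 1,                # delete cs
                           cur[j - 1] + 1,             # insert ct
                           prev[j - 1] + (cs != ct)))  # substitute (free if equal)
        prev = cur
    return prev[-1]

def anls(predictions, ground_truths, tau=0.5):
    """Average Normalised Levenshtein Similarity.

    predictions: one answer string per question.
    ground_truths: a list of acceptable answer strings per question.
    A similarity is zeroed when the normalised distance reaches tau.
    """
    total = 0.0
    for pred, answers in zip(predictions, ground_truths):
        p = pred.strip().lower()
        best = 0.0
        for ans in answers:
            g = ans.strip().lower()
            denom = max(len(p), len(g))
            nl = levenshtein(p, g) / denom if denom else 0.0
            best = max(best, 1.0 - nl if nl < tau else 0.0)
        total += best
    return total / len(predictions) if predictions else 0.0

# One exact reading and one reading with a single wrong digit.
print(anls(["12.5 V", "0.03"], [["12.5 V"], ["0.08"]]))  # → 0.875
```

The threshold keeps a completely wrong reading from earning partial credit just because it shares a few characters with the correct one.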

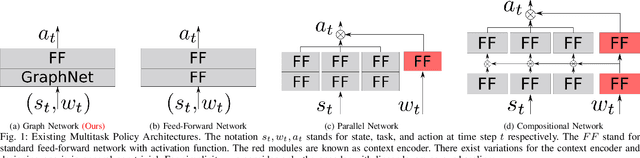

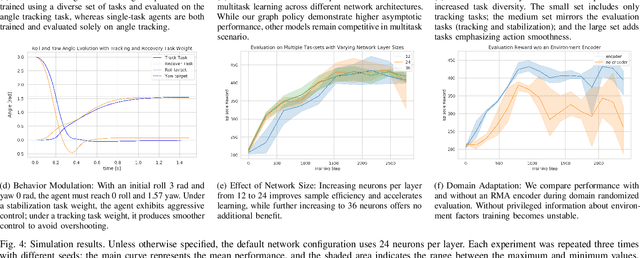

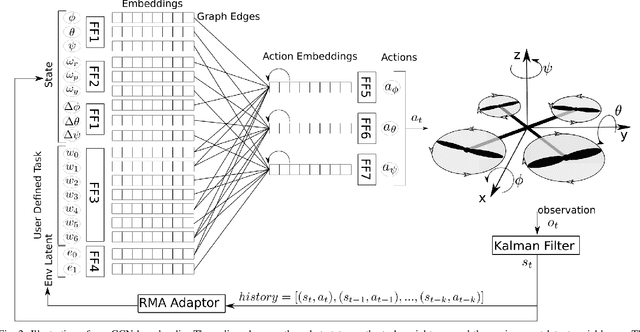

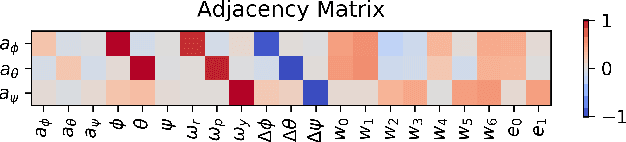

Multitask Reinforcement Learning for Quadcopter Attitude Stabilization and Tracking using Graph Policy

Mar 11, 2025

Abstract: Quadcopter attitude control involves two tasks: smooth attitude tracking and aggressive stabilization from arbitrary states. Although both can be formulated as tracking problems, their distinct state spaces and control strategies complicate the design of a unified reward function. We propose a multitask deep reinforcement learning framework that leverages parallel simulation with IsaacGym and a Graph Convolutional Network (GCN) policy to address both tasks effectively. Our multitask Soft Actor-Critic (SAC) approach achieves faster, more reliable learning and higher sample efficiency than single-task methods. We validate its real-world applicability by deploying the learned policy (a compact two-layer network with 24 neurons per layer) on a Pixhawk flight controller, achieving 400 Hz control without extra computational resources. We provide our code at https://github.com/robot-perception-group/GraphMTSAC_UAV/.
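
The abstract does not say how the quadcopter state is arranged as a graph, so the sketch below only shows the standard GCN propagation rule, H' = ReLU(D^{-1/2} (A + I) D^{-1/2} H W), on a made-up three-node graph with arbitrary weights. It is not the paper's policy architecture, and the SAC training loop is not shown.

```python
import math

def normalized_adjacency(adj):
    """Return D^{-1/2} (A + I) D^{-1/2}, the symmetric-normalised propagation matrix."""
    n = len(adj)
    a_hat = [[adj[i][j] + (1.0 if i == j else 0.0) for j in range(n)] for i in range(n)]
    deg = [sum(row) for row in a_hat]
    return [[a_hat[i][j] / math.sqrt(deg[i] * deg[j]) for j in range(n)] for i in range(n)]

def gcn_layer(a_norm, h, w):
    """One graph convolution, ReLU(A_norm H W).

    a_norm: n x n propagation matrix, h: n x f_in node features, w: f_in x f_out weights.
    """
    n, f_in, f_out = len(h), len(w), len(w[0])
    hw = [[sum(h[i][t] * w[t][j] for t in range(f_in)) for j in range(f_out)] for i in range(n)]
    mixed = [[sum(a_norm[i][k] * hw[k][j] for k in range(n)) for j in range(f_out)]
             for i in range(n)]
    return [[max(0.0, v) for v in row] for row in mixed]

# Hypothetical 3-node chain graph with 2 features per node (values are arbitrary).
adj = [[0, 1, 0], [1, 0, 1], [0, 1, 0]]
h = [[0.2, -0.1], [0.0, 0.3], [-0.4, 0.5]]
w = [[1.0, -0.5], [0.5, 1.0]]
print(gcn_layer(normalized_adjacency(adj), h, w))
```

Each node's output mixes its own features with its neighbours', weighted by degree, which is what lets a single small network share structure across related inputs.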

AI-Powered Augmented Reality for Satellite Assembly, Integration and Test

Sep 26, 2024

Abstract: The integration of Artificial Intelligence (AI) and Augmented Reality (AR) is set to transform satellite Assembly, Integration, and Testing (AIT) processes by enhancing precision, minimizing human error, and improving operational efficiency in cleanroom environments. This paper presents a technical description of the European Space Agency's (ESA) project "AI for AR in Satellite AIT," which combines real-time computer vision and AR systems to assist technicians during satellite assembly. Using Microsoft HoloLens 2 as the AR interface, the system delivers context-aware instructions and real-time feedback, tackling the complexities of object recognition and 6D pose estimation in AIT workflows. All AI models achieved over 70% accuracy, and the detection model exceeded 95% accuracy, indicating a high level of performance and reliability. A key contribution of this work is the effective use of synthetic data for training AI models in AR applications, which addresses the significant challenge of obtaining real-world datasets in highly dynamic satellite environments. Another is the creation of the Segmented Anything Model for Automatic Labelling (SAMAL), which annotates real data automatically up to 20 times faster than manual human annotation. The findings demonstrate the efficacy of AI-driven AR systems in automating critical satellite assembly tasks, laying a foundation for future innovations in the space industry.

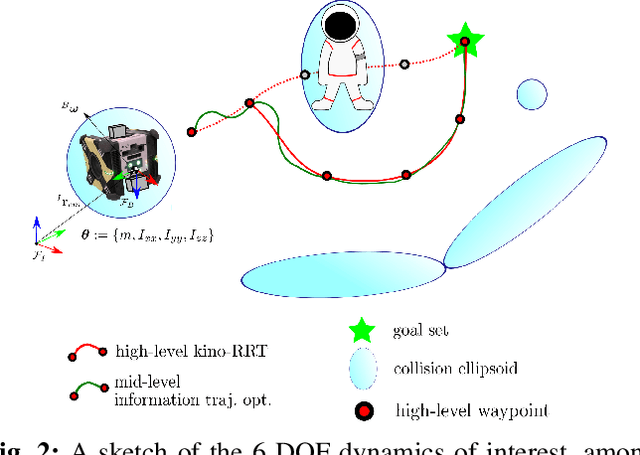

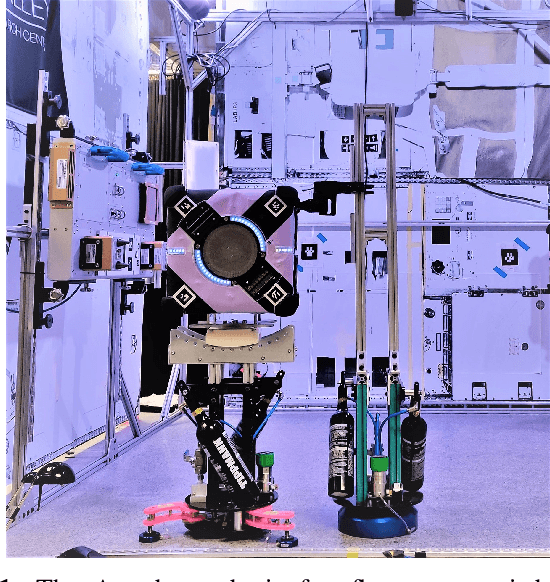

The ReSWARM Microgravity Flight Experiments: Planning, Control, and Model Estimation for On-Orbit Close Proximity Operations

Jan 03, 2023

Abstract: On-orbit close proximity operations involve robotic spacecraft maneuvering and making decisions in a growing number of mission scenarios that demand autonomy, including on-orbit assembly, repair, and astronaut assistance. Of these scenarios, on-orbit assembly is an enabling technology that will allow large space structures to be built in situ from smaller building-block modules. However, robotic on-orbit assembly involves a number of technical hurdles, such as changing system models. For instance, grappled modules moved by a free-flying "assembler" robot can cause significant shifts in the system's inertial properties, with cascading impacts on the motion planning and control portions of the autonomy stack. Further, on-orbit assembly and other scenarios require collision-avoiding motion planning, particularly when operating in a "construction site" scenario with multiple assembler robots and structures. These complicating factors, relevant to many autonomous microgravity robotics use cases, are tackled in the ReSWARM flight experiments, a set of tests on the International Space Station using NASA's Astrobee robots. RElative Satellite sWarming and Robotic Maneuvering, or ReSWARM, demonstrates multiple key technologies for close proximity operations and on-orbit assembly: (1) global long-horizon planning, accomplished using offline and online sampling-based planner options that consider the system dynamics; (2) on-orbit reconfiguration model learning, using the recently proposed RATTLE information-aware planning framework; and (3) robust control tools that provide low-level control robustness using current system knowledge. These approaches are detailed individually and in an "on-orbit assembly scenario" of multi-waypoint tracking on orbit. Additionally, we discuss the practicalities of hardware implementation and unique aspects of working with Astrobee in microgravity.

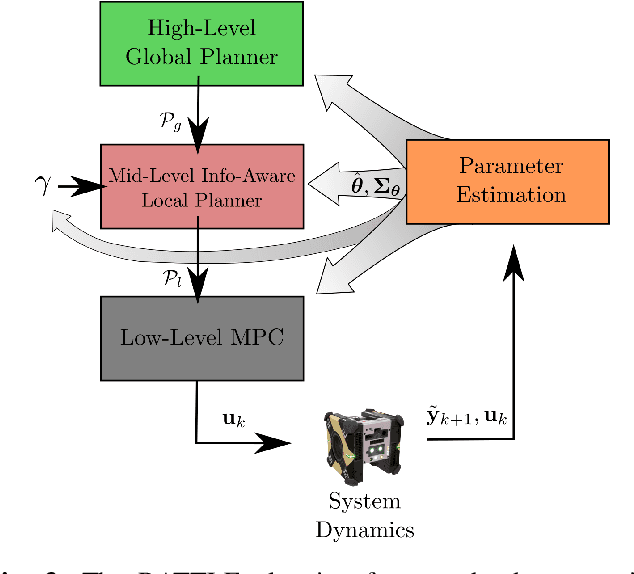

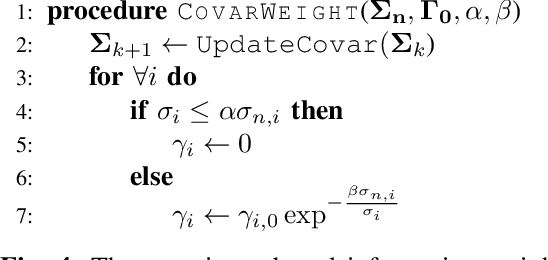

The RATTLE Motion Planning Algorithm for Robust Online Parametric Model Improvement with On-Orbit Validation

Mar 03, 2022

Abstract: Certain forms of uncertainty that robotic systems encounter, such as parametric model uncertainties like mass and moments of inertia, can be explicitly learned within the context of a known model. Quantifying such parametric uncertainty is important for more accurate prediction of the system behavior, leading to safe and precise task execution. In tandem, it is also desirable to provide some form of robustness guarantee against prevailing uncertainty levels, such as environmental disturbances and the uncertainty in current model knowledge. To that end, the authors' previously proposed RATTLE algorithm, a framework for online information-aware motion planning, is outlined and extended to enhance its applicability to real robotic systems. RATTLE provides a clear tradeoff between information-seeking motion and traditional goal-achieving motion and features online-updateable models. Additionally, we propose online-updateable low-level control robustness guarantees and a new method for automatically adjusting information content down to a specified estimation precision. Results of extensive experimentation in microgravity using the Astrobee robots aboard the International Space Station are presented together with practical implementation details, demonstrating RATTLE's capabilities for real-time, robust, online-updateable, and model-information-seeking motion planning under parametric uncertainty.
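
The tradeoff between information-seeking and goal-achieving motion can be illustrated with a toy scoring rule. RATTLE's actual cost function and weighting scheme are defined in the paper and are not reproduced here. This sketch assumes a single unknown inverse mass theta = 1/m, a linear-Gaussian measurement model a_t = theta * F_t + noise, and a hypothetical schedule `info_weight` that drops the information term once the estimate's variance reaches a target, loosely mirroring "adjusting information content down to a specified estimation precision". All names and numbers are illustrative.

```python
import math

def info_weight(variance, target_variance, w_max=1.0):
    """Hypothetical schedule: weight on information-seeking, reaching zero
    once the parameter variance has reached the target precision."""
    if variance <= target_variance:
        return 0.0
    return w_max * (1.0 - target_variance / variance)

def info_gain(forces, variance, sigma):
    """Entropy reduction (nats) about theta = 1/m for a_t = theta * F_t + N(0, sigma^2),
    starting from a Gaussian prior with the given variance."""
    fisher = sum(f * f for f in forces) / sigma ** 2
    posterior_variance = 1.0 / (1.0 / variance + fisher)
    return 0.5 * math.log(variance / posterior_variance)

def plan_cost(plan, variance, target_variance, sigma=0.1, w_goal=1.0):
    """Goal cost minus weighted information gain (lower is better)."""
    return (w_goal * plan["goal_error"]
            - info_weight(variance, target_variance)
            * info_gain(plan["forces"], variance, sigma))

def choose_plan(plans, variance, target_variance):
    """Return the name of the lowest-cost candidate plan."""
    return min(plans, key=lambda p: plan_cost(p, variance, target_variance))["name"]

plans = [
    {"name": "direct", "forces": [0.1, 0.1, 0.1], "goal_error": 0.0},
    {"name": "excite", "forces": [0.5, -0.5, 0.5], "goal_error": 0.2},
]
print(choose_plan(plans, variance=1.0, target_variance=0.01))    # → excite
print(choose_plan(plans, variance=0.005, target_variance=0.01))  # → direct
```

While the parameter is poorly known, the more exciting plan wins despite its worse goal error; once the target precision is reached, the information term vanishes and the plain goal-achieving plan is preferred.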

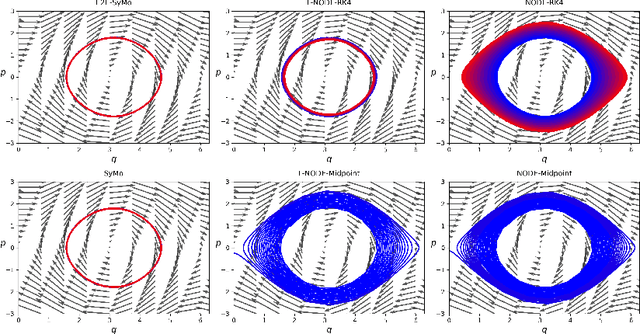

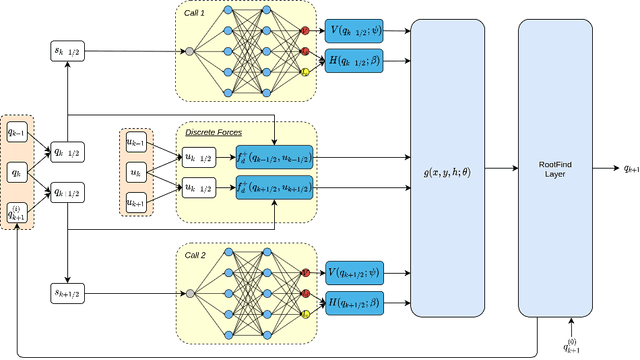

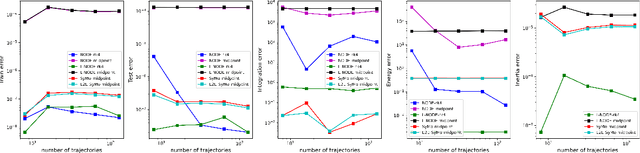

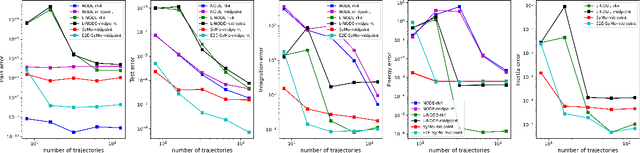

Symplectic Momentum Neural Networks -- Using Discrete Variational Mechanics as a prior in Deep Learning

Jan 21, 2022

Abstract: As deep learning gains attention from the research community for the prediction and control of real physical systems, learning appropriate representations is more necessary than ever. It is extremely important that deep learning representations are coherent with physics. When learning from discrete data, this can be guaranteed by including some sort of prior in the learning; however, not all discretization priors preserve important structures from the physics. In this paper, we introduce Symplectic Momentum Neural Networks (SyMo), models derived from a discrete formulation of mechanics for non-separable mechanical systems. This formulation constrains SyMos to preserve important geometric structures, such as momentum and a symplectic form, and to learn from limited data. Furthermore, it allows the dynamics to be learned from poses alone as training data. We extend SyMos to include variational integrators within the learning framework by developing an implicit root-finding layer, which leads to End-to-End Symplectic Momentum Neural Networks (E2E-SyMo). Through experiments on the pendulum and cartpole, we show that this combination not only allows these models to learn from limited data but also gives them the capability of preserving the symplectic form and yields better long-term behaviour.
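
SyMo builds on discrete variational mechanics: a discrete Lagrangian L_d(q_k, q_{k+1}) replaces the continuous one, and the discrete Euler-Lagrange (DEL) equation D2 L_d(q_{k-1}, q_k) + D1 L_d(q_k, q_{k+1}) = 0 defines the update. The sketch below uses a known pendulum Lagrangian with a midpoint-rule discrete Lagrangian, both chosen here as assumptions; in SyMo the corresponding terms would be learned. Because the DEL equation is implicit in q_{k+1}, each step solves it with Newton's method, the kind of root-finding that E2E-SyMo embeds as a layer.

```python
import math

def del_step(q_prev, q_curr, h, M, K, tol=1e-12, max_iter=50):
    """Solve the DEL equation for q_next with the midpoint discrete Lagrangian

    Ld(q0, q1) = h * [0.5*M*((q1 - q0)/h)**2 + K*cos((q0 + q1)/2)],

    i.e. D2 Ld(q_prev, q_curr) + D1 Ld(q_curr, q_next) = 0, via Newton's method.
    """
    # Discrete momentum p_k = D2 Ld(q_prev, q_curr).
    p_curr = M * (q_curr - q_prev) / h - 0.5 * h * K * math.sin(0.5 * (q_prev + q_curr))
    x = 2.0 * q_curr - q_prev  # explicit extrapolation as the initial guess
    for _ in range(max_iter):
        f = p_curr - M * (x - q_curr) / h - 0.5 * h * K * math.sin(0.5 * (q_curr + x))
        df = -M / h - 0.25 * h * K * math.cos(0.5 * (q_curr + x))
        dx = f / df
        x -= dx
        if abs(dx) < tol:
            break
    return x

def simulate(q0, v0, h=0.01, steps=10000, m=1.0, l=1.0, g=9.81):
    """Roll out a pendulum with the variational integrator and track its energy."""
    M, K = m * l * l, m * g * l
    # Second-order Taylor start for the first step.
    q = [q0, q0 + h * v0 - 0.5 * h * h * (K / M) * math.sin(q0)]
    for _ in range(steps - 1):
        q.append(del_step(q[-2], q[-1], h, M, K))
    # Energy from centred finite-difference velocities.
    energies = [0.5 * M * ((q[k + 1] - q[k - 1]) / (2 * h)) ** 2 - K * math.cos(q[k])
                for k in range(1, len(q) - 1)]
    return q, energies

q, energies = simulate(1.0, 0.0)
print(max(abs(e - energies[0]) for e in energies))  # size of the energy oscillation
```

Over the 100 simulated seconds the energy oscillates in a narrow band instead of drifting, the behaviour symplectic integrators are known for; the tolerance in the check reflects this toy setup, not any result from the paper.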

Online Information-Aware Motion Planning with Inertial Parameter Learning for Robotic Free-Flyers

Dec 11, 2021

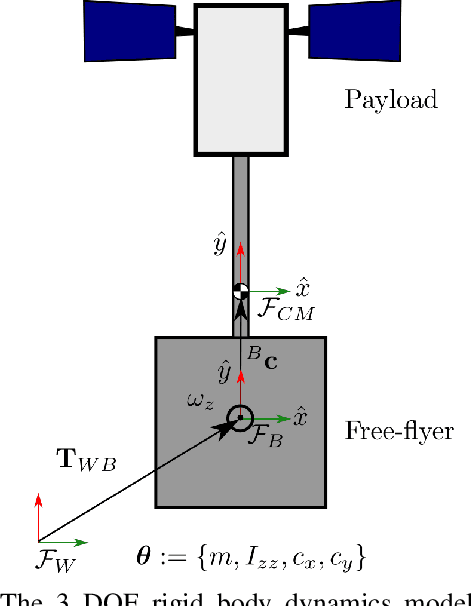

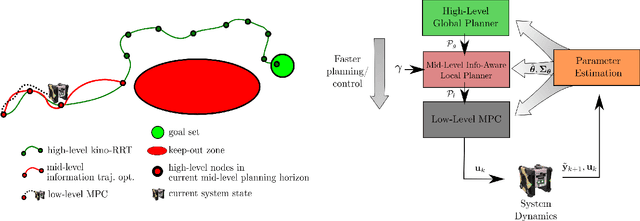

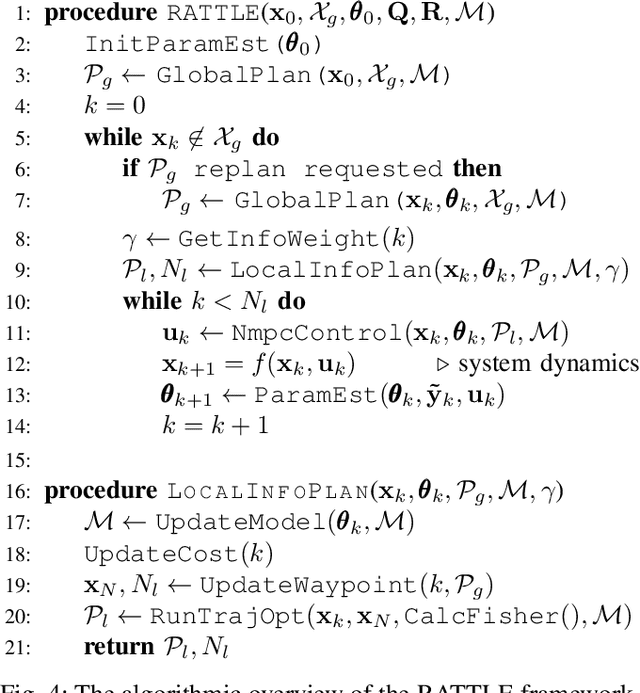

Abstract: Space free-flyers, like the Astrobee robots currently operating aboard the International Space Station, must operate with inherent system uncertainties. Parametric uncertainties like mass and moment of inertia are especially important to quantify in these safety-critical space systems and can change in scenarios such as on-orbit cargo movement, where unknown grappled payloads significantly change the system dynamics. Cautiously learning these uncertainties en route can potentially avoid time- and fuel-consuming pure system identification maneuvers. Recognizing this, this work proposes RATTLE, an online information-aware motion planning algorithm that explicitly weights parametric model learning and is coupled with a real-time replanning capability that can take advantage of improved system models. The method consists of a two-tiered (global and local) planner, a low-level model predictive controller, and an online parameter estimator that produces estimates of the robot's inertial properties for more informed control and replanning on the fly; all levels of planning and control feature online-updateable models. Simulation results of RATTLE for the Astrobee free-flyer grappling an uncertain payload are presented alongside results of a hardware demonstration showcasing the ability to explicitly encourage parametric model learning while achieving otherwise useful motion.
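
The online parameter estimator can be illustrated in its simplest form. RATTLE's own estimator and full inertial model (mass and moments of inertia) are not reproduced here; the sketch swaps in scalar recursive least squares (RLS) for mass only, assuming commanded forces are known and accelerations are measured with Gaussian noise. The 12 kg "robot plus grappled payload" mass and the 9 kg prior are made-up numbers.

```python
import random

class ScalarRLS:
    """Recursive least squares for y = theta * phi + noise with scalar theta."""

    def __init__(self, theta0, p0, forgetting=1.0):
        self.theta = theta0    # current estimate
        self.p = p0            # estimate covariance (large = weak prior)
        self.lam = forgetting  # 1.0 = no forgetting; < 1.0 tracks changing parameters

    def update(self, phi, y):
        gain = self.p * phi / (self.lam + phi * self.p * phi)
        self.theta += gain * (y - phi * self.theta)  # correct by the innovation
        self.p = (self.p - gain * phi * self.p) / self.lam
        return self.theta

def estimate_mass(true_mass, prior_mass, n=200, noise_std=1e-3, seed=0):
    """Estimate mass from commanded force F and measured acceleration a = F / m.

    The model is linear in theta = 1 / m, so RLS runs on theta.
    """
    rng = random.Random(seed)
    rls = ScalarRLS(theta0=1.0 / prior_mass, p0=1e3)
    for _ in range(n):
        force = rng.uniform(-0.5, 0.5)                         # commanded force [N]
        accel = force / true_mass + rng.gauss(0.0, noise_std)  # measured acceleration [m/s^2]
        rls.update(force, accel)
    return 1.0 / rls.theta

print(estimate_mass(true_mass=12.0, prior_mass=9.0))
```

Setting `forgetting` slightly below 1.0 would let the estimate follow a mass that changes mid-run, for example when a payload is grappled, at the cost of noisier estimates.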

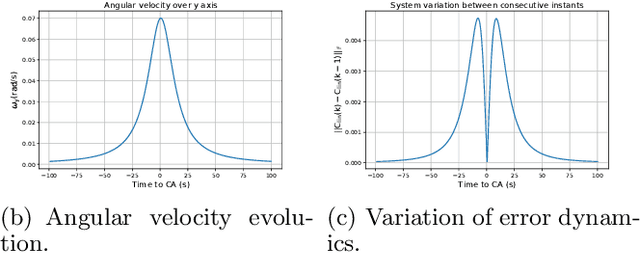

COSMIC: fast closed-form identification from large-scale data for LTV systems

Dec 08, 2021

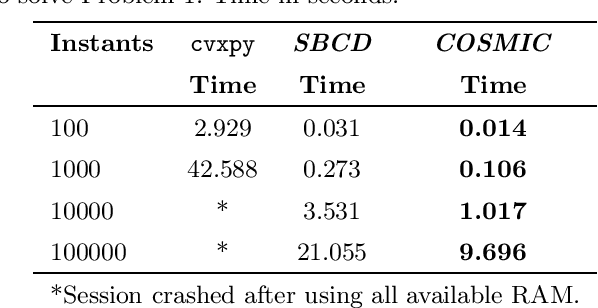

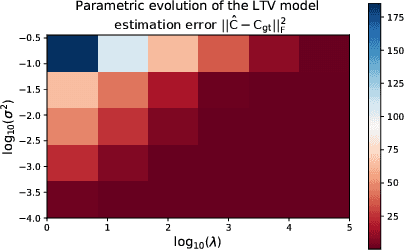

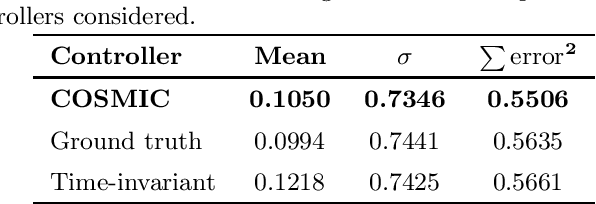

Abstract: We introduce a closed-form method for identifying discrete-time linear time-variant (LTV) systems from data, formulating the learning problem as a regularized least-squares problem in which the regularizer favors smooth solutions within a trajectory. We develop a closed-form algorithm with optimality guarantees and a complexity that increases linearly with the number of time instants considered per trajectory. The COSMIC algorithm achieves the desired result even in the presence of large volumes of data. Our method solved the problem using two orders of magnitude less computational power than a general-purpose convex solver and was about 3 times faster than a specially designed Stochastic Block Coordinate Descent method. Computation times of our method remained on the order of a second even for 10k and 100k time instants, where the general-purpose solver crashed. To demonstrate its applicability to real-world systems, we test it on a spring-mass-damper system and use the estimated model to find the optimal control path. Our algorithm was also applied to both the Low Fidelity and the Functional Engineering Simulators for the Comet Interceptor mission, which requires precise pointing of the on-board cameras in a fast dynamics environment. Thus, this paper provides a fast alternative to classical system identification techniques for linear time-variant systems, proves to be a solid base for applications in the space industry, and is a step toward incorporating algorithms that leverage data in such a safety-critical environment.
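
COSMIC handles matrix-valued system and input matrices; the sketch below is a deliberately reduced, hypothetical scalar version (no inputs, x_{t+1} = a_t x_t) meant only to show why a smoothness-regularized least-squares problem of this shape admits a closed-form solution whose cost grows linearly with the number of time instants. Minimising sum_t sum_i (x^i_{t+1} - a_t x^i_t)^2 + lam * sum_t (a_{t+1} - a_t)^2 gives tridiagonal normal equations, which the Thomas algorithm solves in O(T). It is not the COSMIC algorithm itself.

```python
import math
import random

def thomas(sub, diag, sup, rhs):
    """Solve a tridiagonal system in O(n). sub[i-1] = A[i][i-1], sup[i] = A[i][i+1]."""
    n = len(diag)
    c, d = [0.0] * n, [0.0] * n
    c[0] = sup[0] / diag[0] if n > 1 else 0.0
    d[0] = rhs[0] / diag[0]
    for i in range(1, n):
        m = diag[i] - sub[i - 1] * c[i - 1]
        c[i] = sup[i] / m if i < n - 1 else 0.0
        d[i] = (rhs[i] - sub[i - 1] * d[i - 1]) / m
    x = [0.0] * n
    x[-1] = d[-1]
    for i in range(n - 2, -1, -1):
        x[i] = d[i] - c[i] * x[i + 1]
    return x

def identify_scalar_ltv(trajs, lam):
    """Closed-form estimate of a_0..a_{T-1} in x_{t+1} = a_t x_t from N trajectories."""
    T = len(trajs[0]) - 1                                # transitions per trajectory
    s = [sum(x[t] * x[t] for x in trajs) for t in range(T)]
    r = [sum(x[t + 1] * x[t] for x in trajs) for t in range(T)]
    # Normal equations: (s_t + lam * #neighbours) a_t - lam a_{t-1} - lam a_{t+1} = r_t
    diag = [s[t] + lam * ((t > 0) + (t < T - 1)) for t in range(T)]
    off = [-lam] * (T - 1)
    return thomas(off, diag, off, r)

# Noise-free demo: 20 trajectories driven by a slowly varying a_t.
rng = random.Random(0)
a_true = [1.0 + 0.05 * math.sin(0.2 * t) for t in range(40)]
trajs = []
for _ in range(20):
    x = [rng.uniform(-1.0, 1.0)]
    for a_t in a_true:
        x.append(a_t * x[-1])
    trajs.append(x)
a_hat = identify_scalar_ltv(trajs, lam=1e-6)
print(max(abs(u - v) for u, v in zip(a_hat, a_true)))
```

With noisy data, lam trades data fit against smoothness of a_t over time: a larger value suppresses noise at the cost of lagging genuine parameter changes.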
