Paulo V. K. Borges

Online Adaptive Traversability Estimation through Interaction for Unstructured, Densely Vegetated Environments

Feb 04, 2025

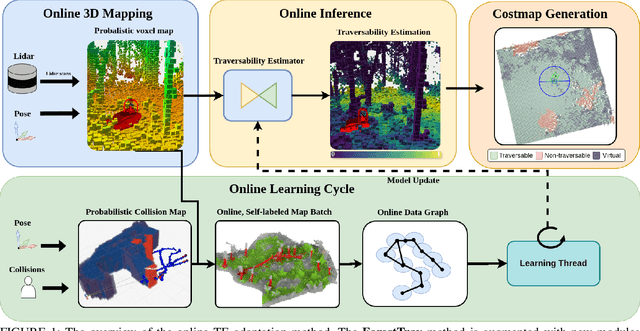

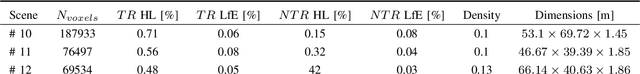

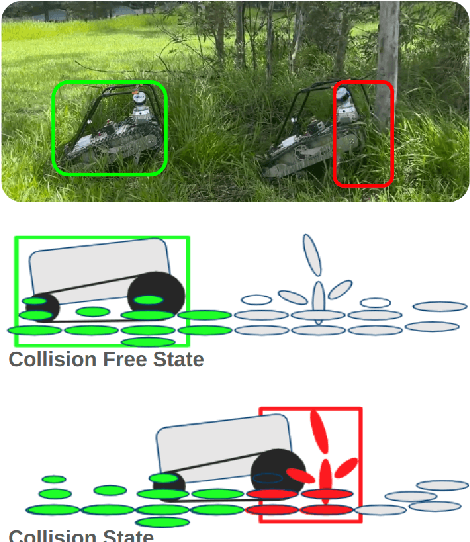

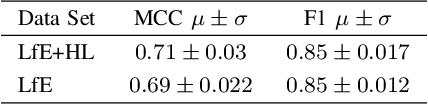

Abstract: Navigating densely vegetated environments poses significant challenges for autonomous ground vehicles. Learning-based systems typically use prior and in-situ data to predict terrain traversability, but their performance often degrades when they encounter out-of-distribution elements caused by rapid environmental changes or novel conditions. This paper presents a novel, lidar-only, online adaptive traversability estimation (TE) method that trains a model directly on the robot using self-supervised data collected through robot-environment interaction. The proposed approach utilises a probabilistic 3D voxel representation to integrate lidar measurements and robot experience, creating a salient environmental model. To ensure computational efficiency, a sparse graph-based representation is employed to update temporally evolving voxel distributions. Extensive experiments with an unmanned ground vehicle in natural terrain demonstrate that the system adapts to complex environments with as little as 8 minutes of operational data, achieving a Matthews Correlation Coefficient (MCC) score of 0.63 and enabling safe navigation in densely vegetated environments. This work also examines different training strategies for voxel-based TE methods and offers recommendations for improving adaptability. The proposed method is validated on a robotic platform with limited computational resources (a 25 W GPU), achieving accuracy comparable to offline-trained models while maintaining reliable performance across varied environments.
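The abstract reports its result as a Matthews Correlation Coefficient (MCC) of 0.63. As a reminder of what that number measures, here is a minimal sketch of MCC for binary traversability labels, assuming 1 means "traversable" and 0 means "non-traversable" (that encoding is an assumption for illustration, not taken from the paper). MCC uses all four confusion-matrix counts, which is why it is often preferred over accuracy when one class (e.g. obstacles) is much rarer than the other.

```python
import math
from typing import Sequence


def matthews_corrcoef(y_true: Sequence[int], y_pred: Sequence[int]) -> float:
    """MCC for binary labels; assumed encoding: 1 = traversable, 0 = non-traversable."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    # Confusion-matrix counts over all labelled samples (e.g. voxels).
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    denom = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    # Common convention: MCC is 0 when any row or column of the confusion matrix is empty.
    return 0.0 if denom == 0 else (tp * tn - fp * fn) / denom


if __name__ == "__main__":
    truth = [1, 1, 0, 0, 1, 0]
    pred = [1, 0, 0, 0, 1, 1]
    print(round(matthews_corrcoef(truth, pred), 3))  # prints 0.333
```

MCC ranges from -1 (total disagreement) through 0 (no better than chance) to +1 (perfect prediction).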

Unsupervised Change Detection for Space Habitats Using 3D Point Clouds

Dec 04, 2023

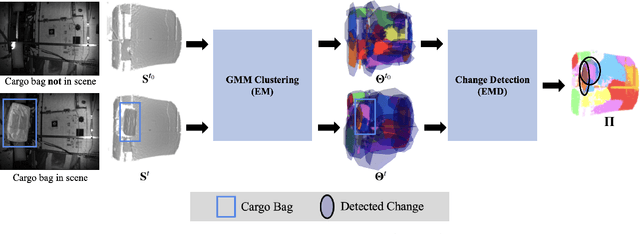

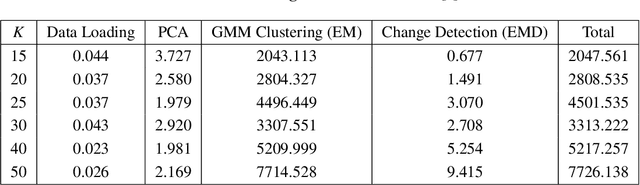

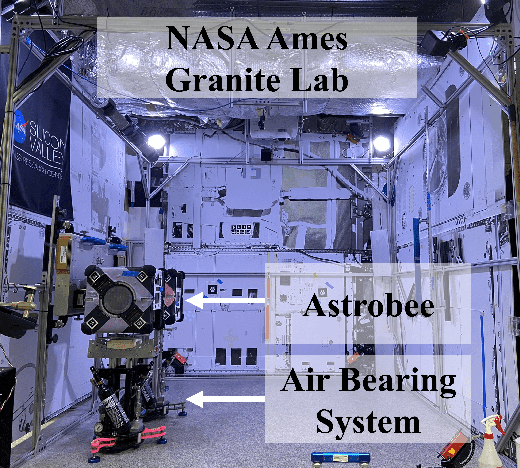

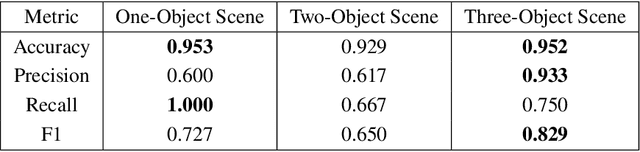

Abstract: This work presents an algorithm for scene change detection from point clouds to enable autonomous robotic caretaking in future space habitats. Autonomous robotic systems will help maintain future deep-space habitats, such as the Gateway space station, which will be uncrewed for extended periods. Existing scene analysis software used on the International Space Station (ISS) relies on manually labeled images for detecting changes. In contrast, the algorithm presented in this work uses raw, unlabeled point clouds as inputs. The algorithm first applies modified Expectation-Maximization Gaussian Mixture Model (GMM) clustering to two input point clouds. It then performs change detection by comparing the GMMs using the Earth Mover's Distance. The algorithm is validated quantitatively and qualitatively on a test dataset collected by an Astrobee robot in the NASA Ames Granite Lab. The dataset comprises single-frame depth images taken directly by Astrobee and full-scene reconstructed maps built from Astrobee's RGB-D and pose data. The runtimes of the approach are also analyzed in depth. The source code is publicly released to promote further development.
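To make the GMM-comparison step more concrete, here is a minimal sketch of an Earth Mover's Distance between two Gaussian mixtures. It is not the released implementation, and it rests on simplifying assumptions stated here and in the code: both mixtures have the same number of equally weighted components with diagonal covariances, and the ground distance between two components is the 2-Wasserstein distance between Gaussians. Under equal weights the optimal transport plan is a permutation, so a brute-force search over assignments is exact (but only practical for a handful of components). The `Gaussian3D` type, `changed_components` helper, and `threshold` parameter are hypothetical names for illustration.

```python
import itertools
import math
from dataclasses import dataclass
from typing import List, Sequence, Tuple


@dataclass
class Gaussian3D:
    """One mixture component; diagonal covariance is a simplifying assumption."""
    mean: Tuple[float, float, float]
    var: Tuple[float, float, float]  # per-axis variances


def w2_diag(a: Gaussian3D, b: Gaussian3D) -> float:
    """2-Wasserstein distance between two Gaussians with diagonal covariances."""
    mean_term = sum((x - y) ** 2 for x, y in zip(a.mean, b.mean))
    cov_term = sum((math.sqrt(u) - math.sqrt(v)) ** 2 for u, v in zip(a.var, b.var))
    return math.sqrt(mean_term + cov_term)


def emd_equal_weights(gmm_a: Sequence[Gaussian3D],
                      gmm_b: Sequence[Gaussian3D]) -> Tuple[float, List[int]]:
    """EMD between two mixtures with equal component counts and uniform weights.

    Returns the EMD and the optimal assignment perm, where component i of gmm_a
    is transported to component perm[i] of gmm_b.
    """
    n = len(gmm_a)
    if n == 0 or n != len(gmm_b):
        raise ValueError("mixtures must be non-empty with equal component counts")
    best_cost, best_perm = math.inf, []
    for perm in itertools.permutations(range(n)):
        # Each component carries mass 1/n, so the cost is the mean matched distance.
        cost = sum(w2_diag(gmm_a[i], gmm_b[j]) for i, j in enumerate(perm)) / n
        if cost < best_cost:
            best_cost, best_perm = cost, list(perm)
    return best_cost, best_perm


def changed_components(gmm_a: Sequence[Gaussian3D], gmm_b: Sequence[Gaussian3D],
                       threshold: float) -> List[int]:
    """Hypothetical change flag: components of gmm_a whose matched partner is far away."""
    _, perm = emd_equal_weights(gmm_a, gmm_b)
    return [i for i, j in enumerate(perm) if w2_diag(gmm_a[i], gmm_b[j]) > threshold]
```

For mixtures with unequal weights, which EM clustering generally produces, the EMD becomes a transportation linear program and needs a proper solver instead of the permutation search.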

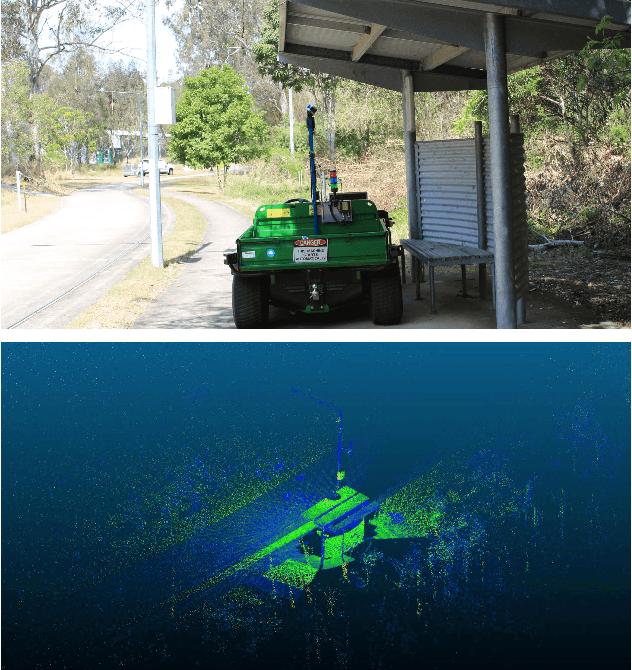

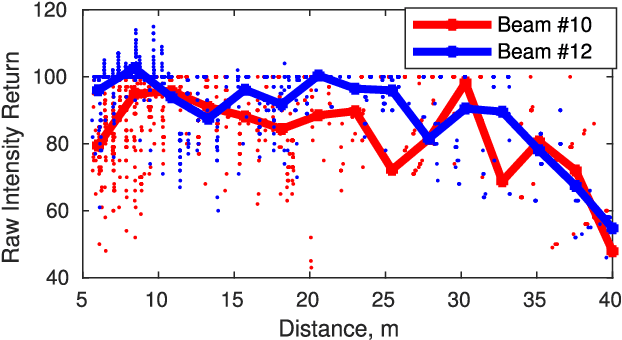

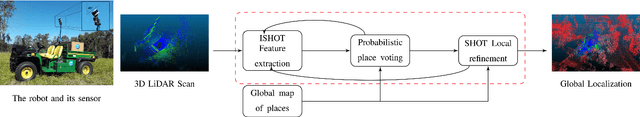

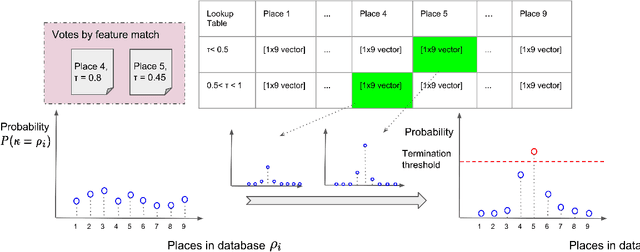

Local Descriptor for Robust Place Recognition using LiDAR Intensity

Nov 30, 2018

Abstract: Place recognition is a challenging problem in mobile robotics, especially in unstructured environments or under viewpoint and illumination changes. Most LiDAR-based methods rely on geometrical features to overcome such challenges, as scene geometry is generally invariant to these changes, which tend to affect camera-based solutions significantly. Compared to cameras, however, LiDARs lack the strong and descriptive appearance information that imaging can provide. To combine the benefits of geometry and appearance, we propose coupling the conventional geometric information from the LiDAR with its calibrated intensity return. This strategy captures complementary information in a new descriptor design, coined ISHOT, which outperforms popular state-of-the-art geometry-only descriptors by a significant margin in our local descriptor evaluation. To complete the framework, we further develop a probabilistic keypoint voting place recognition algorithm that leverages the new descriptor and yields sublinear place recognition performance. The efficacy of our approach is validated in challenging global localization experiments in large-scale built-up and unstructured environments.
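The core idea of coupling geometry with calibrated intensity can be illustrated with a much-simplified, SHOT-inspired sketch. This is not ISHOT: it has no local reference frame and no spatial grid, and simply concatenates a histogram of normal-angle cosines (geometry) with a histogram of intensity differences (appearance) over the neighbours within a support radius. It assumes unit-length normals and intensities calibrated to [0, 1]; the function names and bin counts are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def _histogram(values: Sequence[float], lo: float, hi: float, bins: int) -> List[float]:
    """Normalized histogram of values clipped to [lo, hi]; all zeros if empty."""
    counts = [0.0] * bins
    for v in values:
        v = min(max(v, lo), hi)
        idx = min(int((v - lo) / (hi - lo) * bins), bins - 1)
        counts[idx] += 1.0
    total = sum(counts)
    return [c / total for c in counts] if total > 0 else counts


def geometry_intensity_descriptor(keypoint: Vec3, key_normal: Vec3, key_intensity: float,
                                  points: Sequence[Vec3], normals: Sequence[Vec3],
                                  intensities: Sequence[float], radius: float,
                                  geo_bins: int = 8, int_bins: int = 8) -> List[float]:
    """Concatenated geometric + intensity histogram (hypothetical simplification)."""
    cos_vals, int_diffs = [], []
    for p, n, i in zip(points, normals, intensities):
        if math.dist(p, keypoint) <= radius:
            # Geometry cue: cosine between neighbour normal and keypoint normal.
            cos_vals.append(sum(a * b for a, b in zip(n, key_normal)))
            # Appearance cue: calibrated intensity relative to the keypoint.
            int_diffs.append(i - key_intensity)
    geometric = _histogram(cos_vals, -1.0, 1.0, geo_bins)
    appearance = _histogram(int_diffs, -1.0, 1.0, int_bins)  # assumes intensities in [0, 1]
    return geometric + appearance
```

Two keypoints with identical local geometry but different surface reflectance yield the same geometric half and different intensity halves, which is the kind of ambiguity a geometry-only descriptor cannot resolve.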
