Emili Hernández

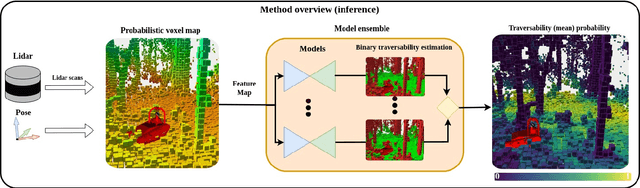

Online Adaptive Traversability Estimation through Interaction for Unstructured, Densely Vegetated Environments

Feb 04, 2025

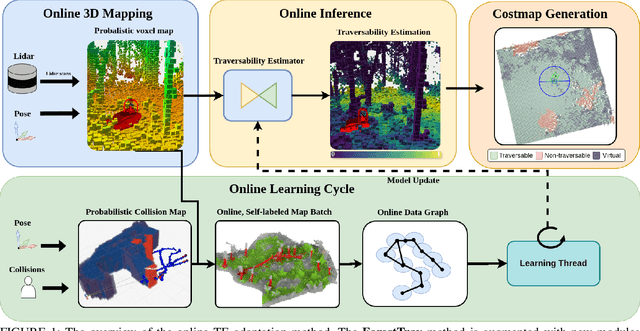

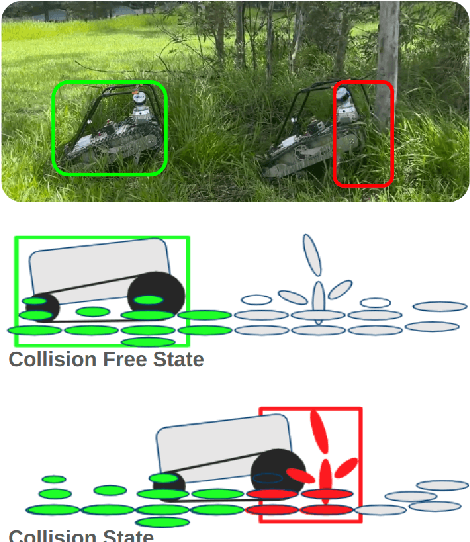

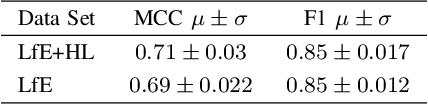

Abstract: Navigating densely vegetated environments poses significant challenges for autonomous ground vehicles. Learning-based systems typically use prior and in-situ data to predict terrain traversability but often degrade in performance when encountering out-of-distribution elements caused by rapid environmental changes or novel conditions. This paper presents a novel, lidar-only, online adaptive traversability estimation (TE) method that trains a model directly on the robot using self-supervised data collected through robot-environment interaction. The proposed approach utilises a probabilistic 3D voxel representation to integrate lidar measurements and robot experience, creating a salient environmental model. To ensure computational efficiency, a sparse graph-based representation is employed to update temporally evolving voxel distributions. Extensive experiments with an unmanned ground vehicle in natural terrain demonstrate that the system adapts to complex environments with as little as 8 minutes of operational data, achieving a Matthews Correlation Coefficient (MCC) score of 0.63 and enabling safe navigation in densely vegetated environments. This work examines different training strategies for voxel-based TE methods and offers recommendations for training strategies to improve adaptability. The proposed method is validated on a robotic platform with limited computational resources (25W GPU), achieving accuracy comparable to offline-trained models while maintaining reliable performance across varied environments.
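For readers unfamiliar with the metric quoted above, the following is a minimal sketch of how a Matthews Correlation Coefficient is computed from a binary confusion matrix (traversable vs. non-traversable voxels). The function name `matthews_corrcoef` and the counts in the usage example are illustrative assumptions, not values or code from the paper.

```python
import math


def matthews_corrcoef(tp: int, tn: int, fp: int, fn: int) -> float:
    """Matthews Correlation Coefficient for a binary confusion matrix.

    Ranges from -1 (total disagreement) through 0 (no better than chance)
    to +1 (perfect prediction).
    """
    numerator = tp * tn - fp * fn
    denominator = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    # By convention, return 0 when any row or column of the matrix is empty.
    if denominator == 0:
        return 0.0
    return numerator / denominator


# Hypothetical voxel counts, chosen only to illustrate the calculation.
score = matthews_corrcoef(tp=80, tn=85, fp=15, fn=20)
print(f"MCC = {score:.2f}")
```

Unlike plain accuracy, the MCC uses all four cells of the confusion matrix, so it stays informative when traversable and non-traversable voxels are heavily imbalanced.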

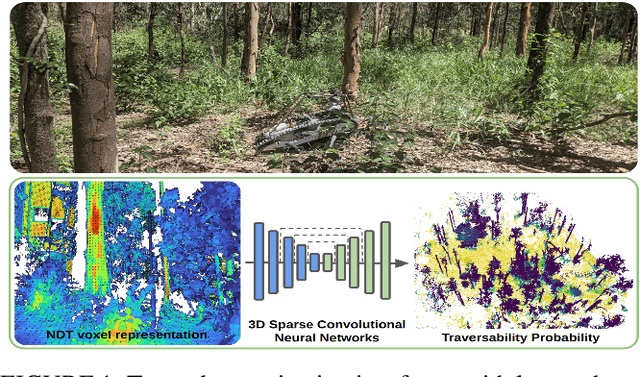

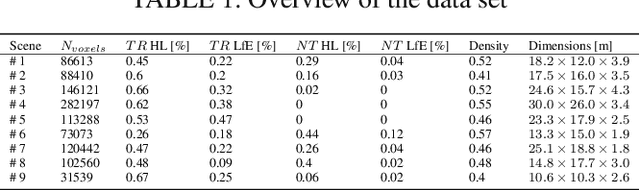

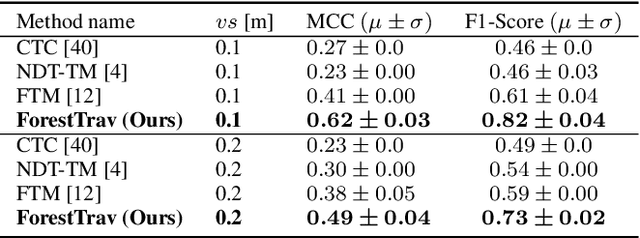

ForestTrav: Accurate, Efficient and Deployable Forest Traversability Estimation for Autonomous Ground Vehicles

May 22, 2023

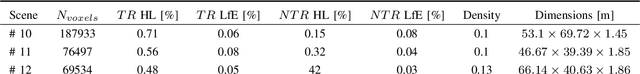

Abstract: Autonomous navigation in unstructured vegetated environments remains an open challenge. To successfully operate in these settings, ground vehicles must assess the traversability of the environment and determine which vegetation is pliable enough to push through. In this work, we propose a novel method that combines a high-fidelity and feature-rich 3D voxel representation while leveraging the structural context and sparseness of sparse convolutional neural networks (SCNNs) to assess traversability estimation (TE) in densely vegetated environments. The proposed method is thoroughly evaluated on an accurately labeled real-world data set that we provide to the community. It is shown to outperform state-of-the-art methods by a significant margin (0.59 vs. 0.39 MCC score at 0.1m voxel resolution) in challenging scenes and to generalize to unseen environments. In addition, the method is economical in the amount of training data and training time required: a model is trained in minutes on a desktop computer. We show that by exploiting the context of the environment, our method can use different feature combinations with only limited performance variations. For example, our approach can be used with lidar-only features, whilst still assessing complex vegetated environments accurately, which was not demonstrated previously in the literature in such environments. In addition, we propose an approach to assess a traversability estimator's sensitivity to information quality and show our method's sensitivity is low.

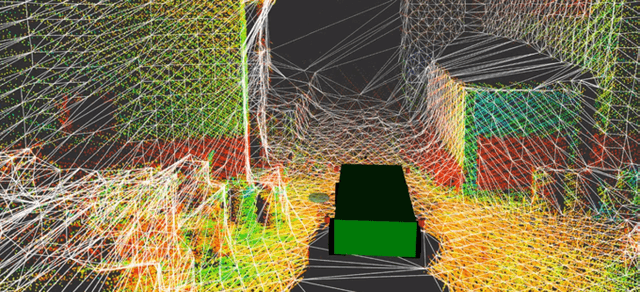

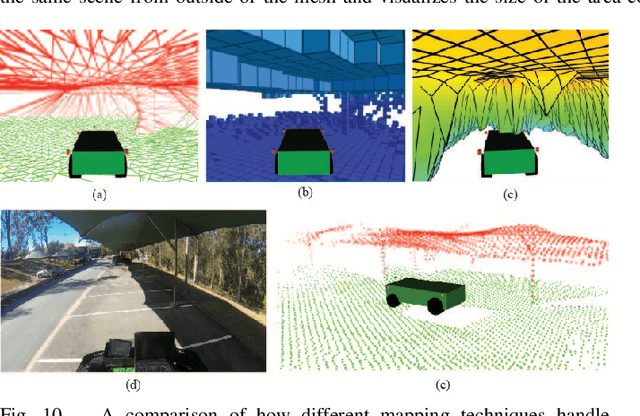

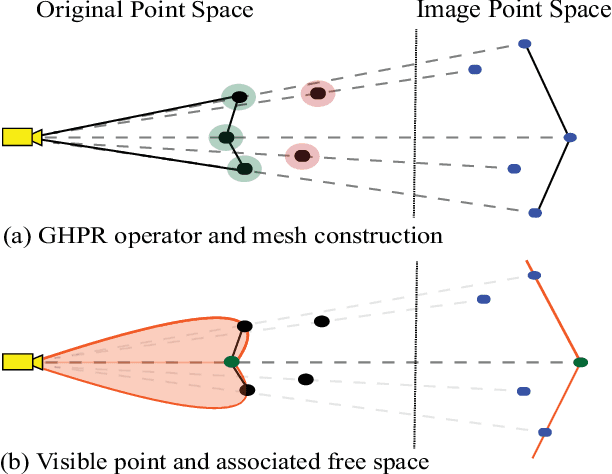

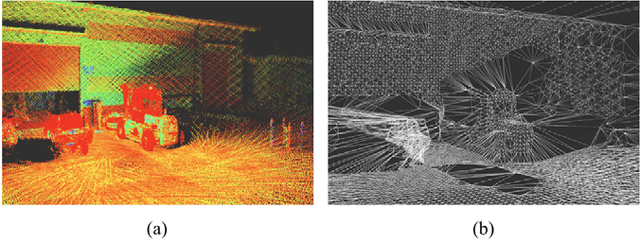

OVPC Mesh: 3D Free-space Representation for Local Ground Vehicle Navigation

Nov 26, 2018

Abstract: This paper presents a novel approach for local 3D environment representation for autonomous unmanned ground vehicle (UGV) navigation called On Visible Point Clouds Mesh (OVPC Mesh). Our approach represents the surroundings of the robot as a watertight 3D mesh generated from local point cloud data in order to represent the free space surrounding the robot. It is a conservative estimation of the free space and provides a desirable trade-off between representation precision and computational efficiency, without having to discretize the environment into a fixed grid size. Our experiments analyze the usability of the approach for UGV navigation in rough terrain, both in simulation and in a fully integrated real-world system. Additionally, we compare our approach to well-known state-of-the-art solutions, such as Octomap and Elevation Mapping, and show that OVPC Mesh can provide reliable 3D information for trajectory planning while fulfilling real-time constraints.
