Kasra Khosoussi

Joint State and Noise Covariance Estimation

Feb 07, 2025

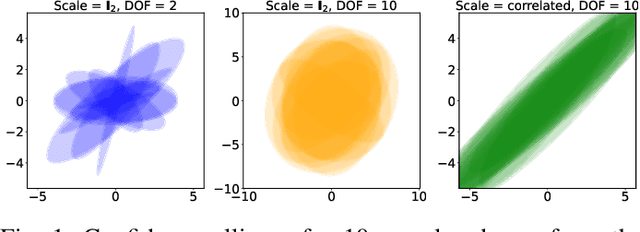

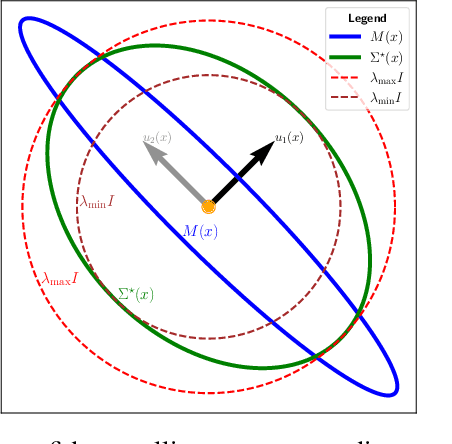

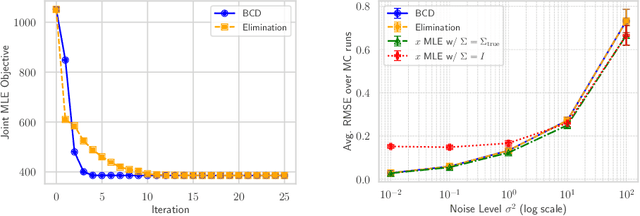

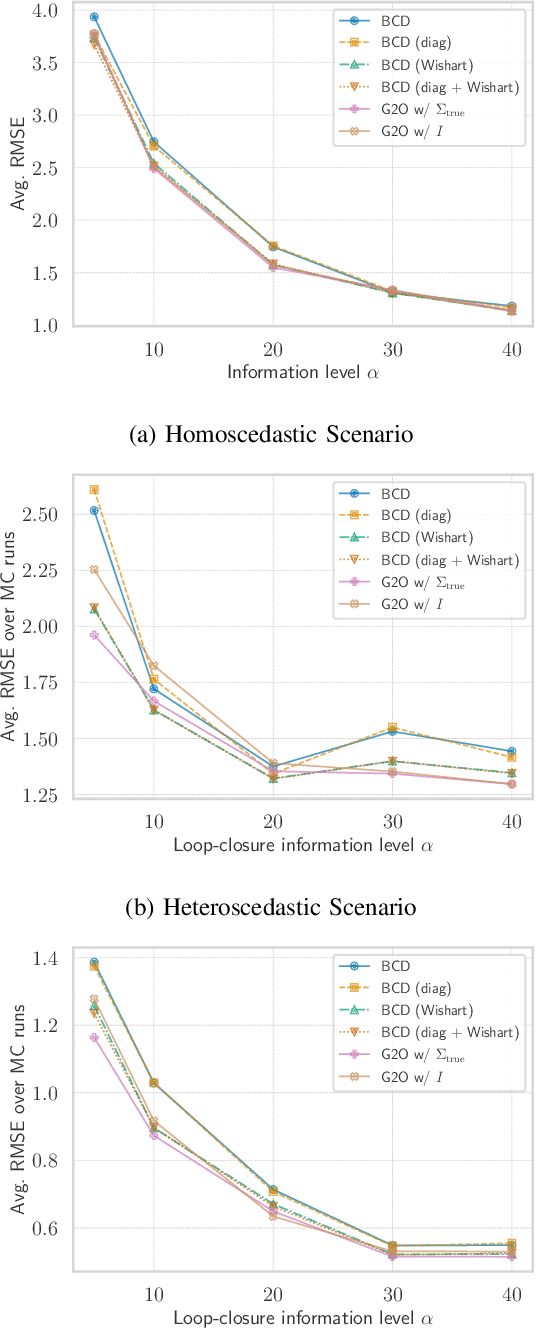

Abstract: This paper tackles the problem of jointly estimating the noise covariance matrix alongside primary parameters (such as poses and points) from measurements corrupted by Gaussian noise. In such settings, the noise covariance matrix determines the weights assigned to individual measurements in the least squares problem. We show that the joint problem exhibits a convex structure and provide a full characterization of the optimal noise covariance estimate (with analytical solutions) within joint maximum a posteriori and likelihood frameworks and several variants. Leveraging this theoretical result, we propose two novel algorithms that jointly estimate the primary parameters and the noise covariance matrix. To validate our approach, we conduct extensive experiments across diverse scenarios and offer practical insights into their application in robotics and computer vision estimation problems with a particular focus on SLAM.
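As a rough illustration of how a noise covariance sets the weights in a least squares problem, the sketch below alternates between a weighted linear least squares solve and a closed-form covariance update. It is a textbook alternating scheme under simplifying assumptions (a linear model `z_i = A_i x + noise_i` with i.i.d. zero-mean Gaussian noise and no prior on the covariance), not the algorithms proposed in the paper. The names `ml_noise_covariance` and `alternate` are hypothetical.

```python
import numpy as np


def ml_noise_covariance(residuals):
    """Maximum-likelihood covariance of zero-mean residuals: (1/m) * sum_i r_i r_i^T."""
    r = np.asarray(residuals, dtype=float)
    return r.T @ r / r.shape[0]


def alternate(A_blocks, z, iters=20):
    """Alternate between a weighted least squares solve and a covariance update.

    Assumed (hypothetical) model: z_i = A_i x + noise_i, noise_i ~ N(0, Sigma).
    A_blocks has shape (m, d, n); z has shape (m, d).
    """
    m, d, n = A_blocks.shape
    sigma = np.eye(d)  # start from unit weights
    for _ in range(iters):
        w = np.linalg.inv(sigma)  # the inverse covariance weights each measurement
        # Normal equations: (sum_i A_i^T W A_i) x = sum_i A_i^T W z_i
        H = np.einsum("idn,de,iem->nm", A_blocks, w, A_blocks)
        b = np.einsum("idn,de,ie->n", A_blocks, w, z)
        x = np.linalg.solve(H, b)
        # Closed-form covariance update from the current residuals
        residuals = z - np.einsum("idn,n->id", A_blocks, x)
        sigma = ml_noise_covariance(residuals)
    return x, sigma
```

This sketch assumes many more measurements than unknowns; with too few measurements the residual covariance can become singular and the inversion fails, which is one reason a prior or constraint on the covariance may be needed in practice.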

Under-Canopy Navigation using Aerial Lidar Maps

Apr 05, 2024

Abstract: Autonomous navigation in unstructured natural environments poses a significant challenge. In goal navigation tasks without prior information, the limited look-ahead of onboard sensors utilised by robots compromises path efficiency. We propose a novel approach that leverages an above-the-canopy aerial map for improved ground robot navigation. Our system utilises aerial lidar scans to create a 3D probabilistic occupancy map, uniquely incorporating the uncertainty in the aerial vehicle's trajectory for improved accuracy. Novel path planning cost functions are introduced, combining path length with obstruction risk estimated from the probabilistic map. The D-Star Lite algorithm then calculates an optimal (minimum-cost) path to the goal. This system also allows for dynamic replanning upon encountering unforeseen obstacles on the ground. Extensive experiments and ablation studies in simulated and real forests demonstrate the effectiveness of our system.
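The idea of trading path length against obstruction risk can be sketched on a small 2D grid. The example below is only illustrative: it uses plain Dijkstra search rather than D-Star Lite (so it does not replan incrementally), and the cost form `1 + risk_weight * risk` is an assumption, not one of the paper's cost functions. The function name `plan_path` and the parameter `risk_weight` are hypothetical.

```python
import heapq
import math


def plan_path(risk, start, goal, risk_weight=5.0):
    """Minimum-cost 4-connected grid path; each step costs 1 + risk_weight * risk[cell].

    `risk` is a 2D list of obstruction probabilities in [0, 1] (assumed input format).
    Returns (path, cost), or (None, inf) if the goal is unreachable.
    """
    rows, cols = len(risk), len(risk[0])
    best = {start: 0.0}
    parent = {}
    queue = [(0.0, start)]
    while queue:
        cost, cell = heapq.heappop(queue)
        if cell == goal:
            break
        if cost > best.get(cell, math.inf):
            continue  # stale queue entry
        r, c = cell
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nr, nc = r + dr, c + dc
            if 0 <= nr < rows and 0 <= nc < cols:
                # Unit step length plus a penalty for the risk of the entered cell
                new_cost = cost + 1.0 + risk_weight * risk[nr][nc]
                if new_cost < best.get((nr, nc), math.inf):
                    best[(nr, nc)] = new_cost
                    parent[(nr, nc)] = cell
                    heapq.heappush(queue, (new_cost, (nr, nc)))
    if goal not in best:
        return None, math.inf
    path = [goal]
    while path[-1] != start:
        path.append(parent[path[-1]])
    path.reverse()
    return path, best[goal]
```

With `risk_weight=0` the planner returns the shortest path regardless of risk; larger weights make it accept longer detours around cells that are likely to be obstructed.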

Data-Association-Free Landmark-based SLAM

Mar 07, 2023

Abstract: We study landmark-based SLAM with unknown data association: our robot navigates in a completely unknown environment and has to simultaneously reason over its own trajectory, the positions of an unknown number of landmarks in the environment, and potential data associations between measurements and landmarks. This setup is interesting since: (i) it arises when recovering from data association failures or from SLAM with information-poor sensors, (ii) it sheds light on fundamental limits (and hardness) of landmark-based SLAM problems irrespective of the front-end data association method, and (iii) it generalizes existing approaches where data association is assumed to be known or partially known. We approach the problem by splitting it into an inner problem of estimating the trajectory, landmark positions and data associations and an outer problem of estimating the number of landmarks. Our approach creates useful and novel connections with existing techniques from discrete-continuous optimization (e.g., k-means clustering), which has the potential to trigger novel research. We demonstrate the proposed approaches in extensive simulations and on real datasets and show that the proposed techniques outperform typical data association baselines and are even competitive against an "oracle" baseline which has access to the number of landmarks and an initial guess for each landmark.

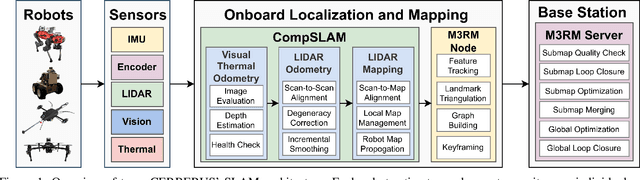

Heterogeneous robot teams with unified perception and autonomy: How Team CSIRO Data61 tied for the top score at the DARPA Subterranean Challenge

Feb 26, 2023

Abstract: The DARPA Subterranean Challenge was designed for competitors to develop and deploy teams of autonomous robots to explore difficult unknown underground environments. Categorised into human-made tunnels, underground urban infrastructure and natural caves, each of these subdomains had many challenging elements for robot perception, locomotion, navigation and autonomy. These included degraded wireless communication, poor visibility due to smoke, narrow passages and doorways, clutter, uneven ground, slippery and loose terrain, stairs, ledges, overhangs, dripping water, and dynamic obstacles that move to block paths, among others. In the Final Event of this challenge held in September 2021, the course consisted of all three subdomains. The task was for the robot team to perform a scavenger hunt for a number of pre-defined artefacts within a limited time frame. Only one human supervisor was allowed to communicate with the robots once they were in the course. Points were scored when accurate detections and their locations were communicated back to the scoring server. A total of 8 teams competed in the finals held at the Mega Cavern in Louisville, KY, USA. This article describes the systems deployed by Team CSIRO Data61 that tied for the top score and won second place at the event.

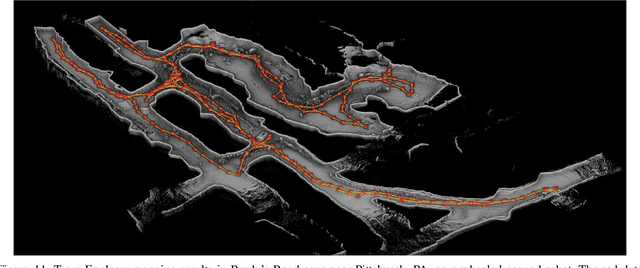

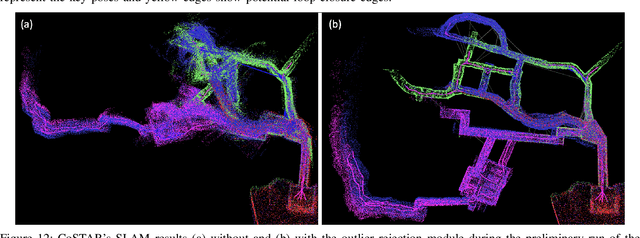

Present and Future of SLAM in Extreme Underground Environments

Aug 02, 2022

Abstract: This paper reports on the state of the art in underground SLAM by discussing different SLAM strategies and results across six teams that participated in the three-year-long SubT competition. In particular, the paper has four main goals. First, we review the algorithms, architectures, and systems adopted by the teams; particular emphasis is put on lidar-centric SLAM solutions (the go-to approach for virtually all teams in the competition), heterogeneous multi-robot operation (including both aerial and ground robots), and real-world underground operation (from the presence of obscurants to the need to handle tight computational constraints). We do not shy away from discussing the dirty details behind the different SubT SLAM systems, which are often omitted from technical papers. Second, we discuss the maturity of the field by highlighting what is possible with the current SLAM systems and what we believe is within reach with some good systems engineering. Third, we outline what we believe are fundamental open problems that are likely to require further research to break through. Finally, we provide a list of open-source SLAM implementations and datasets that have been produced during the SubT challenge and related efforts, and constitute a useful resource for researchers and practitioners.

Energy-Aware, Collision-Free Information Gathering for Heterogeneous Robot Teams

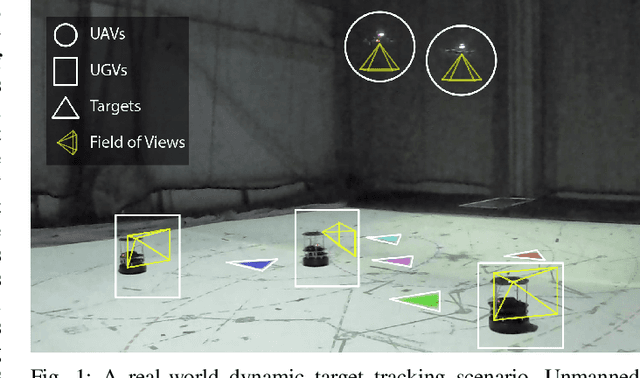

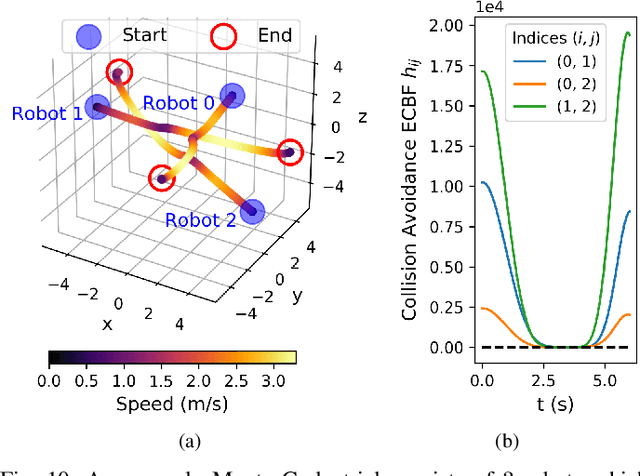

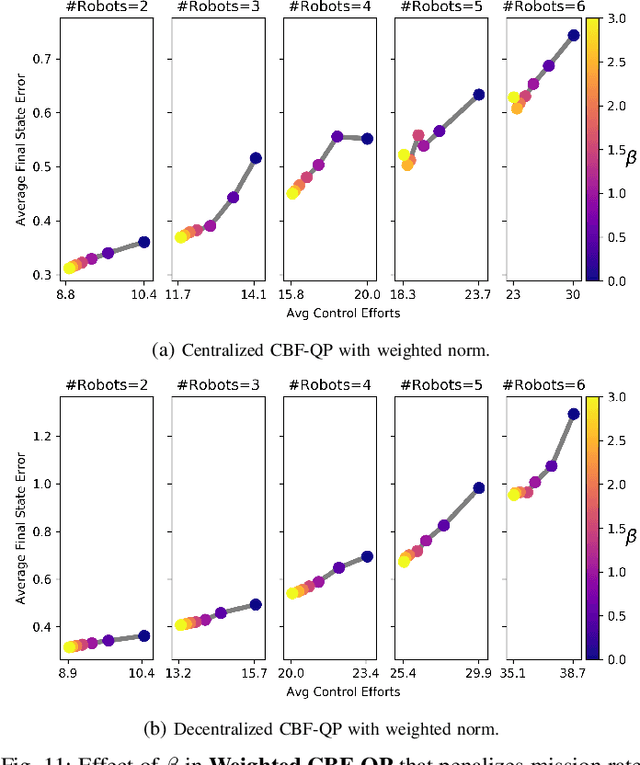

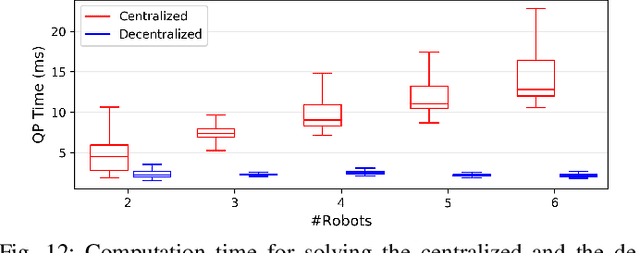

Jul 30, 2022

Abstract: This paper considers the problem of safely coordinating a team of sensor-equipped robots to reduce uncertainty about a dynamical process, where the objective trades off information gain and energy cost. Optimizing this trade-off is desirable, but leads to a non-monotone objective function in the set of robot trajectories. Therefore, common multi-robot planners based on coordinate descent lose their performance guarantees. Furthermore, methods that handle non-monotonicity lose their performance guarantees when subject to inter-robot collision avoidance constraints. As it is desirable to retain both the performance guarantee and safety guarantee, this work proposes a hierarchical approach with a distributed planner that uses local search with a worst-case performance guarantee and a decentralized controller based on control barrier functions that ensures safety and encourages timely arrival at sensing locations. Via extensive simulations, hardware-in-the-loop tests and hardware experiments, we demonstrate that the proposed approach achieves a better trade-off between sensing and energy cost than coordinate-descent-based algorithms.

Visual Navigation for Autonomous Vehicles: An Open-source Hands-on Robotics Course at MIT

Jun 01, 2022

Abstract: This paper reports on the development, execution, and open-sourcing of a new robotics course at MIT. The course is a modern take on "Visual Navigation for Autonomous Vehicles" (VNAV) and targets first-year graduate students and senior undergraduates with prior exposure to robotics. VNAV has the goal of preparing the students to perform research in robotics and vision-based navigation, with emphasis on drones and self-driving cars. The course spans the entire autonomous navigation pipeline; as such, it covers a broad set of topics, including geometric control and trajectory optimization, 2D and 3D computer vision, visual and visual-inertial odometry, place recognition, simultaneous localization and mapping, and geometric deep learning for perception. VNAV has three key features. First, it bridges traditional computer vision and robotics courses by exposing the challenges that are specific to embodied intelligence, e.g., limited computation and need for just-in-time and robust perception to close the loop over control and decision making. Second, it strikes a balance between depth and breadth by combining rigorous technical notes (including topics that are less explored in typical robotics courses, e.g., on-manifold optimization) with slides and videos showcasing the latest research results. Third, it provides a compelling approach to hands-on robotics education by leveraging a physical drone platform (mostly suitable for small residential courses) and a photo-realistic Unity-based simulator (open-source and scalable to large online courses). VNAV has been offered at MIT in the Falls of 2018-2021 and is now publicly available on MIT OpenCourseWare (OCW).

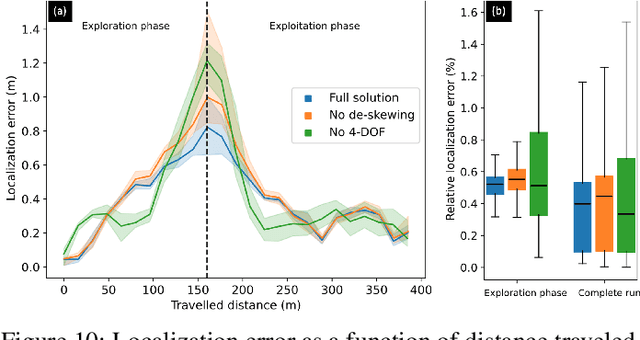

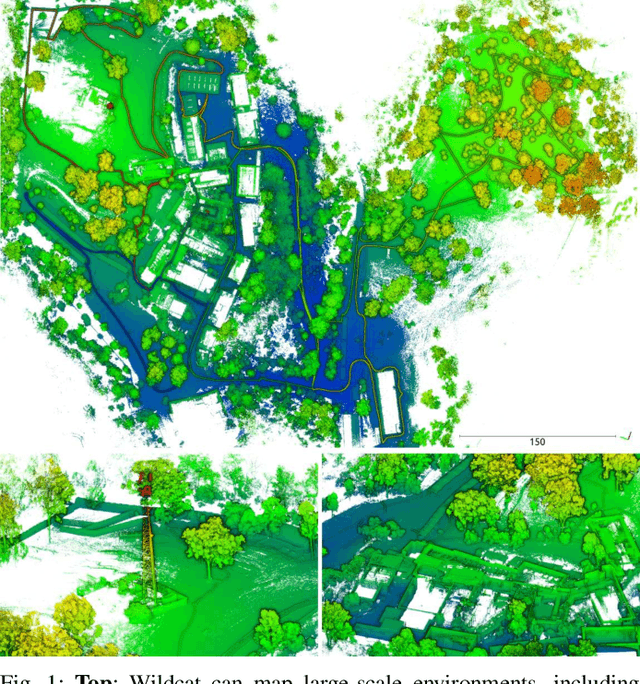

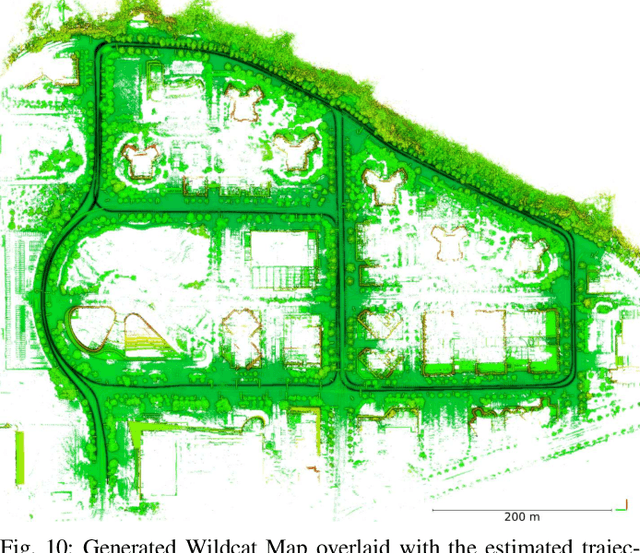

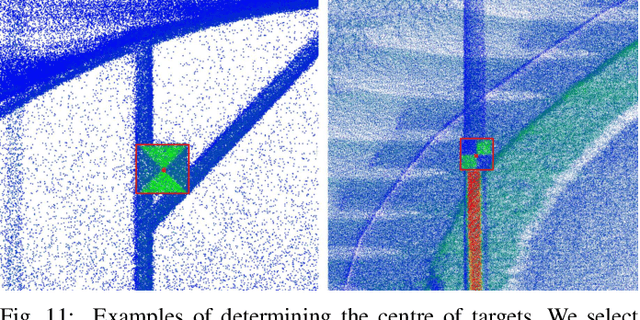

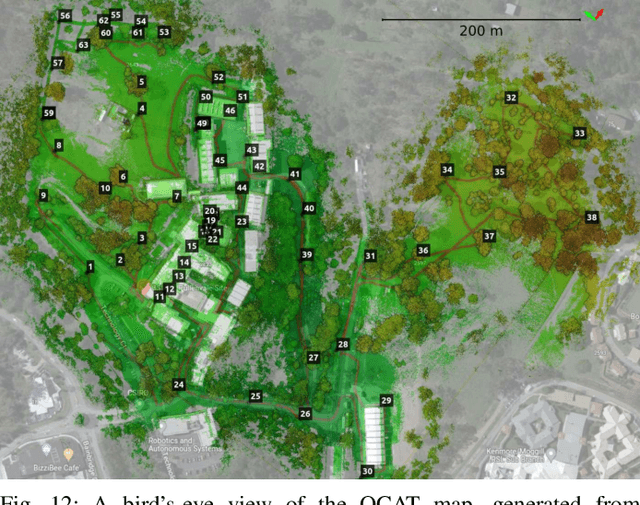

Wildcat: Online Continuous-Time 3D Lidar-Inertial SLAM

May 25, 2022

Abstract: We present Wildcat, a novel online 3D lidar-inertial SLAM system with exceptional versatility and robustness. At its core, Wildcat combines a robust real-time lidar-inertial odometry module, utilising a continuous-time trajectory representation, with an efficient pose-graph optimisation module that seamlessly supports both the single- and multi-agent settings. The robustness of Wildcat was recently demonstrated in the DARPA Subterranean Challenge where it outperformed other SLAM systems across various types of sensing-degraded and perceptually challenging environments. In this paper, we extensively evaluate Wildcat in a diverse set of new and publicly available real-world datasets and showcase its superior robustness and versatility over two existing state-of-the-art lidar-inertial SLAM systems.

Online Incremental Non-Gaussian Inference for SLAM Using Normalizing Flows

Oct 02, 2021

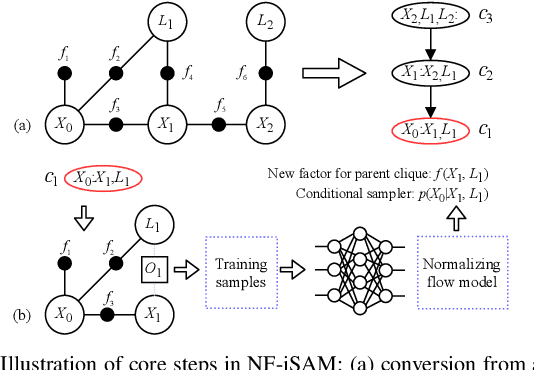

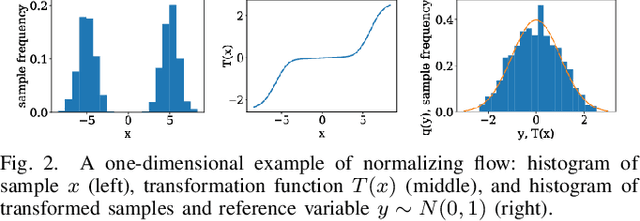

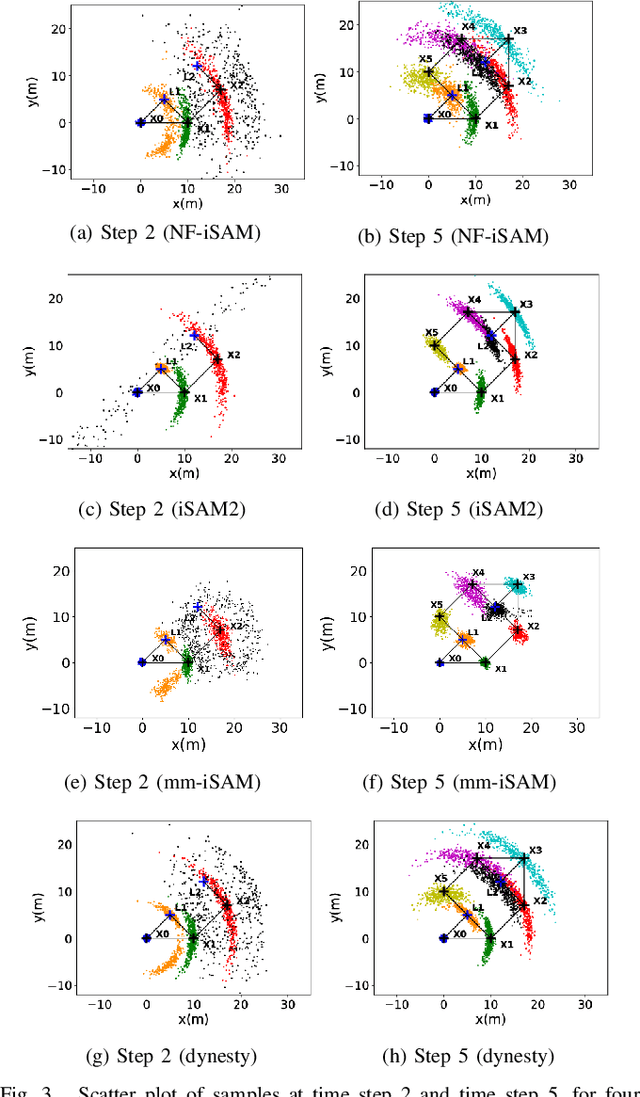

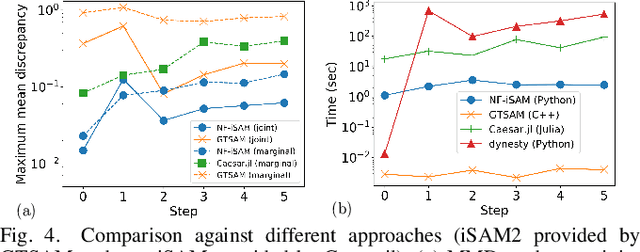

Abstract: This paper presents a novel non-Gaussian inference algorithm, Normalizing Flow iSAM (NF-iSAM), for solving SLAM problems with non-Gaussian factors and/or nonlinear measurement models. NF-iSAM exploits the expressive power of neural networks to model normalizing flows that can accurately approximate the joint posterior of highly nonlinear and non-Gaussian factor graphs. By leveraging the Bayes tree, NF-iSAM is able to exploit the sparsity structure of SLAM, thus enabling efficient incremental updates similar to iSAM2, although in the more challenging non-Gaussian setting. We demonstrate the performance of NF-iSAM and compare it against state-of-the-art algorithms such as iSAM2 (Gaussian) and mm-iSAM (non-Gaussian) in synthetic and real range-only SLAM datasets with data association ambiguity.

NF-iSAM: Incremental Smoothing and Mapping via Normalizing Flows

May 11, 2021

Abstract: This paper presents a novel non-Gaussian inference algorithm, Normalizing Flow iSAM (NF-iSAM), for solving SLAM problems with non-Gaussian factors and/or non-linear measurement models. NF-iSAM exploits the expressive power of neural networks, and trains normalizing flows to draw samples from the joint posterior of non-Gaussian factor graphs. By leveraging the Bayes tree, NF-iSAM is able to exploit the sparsity structure of SLAM, thus enabling efficient incremental updates similar to iSAM2, albeit in the more challenging non-Gaussian setting. We demonstrate the performance of NF-iSAM and compare it against state-of-the-art algorithms such as iSAM2 (Gaussian) and mm-iSAM (non-Gaussian) in synthetic and real range-only SLAM datasets.
