Kyle Harlow

Department of Computer Science, University of Colorado Boulder, USA

Cost-Effective Radar Sensors for Field-Based Water Level Monitoring with Sub-Centimeter Accuracy

Jan 06, 2026

Abstract: Water level monitoring is critical for flood management, water resource allocation, and ecological assessment, yet traditional methods remain costly and limited in coverage. This work explores radar-based sensing as a low-cost alternative for water level estimation, leveraging its non-contact nature and robustness to environmental conditions. Commercial radar sensors are evaluated in real-world field tests, with statistical filtering techniques applied to improve accuracy. Results show that a single radar sensor can achieve centimeter-scale precision with minimal calibration, making it a practical solution for autonomous water monitoring using drones and robotic platforms.
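The abstract does not say which statistical filters were used, so the following is only an illustrative sketch of one common choice: rejecting outliers with the median absolute deviation (MAD) before taking a robust average. The function names, the threshold `k`, the `min_scale_m` floor, and the sample readings are all assumptions made for this example, not details from the paper.

```python
from statistics import median


def mad_filter(ranges_m, k=3.0, min_scale_m=0.001):
    """Keep readings within k scaled MADs of the median (hypothetical helper).

    ranges_m    : radar range readings to the water surface, in meters
    k           : rejection threshold in scaled-MAD units (assumed value)
    min_scale_m : floor on the scale so a zero MAD does not reject everything
    """
    readings = list(ranges_m)
    med = median(readings)
    mad = median([abs(r - med) for r in readings])
    # 1.4826 rescales the MAD to match the standard deviation for Gaussian noise.
    scale = max(1.4826 * mad, min_scale_m)
    return [r for r in readings if abs(r - med) <= k * scale]


def water_level_m(ranges_m, sensor_height_m, k=3.0):
    """Estimate water level as sensor height minus the filtered median range."""
    kept = mad_filter(ranges_m, k=k)
    return sensor_height_m - median(kept)


if __name__ == "__main__":
    # Made-up readings: six consistent returns and two spurious ones.
    readings = [2.003, 2.001, 2.002, 2.950, 2.000, 2.002, 1.200, 2.001]
    print(len(mad_filter(readings)), "readings kept")
    print(f"water level: {water_level_m(readings, sensor_height_m=3.0):.4f} m")
```

A median-based filter is used here because isolated spurious returns (for example from debris or multipath) barely shift the median, whereas they can bias a plain mean by several centimeters.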

RMap: Millimeter-Wave Radar Mapping Through Volumetric Upsampling

Oct 19, 2023

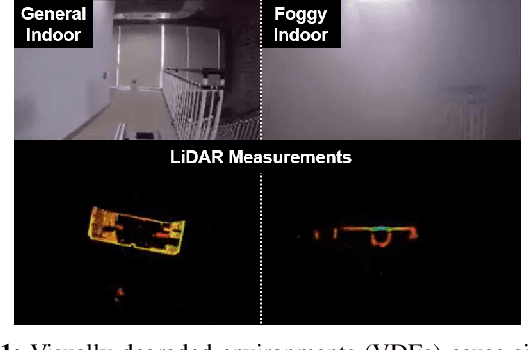

Abstract: Millimeter wave radar is being adopted as a viable alternative to lidar and cameras in adverse, visually degraded conditions, such as the presence of fog and dust. However, this sensor modality suffers from severe sparsity and noise under nominal conditions, which makes it difficult to use in precise applications such as mapping. This work presents a novel solution to generate accurate 3D maps from sparse radar point clouds. RMap uses a custom generative transformer architecture, UpPoinTr, which upsamples, denoises, and fills the incomplete radar maps to resemble lidar maps. We test this method on the ColoRadar dataset to demonstrate its efficacy.

A New Wave in Robotics: Survey on Recent mmWave Radar Applications in Robotics

May 02, 2023

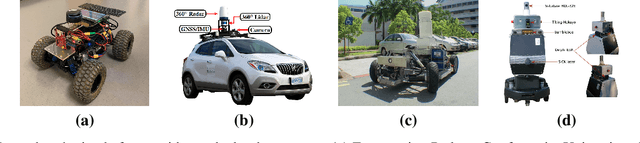

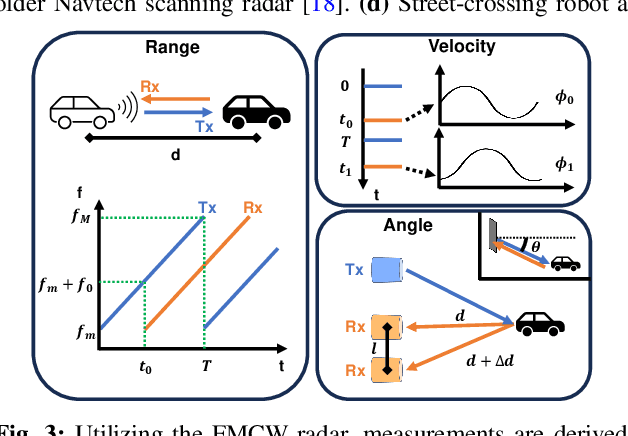

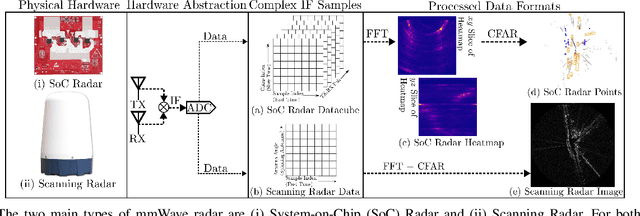

Abstract: We survey the current state of millimeter-wave (mmWave) radar applications in robotics with a focus on unique capabilities, and discuss future opportunities based on the state of the art. Frequency Modulated Continuous Wave (FMCW) mmWave radars operating in the 76-81 GHz range are an appealing alternative to lidars, cameras, and other sensors operating in the near-visual spectrum. Radar has been made more widely available in new packaging classes that are more convenient for robotics, and its longer wavelengths can bypass visual clutter such as fog, dust, and smoke. We begin by covering radar principles as they relate to robotics. We then review the relevant new research across a broad spectrum of robotics applications, beginning with motion estimation, localization, and mapping. We then cover object detection and classification, and close with an analysis of current datasets and calibration techniques that provide entry points into radar research.
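As a small illustration of the radar principles the survey refers to, the sketch below evaluates two standard FMCW relations: range resolution, c / (2B), and the beat frequency produced by a target at range R for a linear chirp of bandwidth B and duration T, 2RB / (cT). The 4 GHz bandwidth and 40 µs chirp duration are assumed example values, not figures taken from the survey.

```python
C_M_PER_S = 299_792_458.0  # speed of light in vacuum


def range_resolution_m(bandwidth_hz):
    """Smallest separable range difference for a chirp of the given bandwidth."""
    return C_M_PER_S / (2.0 * bandwidth_hz)


def beat_frequency_hz(range_m, bandwidth_hz, chirp_duration_s):
    """Beat (IF) frequency for a static target at range_m under a linear chirp."""
    chirp_slope_hz_per_s = bandwidth_hz / chirp_duration_s
    round_trip_delay_s = 2.0 * range_m / C_M_PER_S
    return chirp_slope_hz_per_s * round_trip_delay_s


if __name__ == "__main__":
    bandwidth_hz = 4e9         # assumed sweep within the 76-81 GHz band
    chirp_duration_s = 40e-6   # assumed chirp duration
    print(f"range resolution: {range_resolution_m(bandwidth_hz) * 100:.2f} cm")
    print(f"beat frequency at 10 m: "
          f"{beat_frequency_hz(10.0, bandwidth_hz, chirp_duration_s) / 1e6:.2f} MHz")
```

Because resolution depends only on sweep bandwidth, the wide allocation in this band is what allows centimeter-scale range bins from a compact sensor.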

Flexible Supervised Autonomy for Exploration in Subterranean Environments

Jan 02, 2023

Abstract: While the capabilities of autonomous systems have been steadily improving in recent years, these systems still struggle to rapidly explore previously unknown environments without the aid of GPS-assisted navigation. The DARPA Subterranean (SubT) Challenge aimed to fast-track the development of autonomous exploration systems by evaluating their performance in real-world underground search-and-rescue scenarios. Subterranean environments present a plethora of challenges for robotic systems, such as limited communications, complex topology, visually degraded sensing, and harsh terrain. The presented solution enables long-term autonomy with minimal human supervision by combining a powerful and independent single-agent autonomy stack with higher-level mission management operating over a flexible mesh network. The autonomy suite deployed on quadruped and wheeled robots was fully independent, freeing the human supervisor to loosely supervise the mission and make high-impact strategic decisions. We also discuss lessons learned from fielding our system at the SubT Final Event, relating to vehicle versatility, system adaptability, and reconfigurable communications.

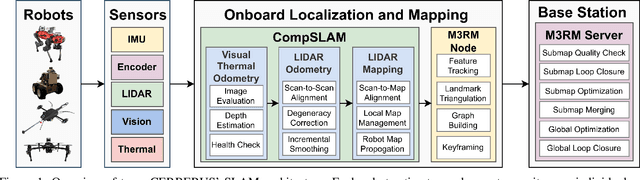

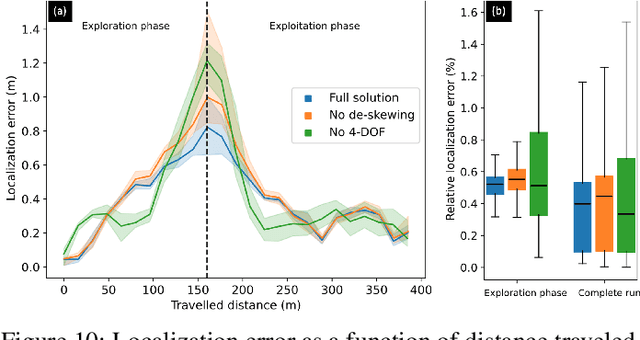

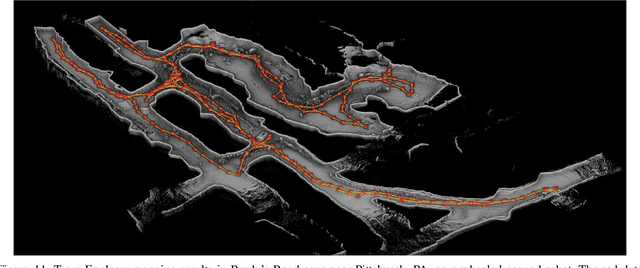

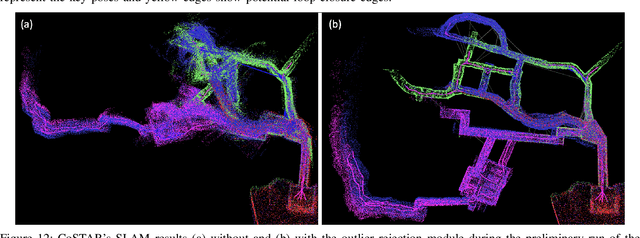

Present and Future of SLAM in Extreme Underground Environments

Aug 02, 2022

Abstract: This paper reports on the state of the art in underground SLAM by discussing different SLAM strategies and results across six teams that participated in the three-year-long SubT competition. In particular, the paper has four main goals. First, we review the algorithms, architectures, and systems adopted by the teams; particular emphasis is put on lidar-centric SLAM solutions (the go-to approach for virtually all teams in the competition), heterogeneous multi-robot operation (including both aerial and ground robots), and real-world underground operation (from the presence of obscurants to the need to handle tight computational constraints). We do not shy away from discussing the dirty details behind the different SubT SLAM systems, which are often omitted from technical papers. Second, we discuss the maturity of the field by highlighting what is possible with the current SLAM systems and what we believe is within reach with some good systems engineering. Third, we outline what we believe are fundamental open problems that are likely to require further research to break through. Finally, we provide a list of open-source SLAM implementations and datasets that have been produced during the SubT challenge and related efforts, and that constitute a useful resource for researchers and practitioners.

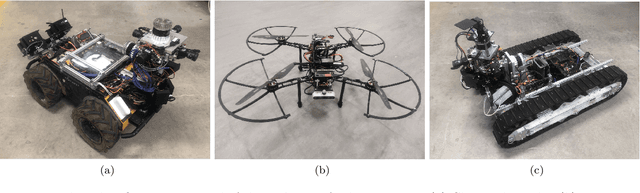

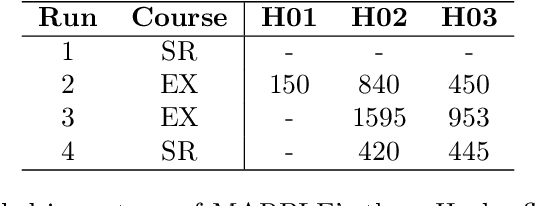

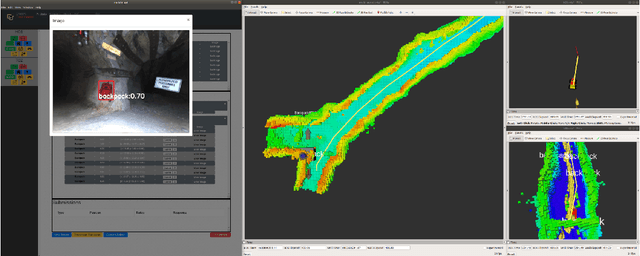

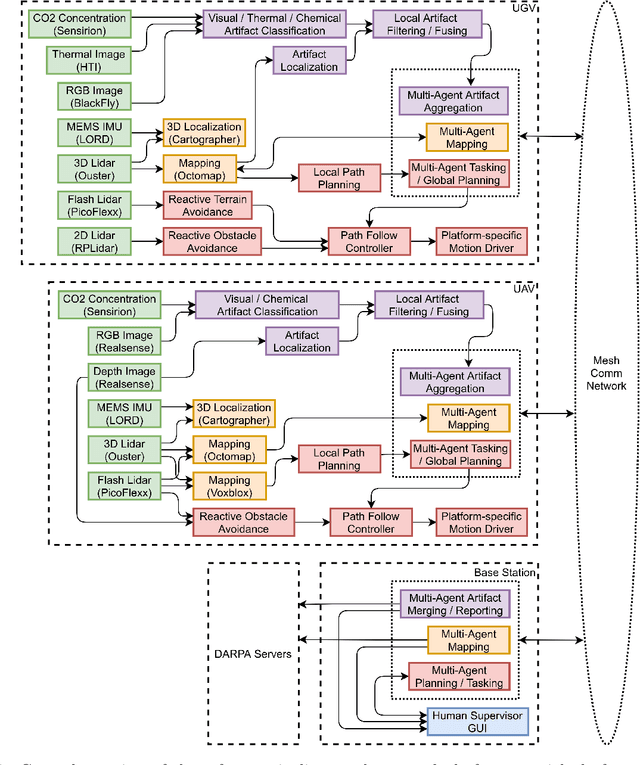

Multi-Agent Autonomy: Advancements and Challenges in Subterranean Exploration

Oct 08, 2021

Abstract: Artificial intelligence has undergone immense growth and maturation in recent years, though autonomous systems have traditionally struggled when fielded in diverse and previously unknown environments. DARPA is seeking to change that with the Subterranean Challenge, by providing roboticists the opportunity to support civilian and military first responders in complex and high-risk underground scenarios. The subterranean domain presents a handful of challenges, such as limited communication, diverse topology and terrain, and degraded sensing. Team MARBLE proposes a solution for autonomous exploration of unknown subterranean environments in which coordinated agents search for artifacts of interest. The team presents two navigation algorithms in the form of a metric-topological graph-based planner and a continuous frontier-based planner. To facilitate multi-agent coordination, agents share and merge new map information and candidate goal-points. Agents deploy communication beacons at different points in the environment, extending the range at which maps and other information can be shared. Onboard autonomy reduces the load on human supervisors, allowing agents to detect and localize artifacts and explore autonomously outside established communication networks. Given the scale, complexity, and tempo of this challenge, a range of lessons were learned, most importantly, that frequent and comprehensive field testing in representative environments is key to rapidly refining system performance.

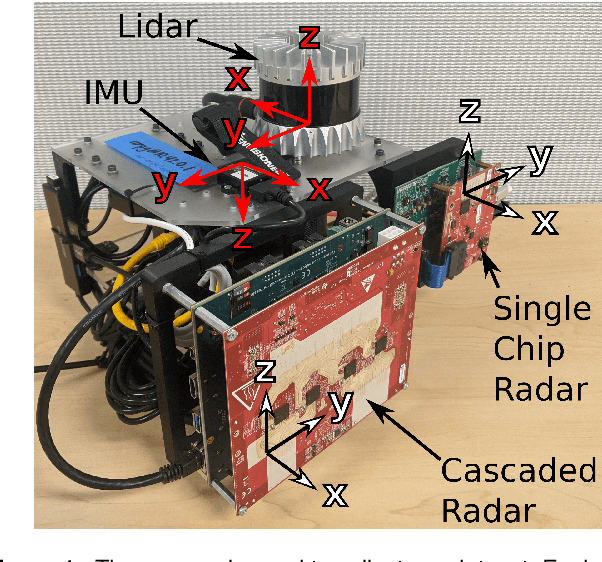

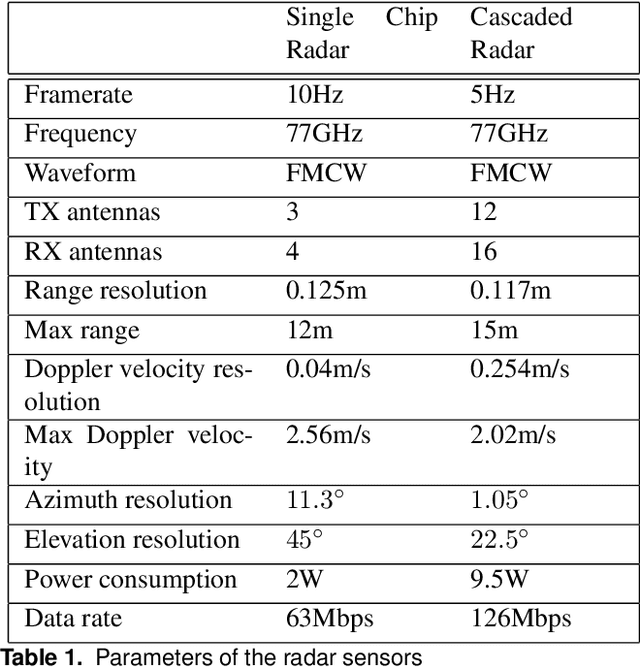

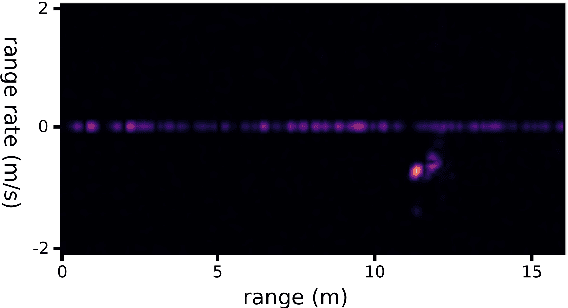

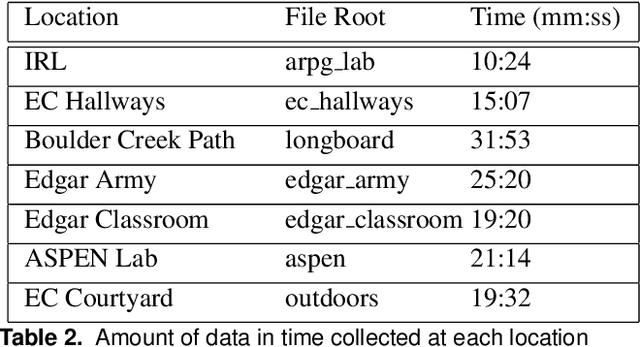

ColoRadar: The Direct 3D Millimeter Wave Radar Dataset

Mar 08, 2021

Abstract: Millimeter wave radar is becoming increasingly popular as a sensing modality for robotic mapping and state estimation. However, there are very few publicly available datasets that include dense, high-resolution millimeter wave radar scans, and there are none focused on 3D odometry and mapping. In this paper we present a solution to that problem. The ColoRadar dataset includes 3 different forms of dense, high-resolution radar data from 2 FMCW radar sensors, as well as 3D lidar, IMU, and highly accurate ground truth for the sensor rig's pose over approximately 2 hours of data collection in highly diverse 3D environments.

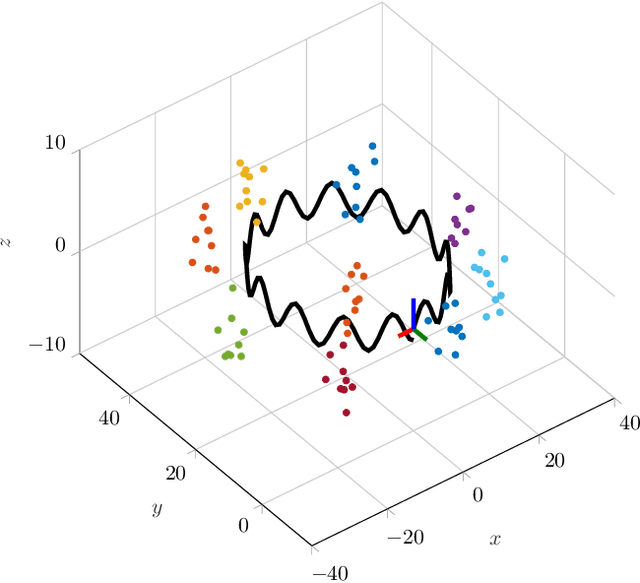

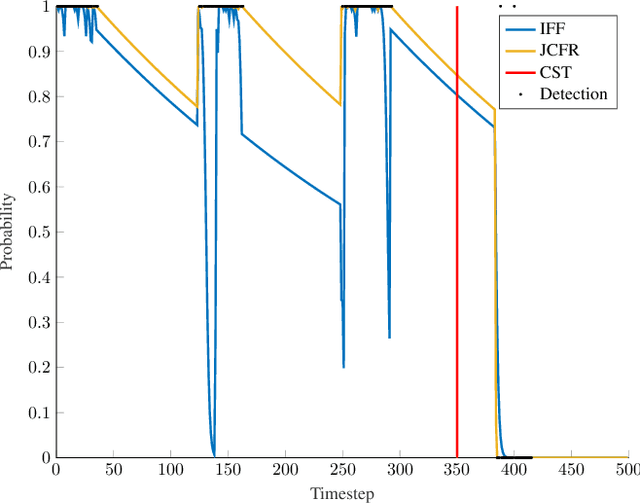

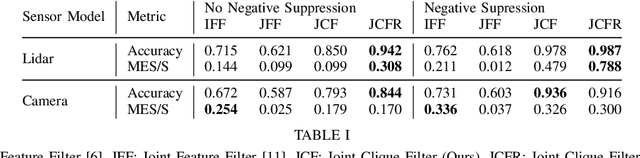

Better Together: Online Probabilistic Clique Change Detection in 3D Landmark-Based Maps

Aug 02, 2020

Abstract: Many modern simultaneous localization and mapping (SLAM) techniques rely on sparse landmark-based maps due to their real-time performance. However, these techniques frequently assume that these landmarks are fixed in position over time, known as the static-world assumption. This is rarely, if ever, the case in real-world environments. Even worse, over long deployments, robots are bound to observe traditionally static landmarks change, for example when an autonomous vehicle encounters a construction zone. This work addresses this challenge, accounting for changes in complex three-dimensional environments with the creation of a probabilistic filter that operates on the features that give rise to landmarks. To accomplish this, landmarks are clustered into cliques and a filter is developed to estimate their persistence jointly among observations of the landmarks in a clique. This filter uses estimated spatial-temporal priors of geometric objects, allowing for dynamic and semi-static objects to be removed from a formerly static map. The proposed algorithm is validated in a 3D simulated environment.
