Christoffer Heckman

Department of Computer Science, University of Colorado Boulder, USA

Cost-Effective Radar Sensors for Field-Based Water Level Monitoring with Sub-Centimeter Accuracy

Jan 06, 2026

Abstract:Water level monitoring is critical for flood management, water resource allocation, and ecological assessment, yet traditional methods remain costly and limited in coverage. This work explores radar-based sensing as a low-cost alternative for water level estimation, leveraging its non-contact nature and robustness to environmental conditions. Commercial radar sensors are evaluated in real-world field tests, with statistical filtering techniques applied to improve accuracy. Results show that a single radar sensor can achieve centimeter-scale precision with minimal calibration, making it a practical solution for autonomous water monitoring using drones and robotic platforms.
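The abstract does not say which statistical filters were used. As an illustration only, the sketch below shows one common choice: rejecting outliers with a median/MAD test and averaging the remaining range readings. The function name, the threshold `k`, and the sample readings are hypothetical and are not taken from the paper.

```python
import statistics


def filter_water_level(readings_m, k=3.0):
    """Return a robust level estimate from noisy radar range readings (metres).

    Assumed technique (not confirmed by the source): discard readings more
    than k scaled MADs from the median, then average the remainder.
    """
    med = statistics.median(readings_m)
    # Median absolute deviation: a spread estimate that outliers barely affect.
    mad = statistics.median([abs(r - med) for r in readings_m])
    if mad == 0:
        # At least half the readings are identical; the median is the estimate.
        return med
    # 1.4826 scales the MAD to match a standard deviation for Gaussian noise.
    scale = 1.4826 * mad
    kept = [r for r in readings_m if abs(r - med) <= k * scale]
    return statistics.fmean(kept)


# Hypothetical readings: five consistent returns and one spurious reflection.
readings = [1.502, 1.498, 1.501, 1.499, 1.500, 2.300]
estimate = filter_water_level(readings)
```

A median-based test is used here because a single spurious return, for example from a floating object, would otherwise pull a plain mean far from the true level.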

CU-Multi: A Dataset for Multi-Robot Data Association

May 23, 2025

Abstract:Multi-robot systems (MRSs) are valuable for tasks such as search and rescue due to their ability to coordinate over shared observations. A central challenge in these systems is aligning independently collected perception data across space and time, i.e., multi-robot data association. While recent advances in collaborative SLAM (C-SLAM), map merging, and inter-robot loop closure detection have significantly advanced the field, evaluation strategies still predominantly rely on splitting a single trajectory from single-robot SLAM datasets into multiple segments to simulate multiple robots. Without careful consideration of how a single trajectory is split, this approach will fail to capture the realistic pose-dependent variation in observations of a scene that is inherent to multi-robot systems. To address this gap, we present CU-Multi, a multi-robot dataset collected over multiple days at two locations on the University of Colorado Boulder campus. Using a single robotic platform, we generate four synchronized runs with aligned start times and deliberate percentages of trajectory overlap. CU-Multi includes RGB-D, GPS with accurate geospatial heading, and semantically annotated LiDAR data. By introducing controlled variations in trajectory overlap and dense lidar annotations, CU-Multi offers a compelling alternative for evaluating methods in multi-robot data association. Instructions on accessing the dataset, support code, and the latest updates are publicly available at https://arpg.github.io/cumulti

Spatial-LLaVA: Enhancing Large Language Models with Spatial Referring Expressions for Visual Understanding

May 18, 2025

Abstract:Multimodal large language models (MLLMs) have demonstrated remarkable abilities in comprehending visual input alongside text input. Typically, these models are trained on extensive data sourced from the internet, which are sufficient for general tasks such as scene understanding and question answering. However, they often underperform on specialized tasks where online data is scarce, such as determining spatial relationships between objects or localizing unique target objects within a group of objects sharing similar features. In response to this challenge, we introduce the SUN-Spot v2.0 dataset, now comprising a total of 90k image-caption pairs and additional annotations on the landmark objects. Each image-caption pair utilizes Set-of-Marks prompting as an additional indicator, mapping each landmark object in the image to the corresponding object mentioned in the caption. Furthermore, we present Spatial-LLaVA, an MLLM trained on conversational data generated by a state-of-the-art language model using the SUN-Spot v2.0 dataset. Our approach ensures a robust alignment between the objects in the images and their corresponding object mentions in the captions, enabling our model to learn spatial referring expressions without bias from the semantic information of the objects. Spatial-LLaVA outperforms previous methods by 3.15% on the zero-shot Visual Spatial Reasoning benchmark dataset. Spatial-LLaVA is specifically designed to precisely understand spatial referring expressions, making it highly applicable for tasks in real-world scenarios such as autonomous navigation and interactive robotics, where precise object recognition is critical.

Foundation Models for Rapid Autonomy Validation

Oct 22, 2024

Abstract:We are motivated by the problem of autonomous vehicle performance validation. A key challenge is that an autonomous vehicle requires testing in every kind of driving scenario it could encounter, including rare events, to provide a strong case for safety and show there is no edge-case pathological behavior. Autonomous vehicle companies rely on potentially millions of miles driven in realistic simulation to expose the driving stack to enough miles to estimate rates and severity of collisions. To address scalability and coverage, we propose the use of a behavior foundation model, specifically a masked autoencoder (MAE), trained to reconstruct driving scenarios. We leverage the foundation model in two complementary ways: we (i) use the learned embedding space to group qualitatively similar scenarios together and (ii) fine-tune the model to label scenario difficulty based on the likelihood of a collision upon re-simulation. We use the difficulty scoring as importance weighting for the groups of scenarios. The result is an approach which can more rapidly estimate the rates and severity of collisions by prioritizing hard scenarios while ensuring exposure to every kind of driving scenario.
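To make the idea of importance weighting over scenario groups concrete, here is a minimal sketch under stated assumptions. It is not the authors' implementation: the allocation rule (group size times difficulty), the one-simulation floor per group, and all names and numbers are hypothetical, chosen only to show how hard groups can be prioritized while every group is still sampled.

```python
def allocate_budget(cluster_sizes, difficulty, budget):
    """Split a simulation budget across scenario clusters.

    Assumed rule: allocation is proportional to cluster size times predicted
    difficulty, with at least one simulation per cluster so every kind of
    scenario is still exercised.
    """
    scores = [n * d for n, d in zip(cluster_sizes, difficulty)]
    total = sum(scores)
    return [max(1, round(budget * s / total)) for s in scores]


def stratified_rate(cluster_sizes, cluster_collision_rates):
    """Combine per-cluster collision rates into an overall rate.

    Each cluster is weighted by its share of all scenarios, so oversampling
    hard clusters during simulation does not bias the final estimate.
    """
    total = sum(cluster_sizes)
    return sum(n / total * r for n, r in zip(cluster_sizes, cluster_collision_rates))


# Hypothetical example: a large easy cluster and a small hard cluster.
sizes = [900, 100]
difficulty = [0.1, 0.9]
budget_split = allocate_budget(sizes, difficulty, budget=100)
overall = stratified_rate(sizes, [0.01, 0.2])
```

In this toy case the small hard cluster receives as many simulations as the large easy one, while the final rate still weights each cluster by how often its scenarios occur.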

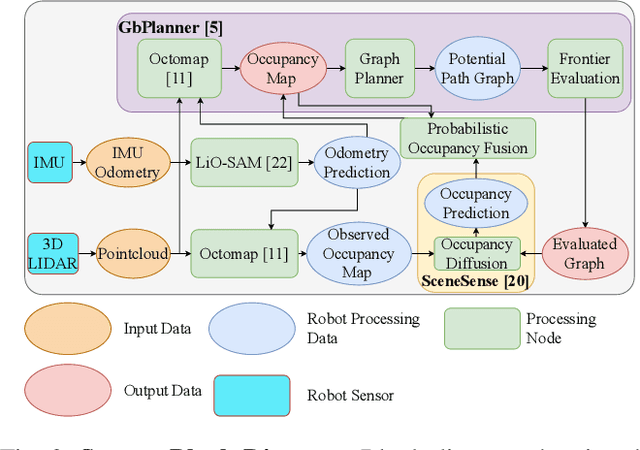

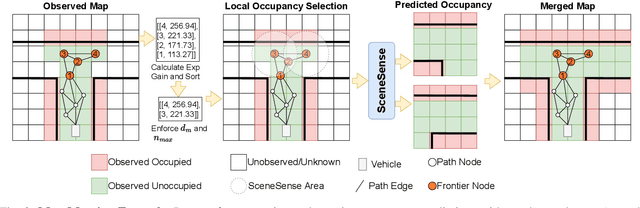

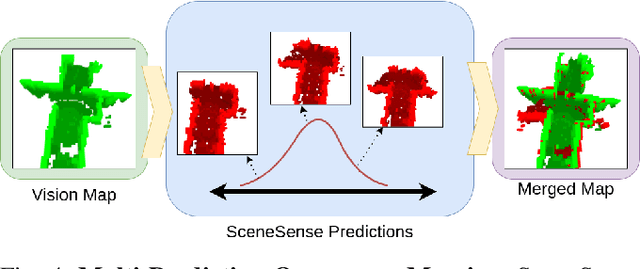

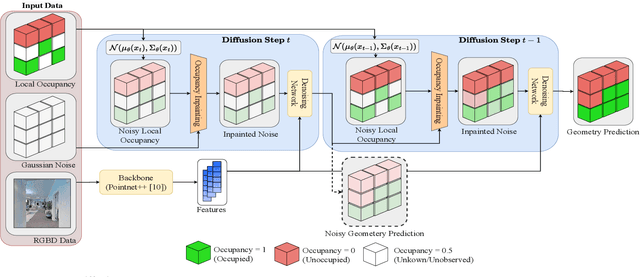

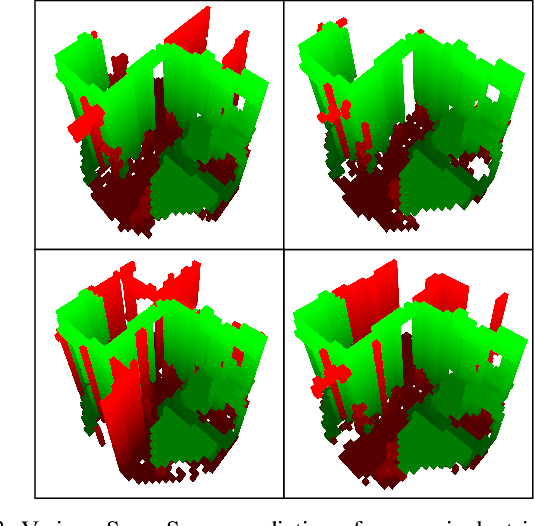

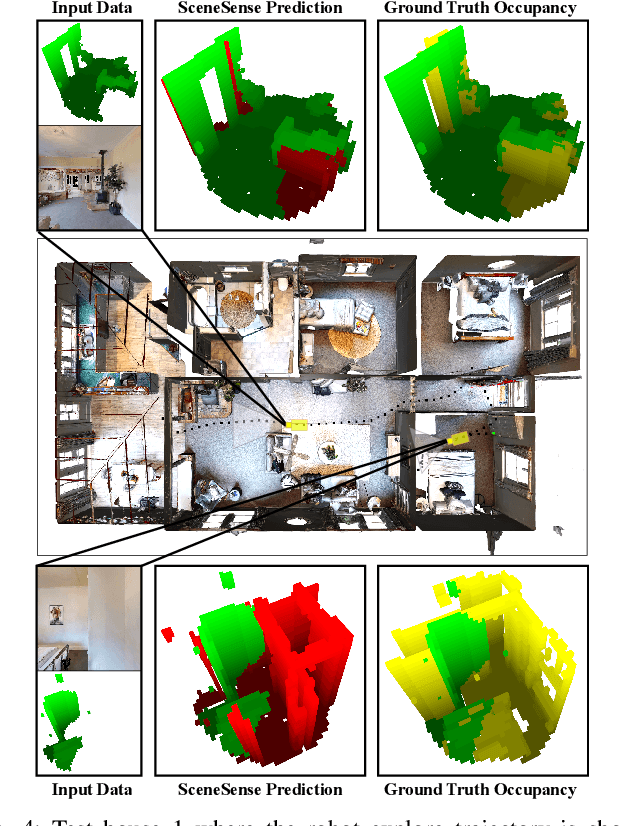

Online Diffusion-Based 3D Occupancy Prediction at the Frontier with Probabilistic Map Reconciliation

Sep 16, 2024

Abstract:Autonomous navigation and exploration in unmapped environments remains a significant challenge in robotics due to the difficulty robots face in making commonsense inference of unobserved geometries. Recent advancements have demonstrated that generative modeling techniques, particularly diffusion models, can enable systems to infer these geometries from partial observation. In this work, we present implementation details and results for real-time, online occupancy prediction using a modified diffusion model. By removing attention-based visual conditioning and visual feature extraction components, we achieve a 73% reduction in runtime with minimal accuracy reduction. These modifications enable occupancy prediction across the entire map, rather than being limited to the area around the robot where camera data can be collected. We introduce a probabilistic update method for merging predicted occupancy data into running occupancy maps, resulting in a 71% improvement in predicting occupancy at map frontiers compared to previous methods. Finally, we release our code and a ROS node for on-robot operation upon publication at github.com/arpg/sceneSense_ws.
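The abstract does not spell out the probabilistic update. As a rough illustration, the sketch below uses the standard log-odds fusion rule from occupancy grid mapping and leaves directly observed cells untouched. This is an assumed stand-in, not the paper's method, and the function names and the `observed` flag are hypothetical.

```python
import math

EPS = 1e-6  # keeps probabilities away from 0 and 1 so the logit stays finite


def logit(p):
    """Convert a probability to log-odds, clamping to avoid log(0)."""
    p = min(max(p, EPS), 1.0 - EPS)
    return math.log(p / (1.0 - p))


def sigmoid(log_odds):
    """Convert log-odds back to a probability."""
    return 1.0 / (1.0 + math.exp(-log_odds))


def fuse_prediction(map_p, predicted_p, observed):
    """Merge a predicted occupancy probability into one map cell.

    Assumptions: cells that sensors have directly observed are never changed,
    and unobserved cells are updated by adding log-odds (uniform 0.5 prior).
    """
    if observed:
        return map_p
    return sigmoid(logit(map_p) + logit(predicted_p))


# An unknown cell (0.5) takes on the prediction; repeated agreement sharpens it.
first = fuse_prediction(0.5, 0.8, observed=False)
second = fuse_prediction(first, 0.8, observed=False)
```

Working in log-odds means repeated consistent predictions accumulate as simple sums, while a single prediction cannot push an unknown cell past the confidence of that prediction.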

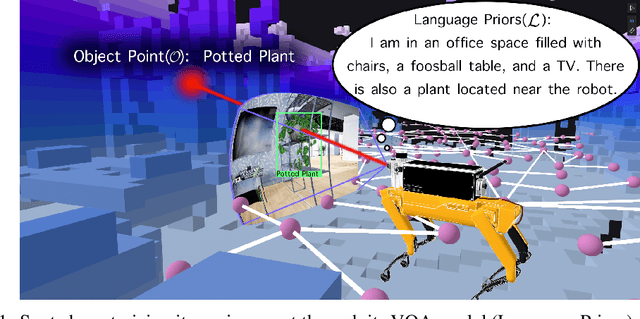

CogExplore: Contextual Exploration with Language-Encoded Environment Representations

Jun 24, 2024

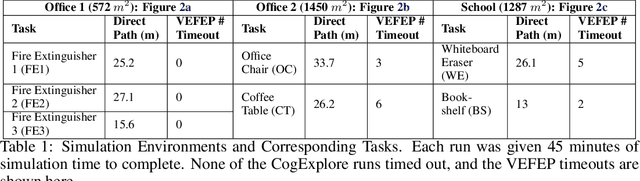

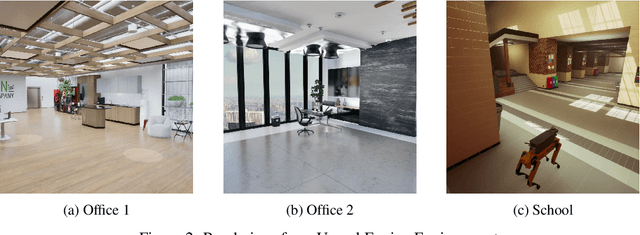

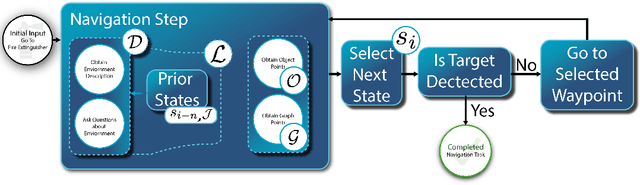

Abstract:Integrating language models into robotic exploration frameworks improves performance in unmapped environments by providing the ability to reason over semantic groundings, contextual cues, and temporal states. The proposed method employs large language models (GPT-3.5 and Claude Haiku) to reason over these cues and express that reasoning in terms of natural language, which can be used to inform future states. We are motivated by the context of search-and-rescue applications where efficient exploration is critical. We find that by leveraging natural language, semantics, and tracking temporal states, the proposed method greatly reduces exploration path distance and further exposes the need for environment-dependent heuristics. Moreover, the method is highly robust to a variety of environments and noisy vision detections, as shown with a 100% success rate in a series of comprehensive experiments across three different environments conducted in a custom simulation pipeline operating in Unreal Engine.

Radar-Based Localization For Autonomous Ground Vehicles In Suburban Neighborhoods

May 01, 2024

Abstract:For autonomous ground vehicles (AGVs) deployed in suburban neighborhoods and other human-centric environments, the problem of localization remains a fundamental challenge. There are well-established methods for localization with GPS, lidar, and cameras, but even in ideal conditions these have limitations. GPS is not always available and is often not accurate enough on its own, visual methods have difficulty coping with appearance changes due to weather and other factors, and lidar methods are prone to defective solutions due to ambiguous scene geometry. Radar, on the other hand, is not highly susceptible to these problems, owing in part to its longer range. Further, radar is also robust to challenging conditions that interfere with vision and lidar, including fog, smoke, rain, and darkness. We present a radar-based localization system that includes a novel method for highly accurate radar odometry for smooth, high-frequency relative pose estimation and a novel method for radar-based place recognition and relocalization. We present experiments demonstrating our methods' accuracy and reliability, which are comparable with other methods' published results for radar localization and which we find outperform a method similar to ours applied to lidar measurements. Further, we show our methods are lightweight enough to run on common low-power embedded hardware with ample headroom for other autonomy functions.

SceneSense: Diffusion Models for 3D Occupancy Synthesis from Partial Observation

Mar 18, 2024

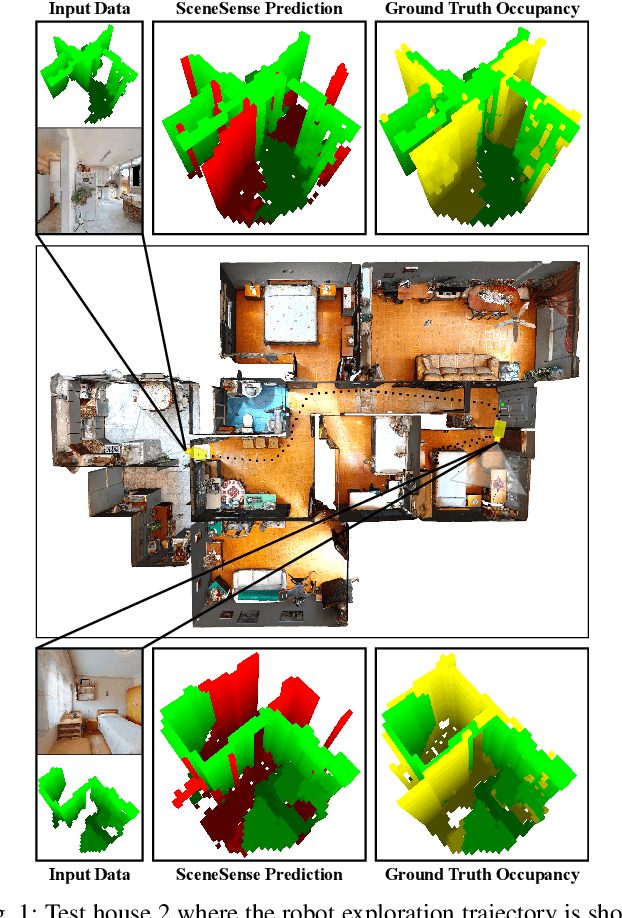

Abstract:When exploring new areas, robotic systems generally plan and execute controls exclusively over geometry that has been directly measured. When entering space that was previously obstructed from view, such as turning corners in hallways or entering new rooms, robots often pause to plan over the newly observed space. To address this we present SceneSense, a real-time 3D diffusion model for synthesizing 3D occupancy information from partial observations that effectively predicts these occluded or out-of-view geometries for use in future planning and control frameworks. SceneSense uses a running occupancy map and a single RGB-D camera to generate predicted geometry around the platform at runtime, even when the geometry is occluded or out of view. Our architecture ensures that SceneSense never overwrites observed free or occupied space. By preserving the integrity of the observed map, SceneSense mitigates the risk of corrupting the observed space with generative predictions. While SceneSense is shown to operate well using a single RGB-D camera, the framework is flexible enough to extend to additional modalities. SceneSense operates as part of any system that generates a running occupancy map "out of the box", removing conditioning from the framework. Alternatively, for maximum performance in new modalities, the perception backbone can be replaced and the model retrained for inference in new applications. Unlike existing models that necessitate multiple views and offline scene synthesis, or are focused on filling gaps in observed data, our findings demonstrate that SceneSense is an effective approach to estimating unobserved local occupancy information at runtime. Local occupancy predictions from SceneSense are shown to better represent the ground truth occupancy distribution during the test exploration trajectories than the running occupancy map.

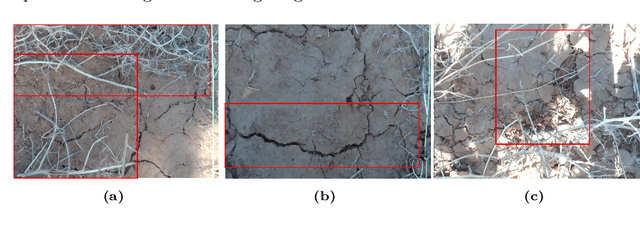

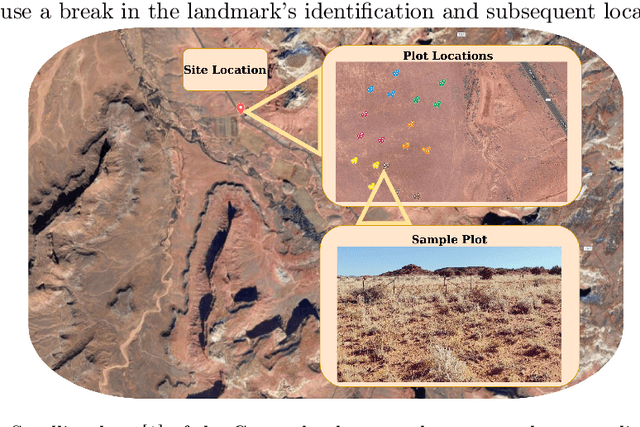

Restorebot: Towards an Autonomous Robotics Platform for Degraded Rangeland Restoration

Dec 12, 2023

Abstract:Degraded rangelands undergo continual shifts in the appearance and distribution of plant life. The nature of these changes, however, is subtle: between seasons seedlings sprout up and some flourish while others perish; meanwhile, over multiple seasons they experience fluctuating precipitation volumes and can be grazed by livestock. The nature of these conditioning variables makes it difficult for ecologists to quantify the efficacy of intervention techniques under study. To support these observation and intervention tasks, we develop RestoreBot: a mobile robotic platform designed for data collection and intervention in degraded rangelands in order to support revegetation. Over the course of multiple deployments, we outline the opportunities and challenges of autonomous data collection for revegetation and the importance of further effort in this area. Specifically, we identify that localization, mapping, data association, and terrain assessment remain open problems for deployment, but that recent advances in computer vision, sensing, and autonomy offer promising prospects for autonomous revegetation.

RMap: Millimeter-Wave Radar Mapping Through Volumetric Upsampling

Oct 19, 2023

Abstract:Millimeter-wave radar is being adopted as a viable alternative to lidar and radar in adverse, visually degraded conditions, such as the presence of fog and dust. However, this sensor modality suffers from severe sparsity and noise under nominal conditions, which makes it difficult to use in precise applications such as mapping. This work presents a novel solution to generate accurate 3D maps from sparse radar point clouds. RMap uses a custom generative transformer architecture, UpPoinTr, which upsamples, denoises, and fills the incomplete radar maps to resemble lidar maps. We test this method on the ColoRadar dataset to demonstrate its efficacy.
