Kristen Such

CogExplore: Contextual Exploration with Language-Encoded Environment Representations

Jun 24, 2024

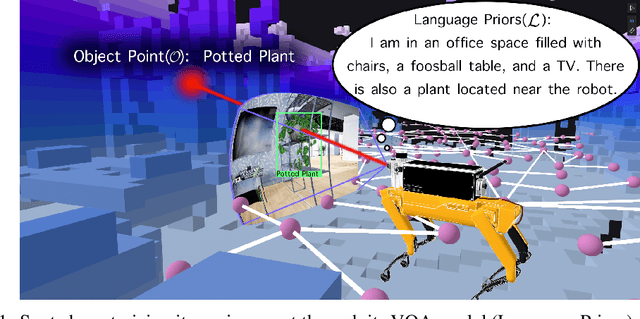

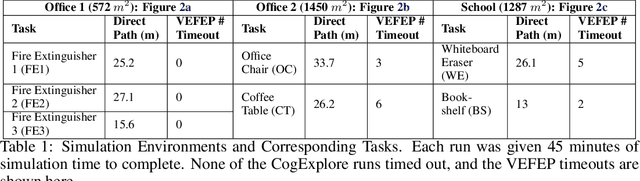

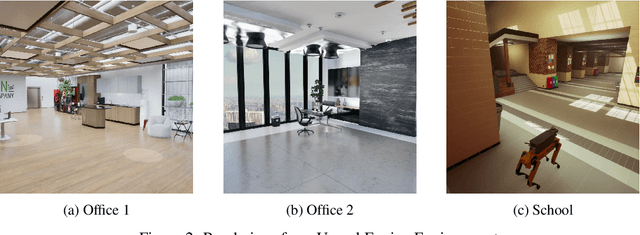

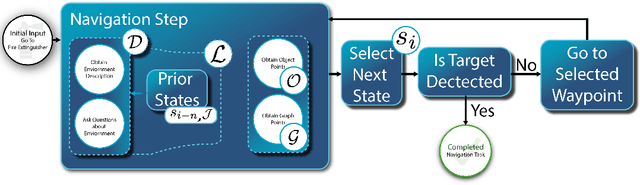

Abstract: Integrating language models into robotic exploration frameworks improves performance in unmapped environments by providing the ability to reason over semantic groundings, contextual cues, and temporal states. The proposed method employs large language models (GPT-3.5 and Claude Haiku) to reason over these cues and to express that reasoning in natural language, which can then inform future states. We are motivated by search-and-rescue applications, where efficient exploration is critical. We find that by leveraging natural language and semantics and by tracking temporal states, the proposed method greatly reduces exploration path distance, and that it further exposes the need for environment-dependent heuristics. Moreover, the method is highly robust to varied environments and to noisy vision detections, as shown by a 100% success rate in a series of comprehensive experiments across three different environments, conducted in a custom simulation pipeline running in Unreal Engine.
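The abstract does not give the prompt format used by the method. As a hypothetical illustration of what a language-encoded environment representation could look like, the sketch below serializes frontier candidates, their nearby object detections, and a running record of already-searched areas (the temporal state) into plain text, then parses a model reply back into a frontier choice. The names `Frontier`, `ExplorationState`, `encode_state`, and `parse_choice`, the prompt wording, and the nearest-frontier fallback are all assumptions; no language-model API is called, so a reply string stands in for GPT-3.5 or Claude Haiku output.

```python
import re
from dataclasses import dataclass, field


@dataclass
class Frontier:
    """Hypothetical frontier candidate: an id, a path distance, and nearby detections."""
    fid: int
    distance_m: float
    nearby_objects: list


@dataclass
class ExplorationState:
    """Hypothetical temporal state: the step count and areas already searched."""
    step: int = 0
    visited: list = field(default_factory=list)


def encode_state(state, frontiers):
    """Render the temporal state and semantic cues as plain text for a language model."""
    searched = ", ".join(state.visited) or "nothing yet"
    lines = [f"Step {state.step}. Already searched: " + searched + "."]
    for frontier in frontiers:
        objs = ", ".join(frontier.nearby_objects) or "no detections"
        lines.append(f"Frontier {frontier.fid}: {frontier.distance_m:.1f} m away; nearby: {objs}.")
    lines.append("Reply with the number of the frontier to explore next.")
    return "\n".join(lines)


def parse_choice(reply, frontiers):
    """Return the first valid frontier id in the reply; otherwise fall back to the nearest one."""
    valid = {frontier.fid for frontier in frontiers}
    for token in re.findall(r"\d+", reply):
        if int(token) in valid:
            return int(token)
    # Assumed fallback so the robot keeps moving when the reply cannot be parsed.
    return min(frontiers, key=lambda frontier: frontier.distance_m).fid


if __name__ == "__main__":
    frontiers = [Frontier(1, 4.0, ["door"]), Frontier(2, 9.5, ["backpack", "person"])]
    state = ExplorationState(step=3, visited=["kitchen"])
    print(encode_state(state, frontiers))
    print(parse_choice("Go to frontier 2, a person was seen.", frontiers))
```

Falling back to the nearest frontier is an illustrative design choice that keeps exploration going when a reply is unusable; the abstract does not say how the method handles that case.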

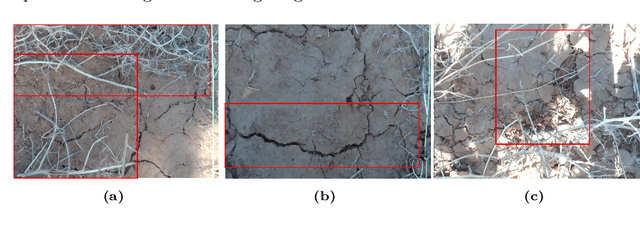

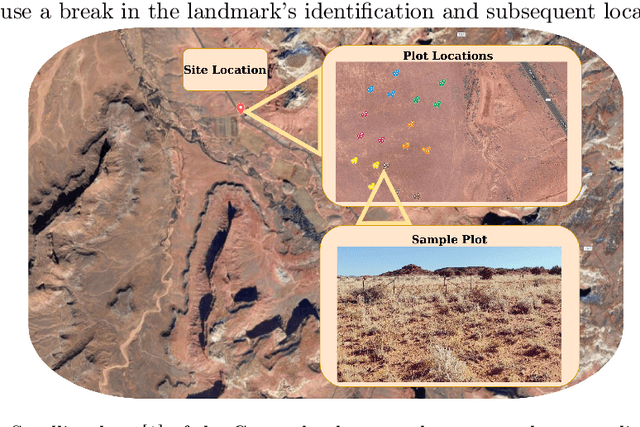

Restorebot: Towards an Autonomous Robotics Platform for Degraded Rangeland Restoration

Dec 12, 2023

Abstract: Degraded rangelands undergo continual shifts in the appearance and distribution of plant life. These changes are subtle, however: between seasons, seedlings sprout, and some flourish while others perish; over multiple seasons, the plants experience fluctuating precipitation and may be grazed by livestock. These conditioning variables make it difficult for ecologists to quantify the efficacy of the intervention techniques under study. To support these observation and intervention tasks, we develop RestoreBot: a mobile robotic platform designed to collect data in degraded rangelands and to carry out interventions that support revegetation. Drawing on multiple deployments, we outline the opportunities and challenges of autonomous data collection for revegetation and the importance of further effort in this area. Specifically, we identify localization, mapping, data association, and terrain assessment as open problems for deployment, but find that recent advances in computer vision, sensing, and autonomy offer promising prospects for autonomous revegetation.

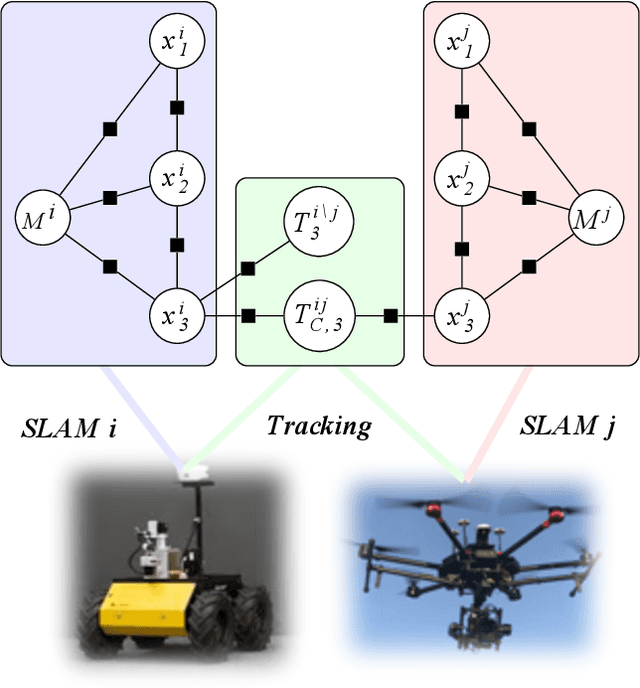

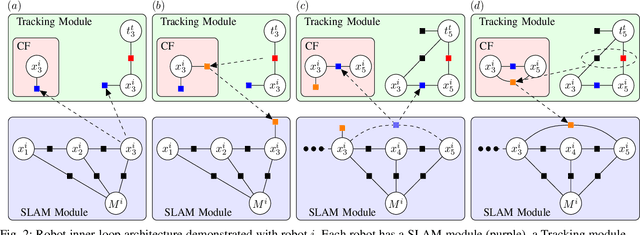

Towards Decentralized Heterogeneous Multi-Robot SLAM and Target Tracking

Jun 07, 2023

Abstract: In many robotics problems, such as exploration, search and rescue, tracking multiple targets, or mapping large environments, multiple robots gain significantly from sharing information collaboratively. One of the key implicit assumptions when solving cooperative multi-robot problems is that all robots use the same (homogeneous) underlying algorithm. In practice, however, we want to allow collaboration between robots that possess different capabilities and therefore must rely on heterogeneous algorithms. We present a system architecture, and the supporting theory, that enables collaboration in a decentralized network of robots in which each robot relies on different estimation algorithms. To develop our approach, we focus on multi-robot simultaneous localization and mapping (SLAM) with multi-target tracking. Our theoretical framework builds on our idea of exploiting the conditional independence structure inherent to many robotics applications to separate each robot's local inference (estimation) tasks and to fuse only the relevant parts of their non-equal but overlapping probability density functions (pdfs). We present a new decentralized graph-based approach to the multi-robot SLAM and tracking problem. We leverage factor graphs to split the problem into parts for efficient data sharing between robots in the network, while enabling robots to use different local SLAM algorithms (sparse landmark, dense, or metric-semantic).
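The key step described above, fusing only the shared part of two robots' overlapping pdfs while carrying each robot's local-only variables through the conditional p(x_l | x_c), can be illustrated for jointly Gaussian estimates. This is a minimal sketch under stated assumptions, not the authors' implementation: both pdfs are Gaussian, the shared variables are the first `n_common` entries of each state vector, local variables are assumed conditionally independent of the remote robot's information given the shared ones, and the shared marginals are fused with covariance intersection (a swapped-in, conservative rule that tolerates unknown cross-correlations; the paper's own treatment of common information may differ). The function names `split`, `covariance_intersection`, and `heterogeneous_update` are hypothetical.

```python
import numpy as np


def split(mean, cov, n_common):
    """Partition a Gaussian over [x_c, x_l]; the first n_common entries are shared."""
    c = slice(0, n_common)
    l = slice(n_common, len(mean))
    return mean[c], mean[l], cov[c, c], cov[c, l], cov[l, l]


def covariance_intersection(ma, Pa, mb, Pb, grid=101):
    """Fuse two estimates of the same variables without knowing their cross-correlation.

    The weight omega is chosen by grid search to minimise the trace of the fused covariance.
    """
    Ia, Ib = np.linalg.inv(Pa), np.linalg.inv(Pb)
    best = None
    for w in np.linspace(0.0, 1.0, grid):
        P = np.linalg.inv(w * Ia + (1.0 - w) * Ib)
        if best is None or np.trace(P) < np.trace(best[1]):
            m = P @ (w * Ia @ ma + (1.0 - w) * Ib @ mb)
            best = (m, P)
    return best


def heterogeneous_update(mean, cov, n_common, mc_remote, Pcc_remote):
    """Fuse only the shared block, then propagate it to local states via p(x_l | x_c)."""
    mc, ml, Pcc, Pcl, Pll = split(mean, cov, n_common)
    mc_f, Pcc_f = covariance_intersection(mc, Pcc, mc_remote, Pcc_remote)
    K = Pcl.T @ np.linalg.inv(Pcc)            # regression of x_l on x_c (kept unchanged)
    ml_f = ml + K @ (mc_f - mc)                # shift local mean with the shared correction
    Plc_f = K @ Pcc_f                          # new local/shared cross-covariance
    Pll_f = Pll - K @ Pcl + K @ Pcc_f @ K.T    # conditional covariance plus propagated part
    new_mean = np.concatenate([mc_f, ml_f])
    new_cov = np.block([[Pcc_f, Plc_f.T], [Plc_f, Pll_f]])
    return new_mean, new_cov


if __name__ == "__main__":
    # Robot i: [shared target position, robot i's own local landmark] (illustrative numbers).
    mean_i = np.array([2.0, 5.0])
    cov_i = np.array([[4.0, 1.0], [1.0, 3.0]])
    # Robot j sends only its marginal over the shared target position.
    new_mean, new_cov = heterogeneous_update(mean_i, cov_i, 1, np.array([3.0]), np.array([[1.0]]))
    print(new_mean)
    print(new_cov)
```

With these illustrative numbers, the remote marginal is the tighter one, so covariance intersection selects it, giving a fused mean of [3.0, 5.25] and a covariance of [[1.0, 0.25], [0.25, 2.8125]]. Only the shared block crosses the network; robot j never needs to know what robot i's local variable represents, which mirrors the heterogeneity the abstract describes.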
