Harel Biggie

CU-Multi: A Dataset for Multi-Robot Data Association

May 23, 2025

Abstract: Multi-robot systems (MRSs) are valuable for tasks such as search and rescue due to their ability to coordinate over shared observations. A central challenge in these systems is aligning independently collected perception data across space and time, i.e., multi-robot data association. While recent advances in collaborative SLAM (C-SLAM), map merging, and inter-robot loop closure detection have significantly progressed the field, evaluation strategies still predominantly rely on splitting a single trajectory from single-robot SLAM datasets into multiple segments to simulate multiple robots. Without careful consideration of how a single trajectory is split, this approach fails to capture the realistic pose-dependent variation in scene observations that is inherent to multi-robot systems. To address this gap, we present CU-Multi, a multi-robot dataset collected over multiple days at two locations on the University of Colorado Boulder campus. Using a single robotic platform, we generate four synchronized runs with aligned start times and deliberate percentages of trajectory overlap. CU-Multi includes RGB-D, GPS with accurate geospatial heading, and semantically annotated LiDAR data. By introducing controlled variations in trajectory overlap and dense LiDAR annotations, CU-Multi offers a compelling alternative for evaluating methods in multi-robot data association. Instructions on accessing the dataset, support code, and the latest updates are publicly available at https://arpg.github.io/cumulti
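To make the idea of a "percentage of trajectory overlap" concrete, the sketch below computes one simple overlap measure between two 2D trajectories. This is an illustrative assumption, not the definition used by CU-Multi: the function name `overlap_fraction`, the distance threshold `radius`, and the toy trajectories are all hypothetical.

```python
import math

def overlap_fraction(traj_a, traj_b, radius=2.0):
    """Fraction of poses in traj_a within `radius` metres of any pose in traj_b.

    Hypothetical metric for illustration only; the dataset may define
    overlap differently.
    """
    if not traj_a:
        return 0.0
    hits = sum(
        1
        for (ax, ay) in traj_a
        if any(math.hypot(ax - bx, ay - by) <= radius for (bx, by) in traj_b)
    )
    return hits / len(traj_a)

# Two hypothetical robots drive along y = 0; the second starts 5 m further on.
robot_1 = [(float(x), 0.0) for x in range(0, 10)]   # x = 0..9
robot_2 = [(float(x), 0.0) for x in range(5, 15)]   # x = 5..14

print(overlap_fraction(robot_1, robot_2, radius=0.5))  # prints 0.5
```

Note that this measure is generally asymmetric: the fraction of `robot_1` covered by `robot_2` need not equal the reverse when the trajectories differ in length or sampling density.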

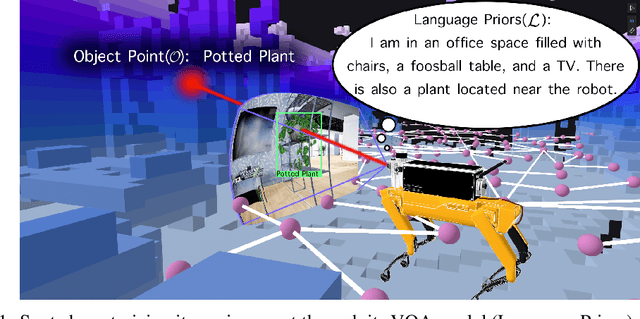

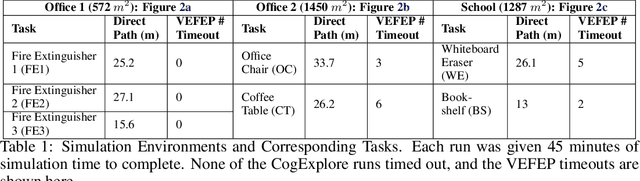

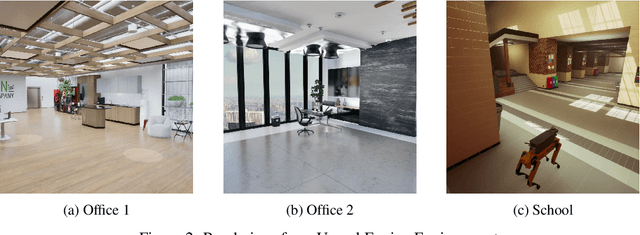

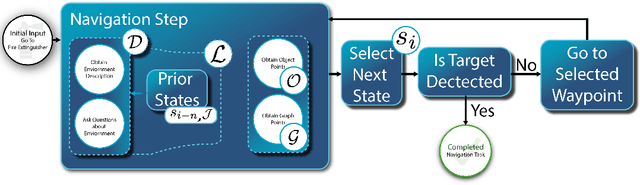

CogExplore: Contextual Exploration with Language-Encoded Environment Representations

Jun 24, 2024

Abstract: Integrating language models into robotic exploration frameworks improves performance in unmapped environments by providing the ability to reason over semantic groundings, contextual cues, and temporal states. The proposed method employs large language models (GPT-3.5 and Claude Haiku) to reason over these cues and express that reasoning in natural language, which can be used to inform future states. We are motivated by the context of search-and-rescue applications where efficient exploration is critical. We find that by leveraging natural language and semantics and by tracking temporal states, the proposed method greatly reduces exploration path distance and further exposes the need for environment-dependent heuristics. Moreover, the method is highly robust to a variety of environments and noisy vision detections, as shown with a 100% success rate in a series of comprehensive experiments across three different environments conducted in a custom simulation pipeline operating in Unreal Engine.

Restorebot: Towards an Autonomous Robotics Platform for Degraded Rangeland Restoration

Dec 12, 2023

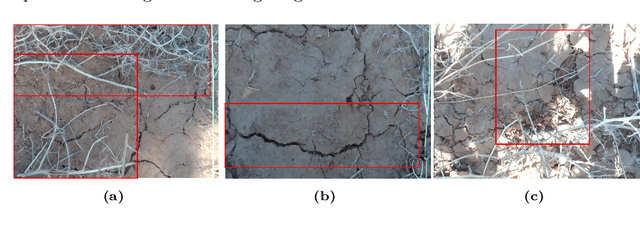

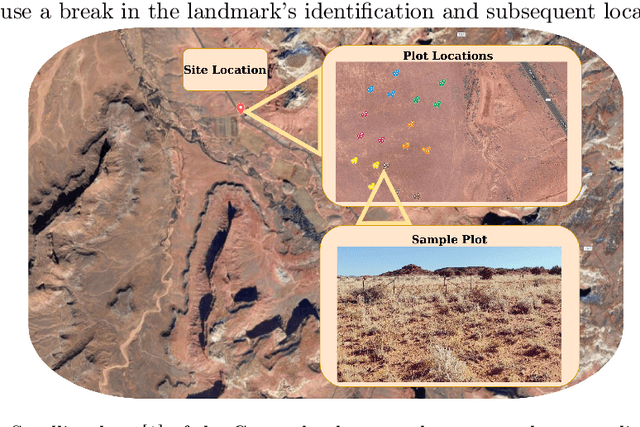

Abstract: Degraded rangelands undergo continual shifts in the appearance and distribution of plant life. The nature of these changes, however, is subtle: between seasons, seedlings sprout, and some flourish while others perish; over multiple seasons, they experience fluctuating precipitation volumes and can be grazed by livestock. The nature of these conditioning variables makes it difficult for ecologists to quantify the efficacy of intervention techniques under study. To support these observation and intervention tasks, we develop RestoreBot: a mobile robotic platform designed to gather data in degraded rangelands and to support intervention for revegetation. Over the course of multiple deployments, we outline the opportunities and challenges of autonomous data collection for revegetation and the importance of further effort in this area. Specifically, we identify that localization, mapping, data association, and terrain assessment remain open problems for deployment, but that recent advances in computer vision, sensing, and autonomy offer promising prospects for autonomous revegetation.

Tell Me Where to Go: A Composable Framework for Context-Aware Embodied Robot Navigation

Jun 19, 2023

Abstract: Humans have the remarkable ability to navigate through unfamiliar environments by relying solely on prior knowledge and descriptions of the environment. For robots to perform the same type of navigation, they need to be able to associate natural language descriptions with the corresponding physical environment using a limited amount of prior knowledge. Recently, Large Language Models (LLMs) have been able to reason over billions of parameters and utilize them in multi-modal chat-based natural language responses. However, LLMs lack real-world awareness and their outputs are not always predictable. In this work, we develop NavCon, a low-bandwidth framework that solves this lack of real-world generalization by creating an intermediate layer between an LLM and a robot navigation framework in the form of Python code. Our intermediate layer shoehorns the vast prior knowledge inherent in an LLM into a series of input and output API instructions that a mobile robot can understand. We evaluate our method across four different environments and command classes on a mobile robot and highlight NavCon's ability to interpret contextual commands.

Kalman Filter Auto-tuning through Enforcing Chi-Squared Normalized Error Distributions with Bayesian Optimization

Jun 12, 2023

Abstract: The nonlinear and stochastic relationship between noise covariance parameter values and state estimator performance makes optimal filter tuning a very challenging problem. Popular optimization-based tuning approaches can easily get trapped in local minima, leading to poor noise parameter identification and suboptimal state estimation. Recently, black box techniques based on Bayesian optimization with Gaussian processes (GPBO) have been shown to overcome many of these issues, using normalized estimation error squared (NEES) and normalized innovation squared (NIS) statistics to derive cost functions for Kalman filter auto-tuning. While reliable noise parameter estimates are obtained in many cases, GPBO solutions obtained with these conventional cost functions do not always converge to optimal filter noise parameters and lack robustness to parameter ambiguities in time-discretized system models. This paper addresses these issues by making two main contributions. First, we show that NIS and NEES errors are only chi-squared distributed for tuned estimators. As a result, chi-squared tests are not sufficient to ensure that an estimator has been correctly tuned. We use this to extend the familiar consistency tests for NIS and NEES to penalize distributions that deviate from a chi-squared distribution. Second, this cost measure is applied within a Student-t process Bayesian optimization (TPBO) framework to achieve robust estimator performance for time-discretized state space models. The robustness, accuracy, and reliability of our approach are illustrated on classical state estimation problems.
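As background for the NIS statistic mentioned above, the following minimal sketch runs a scalar random-walk Kalman filter and reports the time-averaged NIS for a correctly tuned and a mistuned filter. It illustrates only the conventional consistency statistic, not the paper's cost function or its Bayesian optimization loop; the system model, the function name `mean_nis`, and all noise values are assumptions chosen for illustration.

```python
import random

def mean_nis(q_assumed, r_assumed, q_true=0.1, r_true=1.0, steps=5000, seed=0):
    """Time-averaged NIS of a scalar random-walk Kalman filter.

    q_* and r_* are process and measurement noise variances. The filter
    uses the assumed values; the simulated system uses the true values.
    """
    rng = random.Random(seed)
    x_true = 0.0           # true state
    x_hat, p = 0.0, 1.0    # filter estimate and its variance
    total = 0.0
    for _ in range(steps):
        # Simulate the true system and a noisy measurement.
        x_true += rng.gauss(0.0, q_true ** 0.5)
        z = x_true + rng.gauss(0.0, r_true ** 0.5)
        # Predict step using the filter's assumed process noise.
        p += q_assumed
        # Innovation and its predicted variance.
        innovation = z - x_hat
        s = p + r_assumed
        total += innovation ** 2 / s   # NIS for a scalar measurement
        # Measurement update.
        k = p / s
        x_hat += k * innovation
        p *= 1.0 - k
    return total / steps

tuned = mean_nis(q_assumed=0.1, r_assumed=1.0)
mistuned = mean_nis(q_assumed=0.1, r_assumed=0.01)
print(f"tuned mean NIS:    {tuned:.2f}")
print(f"mistuned mean NIS: {mistuned:.2f}")
```

For a one-dimensional measurement, a consistent filter should yield a mean NIS near 1 (the mean of a chi-squared distribution with one degree of freedom), while the filter that underestimates the measurement noise yields a much larger value. A mean near 1 alone does not show that the full distribution is chi-squared, which is the gap the abstract describes.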

BO-ICP: Initialization of Iterative Closest Point Based on Bayesian Optimization

Apr 25, 2023

Abstract: Typical algorithms for point cloud registration such as Iterative Closest Point (ICP) require a favorable initial transform estimate between two point clouds in order to perform a successful registration. State-of-the-art methods for choosing this starting condition rely on stochastic sampling or global optimization techniques such as branch and bound. In this work, we present a new method based on Bayesian optimization for finding the critical initial ICP transform. We provide three different configurations for our method, which highlight the versatility of the algorithm both to find rapid results and to refine them in situations where more runtime is available, such as offline map building. Experiments are run on popular data sets and we show that our approach outperforms state-of-the-art methods when given similar computation time. Furthermore, it is compatible with other improvements to ICP, as it focuses solely on the selection of an initial transform, a starting point for all ICP-based methods.

Flexible Supervised Autonomy for Exploration in Subterranean Environments

Jan 02, 2023

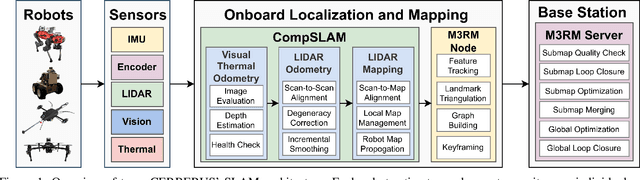

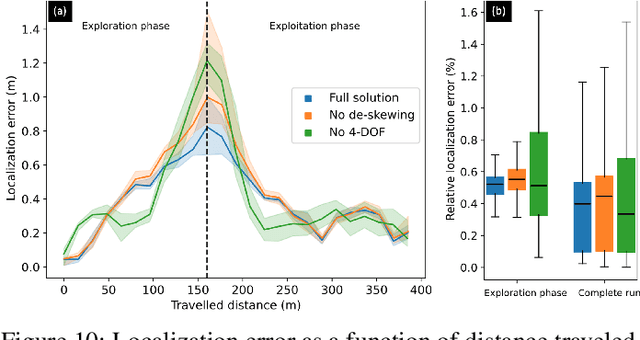

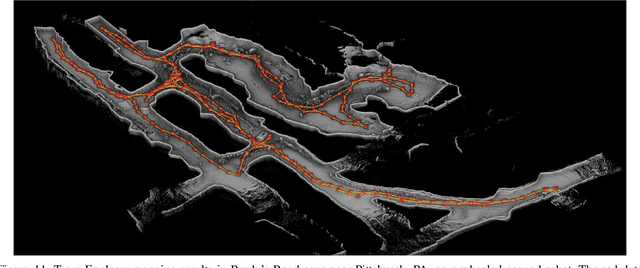

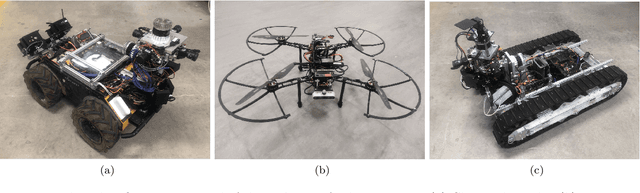

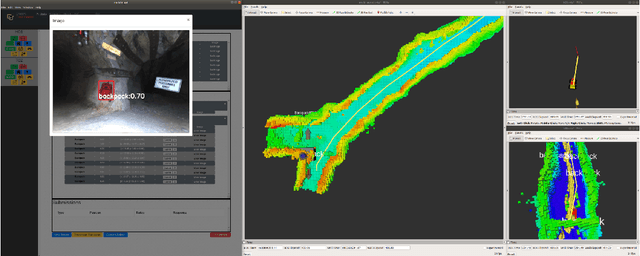

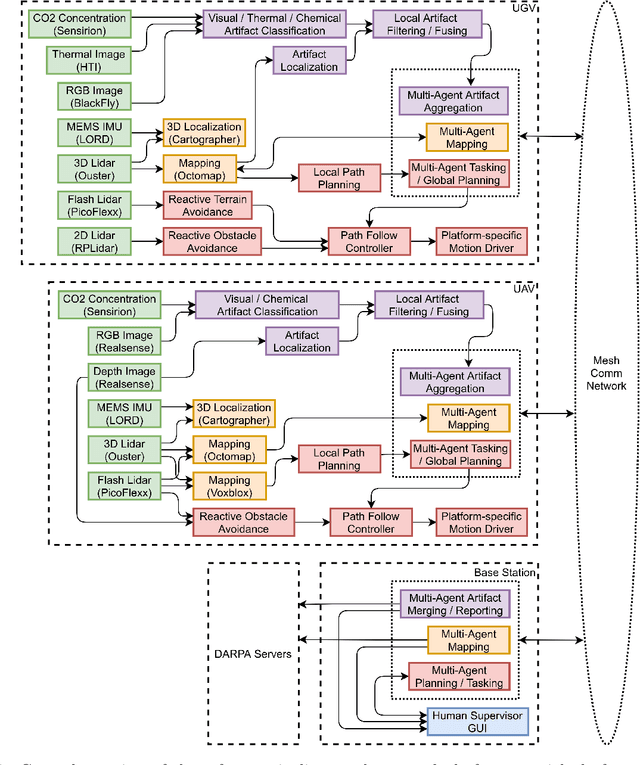

Abstract: While the capabilities of autonomous systems have been steadily improving in recent years, these systems still struggle to rapidly explore previously unknown environments without the aid of GPS-assisted navigation. The DARPA Subterranean (SubT) Challenge aimed to fast-track the development of autonomous exploration systems by evaluating their performance in real-world underground search-and-rescue scenarios. Subterranean environments present a plethora of challenges for robotic systems, such as limited communications, complex topology, visually-degraded sensing, and harsh terrain. The presented solution enables long-term autonomy with minimal human supervision by combining a powerful and independent single-agent autonomy stack with higher-level mission management operating over a flexible mesh network. The autonomy suite deployed on quadruped and wheeled robots was fully independent, freeing the human supervisor to loosely oversee the mission and make high-impact strategic decisions. We also discuss lessons learned from fielding our system at the SubT Final Event, relating to vehicle versatility, system adaptability, and re-configurable communications.

Present and Future of SLAM in Extreme Underground Environments

Aug 02, 2022

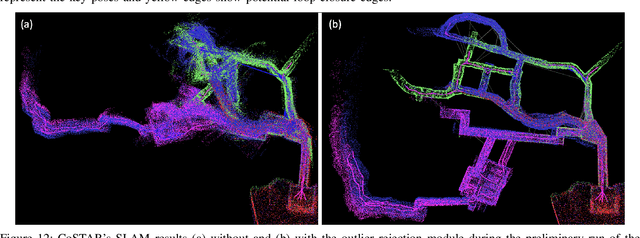

Abstract: This paper reports on the state of the art in underground SLAM by discussing different SLAM strategies and results across six teams that participated in the three-year-long SubT competition. In particular, the paper has four main goals. First, we review the algorithms, architectures, and systems adopted by the teams; particular emphasis is put on lidar-centric SLAM solutions (the go-to approach for virtually all teams in the competition), heterogeneous multi-robot operation (including both aerial and ground robots), and real-world underground operation (from the presence of obscurants to the need to handle tight computational constraints). We do not shy away from discussing the dirty details behind the different SubT SLAM systems, which are often omitted from technical papers. Second, we discuss the maturity of the field by highlighting what is possible with the current SLAM systems and what we believe is within reach with some good systems engineering. Third, we outline what we believe are fundamental open problems that are likely to require further research to break through. Finally, we provide a list of open-source SLAM implementations and datasets that have been produced during the SubT challenge and related efforts, which constitute a useful resource for researchers and practitioners.

Heterogeneous Ground-Air Autonomous Vehicle Networking in Austere Environments: Practical Implementation of a Mesh Network in the DARPA Subterranean Challenge

Mar 24, 2022

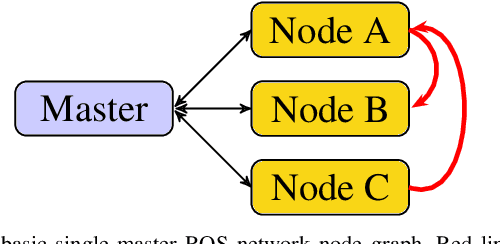

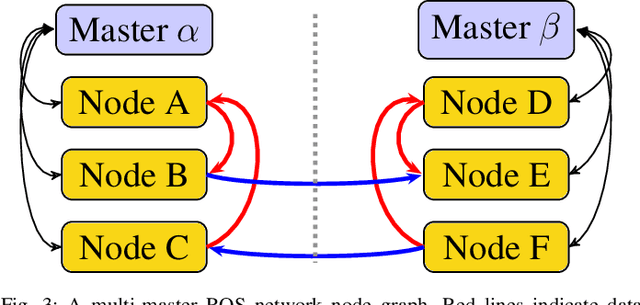

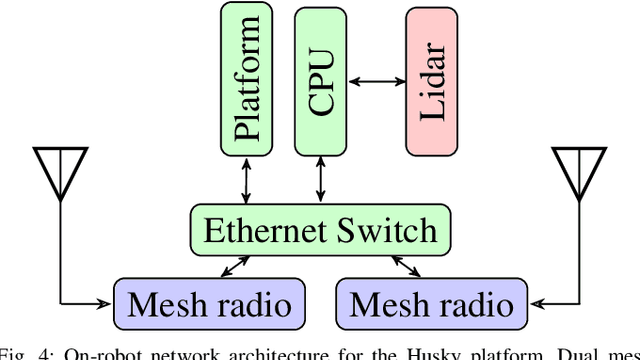

Abstract: Implementing a wireless mesh network in a real-life scenario requires a significant systems engineering effort to turn a network concept into a complete system. This paper presents an evaluation of a fielded system within the DARPA Subterranean (SubT) Challenge Final Event that contributed to a 3rd place finish. Our system included a team of air and ground robots, deployable mesh extender nodes, and a human operator base station. This paper presents a real-world evaluation of a stack optimized for air and ground robotic exploration in an RF-limited environment under practical system design limitations. Our highly customizable solution utilizes a minimum of non-free components with form factor options suited for UAV operations and provides insight into network operations at all levels. We present metrics based on our performance in the Final Event of the DARPA Subterranean Challenge, demonstrating the practical successes and limitations of our approach, as well as a set of lessons learned and suggestions for future improvements.

Multi-Agent Autonomy: Advancements and Challenges in Subterranean Exploration

Oct 08, 2021

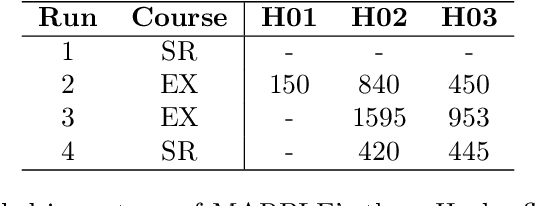

Abstract: Artificial intelligence has undergone immense growth and maturation in recent years, though autonomous systems have traditionally struggled when fielded in diverse and previously unknown environments. DARPA is seeking to change that with the Subterranean Challenge, by providing roboticists the opportunity to support civilian and military first responders in complex and high-risk underground scenarios. The subterranean domain presents a handful of challenges, such as limited communication, diverse topology and terrain, and degraded sensing. Team MARBLE proposes a solution for autonomous exploration of unknown subterranean environments in which coordinated agents search for artifacts of interest. The team presents two navigation algorithms in the form of a metric-topological graph-based planner and a continuous frontier-based planner. To facilitate multi-agent coordination, agents share and merge new map information and candidate goal-points. Agents deploy communication beacons at different points in the environment, extending the range at which maps and other information can be shared. Onboard autonomy reduces the load on human supervisors, allowing agents to detect and localize artifacts and explore autonomously outside established communication networks. Given the scale, complexity, and tempo of this challenge, a range of lessons were learned, most importantly that frequent and comprehensive field testing in representative environments is key to rapidly refining system performance.
