Steve McGuire

CU-Multi: A Dataset for Multi-Robot Data Association

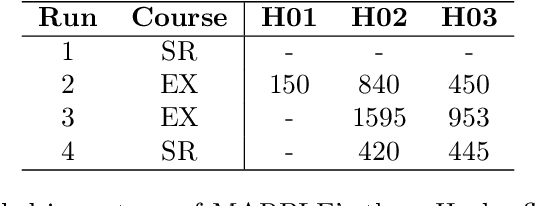

May 23, 2025

Abstract:Multi-robot systems (MRSs) are valuable for tasks such as search and rescue due to their ability to coordinate over shared observations. A central challenge in these systems is aligning independently collected perception data across space and time, i.e., multi-robot data association. While recent advances in collaborative SLAM (C-SLAM), map merging, and inter-robot loop closure detection have significantly progressed the field, evaluation strategies still predominantly rely on splitting a single trajectory from single-robot SLAM datasets into multiple segments to simulate multiple robots. Without careful consideration to how a single trajectory is split, this approach will fail to capture realistic pose-dependent variation in observations of a scene inherent to multi-robot systems. To address this gap, we present CU-Multi, a multi-robot dataset collected over multiple days at two locations on the University of Colorado Boulder campus. Using a single robotic platform, we generate four synchronized runs with aligned start times and deliberate percentages of trajectory overlap. CU-Multi includes RGB-D, GPS with accurate geospatial heading, and semantically annotated LiDAR data. By introducing controlled variations in trajectory overlap and dense LiDAR annotations, CU-Multi offers a compelling alternative for evaluating methods in multi-robot data association. Instructions on accessing the dataset, support code, and the latest updates are publicly available at https://arpg.github.io/cumulti

NeRF-Accelerated Ecological Monitoring in Mixed-Evergreen Redwood Forest

Oct 09, 2024

Abstract:Forest mapping provides critical observational data needed to understand the dynamics of forest environments. Notably, tree diameter at breast height (DBH) is a metric used to estimate forest biomass and carbon dioxide (CO$_2$) sequestration. Manual methods of forest mapping are labor intensive and time consuming, a bottleneck for large-scale mapping efforts. Automated mapping relies on acquiring dense forest reconstructions, typically in the form of point clouds. Terrestrial laser scanning (TLS) and mobile laser scanning (MLS) generate point clouds using expensive LiDAR sensing, and have been used successfully to estimate tree diameter. Neural radiance fields (NeRFs) are an emergent technology enabling photorealistic, vision-based reconstruction by training a neural network on a sparse set of input views. In this paper, we present a comparison of MLS and NeRF forest reconstructions for the purpose of trunk diameter estimation in a mixed-evergreen Redwood forest. In addition, we propose an improved DBH-estimation method using convex-hull modeling. Using this approach, we achieved 1.68 cm RMSE, which consistently outperformed standard cylinder modeling approaches. Our code contributions and forest datasets are freely available at https://github.com/harelab-ucsc/RedwoodNeRF.
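The abstract does not spell out how the convex-hull model is built, so the following is only an illustrative sketch of one plausible reading, not the authors' implementation. It assumes a thin horizontal slice of trunk points has already been projected to 2D at breast height, takes the convex hull of that slice, and reports the diameter of the circle whose circumference equals the hull perimeter. The function names `convex_hull`, `hull_diameter`, and `synthetic_slice` are hypothetical, and the test slice is synthetic rather than data from the paper.

```python
import math


def convex_hull(points):
    """Andrew's monotone chain: hull vertices in counter-clockwise order."""
    pts = sorted(set(points))
    if len(pts) <= 2:
        return pts

    def cross(o, a, b):
        # z-component of (a - o) x (b - o); positive for a left turn
        return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])

    lower = []
    for p in pts:
        while len(lower) >= 2 and cross(lower[-2], lower[-1], p) <= 0:
            lower.pop()
        lower.append(p)

    upper = []
    for p in reversed(pts):
        while len(upper) >= 2 and cross(upper[-2], upper[-1], p) <= 0:
            upper.pop()
        upper.append(p)

    # The last point of each chain is the first point of the other
    return lower[:-1] + upper[:-1]


def hull_diameter(slice_xy):
    """Diameter of the circle whose circumference equals the hull perimeter.

    Assumption: slice_xy holds (x, y) coordinates in metres of trunk points
    from a thin slice at breast height.
    """
    hull = convex_hull(slice_xy)
    n = len(hull)
    perimeter = sum(math.dist(hull[i], hull[(i + 1) % n]) for i in range(n))
    return perimeter / math.pi


def synthetic_slice(radius_m=0.25, n=360):
    """Synthetic, noise-free ring of points standing in for a trunk slice."""
    return [
        (radius_m * math.cos(2 * math.pi * k / n),
         radius_m * math.sin(2 * math.pi * k / n))
        for k in range(n)
    ]
```

On the synthetic ring of radius 0.25 m, `hull_diameter(synthetic_slice())` returns a value very close to 0.5 m. One property of a hull-based estimate is that stray points inside the trunk outline do not affect the result, whereas outliers outside it do, so real scans would likely need outlier filtering first.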

Evaluating geometric accuracy of NeRF reconstructions compared to SLAM method

Jul 15, 2024

Abstract:As Neural Radiance Field (NeRF) implementations become faster, more efficient and accurate, their applicability to real world mapping tasks becomes more accessible. Traditionally, 3D mapping, or scene reconstruction, has relied on expensive LiDAR sensing. Photogrammetry can perform image-based 3D reconstruction but is computationally expensive and requires extremely dense image representation to recover complex geometry and photorealism. NeRFs perform 3D scene reconstruction by training a neural network on sparse image and pose data, achieving superior results to photogrammetry with less input data. This paper presents an evaluation of two NeRF scene reconstructions for the purpose of estimating the diameter of a vertical PVC cylinder. One of these is trained on commodity iPhone data and the other is trained on robot-sourced imagery and poses. The resulting neural geometry is compared to state-of-the-art LiDAR-inertial SLAM in terms of scene noise and metric accuracy.

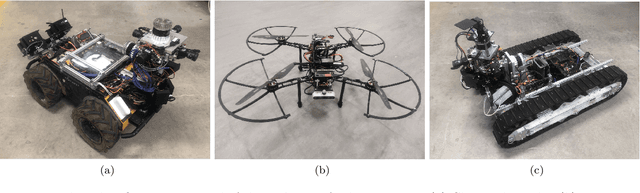

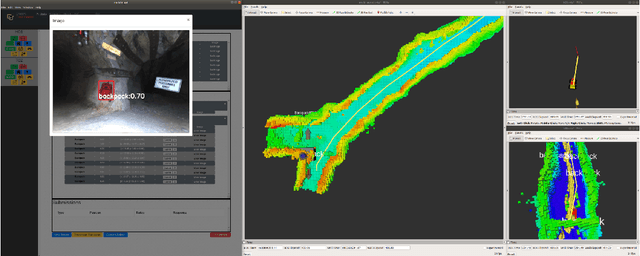

Flexible Supervised Autonomy for Exploration in Subterranean Environments

Jan 02, 2023

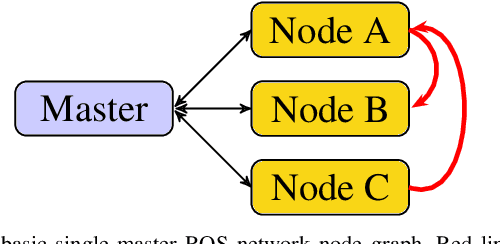

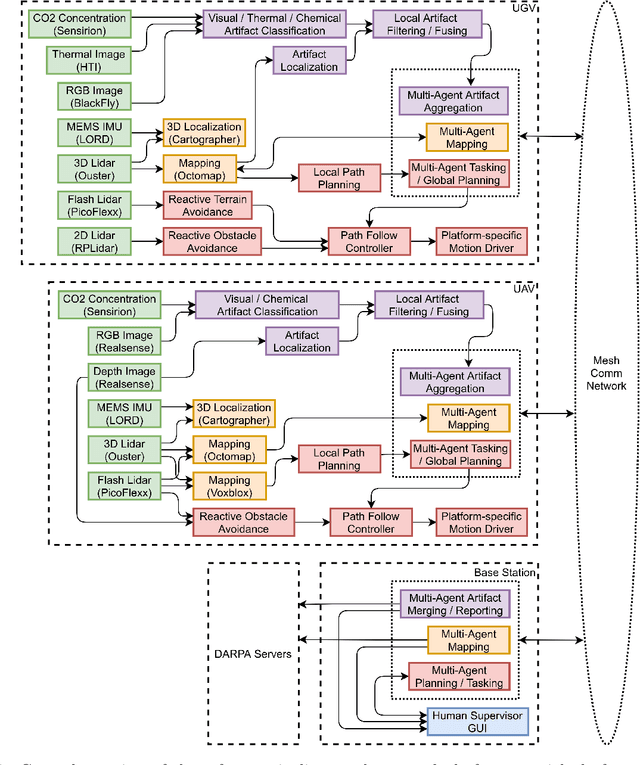

Abstract:While the capabilities of autonomous systems have been steadily improving in recent years, these systems still struggle to rapidly explore previously unknown environments without the aid of GPS-assisted navigation. The DARPA Subterranean (SubT) Challenge aimed to fast-track the development of autonomous exploration systems by evaluating their performance in real-world underground search-and-rescue scenarios. Subterranean environments present a plethora of challenges for robotic systems, such as limited communications, complex topology, visually-degraded sensing, and harsh terrain. The presented solution enables long-term autonomy with minimal human supervision by combining a powerful and independent single-agent autonomy stack with higher-level mission management operating over a flexible mesh network. The autonomy suite deployed on quadruped and wheeled robots was fully independent, freeing the human supervisor to loosely supervise the mission and make high-impact strategic decisions. We also discuss lessons learned from fielding our system at the SubT Final Event, relating to vehicle versatility, system adaptability, and re-configurable communications.

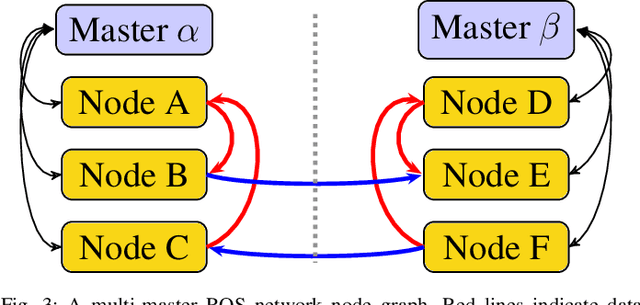

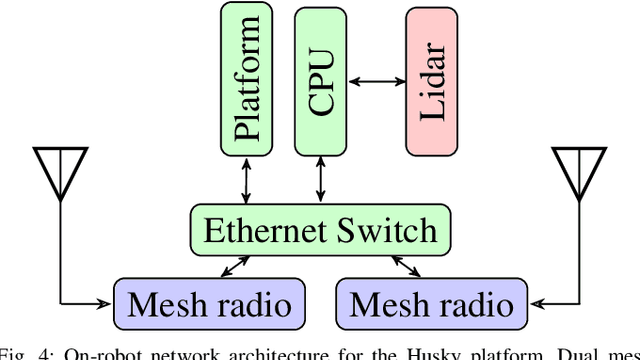

Heterogeneous Ground-Air Autonomous Vehicle Networking in Austere Environments: Practical Implementation of a Mesh Network in the DARPA Subterranean Challenge

Mar 24, 2022

Abstract:Implementing a wireless mesh network in a real-life scenario requires a significant systems engineering effort to turn a network concept into a complete system. This paper presents an evaluation of a fielded system within the DARPA Subterranean (SubT) Challenge Final Event that contributed to a 3rd place finish. Our system included a team of air and ground robots, deployable mesh extender nodes, and a human operator base station. This paper presents a real-world evaluation of a stack optimized for air and ground robotic exploration in an RF-limited environment under practical system design limitations. Our highly customizable solution utilizes a minimum of non-free components with form factor options suited for UAV operations and provides insight into network operations at all levels. We present performance metrics based on our performance in the Final Event of the DARPA Subterranean Challenge, demonstrating the practical successes and limitations of our approach, as well as a set of lessons learned and suggestions for future improvements.

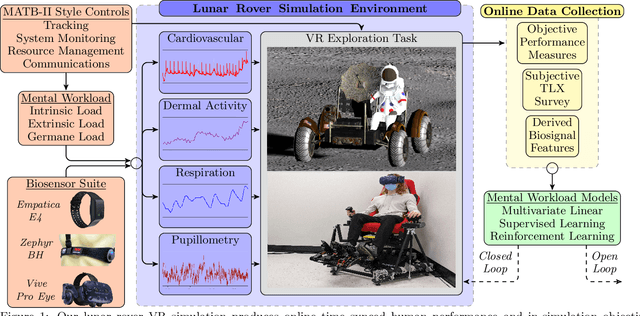

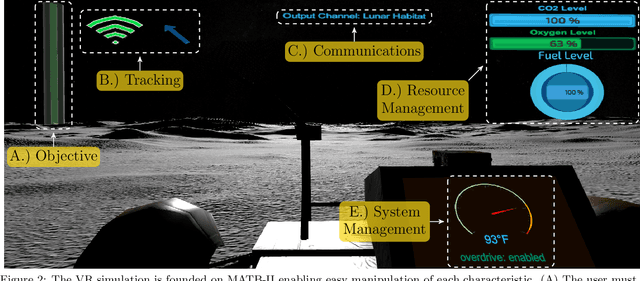

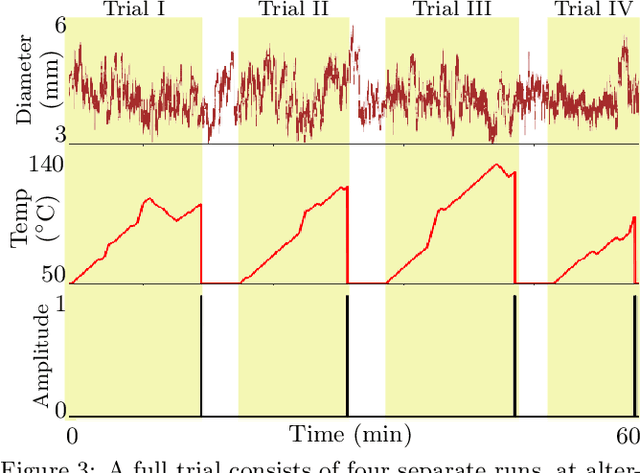

A Virtual Reality Simulation Pipeline for Online Mental Workload Modeling

Nov 24, 2021

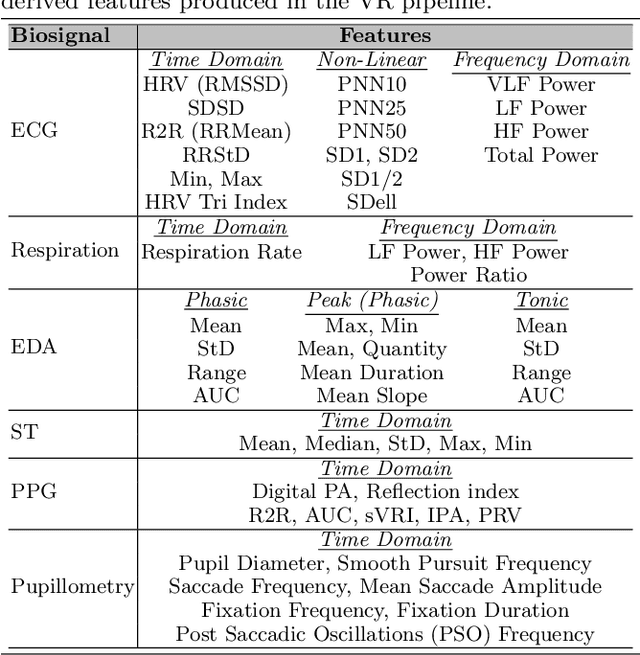

Abstract:Seamless human robot interaction (HRI) and cooperative human-robot (HR) teaming critically rely upon accurate and timely human mental workload (MW) models. Cognitive Load Theory (CLT) suggests representative physical environments produce representative mental processes; physical environment fidelity corresponds with improved modeling accuracy. Virtual Reality (VR) systems provide immersive environments capable of replicating complicated scenarios, particularly those associated with high-risk, high-stress scenarios. Passive biosignal modeling shows promise as a noninvasive method of MW modeling. However, VR systems rarely include multimodal psychophysiological feedback or capitalize on biosignal data for online MW modeling. Here, we develop a novel VR simulation pipeline, inspired by the NASA Multi-Attribute Task Battery II (MATB-II) task architecture, capable of synchronous collection of objective performance, subjective performance, and passive human biosignals in a simulated hazardous exploration environment. Our system design extracts and publishes biofeatures through the Robot Operating System (ROS), facilitating real time psychophysiology-based MW model integration into complete end-to-end systems. A VR simulation pipeline capable of evaluating MWs online could be foundational for advancing HR systems and VR experiences by enabling these systems to adaptively alter their behaviors in response to operator MW.

Multi-Agent Autonomy: Advancements and Challenges in Subterranean Exploration

Oct 08, 2021

Abstract:Artificial intelligence has undergone immense growth and maturation in recent years, though autonomous systems have traditionally struggled when fielded in diverse and previously unknown environments. DARPA is seeking to change that with the Subterranean Challenge, by providing roboticists the opportunity to support civilian and military first responders in complex and high-risk underground scenarios. The subterranean domain presents a handful of challenges, such as limited communication, diverse topology and terrain, and degraded sensing. Team MARBLE proposes a solution for autonomous exploration of unknown subterranean environments in which coordinated agents search for artifacts of interest. The team presents two navigation algorithms in the form of a metric-topological graph-based planner and a continuous frontier-based planner. To facilitate multi-agent coordination, agents share and merge new map information and candidate goal-points. Agents deploy communication beacons at different points in the environment, extending the range at which maps and other information can be shared. Onboard autonomy reduces the load on human supervisors, allowing agents to detect and localize artifacts and explore autonomously outside established communication networks. Given the scale, complexity, and tempo of this challenge, a range of lessons were learned, most importantly, that frequent and comprehensive field testing in representative environments is key to rapidly refining system performance.
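As a rough illustration of the frontier idea mentioned in the abstract, the sketch below finds frontier cells on a small 2D occupancy grid: free cells that border at least one unknown cell. This is the textbook grid-based formulation and is only an assumption made for illustration. The abstract describes a continuous frontier-based planner, which this discrete sketch does not reproduce. The cell encoding (`FREE`, `OCCUPIED`, `UNKNOWN`) and the function name `find_frontiers` are hypothetical.

```python
# Hypothetical cell encoding for a 2D occupancy grid
FREE, OCCUPIED, UNKNOWN = 0, 1, -1


def find_frontiers(grid):
    """Return (row, col) of every free cell with a 4-connected unknown neighbor."""
    rows, cols = len(grid), len(grid[0])
    frontiers = []
    for r in range(rows):
        for c in range(cols):
            if grid[r][c] != FREE:
                continue
            for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                nr, nc = r + dr, c + dc
                in_bounds = 0 <= nr < rows and 0 <= nc < cols
                if in_bounds and grid[nr][nc] == UNKNOWN:
                    frontiers.append((r, c))
                    break  # one unknown neighbor is enough
    return frontiers


# Toy map: explored free space on the left, unexplored cells on the right
example_grid = [
    [FREE, FREE,     UNKNOWN],
    [FREE, OCCUPIED, UNKNOWN],
    [FREE, FREE,     FREE],
]
frontier_cells = find_frontiers(example_grid)
```

In a planner of this general kind, frontier cells would then be clustered and scored (for example by travel cost) to pick candidate goal points, which agents could share with one another.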

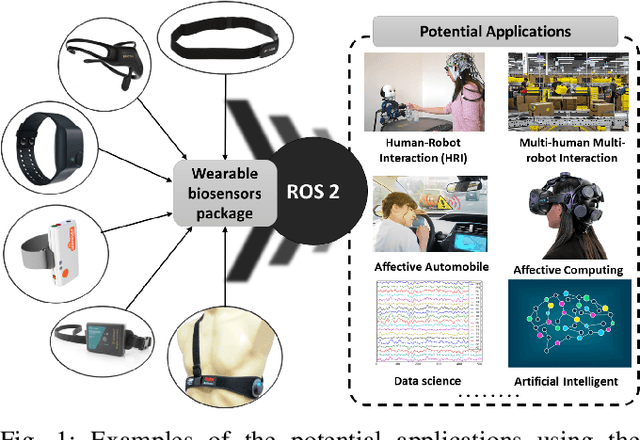

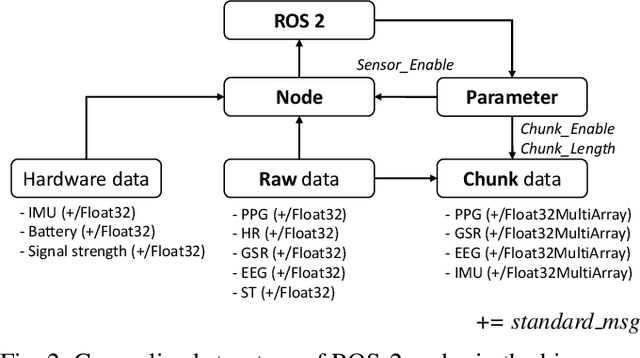

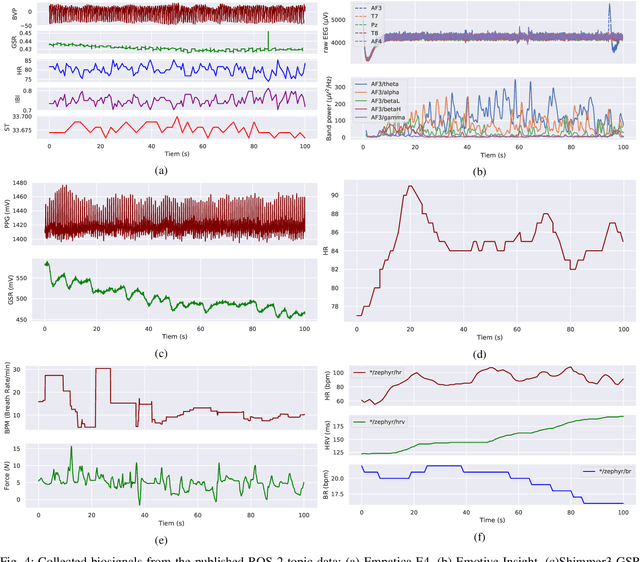

Toward a Wearable Biosensor Ecosystem on ROS 2 for Real-time Human-Robot Interaction Systems

Oct 08, 2021

Abstract:Wearable biosensors can enable continuous human data capture, facilitating development of real-world Human-Robot Interaction (HRI) systems. However, a lack of standardized libraries and implementations adds extraneous complexity to HRI system designs, and precludes collaboration across disciplines and institutions. Here, we introduce a novel wearable biosensor package for the Robot Operating System 2 (ROS 2). ROS 2 officially supports real-time computing and multi-robot systems, and thus provides easy-to-use and reliable streaming data from multiple nodes. The package standardizes biosensor HRI integration, lowers the technical barrier of entry, and expands the biosensor ecosystem into the robotics field. Each biosensor package node follows a generalized node and topic structure concentrated on ease of use. Current package capabilities, listed by biosensor, highlight package standardization. Collected example data demonstrate a full integration of each biosensor into ROS 2. We expect that standardization of this biosensor package for ROS 2 will greatly simplify use and cross-collaboration across many disciplines. The wearable biosensor package is made publicly available on GitHub at https://github.com/SMARTlab-Purdue/ros2-foxy-wearable-biosensors.

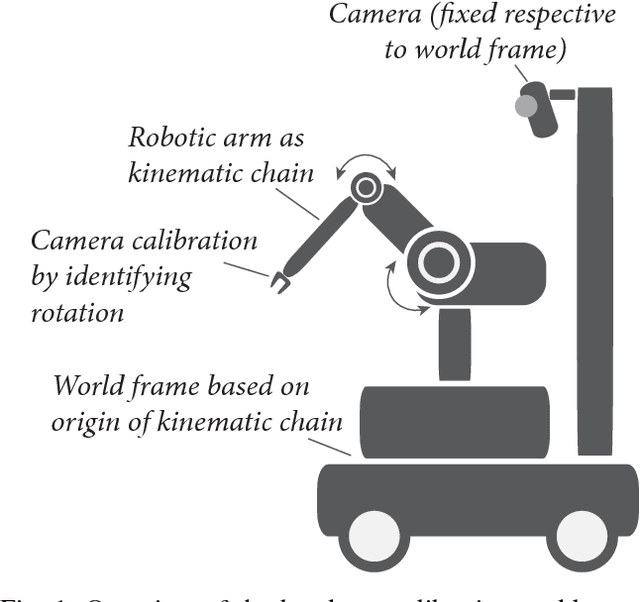

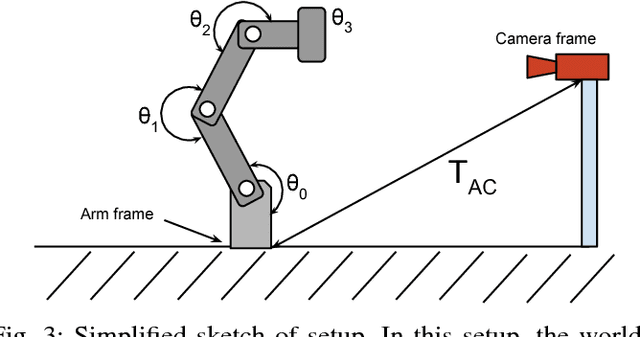

Extrinisic Calibration of a Camera-Arm System Through Rotation Identification

Dec 19, 2018

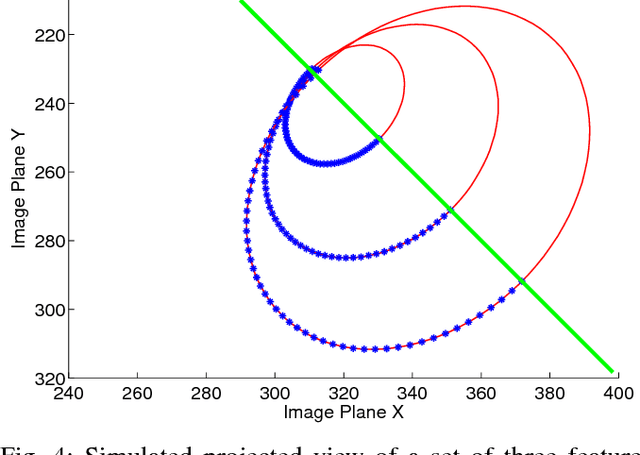

Abstract:Determining extrinsic calibration parameters is a necessity in any robotic system composed of actuators and cameras. Once a system is outside the lab environment, parameters must be determined without relying on outside artifacts such as calibration targets. We propose a method that relies on structured motion of an observed arm to recover extrinsic calibration parameters. Our method combines known arm kinematics with observations of conics in the image plane to calculate maximum-likelihood estimates for calibration extrinsics. This method is validated in simulation and tested against a real-world model, yielding results consistent with ruler-based estimates. Our method shows promise for estimating the pose of a camera relative to an articulated arm's end effector without requiring tedious measurements or external artifacts. Index Terms: robotics, hand-eye problem, self-calibration, structure from motion
