Wonse Jo

University of Michigan

Local Minima Prediction using Dynamic Bayesian Filtering for UGV Navigation in Unstructured Environments

May 20, 2025

Abstract:Path planning is crucial for the navigation of autonomous vehicles, yet these vehicles face challenges in complex and real-world environments. Although a global view may be provided, it is often outdated, necessitating the reliance of Unmanned Ground Vehicles (UGVs) on real-time local information. This reliance on partial information, without considering the global context, can lead to UGVs getting stuck in local minima. This paper develops a method to proactively predict local minima using Dynamic Bayesian filtering, based on the detected obstacles in the local view and the global goal. This approach aims to enhance the autonomous navigation of self-driving vehicles by allowing them to predict potential pitfalls before they get stuck, and either ask for help from a human, or re-plan an alternate trajectory.
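The abstract does not spell out the filter itself, so the following is only a minimal sketch of a generic two-state discrete Bayes filter, not the paper's model. It tracks the belief that a local minimum lies ahead and updates it from a binary "obstacle detected" observation. The function names and every probability value are illustrative assumptions.

```python
def bayes_filter_step(prior, obstacle_detected,
                      p_stay=0.9, p_enter=0.1,
                      p_detect_if_minimum=0.8, p_detect_if_clear=0.2):
    """One predict/update cycle for the belief P(local minimum ahead).

    All probabilities are hypothetical placeholders, not values from the paper.
    """
    # Predict: propagate the belief through a two-state transition model.
    predicted = p_stay * prior + p_enter * (1.0 - prior)

    # Update: weight the prediction by the likelihood of the observation.
    if obstacle_detected:
        like_minimum, like_clear = p_detect_if_minimum, p_detect_if_clear
    else:
        like_minimum, like_clear = 1.0 - p_detect_if_minimum, 1.0 - p_detect_if_clear

    numerator = like_minimum * predicted
    evidence = numerator + like_clear * (1.0 - predicted)
    return numerator / evidence


def run_filter(observations, prior=0.1):
    """Apply the filter to a sequence of binary observations; return the belief history."""
    belief = prior
    history = []
    for obs in observations:
        belief = bayes_filter_step(belief, obs)
        history.append(belief)
    return history
```

In a sketch like this, a planner could compare the latest belief against a threshold and then request human help or re-plan, which mirrors the two responses the abstract mentions.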

Training Human-Robot Teams by Improving Transparency Through a Virtual Spectator Interface

Mar 12, 2025

Abstract:After-action reviews (AARs) are professional discussions that help operators and teams enhance their task performance by analyzing completed missions with peers and professionals. Previous studies that compared different formats of AARs have mainly focused on human teams. However, the inclusion of robotic teammates brings new challenges in understanding teammate intent and communication. Traditional AARs between human teammates may not be satisfactory for human-robot teams. To address this limitation, we propose a new training review (TR) tool, called the Virtual Spectator Interface (VSI), to enhance human-robot team performance and situational awareness (SA) in a simulated search mission. The proposed VSI primarily utilizes visual feedback to review subjects' behavior. To examine the effectiveness of VSI, we took elements from AAR to conduct our own TR and designed a 1 x 3 between-subjects experiment with three conditions: TR with (1) VSI, (2) screen recording, and (3) no technology (only verbal descriptions). The results of our experiments demonstrated that the VSI did not result in significantly better team performance than the other conditions. However, the TR with VSI led to more improvement in the subjects' SA than the other conditions.

SafePlan: Leveraging Formal Logic and Chain-of-Thought Reasoning for Enhanced Safety in LLM-based Robotic Task Planning

Mar 10, 2025

Abstract:Robotics researchers increasingly leverage large language models (LLMs) in robotics systems, using them as interfaces to receive task commands, generate task plans, form team coalitions, and allocate tasks among multi-robot and human agents. However, despite their benefits, the growing adoption of LLMs in robotics has raised several safety concerns, particularly regarding executing malicious or unsafe natural language prompts. In addition, ensuring that task plans, team formation, and task allocation outputs from LLMs are adequately examined, refined, or rejected is crucial for maintaining system integrity. In this paper, we introduce SafePlan, a multi-component framework that combines formal logic and chain-of-thought reasoners for enhancing the safety of LLM-based robotics systems. Using the components of SafePlan, including the Prompt Sanity COT Reasoner and the Invariant, Precondition, and Postcondition COT reasoners, we examined the safety of natural language task prompts, task plans, and task allocation outputs generated by LLM-based robotic systems as a means of investigating and enhancing the system safety profile. Our results show that SafePlan outperforms baseline models, leading to a 90.5% reduction in harmful task prompt acceptance while still maintaining reasonable acceptance of safe tasks.

Investigating the Impact of Trust in Multi-Human Multi-Robot Task Allocation

Sep 24, 2024

Abstract:Trust is essential in human-robot collaboration, even more so in multi-human multi-robot teams, where trust is vital to ensure teaming cohesion in complex operational environments. Yet, at the moment, trust is rarely considered a factor during task allocation and reallocation in algorithms used in multi-human, multi-robot collaboration contexts. Prior work on trust in single-human-robot interaction has identified that including trust as a parameter in human-robot interaction significantly improves both performance outcomes and human experience with robotic systems. However, very little research has explored the impact of trust in multi-human multi-robot collaboration, specifically in the context of task allocation. In this paper, we introduce a new trust model, the Expectation Comparison Trust (ECT) model, and employ it with three trust models from prior work and a baseline no-trust model to investigate the impact of trust on task allocation outcomes in multi-human multi-robot collaboration. Our experiment involved different team configurations: 2 humans and 2 robots, 5 humans and 5 robots, and 10 humans and 10 robots. Results showed that using trust-based models generally led to better task allocation outcomes in larger teams (10 humans and 10 robots) than in smaller teams. We discuss the implications of our findings and provide recommendations for future work on integrating trust as a variable for task allocation in multi-human, multi-robot collaboration.

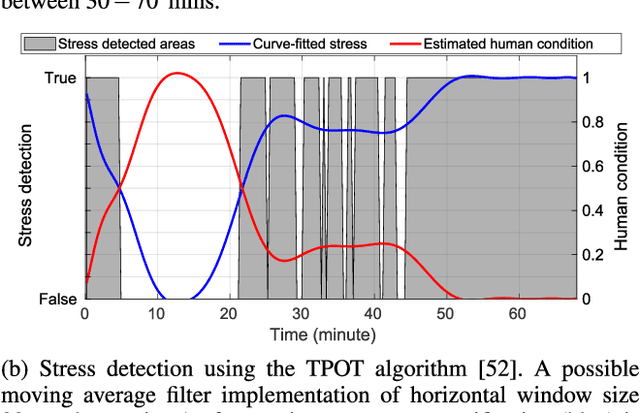

Affective Workload Allocation for Multi-human Multi-robot Teams

Mar 18, 2023

Abstract:The interaction and collaboration between humans and multiple robots represent a novel field of research known as human multi-robot systems. Adequately designed systems within this field allow teams composed of both humans and robots to work together effectively on tasks such as monitoring, exploration, and search and rescue operations. This paper presents a deep reinforcement learning-based affective workload allocation controller specifically for multi-human multi-robot teams. The proposed controller can dynamically reallocate workloads based on the performance of the operators during collaborative missions with multi-robot systems. The operators' performances are evaluated through the scores of a self-reported questionnaire (i.e., subjective measurement) and the results of a deep learning-based cognitive workload prediction algorithm that uses physiological and behavioral data (i.e., objective measurement). To evaluate the effectiveness of the proposed controller, we use a multi-human multi-robot CCTV monitoring task as an example and carry out comprehensive real-world experiments with 32 human subjects for both quantitative measurement and qualitative analysis. Our results demonstrate the performance and effectiveness of the proposed controller and highlight the importance of incorporating both subjective and objective measurements of the operators' cognitive workload as well as seeking consent for workload transitions, to enhance the performance of multi-human multi-robot teams.

Implications of Personality on Cognitive Workload, Affect, and Task Performance in Robot Remote Control

Mar 08, 2023

Abstract:This paper explores how the personality traits of robot operators can impact their task performance during remote control of robots. The influence of personal dispositions on information processing, either directly or indirectly, needs to be examined when working with robots on specific tasks. To investigate this relationship, we utilize the open-access multi-modal dataset MOCAS to examine the operator's personality, affect, cognitive load, and task performance. Our objective is to confirm if personal traits have a total effect, including both direct and indirect effects, that could significantly impact operator performance level. We specifically examine the relationship between personality traits such as extroversion, conscientiousness, and agreeableness, and task performance. We analyze the correlation between cognitive load, self-ratings of workload and affect, and the quantified individual personality traits and their experimental scores. As a result, we confirm that personality traits have no total effect on task performance.

Husformer: A Multi-Modal Transformer for Multi-Modal Human State Recognition

Sep 30, 2022

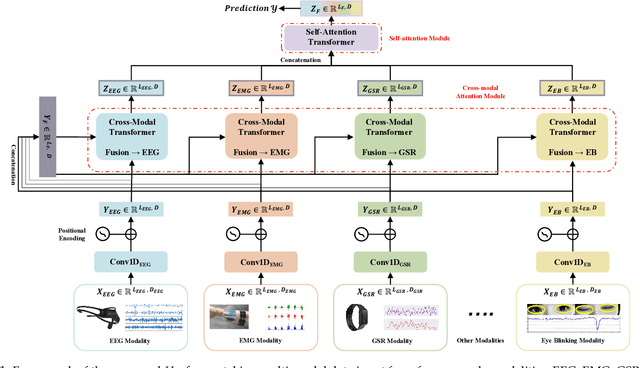

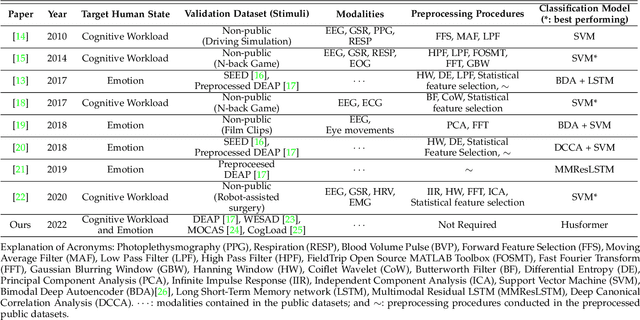

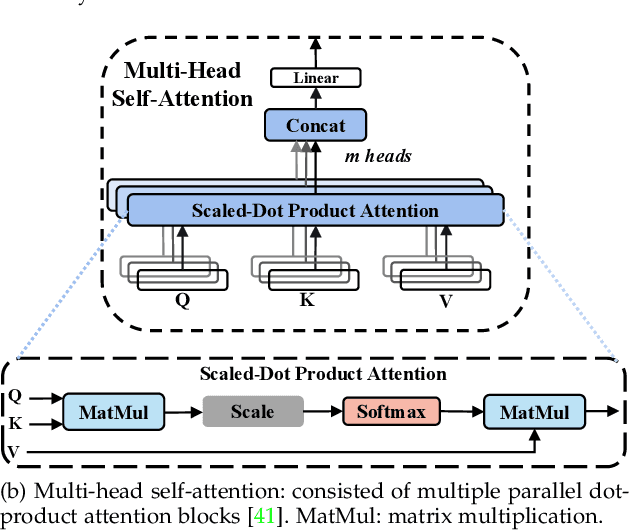

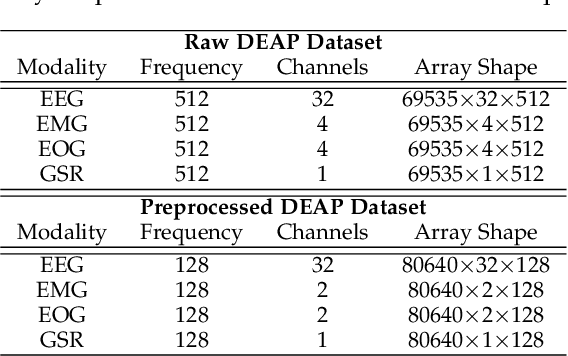

Abstract:Human state recognition is a critical topic with pervasive and important applications in human-machine systems. Multi-modal fusion, the combination of metrics from multiple data sources, has been shown to be a sound method for improving recognition performance. However, while promising results have been reported by recent multi-modal-based models, they generally fail to leverage the sophisticated fusion strategies that would model sufficient cross-modal interactions when producing the fusion representation; instead, current methods rely on lengthy and inconsistent data preprocessing and feature crafting. To address this limitation, we propose an end-to-end multi-modal transformer framework for multi-modal human state recognition called Husformer. Specifically, we propose to use cross-modal transformers, which inspire one modality to reinforce itself through directly attending to latent relevance revealed in other modalities, to fuse different modalities while ensuring sufficient awareness of the cross-modal interactions introduced. Subsequently, we utilize a self-attention transformer to further prioritize contextual information in the fusion representation. Using two such attention mechanisms enables effective and adaptive adjustments to noise and interruptions in multi-modal signals during the fusion process and in relation to high-level features. Extensive experiments on two human emotion corpora (DEAP and WESAD) and two cognitive workload datasets (MOCAS and CogLoad) demonstrate that in the recognition of human state, our Husformer outperforms both state-of-the-art multi-modal baselines and the use of a single modality by a large margin, especially when dealing with raw multi-modal signals. We also conducted an ablation study to show the benefits of each component in Husformer.
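The idea of one modality attending to another can be illustrated with plain scaled dot-product attention, where queries come from a target modality and keys and values come from a source modality. This is a simplified sketch and not the Husformer architecture: it omits learned projections, multiple heads, and positional encoding, and the function names are hypothetical.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def cross_modal_attention(target, source):
    """Fuse `source` into `target` with scaled dot-product attention.

    target: list of T feature vectors (used as queries).
    source: list of S feature vectors (used as both keys and values).
    Both modalities are assumed to share the same feature dimension d.
    """
    d = len(target[0])
    fused = []
    for query in target:
        # Similarity of this target step to every source step.
        scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d)
                  for key in source]
        weights = softmax(scores)
        # Weighted average of source vectors: the cross-modal context.
        fused.append([sum(w * value[j] for w, value in zip(weights, source))
                      for j in range(d)])
    return fused
```

Each output row is a convex combination of source vectors, so a target step that resembles certain source steps draws more of its fused representation from them.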

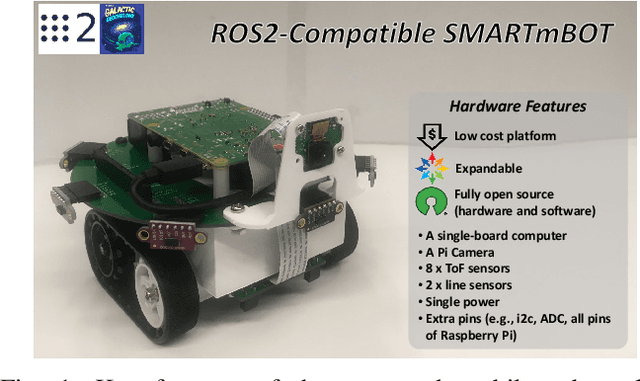

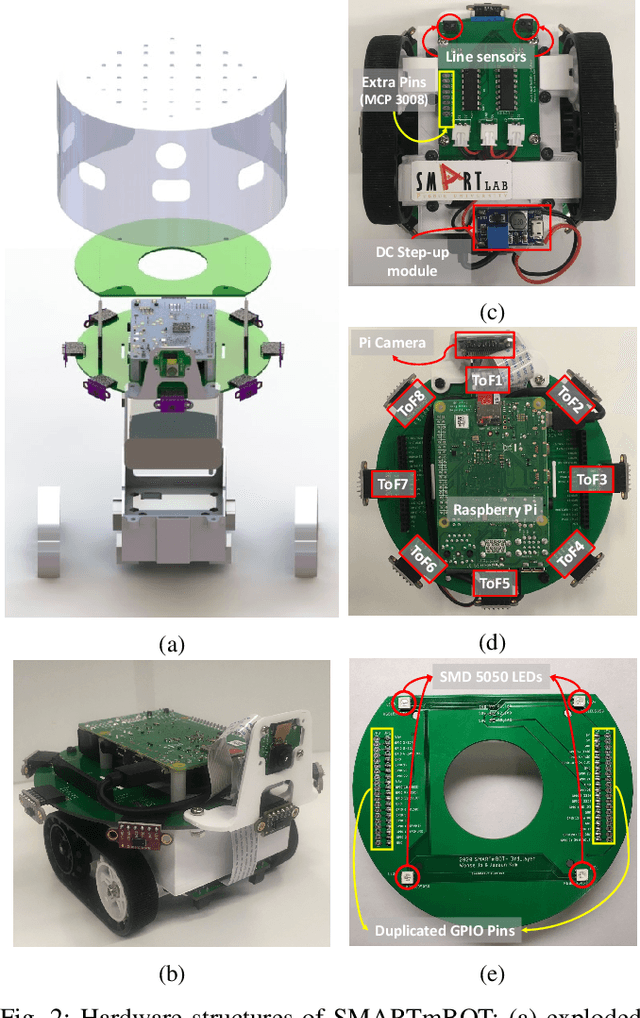

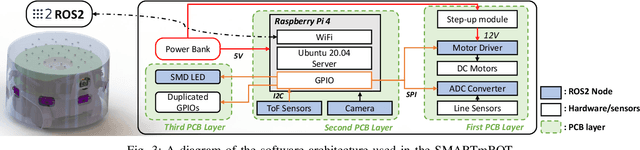

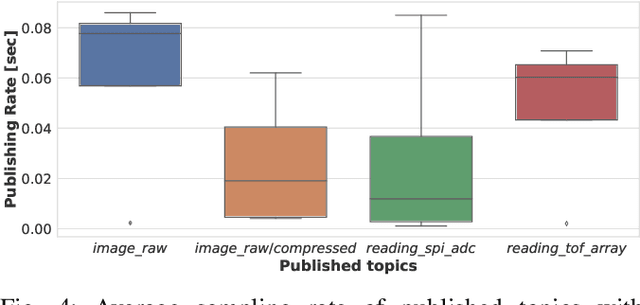

SMARTmBOT: A ROS2-based Low-cost and Open-source Mobile Robot Platform

Mar 16, 2022

Abstract:This paper introduces SMARTmBOT, an open-source mobile robot platform based on Robot Operating System 2 (ROS2). The characteristics of the SMARTmBOT, including its low-cost, modular, customizable, and expandable design, make it an easily achievable and effective robot platform to support broad robotics research and education involving either single-robot or multi-robot systems. The total cost per robot is approximately $210, and most hardware components can be fabricated by a generic 3D printer, hence allowing users to build the robots or replace any broken parts conveniently. The SMARTmBOT is also equipped with a rich range of sensors, making it competent for general task scenarios, such as point-to-point navigation and obstacle avoidance. We validated the mobility and function of SMARTmBOT through various robot navigation experiments and applications with tasks including go-to-goal, pure-pursuit, line following, and swarming. All source code necessary for reading sensors, streaming from an embedded camera, and controlling the robot, including robot navigation controllers, is available through an online repository that can be found at https://github.com/SMARTlab-Purdue/SMARTmBOT.

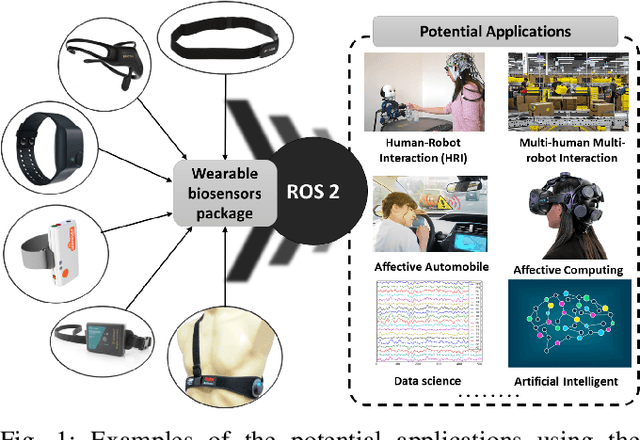

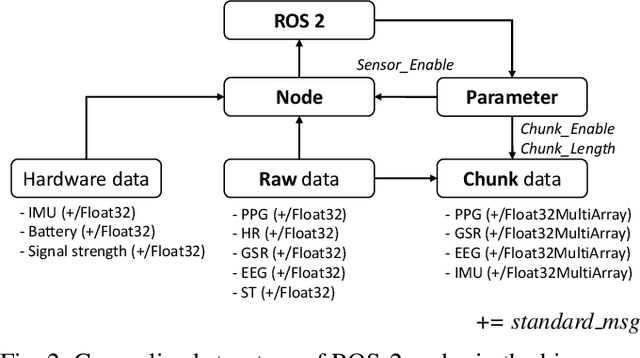

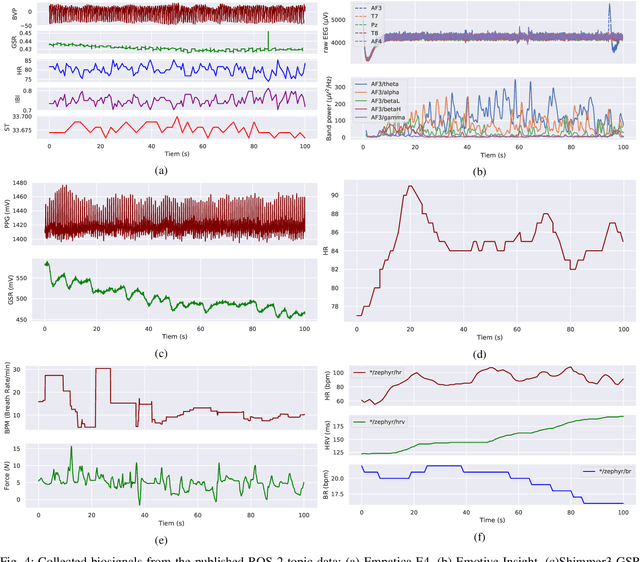

Toward a Wearable Biosensor Ecosystem on ROS 2 for Real-time Human-Robot Interaction Systems

Oct 08, 2021

Abstract:Wearable biosensors can enable continuous human data capture, facilitating development of real-world Human-Robot Interaction (HRI) systems. However, a lack of standardized libraries and implementations adds extraneous complexity to HRI system designs, and precludes collaboration across disciplines and institutions. Here, we introduce a novel wearable biosensor package for the Robot Operating System 2 (ROS 2) system. ROS 2 officially supports real-time computing and multi-robot systems, and thus provides easy-to-use and reliable streaming data from multiple nodes. The package standardizes biosensor HRI integration, lowers the technical barrier of entry, and expands the biosensor ecosystem into the robotics field. Each biosensor package node follows a generalized node and topic structure concentrated on ease of use. Current package capabilities, listed by biosensor, highlight package standardization. Collected example data demonstrate a full integration of each biosensor into ROS 2. We expect that standardization of this biosensor package for ROS 2 will greatly simplify use and cross-collaboration across many disciplines. The wearable biosensor package is made publicly available on GitHub at https://github.com/SMARTlab-Purdue/ros2-foxy-wearable-biosensors.

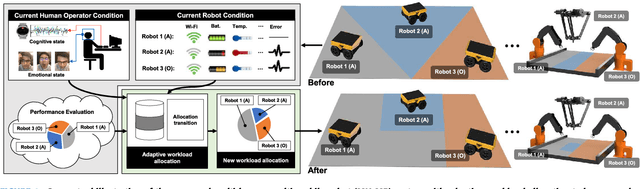

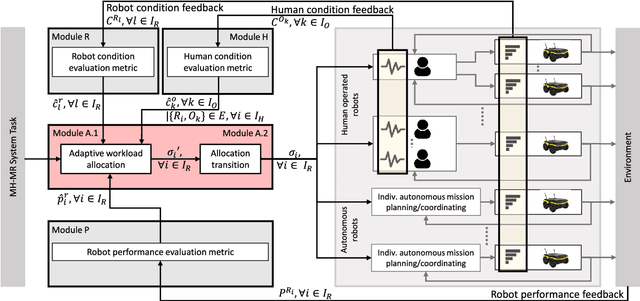

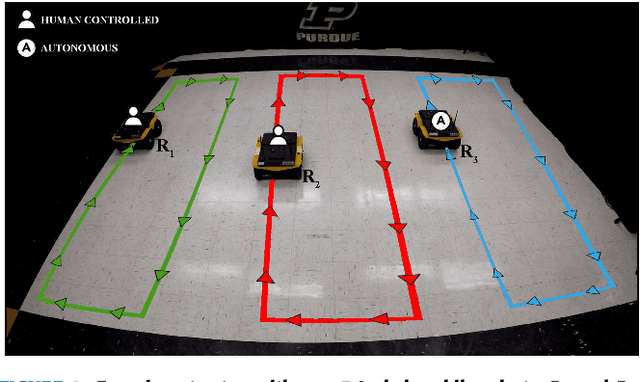

Adaptive Workload Allocation for Multi-human Multi-robot Teams for Independent and Homogeneous Tasks

Jul 27, 2020

Abstract:Multi-human multi-robot (MH-MR) systems have the ability to combine the potential advantages of robotic systems with those of having humans in the loop. Robotic systems contribute precision performance and long operation on repetitive tasks without tiring, while humans in the loop improve situational awareness and enhance decision-making abilities. A system's ability to adapt allocated workload to changing conditions and the performance of each individual (human and robot) during the mission is vital to maintaining overall system performance. Previous works in the literature, including market-based and optimization approaches, have attempted to address the task/workload allocation problem with a focus on maximizing the system output without regard to individual agent conditions; they lack real-time processing and have mostly focused exclusively on multi-robot systems. Given the variety of possible team combinations (autonomous robots and human-operated robots: any number of human operators operating any number of robots at a time) and the operational scale of MH-MR systems, development of a generalized framework of workload allocation has been a particularly challenging task. In this paper, we present such a framework for independent homogeneous missions, capable of adaptively allocating the system workload in relation to health conditions and work performances of human-operated and autonomous robots in real-time. The framework consists of removable modular function blocks ensuring its applicability to different MH-MR scenarios. A new workload transition function block ensures smooth transition without the workload change having adverse effects on individual agents. The effectiveness and scalability of the system's workload adaptability are validated by experiments applying the proposed framework in an MH-MR patrolling scenario with changing human and robot conditions, and failing robots.
