Lionel P. Robert Jr.

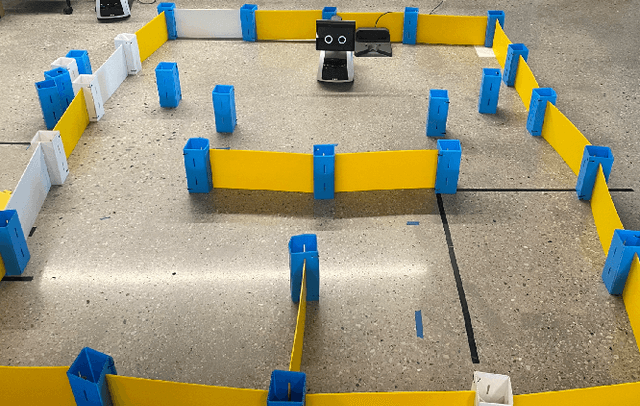

Local Minima Prediction using Dynamic Bayesian Filtering for UGV Navigation in Unstructured Environments

May 20, 2025

Abstract: Path planning is crucial for the navigation of autonomous vehicles, yet these vehicles face challenges in complex, real-world environments. Although a global view may be provided, it is often outdated, forcing Unmanned Ground Vehicles (UGVs) to rely on real-time local information. Relying on this partial information without considering the global context can cause UGVs to get stuck in local minima. This paper develops a method to proactively predict local minima using Dynamic Bayesian filtering, based on the obstacles detected in the local view and the global goal. The approach aims to enhance the autonomous navigation of self-driving vehicles by allowing them to predict potential pitfalls before they get stuck and then either ask a human for help or re-plan an alternate trajectory.
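
The abstract does not give the filter's state, transition, or observation models, so the following is only a rough illustration of the general idea. It runs a two-state discrete Bayes (forward) filter, the simplest dynamic Bayesian network, over a hidden flag "a local minimum lies ahead". Everything specific here is an assumption rather than the paper's method: the observation `z` is a hypothetical fraction of range-scan rays, inside a cone around the global-goal bearing, that hit a nearby obstacle; the likelihoods `2z` and `2(1 - z)` are illustrative densities on [0, 1]; and the names `blocked_fraction` and `LocalMinimumFilter`, along with every parameter value, are made up for this example.

```python
import math
from typing import Sequence


def _wrap(angle: float) -> float:
    """Wrap an angle in radians to the interval [-pi, pi)."""
    return (angle + math.pi) % (2.0 * math.pi) - math.pi


def blocked_fraction(angles: Sequence[float], ranges: Sequence[float],
                     goal_bearing: float,
                     half_width: float = math.radians(30.0),
                     block_dist: float = 3.0) -> float:
    """Hypothetical observation: share of scan rays within +/- half_width of
    the goal bearing that hit an obstacle closer than block_dist (metres)."""
    in_cone = [r for a, r in zip(angles, ranges)
               if abs(_wrap(a - goal_bearing)) <= half_width]
    if not in_cone:
        return 0.0
    return sum(r < block_dist for r in in_cone) / len(in_cone)


class LocalMinimumFilter:
    """Two-state forward filter over the hidden flag 'local minimum ahead'.

    All probabilities are illustrative assumptions, not values from the paper.
    """

    def __init__(self, prior: float = 0.1, p_stay: float = 0.9,
                 p_onset: float = 0.1, eps: float = 0.05) -> None:
        self.belief = prior      # P(local minimum ahead)
        self.p_stay = p_stay     # P(trap_t | trap_{t-1})
        self.p_onset = p_onset   # P(trap_t | free_{t-1})
        self.eps = eps           # keeps likelihoods away from zero

    def step(self, z: float) -> float:
        # Predict: propagate the belief through the transition model.
        pred = self.p_stay * self.belief + self.p_onset * (1.0 - self.belief)
        # Update: weigh by the likelihood of the observed blocked fraction.
        z = min(max(z, self.eps), 1.0 - self.eps)
        like_trap, like_free = 2.0 * z, 2.0 * (1.0 - z)
        num = like_trap * pred
        self.belief = num / (num + like_free * (1.0 - pred))
        return self.belief


if __name__ == "__main__":
    f = LocalMinimumFilter()
    for z in (0.1, 0.9, 0.9):  # the path toward the goal becomes increasingly blocked
        print(f.step(z))
```

Feeding in increasingly blocked observations drives the belief upward over a few steps. A planner could compare `belief` against a chosen threshold to decide when to ask a human for help or to re-plan. That threshold, like the other parameters, is an assumption that would need tuning.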

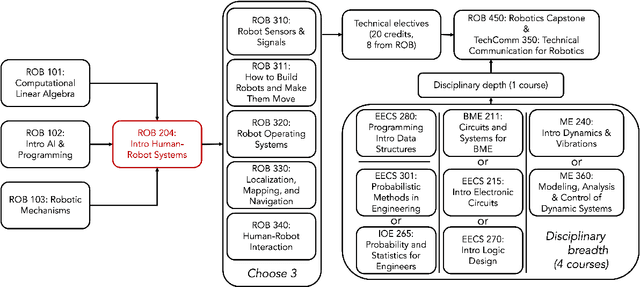

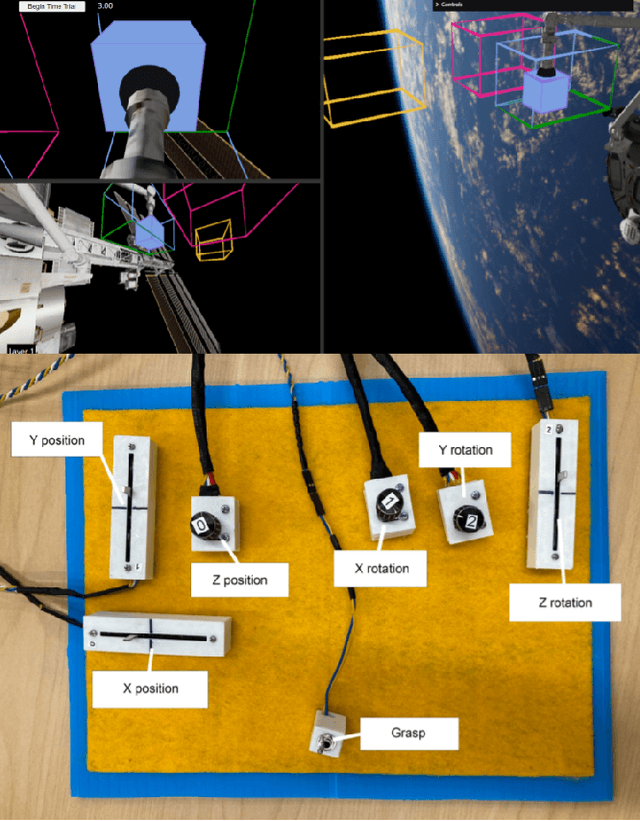

ROB 204: Introduction to Human-Robot Systems at the University of Michigan, Ann Arbor

May 23, 2024

Abstract: The University of Michigan Robotics program focuses on the study of embodied intelligence that must sense, reason, act, and work with people to improve quality of life and productivity equitably across society. ROB 204, part of the core curriculum towards the undergraduate degree in Robotics, introduces students to topics that enable conceptually designing a robotic system to address users' needs from a sociotechnical context. Students are introduced to human-robot interaction (HRI) concepts and the process for socially-engaged design with a Learn-Reinforce-Integrate approach. In this paper, we discuss the course topics and our teaching methodology, and provide recommendations for delivering this material. Overall, students leave the course with a new understanding and appreciation for how human capabilities can inform requirements for a robotics system, how humans can interact with a robot, and how to assess the usability of robotic systems.

A Unified Bi-directional Model for Natural and Artificial Trust in Human-Robot Collaboration

Jun 04, 2021

Abstract: We introduce a novel capabilities-based bi-directional multi-task trust model that can be used for trust prediction from either a human or a robotic trustor agent. Tasks are represented in terms of their capability requirements, while trustee agents are characterized by their individual capabilities. Trustee agents' capabilities are not deterministic; they are represented by belief distributions. For each task to be executed, a higher level of trust is assigned to trustee agents who have demonstrated that their capabilities exceed the task's requirements. We report results of an online experiment with 284 participants, revealing that our model outperforms existing models for multi-task trust prediction from a human trustor. We also present simulations of the model for determining trust from a robotic trustor. Our model is useful for control authority allocation applications that involve human-robot teams.
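
The following is a loose illustration of the capabilities-versus-requirements idea. It is not the paper's exact formulation, because the abstract gives neither the form of the belief distributions nor the trust function. Each capability dimension is assumed to have an independent uniform belief over an interval [lower, upper] within [0, 1]. Trust in a trustee for a task is defined here as the probability that every capability meets the task's requirement, and the interval narrows after observed successes and failures. The class `CapabilityBelief`, the function `task_trust`, the independence assumption, and the interval-update rule are all hypothetical.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass
class CapabilityBelief:
    """Uniform belief over one capability dimension, on [lower, upper] (hypothetical)."""
    lower: float = 0.0
    upper: float = 1.0

    def prob_meets(self, requirement: float) -> float:
        """P(capability >= requirement) under the uniform belief."""
        if requirement <= self.lower:
            return 1.0
        if requirement >= self.upper:
            return 0.0
        return (self.upper - requirement) / (self.upper - self.lower)

    def observe(self, requirement: float, success: bool) -> None:
        """Narrow the interval: a success suggests capability >= requirement,
        a failure suggests capability < requirement. Clipping keeps lower <= upper."""
        if success:
            self.lower = max(self.lower, min(requirement, self.upper))
        else:
            self.upper = min(self.upper, max(requirement, self.lower))


def task_trust(beliefs: Dict[str, CapabilityBelief],
               requirements: Dict[str, float]) -> float:
    """Trust for a task = product of per-dimension probabilities (independence assumed)."""
    trust = 1.0
    for dim, req in requirements.items():
        trust *= beliefs[dim].prob_meets(req)
    return trust


if __name__ == "__main__":
    robot = {"sensing": CapabilityBelief(), "manipulation": CapabilityBelief()}
    task = {"sensing": 0.5, "manipulation": 0.6}
    print(task_trust(robot, task))                      # ≈ 0.2 (0.5 * 0.4)
    robot["sensing"].observe(0.7, success=True)         # evidence of higher sensing capability
    robot["manipulation"].observe(0.8, success=False)   # evidence capping manipulation
    print(task_trust(robot, task))                      # ≈ 0.25 (1.0 * 0.25)
```

A success on a demanding sensing task lifts that dimension's lower bound above the requirement, so that factor becomes 1. A failure caps the manipulation belief, which changes that factor. The same functions apply whether the trustor is a human or a robot, which mirrors the bi-directional framing only at this very simplified level.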

Using Trust in Automation to Enhance Driver-Autonomous Vehicle Interaction and Improve Team Performance

Jun 03, 2021

Abstract: Trust in robots has been attracting attention from multiple directions because of its particular relevance to theoretical descriptions of human-robot interaction. It is essential both for achieving high acceptance and usage rates of robotic technologies in society and for enabling effective human-robot teaming. Researchers have been trying to model the development of trust in robots to improve the overall rapport between humans and robots. Unfortunately, miscalibrated trust in automation is a common issue that jeopardizes the effectiveness of automation use. It occurs when a user's trust level is not appropriate to the capabilities of the automation being used. Users can under-trust the automation, failing to use functions the machine can perform correctly because they lack trust in it, or over-trust it, using the machine in situations where its capabilities are inadequate. The main objective of this work is to examine how drivers' trust in an automated driving system (ADS) develops. We aim to model how risk factors (e.g., false alarms and misses from the ADS) and the short-term interactions associated with these risk factors influence the dynamics of drivers' trust in the ADS. The driving context facilitates instrumentation for measuring trusting behaviors, such as drivers' eye movements and usage time of the automated features. Our findings indicate that drivers' trusting behaviors can be reliably characterized and their trust levels estimated accordingly. We expect these techniques to enable the design of ADSs that adapt their behavior to adjust drivers' trust levels. This capability could help avoid under- and over-trust, both of which can harm drivers' safety or performance.
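
The abstract does not state which estimator is used. Purely as an illustration of estimating a latent trust level from observed behavior, the sketch below substitutes a scalar linear Kalman filter. Trust follows a random walk that ADS false alarms and misses push downward. A single normalized behavioral measurement observes it with noise, for example a hypothetical score derived from eye movements or automation usage time. The class name `TrustKalman`, the model structure, and every coefficient are assumptions, not values from the study.

```python
from dataclasses import dataclass


@dataclass
class TrustKalman:
    """Scalar Kalman filter for a latent trust level (hypothetical model).

    Process:     T[k+1] = a*T[k] + b_fa*FA[k] + b_miss*MISS[k] + w,  w ~ N(0, q)
    Measurement: y[k]   = c*T[k] + v,                                v ~ N(0, r)
    """
    a: float = 1.0                # trust persistence (random walk)
    b_false_alarm: float = -0.10  # assumed effect of one ADS false alarm
    b_miss: float = -0.20         # assumed effect of one ADS miss
    c: float = 1.0                # measurement gain
    q: float = 0.01               # process noise variance
    r: float = 0.05               # measurement noise variance
    mean: float = 0.5             # initial trust estimate
    var: float = 0.1              # initial estimate variance

    def predict(self, false_alarm: bool, miss: bool) -> None:
        """Propagate the estimate through the trust dynamics and event inputs."""
        self.mean = (self.a * self.mean
                     + self.b_false_alarm * float(false_alarm)
                     + self.b_miss * float(miss))
        self.var = self.a * self.a * self.var + self.q

    def update(self, y: float) -> float:
        """Correct the estimate with one behavioral measurement y."""
        s = self.c * self.c * self.var + self.r   # innovation variance
        k = self.var * self.c / s                 # Kalman gain
        self.mean += k * (y - self.c * self.mean)
        self.var = (1.0 - k * self.c) * self.var
        return self.mean


if __name__ == "__main__":
    kf = TrustKalman()
    kf.predict(false_alarm=False, miss=True)  # an ADS miss lowers predicted trust
    print(kf.update(0.5))                     # the measurement pulls the estimate partway back
```

A linear-Gaussian filter is chosen here only because it is the simplest recursive estimator that combines a dynamics model driven by risk events with noisy behavioral observations. The study's actual modeling choices may differ.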

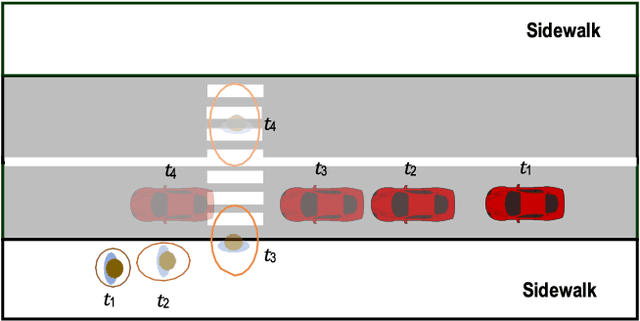

Efficient Behavior-aware Control of Automated Vehicles at Crosswalks using Minimal Information Pedestrian Prediction Model

Mar 22, 2020

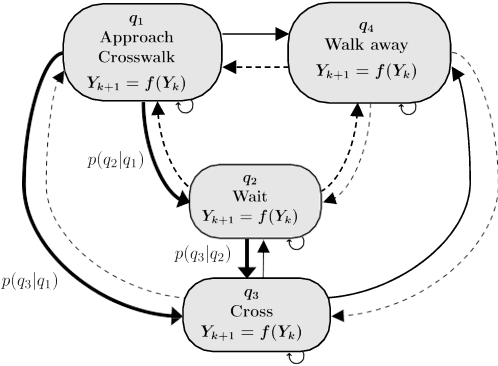

Abstract: For automated vehicles (AVs) to reliably navigate through crosswalks, they need to understand pedestrians' crossing behavior. Simple and reliable pedestrian behavior models aid real-time AV control by allowing AVs to predict future pedestrian behavior. In this paper, we present a Behavior-aware Model Predictive Controller (B-MPC) for AVs that incorporates long-term predictions of pedestrian crossing behavior using a previously developed pedestrian crossing model. The model incorporates pedestrians' gap-acceptance behavior and uses minimal pedestrian information, namely position and speed, to predict crossing behavior. The B-MPC controller is validated through simulations and compared to a rule-based controller. By incorporating predictions of pedestrian behavior, the B-MPC controller can efficiently plan over longer horizons and handle a wider range of pedestrian interaction scenarios than the rule-based controller. Results demonstrate the applicability of the controller for safe and efficient navigation in crossing scenarios.
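
The abstract does not reproduce the previously developed pedestrian crossing model. The sketch below only illustrates a gap-acceptance predictor that uses minimal pedestrian information, namely position along the crosswalk and walking speed. It compares the time gap before the vehicle reaches the crosswalk with the pedestrian's estimated crossing time through a logistic acceptance probability. It then produces a pedestrian position trajectory over a short horizon that a predictive controller could consume. The logistic form, the safety margin, the steepness, the nominal walking speed of 1.4 m/s, the 0.5 decision threshold, and all function names are hypothetical, not the model used with B-MPC.

```python
import math
from typing import List, Tuple


def time_gap(vehicle_dist: float, vehicle_speed: float) -> float:
    """Seconds until the vehicle reaches the crosswalk (infinite if stopped)."""
    if vehicle_speed <= 0.0:
        return math.inf
    return vehicle_dist / vehicle_speed


def accept_probability(gap: float, crossing_time: float,
                       margin: float = 1.0, steepness: float = 2.0) -> float:
    """Hypothetical logistic gap acceptance: likely if gap > crossing_time + margin."""
    x = steepness * (gap - crossing_time - margin)
    if x >= 0.0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)  # numerically stable form for negative x
    return e / (1.0 + e)


def predict_crossing(ped_pos: float, ped_speed: float, crosswalk_length: float,
                     vehicle_dist: float, vehicle_speed: float,
                     horizon_s: float = 5.0, dt: float = 0.5,
                     nominal_walk: float = 1.4,
                     threshold: float = 0.5) -> Tuple[float, List[float]]:
    """Return (acceptance probability, predicted pedestrian positions in metres
    along the crosswalk at t = dt, 2*dt, ..., horizon_s).
    Assumes 0 <= ped_pos <= crosswalk_length."""
    walk = max(ped_speed, nominal_walk)  # a waiting pedestrian is assumed to start at nominal speed
    crossing_time = max(crosswalk_length - ped_pos, 0.0) / walk
    p = accept_probability(time_gap(vehicle_dist, vehicle_speed), crossing_time)
    steps = int(round(horizon_s / dt))
    if p >= threshold:
        traj = [min(ped_pos + walk * dt * k, crosswalk_length)
                for k in range(1, steps + 1)]
    else:
        traj = [ped_pos] * steps  # pedestrian predicted to wait at the current position
    return p, traj


if __name__ == "__main__":
    # Pedestrian waiting at the curb of a 7 m crosswalk; vehicle 100 m away at 10 m/s.
    p, traj = predict_crossing(0.0, 0.0, 7.0, vehicle_dist=100.0, vehicle_speed=10.0)
    print(p, traj)
```

In an MPC setting, the predicted positions over the horizon would typically enter the optimization as time-varying constraints or costs. That controller is not sketched here.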
