Christoforos Mavrogiannis

ReloPush-BOSS: Optimization-guided Nonmonotone Rearrangement Planning for a Car-like Robot Pusher

Jan 29, 2026

Abstract:We focus on multi-object rearrangement planning in densely cluttered environments using a car-like robot pusher. The combination of kinematic, geometric and physics constraints underlying this domain results in challenging nonmonotone problem instances which demand breaking each manipulation action into multiple parts to achieve a desired object rearrangement. Prior work tackles such instances by planning prerelocations, temporary object displacements that enable constraint satisfaction, but deciding where to prerelocate remains difficult due to local minima leading to infeasible or high-cost paths. Our key insight is that these minima can be avoided by steering a prerelocation optimization toward low-cost regions informed by Dubins path classification. These optimized prerelocations are integrated into an object traversability graph that encodes kinematic, geometric, and pushing constraints. Searching this graph in a depth-first fashion results in efficient, feasible rearrangement sequences. Across a series of densely cluttered scenarios with up to 13 objects, our framework, ReloPush-BOSS, consistently exhibits the highest success rates and the shortest pushing paths compared to state-of-the-art baselines. Hardware experiments on a 1/10 car-like pusher demonstrate the robustness of our approach. Code and footage from our experiments can be found at: https://fluentrobotics.com/relopushboss.

How Human Motion Prediction Quality Shapes Social Robot Navigation Performance in Constrained Spaces

Jan 14, 2026

Abstract:Motivated by the vision of integrating mobile robots closer to humans in warehouses, hospitals, manufacturing plants, and the home, we focus on robot navigation in dynamic and spatially constrained environments. Ensuring human safety, comfort, and efficiency in such settings requires that robots are endowed with a model of how humans move around them. Human motion prediction around robots is especially challenging due to the stochasticity of human behavior, differences in user preferences, and data scarcity. In this work, we perform a methodical investigation of the effects of human motion prediction quality on robot navigation performance, as well as human productivity and impressions. We design a scenario involving robot navigation among two human subjects in a constrained workspace and instantiate it in a user study ($N=80$) involving two different robot platforms, conducted across two sites from different world regions. Key findings include evidence that: 1) the widely adopted average displacement error is not a reliable predictor of robot navigation performance and human impressions; 2) the common assumption of human cooperation breaks down in constrained environments, with users often not reciprocating robot cooperation, and causing performance degradations; 3) more efficient robot navigation often comes at the expense of human efficiency and comfort.
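For readers unfamiliar with the metric named in the first finding, the following is a minimal sketch of how average displacement error is commonly computed: the mean Euclidean distance between predicted and observed positions at matching time steps. The function name and the toy trajectories are illustrative assumptions and are not taken from the paper.

```python
import math


def average_displacement_error(predicted, ground_truth):
    """Mean Euclidean distance between matching predicted and observed 2D positions."""
    if len(predicted) != len(ground_truth) or not predicted:
        raise ValueError("trajectories must be non-empty and of equal length")
    total = sum(math.dist(p, g) for p, g in zip(predicted, ground_truth))
    return total / len(predicted)


# Toy trajectories (hypothetical): the prediction stays on the x-axis
# while the observed person drifts upward by one unit per step.
predicted = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0)]
ground_truth = [(0.0, 0.0), (1.0, 1.0), (2.0, 2.0)]
ade = average_displacement_error(predicted, ground_truth)
print(ade)
```

Because the metric averages over time steps, it does not distinguish a small constant offset from a large error at a single decisive moment, which is one plausible reason it may not track navigation performance.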

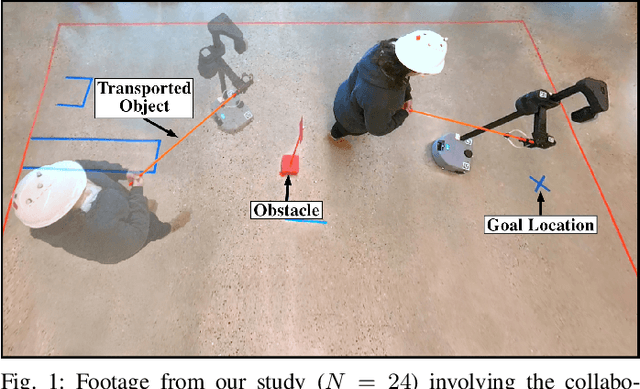

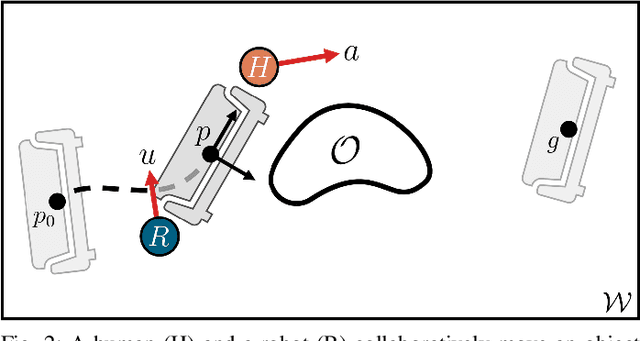

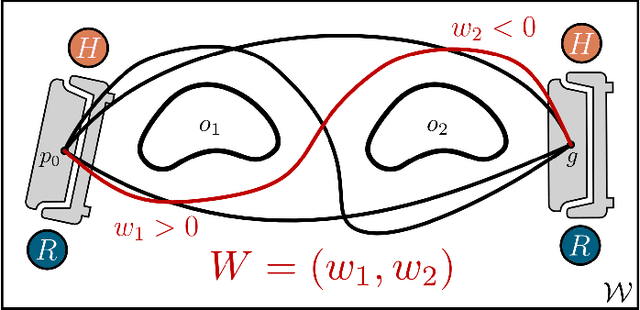

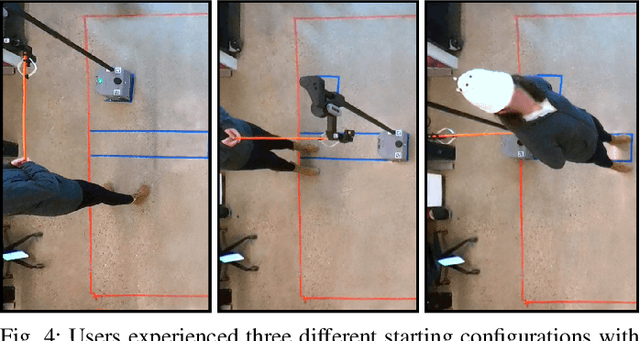

Implicit Communication in Human-Robot Collaborative Transport

Feb 05, 2025

Abstract:We focus on human-robot collaborative transport, in which a robot and a user collaboratively move an object to a goal pose. In the absence of explicit communication, this problem is challenging because it demands tight implicit coordination between two heterogeneous agents, who have very different sensing, actuation, and reasoning capabilities. Our key insight is that the two agents can coordinate fluently by encoding subtle, communicative signals into actions that affect the state of the transported object. To this end, we design an inference mechanism that probabilistically maps observations of joint actions executed by the two agents to a set of joint strategies of workspace traversal. Based on this mechanism, we define a cost representing the human's uncertainty over the unfolding traversal strategy and introduce it into a model predictive controller that balances between uncertainty minimization and efficiency maximization. We deploy our framework on a mobile manipulator (Hello Robot Stretch) and evaluate it in a within-subjects lab study (N=24). We show that our framework enables greater team performance and empowers the robot to be perceived as a significantly more fluent and competent partner compared to baselines lacking a communicative mechanism.

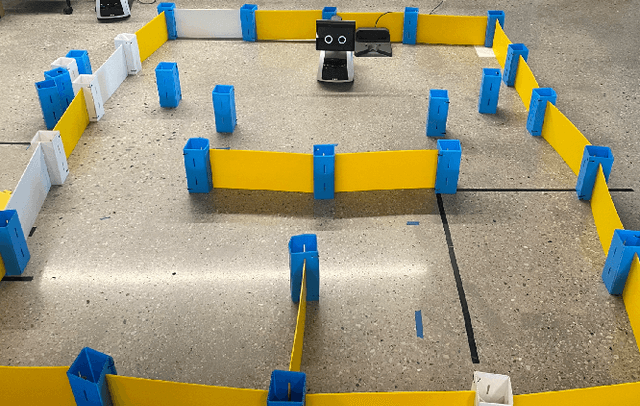

ReloPush: Multi-object Rearrangement in Confined Spaces with a Nonholonomic Mobile Robot Pusher

Sep 26, 2024

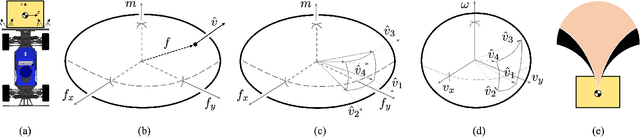

Abstract:We focus on the problem of rearranging a set of objects within a confined space with a nonholonomically constrained mobile robot pusher. This problem is relevant to many real-world domains, including warehouse automation and construction. These domains give rise to instances involving a combination of geometric, kinematic, and physics constraints, which make planning particularly challenging. Prior work often makes simplifying assumptions like the use of holonomic mobile robots or dexterous manipulators capable of unconstrained overhand reaching. Our key insight is that we can empower even a constrained mobile pusher to tackle complex rearrangement tasks by enabling it to modify the environment to its favor in a constraint-aware fashion. To this end, we describe a Push-Traversability graph, whose vertices represent poses that the pusher can push objects from and edges represent optimal, kinematically feasible, and stable push-rearrangements of objects. Based on this graph, we develop ReloPush, a planning framework that leverages Dubins curves and standard graph search techniques to generate an efficient sequence of object rearrangements to be executed by the pusher. We evaluate ReloPush across a series of challenging scenarios, involving the rearrangement of densely cluttered workspaces with up to eight objects by a 1/10th-scale mobile robot pusher. ReloPush exhibits orders of magnitude faster runtimes and significantly more robust execution in the real world, evidenced in lower execution times and fewer losses of object contact, compared to two baselines lacking our proposed graph structure.

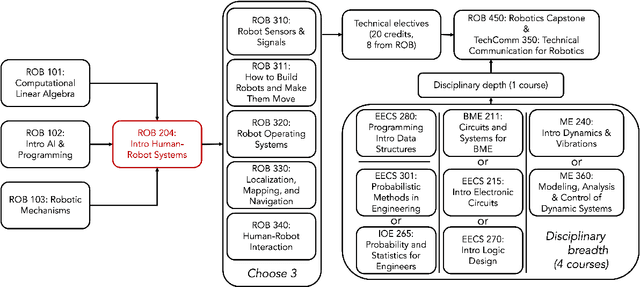

ROB 204: Introduction to Human-Robot Systems at the University of Michigan, Ann Arbor

May 23, 2024

Abstract:The University of Michigan Robotics program focuses on the study of embodied intelligence that must sense, reason, act, and work with people to improve quality of life and productivity equitably across society. ROB 204, part of the core curriculum towards the undergraduate degree in Robotics, introduces students to topics that enable conceptually designing a robotic system to address users' needs from a sociotechnical context. Students are introduced to human-robot interaction (HRI) concepts and the process for socially-engaged design with a Learn-Reinforce-Integrate approach. In this paper, we discuss the course topics and our teaching methodology, and provide recommendations for delivering this material. Overall, students leave the course with a new understanding and appreciation for how human capabilities can inform requirements for a robotics system, how humans can interact with a robot, and how to assess the usability of robotic systems.

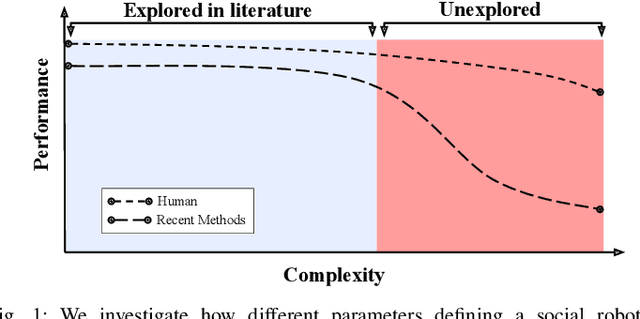

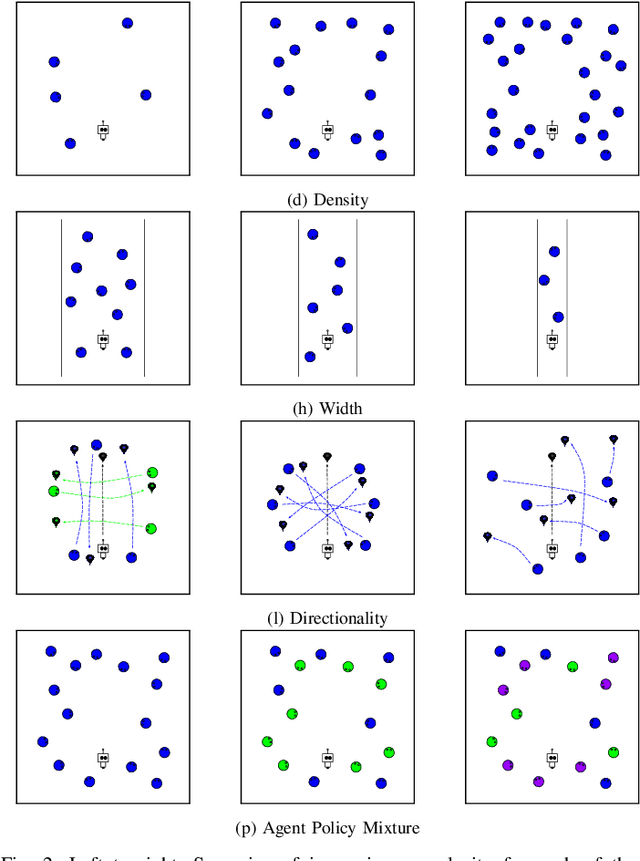

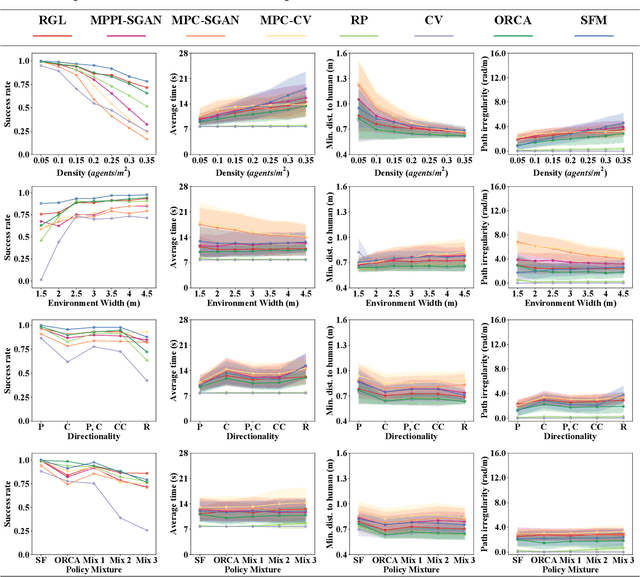

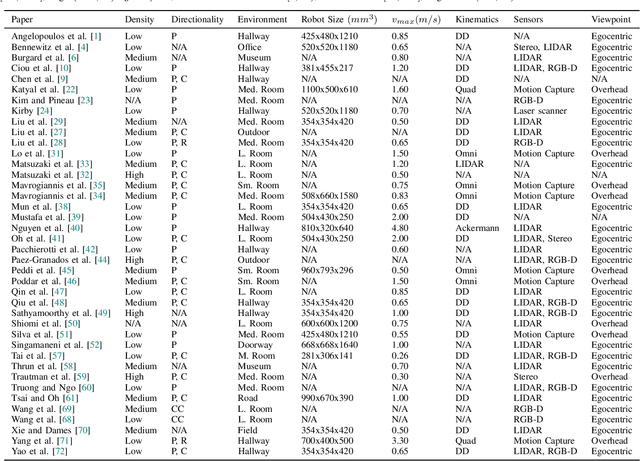

Characterizing the Complexity of Social Robot Navigation Scenarios

May 18, 2024

Abstract:Social robot navigation algorithms are often demonstrated in overly simplified scenarios, prohibiting the extraction of practical insights about their relevance to real world domains. Our key insight is that an understanding of the inherent complexity of a social robot navigation scenario could help characterize the limitations of existing navigation algorithms and provide actionable directions for improvement. Through an exploration of recent literature, we identify a series of factors contributing to the complexity of a scenario, disambiguating between contextual and robot-related ones. We then conduct a simulation study investigating how manipulations of contextual factors impact the performance of a variety of navigation algorithms. We find that dense and narrow environments correlate most strongly with performance drops, while the heterogeneity of agent policies and directionality of interactions have a less pronounced effect. This motivates a shift towards developing and testing algorithms under higher-complexity settings.

HOUND: An Open-Source, Low-cost Research Platform for High-speed Off-road Underactuated Nonholonomic Driving

Nov 19, 2023

Abstract:Off-road vehicles are susceptible to rollovers in terrains with large elevation features, such as steep hills, ditches, and berms. One way to protect them against rollovers is ruggedization through the use of industrial-grade parts and physical modifications. However, this solution can be prohibitively expensive for academic research labs. Our key insight is that a software-based rollover-prevention system (RPS) enables the use of commercial-off-the-shelf hardware parts that are cheaper than their industrial counterparts, thus reducing overall cost. In this paper, we present HOUND, a small-scale, inexpensive, off-road autonomy platform that can handle challenging outdoor terrains at high speeds through the integration of an RPS. HOUND is integrated with a complete stack for perception and control, geared towards aggressive off-road driving. We deploy HOUND in the real world, at high speeds, on four different terrains covering 50 km of driving and highlight its utility in preventing rollovers and traversing difficult terrain. Additionally, through integration with BeamNG, a state-of-the-art driving simulator, we demonstrate a significant reduction in rollovers without compromising turning ability across a series of simulated experiments. Supplementary material can be found on our website, where we will also release all design documents for the platform: https://sites.google.com/view/prl-hound.

Cook2LTL: Translating Cooking Recipes to LTL Formulae using Large Language Models

Sep 29, 2023

Abstract:Cooking recipes are especially challenging to translate to robot plans as they feature rich linguistic complexity, temporally-extended interconnected tasks, and an almost infinite space of possible actions. Our key insight is that combining a source of background cooking domain knowledge with a formalism capable of handling the temporal richness of cooking recipes could enable the extraction of unambiguous, robot-executable plans. In this work, we use Linear Temporal Logic (LTL) as a formal language expressive enough to model the temporal nature of cooking recipes. Leveraging pre-trained Large Language Models (LLMs), we present a system that translates instruction steps from an arbitrary cooking recipe found on the internet to a series of LTL formulae, grounding high-level cooking actions to a set of primitive actions that are executable by a manipulator in a kitchen environment. Our approach makes use of a caching scheme, dynamically building a queryable action library at runtime, significantly decreasing LLM API calls (-51%), latency (-59%) and cost (-42%) compared to a baseline that queries the LLM for every newly encountered action at runtime. We demonstrate the transferability of our system in a realistic simulation platform by showcasing a set of simple cooking tasks.
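The abstract does not spell out how the caching scheme works, so the following is only a rough sketch of the general idea using plain memoization: an action library that is filled at runtime and queries the language model only on a cache miss. `ActionLibrary`, `fake_llm`, and the key normalization are hypothetical names and choices made for illustration; they are not the Cook2LTL implementation.

```python
class ActionLibrary:
    """Runtime cache mapping high-level actions to primitive-action plans."""

    def __init__(self, query_llm):
        self._query_llm = query_llm  # callable: action name -> list of primitives
        self._cache = {}
        self.llm_calls = 0  # how many times the (costly) model was queried

    def lookup(self, action):
        key = action.strip().lower()  # assumed normalization, for illustration
        if key not in self._cache:
            # Cache miss: query the model once and remember the answer.
            self.llm_calls += 1
            self._cache[key] = self._query_llm(key)
        return self._cache[key]


def fake_llm(action):
    """Stand-in for a real LLM call; returns a made-up decomposition."""
    return [f"primitive_{i}({action})" for i in (1, 2)]


library = ActionLibrary(fake_llm)
for step in ["chop", "stir", "Chop", "stir", "boil"]:
    library.lookup(step)

print(library.llm_calls)  # number of model queries for five recipe steps
```

In this toy run, repeated actions are served from the library, so the number of model queries grows with the number of distinct actions rather than with the number of recipe steps.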

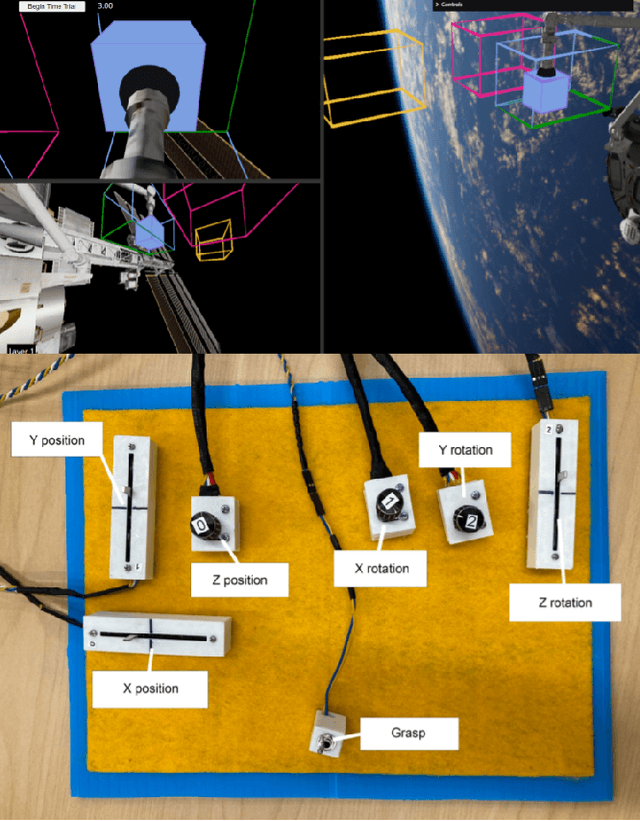

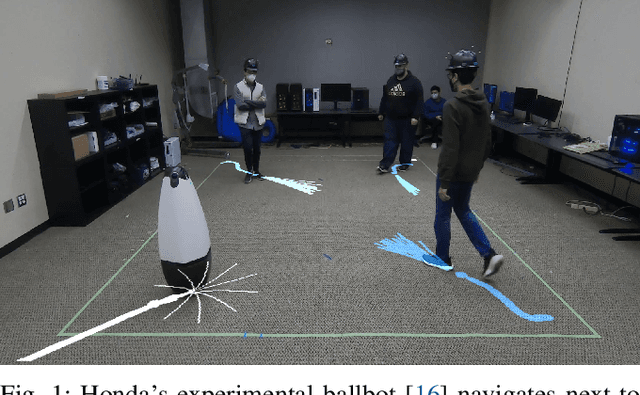

From Crowd Motion Prediction to Robot Navigation in Crowds

Mar 02, 2023

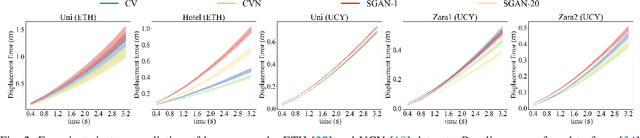

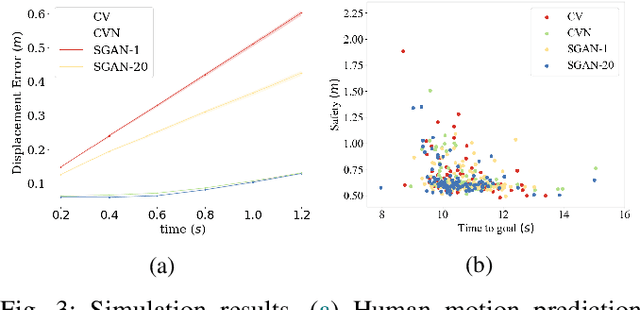

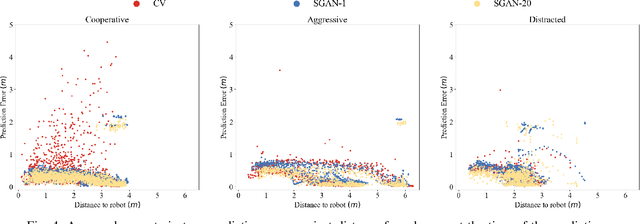

Abstract:We focus on robot navigation in crowded environments. To navigate safely and efficiently within crowds, robots need models for crowd motion prediction. Building such models is hard due to the high dimensionality of multiagent domains and the challenge of collecting or simulating interaction-rich crowd-robot demonstrations. While there has been important progress on models for offline pedestrian motion forecasting, transferring their performance to real robots is nontrivial due to close interaction settings and novelty effects on users. In this paper, we investigate the utility of a recent state-of-the-art motion prediction model (S-GAN) for crowd navigation tasks. We incorporate this model into a model predictive controller (MPC) and deploy it on a self-balancing robot which we subject to a diverse range of crowd behaviors in the lab. We demonstrate that while S-GAN motion prediction accuracy transfers to the real world, its value is not reflected in navigation performance, measured with respect to safety and efficiency; in fact, the MPC performs indistinguishably even when using a simple constant-velocity prediction model, suggesting that substantial model improvements might be needed to yield significant gains for crowd navigation tasks. Footage from our experiments can be found at https://youtu.be/mzFiXg8KsZ0.
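To make the constant-velocity baseline concrete, here is a minimal sketch of such a predictor: it estimates a velocity from the last two observed positions and extrapolates it linearly. The function name, the two-point velocity estimate, and the sample values are assumptions for illustration; the paper's exact formulation is not given in the abstract.

```python
def constant_velocity_prediction(history, horizon, dt=1.0):
    """Extrapolate future 2D positions assuming the latest velocity stays constant.

    history: observed (x, y) positions sampled every dt seconds (at least two).
    horizon: number of future steps to predict.
    """
    if len(history) < 2:
        raise ValueError("need at least two observations to estimate velocity")
    (x0, y0), (x1, y1) = history[-2], history[-1]
    vx, vy = (x1 - x0) / dt, (y1 - y0) / dt
    return [(x1 + vx * dt * k, y1 + vy * dt * k) for k in range(1, horizon + 1)]


# Hypothetical pedestrian observed at 2 Hz, moving at (1.0, 0.5) m/s.
history = [(0.0, 0.0), (0.5, 0.25)]
future = constant_velocity_prediction(history, horizon=3, dt=0.5)
print(future)
```

A predictor of this kind needs no training data, which is part of why it serves as a natural point of comparison for learned models.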

PuSHR: A Multirobot System for Nonprehensile Rearrangement

Mar 02, 2023

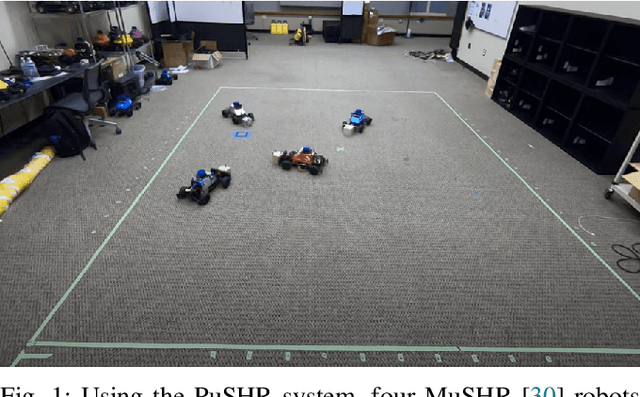

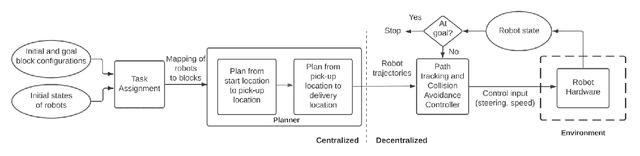

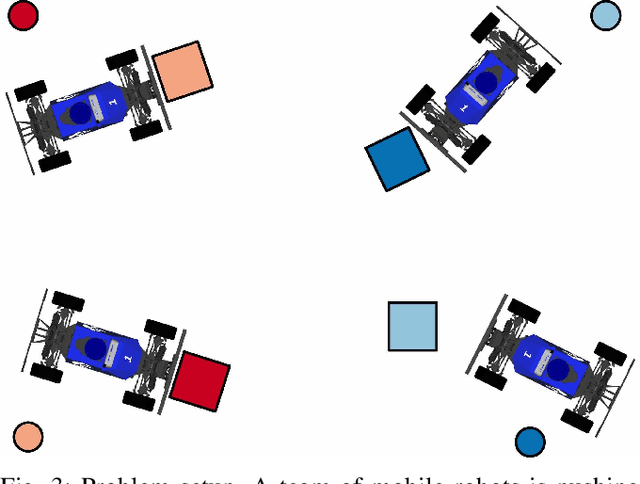

Abstract:We focus on the problem of rearranging a set of objects with a team of car-like robot pushers built using off-the-shelf components. Maintaining control of pushed objects while avoiding collisions in a tight space demands highly coordinated motion that is challenging to execute on constrained hardware. Centralized replanning approaches become intractable even for small-sized problems whereas decentralized approaches often get stuck in deadlocks. Our key insight is that by carefully assigning pushing tasks to robots, we could reduce the complexity of the rearrangement task, enabling robust performance via scalable decentralized control. Based on this insight, we built PuSHR, a system that optimally assigns pushing tasks and trajectories to robots offline, and performs trajectory tracking via decentralized control online. Through an ablation study in simulation, we demonstrate that PuSHR dominates baselines ranging from purely decentralized to fully centralized in terms of success rate and time efficiency across challenging tasks with up to 4 robots. Hardware experiments demonstrate the transfer of our system to the real world and highlight its robustness to model inaccuracies. Our code can be found at https://github.com/prl-mushr/pushr, and videos from our experiments at https://youtu.be/DIWmZerF_O8.
