Sriyash Poddar

Personalizing Reinforcement Learning from Human Feedback with Variational Preference Learning

Aug 19, 2024

Abstract: Reinforcement Learning from Human Feedback (RLHF) is a powerful paradigm for aligning foundation models to human values and preferences. However, current RLHF techniques cannot account for the naturally occurring differences in individual human preferences across a diverse population. When these differences arise, traditional RLHF frameworks simply average over them, leading to inaccurate rewards and poor performance for individual subgroups. To address the need for pluralistic alignment, we develop a class of multimodal RLHF methods. Our proposed techniques are based on a latent variable formulation - inferring a novel user-specific latent and learning reward models and policies conditioned on this latent without additional user-specific data. While conceptually simple, we show that in practice, this reward modeling requires careful algorithmic considerations around model architecture and reward scaling. To empirically validate our proposed technique, we first show that it can provide a way to combat underspecification in simulated control problems, inferring and optimizing user-specific reward functions. Next, we conduct experiments on pluralistic language datasets representing diverse user preferences and demonstrate improved reward function accuracy. We additionally show the benefits of this probabilistic framework in terms of measuring uncertainty, and actively learning user preferences. This work enables learning from diverse populations of users with divergent preferences, an important challenge that naturally occurs in problems from robot learning to foundation model alignment.
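The averaging failure described in this abstract can be made concrete with a minimal sketch. It assumes the Bradley-Terry preference model that is common in RLHF reward learning; the abstract does not name the model, so this is an assumption, and both function names are hypothetical. For a single response pair, the maximum-likelihood reward gap of one pooled reward model is the logit of the fraction of annotators who prefer the first response.

```python
import math


def pooled_reward_gap(frac_prefer_a: float) -> float:
    """MLE of r(a) - r(b) under an assumed Bradley-Terry model when a single
    reward model is fit to pooled labels for one pair (a, b).

    Requires 0 < frac_prefer_a < 1.
    """
    return math.log(frac_prefer_a / (1.0 - frac_prefer_a))


def preference_probability(reward_gap: float) -> float:
    """Bradley-Terry probability that a is preferred over b."""
    return 1.0 / (1.0 + math.exp(-reward_gap))


# Two equally sized groups with opposite preferences: half prefer a, half prefer b.
gap = pooled_reward_gap(0.5)
print(gap, preference_probability(gap))
```

With an even split the pooled reward gap is zero, so the single reward model is indifferent between the two responses and matches neither group. A latent-conditioned reward model, as the abstract proposes, would instead be free to assign each group its own gap.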

From Crowd Motion Prediction to Robot Navigation in Crowds

Mar 02, 2023

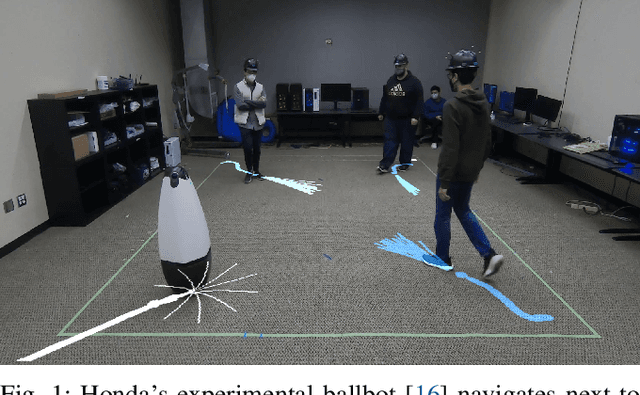

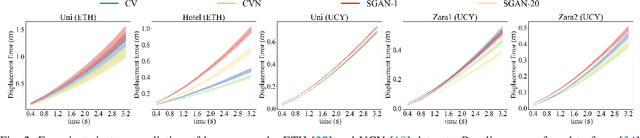

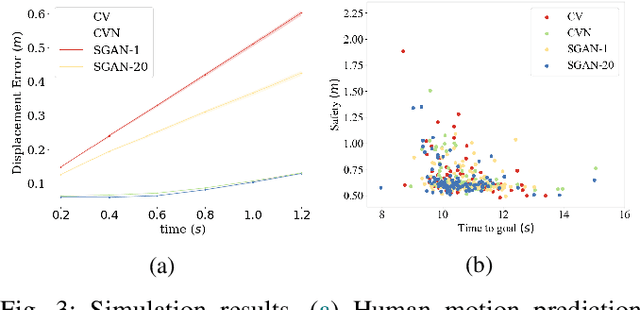

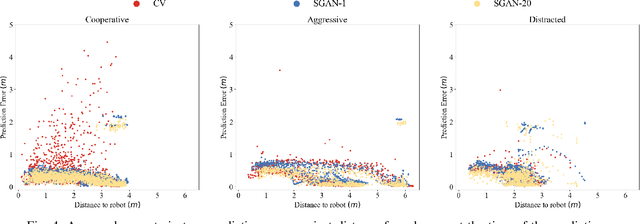

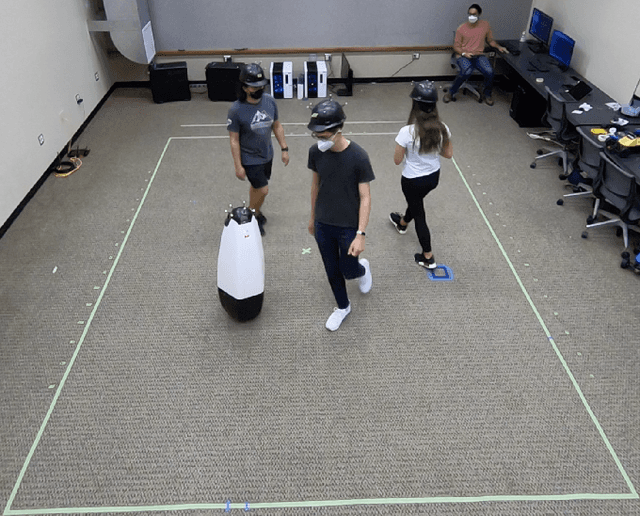

Abstract: We focus on robot navigation in crowded environments. To navigate safely and efficiently within crowds, robots need models for crowd motion prediction. Building such models is hard due to the high dimensionality of multiagent domains and the challenge of collecting or simulating interaction-rich crowd-robot demonstrations. While there has been important progress on models for offline pedestrian motion forecasting, transferring their performance to real robots is nontrivial due to close interaction settings and novelty effects on users. In this paper, we investigate the utility of a recent state-of-the-art motion prediction model (S-GAN) for crowd navigation tasks. We incorporate this model into a model predictive controller (MPC) and deploy it on a self-balancing robot which we subject to a diverse range of crowd behaviors in the lab. We demonstrate that while S-GAN motion prediction accuracy transfers to the real world, its value is not reflected in navigation performance, measured with respect to safety and efficiency; in fact, the MPC performs indistinguishably even when using a simple constant-velocity prediction model, suggesting that substantial model improvements might be needed to yield significant gains for crowd navigation tasks. Footage from our experiments can be found at https://youtu.be/mzFiXg8KsZ0.

Topology-Informed Model Predictive Control for Anticipatory Collision Avoidance on a Ballbot

Sep 10, 2021

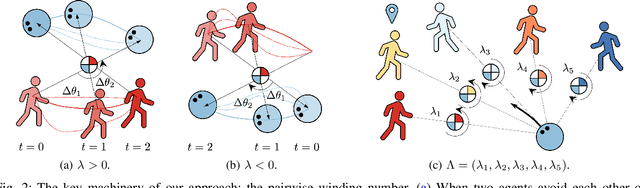

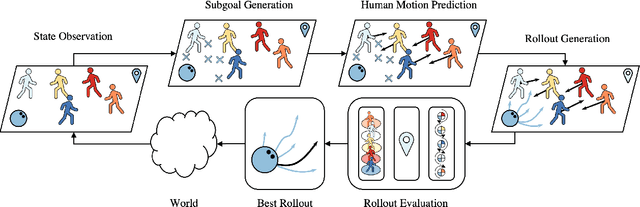

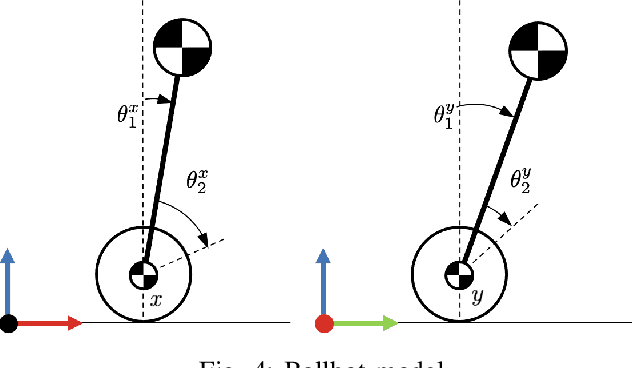

Abstract: We focus on the problem of planning safe and efficient motion for a ballbot (i.e., a dynamically balancing mobile robot), navigating in a crowded environment. The ballbot's design gives rise to human-readable motion which is valuable for crowd navigation. However, dynamic stabilization introduces kinematic constraints that severely limit the ability of the robot to execute aggressive maneuvers, complicating collision avoidance and respect for human personal space. Past works reduce the need for aggressive maneuvering by motivating anticipatory collision avoidance through the use of human motion prediction models. However, multiagent behavior prediction is hard due to the combinatorial structure of the space. Our key insight is that we can accomplish anticipatory multiagent collision avoidance without high-fidelity prediction models if we capture fundamental features of multiagent dynamics. To this end, we build a model predictive control architecture that employs a constant-velocity model of human motion prediction but monitors and proactively adapts to the unfolding homotopy class of crowd-robot dynamics by taking actions that maximize the pairwise winding numbers between the robot and each human agent. This results in robot motion that accomplishes statistically significantly higher clearances from the crowd compared to state-of-the-art baselines while maintaining similar levels of efficiency, across a variety of challenging physical scenarios and crowd simulators.
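The two ingredients named in this abstract, constant-velocity prediction and pairwise winding numbers, can be illustrated with a minimal planar sketch. This is not the paper's controller: the function names, the discretization, and the choice to measure winding as accumulated rotation of the robot-to-human displacement vector are assumptions made for illustration.

```python
import math


def constant_velocity_rollout(pos, vel, dt, steps):
    """Predict future (x, y) positions assuming the agent keeps its velocity.

    Returns `steps` positions, starting with the current one (k = 0).
    """
    return [(pos[0] + vel[0] * k * dt, pos[1] + vel[1] * k * dt)
            for k in range(steps)]


def winding_number(robot_xy, human_xy):
    """Signed number of turns made by the robot-to-human displacement vector
    over two time-aligned planar trajectories."""
    total = 0.0
    prev_angle = None
    for (rx, ry), (hx, hy) in zip(robot_xy, human_xy):
        angle = math.atan2(hy - ry, hx - rx)
        if prev_angle is not None:
            delta = angle - prev_angle
            # Wrap the increment into [-pi, pi) so crossing the branch cut
            # of atan2 does not add a spurious full turn.
            delta = (delta + math.pi) % (2.0 * math.pi) - math.pi
            total += delta
        prev_angle = angle
    return total / (2.0 * math.pi)


# A stationary human at (0, 1) and a robot passing underneath along the x-axis.
human = constant_velocity_rollout((0.0, 1.0), (0.0, 0.0), dt=0.5, steps=3)
robot = [(-1.0, 0.0), (0.0, 0.0), (1.0, 0.0)]
print(winding_number(robot, human))
```

The sign of the result indicates the side on which the robot passes the human, and a larger magnitude corresponds to a more decisive pass. A planner in this spirit could score candidate robot trajectories by these pairwise values, though how the paper combines them with its other cost terms is not stated in the abstract.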

Understanding the Role of Affect Dimensions in Detecting Emotions from Tweets: A Multi-task Approach

May 09, 2021

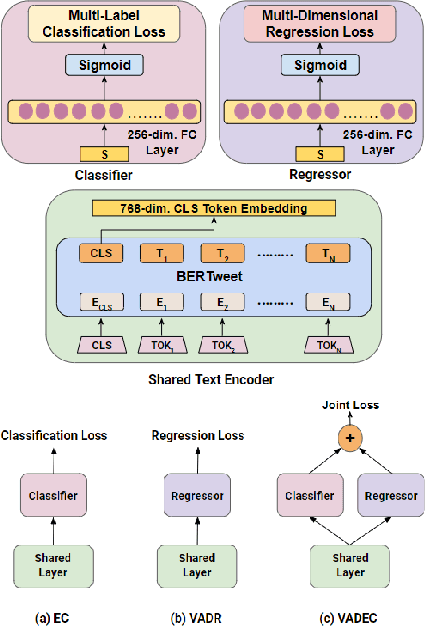

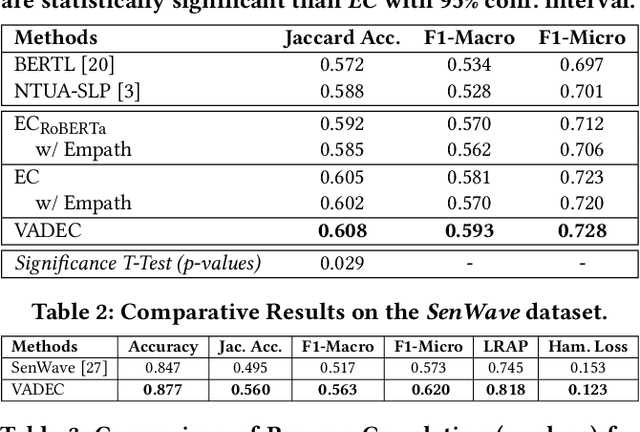

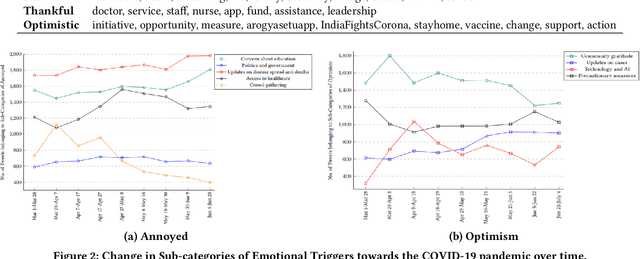

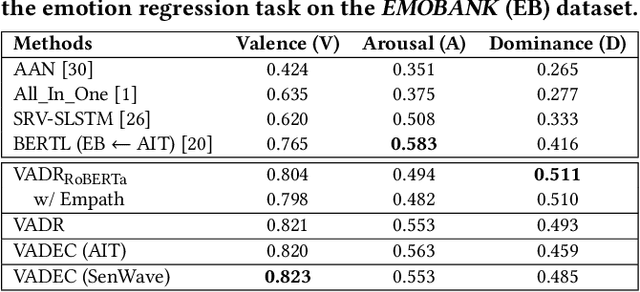

Abstract: We propose VADEC, a multi-task framework that exploits the correlation between the categorical and dimensional models of emotion representation for better subjectivity analysis. Focusing primarily on the effective detection of emotions from tweets, we jointly train multi-label emotion classification and multi-dimensional emotion regression, thereby utilizing the inter-relatedness between the tasks. Co-training especially helps in improving the performance of the classification task as we outperform the strongest baselines with 3.4%, 11%, and 3.9% gains in Jaccard Accuracy, Macro-F1, and Micro-F1 scores respectively on the AIT dataset. We also achieve state-of-the-art results with 11.3% gains averaged over six different metrics on the SenWave dataset. For the regression task, VADEC, when trained with SenWave, achieves 7.6% and 16.5% gains in Pearson Correlation scores over the current state-of-the-art on the EMOBANK dataset for the Valence (V) and Dominance (D) affect dimensions respectively. We conclude our work with a case study on COVID-19 tweets posted by Indians that further helps in establishing the efficacy of our proposed solution.
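For readers unfamiliar with the Jaccard Accuracy metric reported in this abstract, here is a minimal sketch of the usual sample-averaged definition for multi-label classification: the size of the intersection of gold and predicted label sets divided by the size of their union, averaged over examples. The function name is hypothetical, and scoring an example with no gold and no predicted labels as 1.0 is an assumed convention, not something the abstract specifies.

```python
def jaccard_accuracy(true_labels, pred_labels):
    """Mean over examples of |gold ∩ predicted| / |gold ∪ predicted|."""
    scores = []
    for gold, pred in zip(true_labels, pred_labels):
        gold, pred = set(gold), set(pred)
        union = gold | pred
        # Assumed convention: two empty label sets count as a perfect match.
        scores.append(len(gold & pred) / len(union) if union else 1.0)
    return sum(scores) / len(scores)


gold = [{"joy", "optimism"}, {"anger"}]
pred = [{"joy"}, {"anger"}]
print(jaccard_accuracy(gold, pred))  # (0.5 + 1.0) / 2 = 0.75
```

Unlike exact-match accuracy, this metric gives partial credit when a prediction recovers only some of a tweet's emotions, which is why it is commonly paired with Macro-F1 and Micro-F1 in multi-label settings.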

How Have We Reacted To The COVID-19 Pandemic? Analyzing Changing Indian Emotions Through The Lens of Twitter

Aug 20, 2020

Abstract: Since its outbreak, the ongoing COVID-19 pandemic has caused unprecedented losses to human lives and economies around the world. As of 18th July 2020, the World Health Organization (WHO) has reported more than 13 million confirmed cases including close to 600,000 deaths across 216 countries and territories. Despite several government measures, India has gradually moved up the ranks to become the third worst-hit nation by the pandemic after the US and Brazil, thus causing widespread anxiety and fear among her citizens. As the majority of the world's population continues to remain confined to their homes, more and more people have started relying on social media platforms such as Twitter for expressing their feelings and attitudes towards various aspects of the pandemic. With rising concerns about mental well-being, it becomes imperative to analyze the dynamics of public affect in order to anticipate any potential threats and take precautionary measures. Since affective states of the human mind are more nuanced than meager binary sentiments, here we propose a deep learning-based system to identify people's emotions from their tweets. We achieve competitive results on two benchmark datasets for multi-label emotion classification. We then use our system to analyze the evolution of emotional responses among Indians as the pandemic continues to spread its wings. We also study the development of salient factors contributing towards the changes in attitudes over time. Finally, we discuss directions to further improve our work and hope that our analysis can aid in better public health monitoring.
