Kris Hauser

Department of Computer Science at the University of Illinois at Urbana-Champaign, Urbana, Illinois, USA

Flexible Multitask Learning with Factorized Diffusion Policy

Dec 26, 2025

Abstract:Multitask learning poses significant challenges due to the highly multimodal and diverse nature of robot action distributions. Fitting policies to these complex task distributions is difficult, and existing monolithic models often underfit the action distribution and lack the flexibility required for efficient adaptation. We introduce a novel modular diffusion policy framework that factorizes complex action distributions into a composition of specialized diffusion models, each capturing a distinct sub-mode of the behavior space for a more effective overall policy. In addition, this modular structure enables flexible policy adaptation to new tasks by adding or fine-tuning components, which inherently mitigates catastrophic forgetting. Empirically, across both simulation and real-world robotic manipulation settings, we show that our method consistently outperforms strong modular and monolithic baselines.

Particle-Grid Neural Dynamics for Learning Deformable Object Models from RGB-D Videos

Jun 18, 2025

Abstract:Modeling the dynamics of deformable objects is challenging due to their diverse physical properties and the difficulty of estimating states from limited visual information. We address these challenges with a neural dynamics framework that combines object particles and spatial grids in a hybrid representation. Our particle-grid model captures global shape and motion information while predicting dense particle movements, enabling the modeling of objects with varied shapes and materials. Particles represent object shapes, while the spatial grid discretizes the 3D space to ensure spatial continuity and enhance learning efficiency. Coupled with Gaussian Splatting for visual rendering, our framework achieves a fully learning-based digital twin of deformable objects and generates 3D action-conditioned videos. Through experiments, we demonstrate that our model learns the dynamics of diverse objects -- such as ropes, cloths, stuffed animals, and paper bags -- from sparse-view RGB-D recordings of robot-object interactions, while also generalizing at the category level to unseen instances. Our approach outperforms state-of-the-art learning-based and physics-based simulators, particularly in scenarios with limited camera views. Furthermore, we showcase the utility of our learned models in model-based planning, enabling goal-conditioned object manipulation across a range of tasks. The project page is available at https://kywind.github.io/pgnd .

Localized Graph-Based Neural Dynamics Models for Terrain Manipulation

Mar 30, 2025

Abstract:Predictive models can be particularly helpful for robots to effectively manipulate terrains on construction sites and extraterrestrial surfaces. However, terrain state representations become extremely high-dimensional, especially when capturing fine-resolution details and when depth is unknown or unbounded. This paper introduces a learning-based approach for terrain dynamics modeling and manipulation, leveraging the Graph-based Neural Dynamics (GBND) framework to represent terrain deformation as motion of a graph of particles. Based on the principle that the moving portion of a terrain is usually localized, our approach builds a large terrain graph (potentially millions of particles) but identifies only a very small active subgraph (hundreds of particles) for predicting the outcomes of robot-terrain interaction. To minimize the size of the active subgraph, we introduce a learning-based approach that identifies a small region of interest (RoI) based on the robot's control inputs and the current scene. We also introduce a novel domain boundary feature encoding that allows GBNDs to perform accurate dynamics prediction in the RoI interior while avoiding particle penetration through RoI boundaries. Our proposed method is orders of magnitude faster than naive GBND and achieves better overall prediction accuracy. We further evaluate our framework on excavation and shaping tasks on terrain with different granularity.
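
The localization idea in this abstract (a large particle graph, of which only a small active subgraph is simulated) can be illustrated with a minimal sketch. This is not the paper's method: the abstract describes a learned RoI predictor, whereas the sketch below assumes a fixed axis-aligned box around the tool position. The names `Box`, `roi_around_tool`, and `active_subgraph` are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]


@dataclass(frozen=True)
class Box:
    """Axis-aligned region of interest (assumed stand-in for a learned RoI)."""
    lo: Point
    hi: Point

    def contains(self, p: Point) -> bool:
        return all(l <= x <= h for l, x, h in zip(self.lo, p, self.hi))


def roi_around_tool(tool_pos: Point, half_extent: float) -> Box:
    """Build a cubic RoI centered on the tool (hypothetical heuristic)."""
    lo = tuple(c - half_extent for c in tool_pos)
    hi = tuple(c + half_extent for c in tool_pos)
    return Box(lo, hi)


def active_subgraph(
    particles: Sequence[Point], roi: Box, radius: float
) -> Tuple[List[int], List[Tuple[int, int]]]:
    """Select particles inside the RoI and connect pairs closer than `radius`.

    Returns indices into the full particle list, so predictions made on the
    small subgraph can be written back into the large terrain state.
    """
    active = [i for i, p in enumerate(particles) if roi.contains(p)]
    edges = []
    for k, i in enumerate(active):
        for j in active[k + 1:]:
            d2 = sum((a - b) ** 2 for a, b in zip(particles[i], particles[j]))
            if d2 <= radius ** 2:
                edges.append((i, j))
    return active, edges


# Usage: a flat 10 x 10 terrain patch with 0.1 spacing, tool near the center.
terrain = [(ix * 0.1, iy * 0.1, 0.0) for ix in range(10) for iy in range(10)]
roi = roi_around_tool((0.45, 0.45, 0.0), half_extent=0.1)
active, edges = active_subgraph(terrain, roi, radius=0.11)
```

Only the particles in `active` would be passed to a dynamics model; the rest of the terrain is treated as static. The pairwise edge search is quadratic in the number of active particles, which is acceptable only because the active set is small.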

Map Space Belief Prediction for Manipulation-Enhanced Mapping

Feb 28, 2025

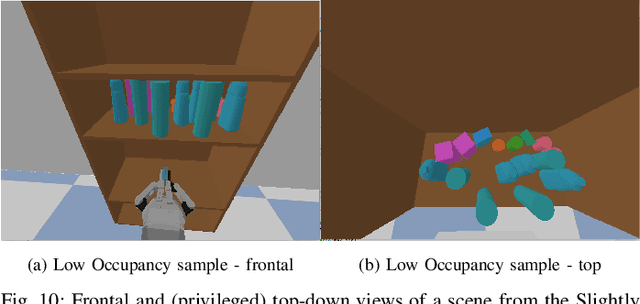

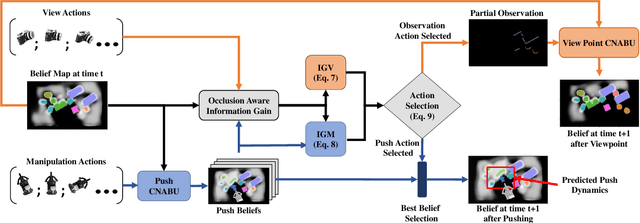

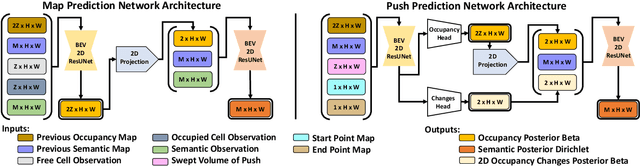

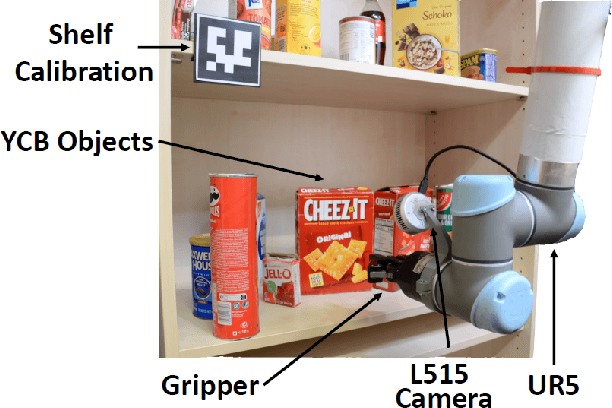

Abstract:Searching for objects in cluttered environments requires selecting efficient viewpoints and manipulation actions to remove occlusions and reduce uncertainty in object locations, shapes, and categories. In this work, we address the problem of manipulation-enhanced semantic mapping, where a robot has to efficiently identify all objects in a cluttered shelf. Although Partially Observable Markov Decision Processes (POMDPs) are standard for decision-making under uncertainty, representing unstructured interactive worlds remains challenging in this formalism. To tackle this, we define a POMDP whose belief is summarized by a metric-semantic grid map and propose a novel framework that uses neural networks to perform map-space belief updates to reason efficiently and simultaneously about object geometries, locations, categories, occlusions, and manipulation physics. Further, to enable accurate information gain analysis, the learned belief updates should maintain calibrated estimates of uncertainty. Therefore, we propose Calibrated Neural-Accelerated Belief Updates (CNABUs) to learn a belief propagation model that generalizes to novel scenarios and provides confidence-calibrated predictions for unknown areas. Our experiments show that our novel POMDP planner improves map completeness and accuracy over existing methods in challenging simulations and successfully transfers to real-world cluttered shelves in zero-shot fashion.
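
To make "a belief summarized by a grid map" concrete, here is a minimal sketch of the classical per-cell Bayes update and the entropy measure commonly used for information gain. It is not the CNABU method, which the abstract describes as a learned neural update. The class labels and the likelihood values are assumptions made up for illustration.

```python
import math
from typing import Dict, List, Tuple

Cell = Tuple[int, int]
CLASSES = ["free", "mug", "box"]  # hypothetical semantic categories


def bayes_update(prior: List[float], likelihood: List[float]) -> List[float]:
    """Per-cell categorical Bayes rule: posterior is prior * likelihood, renormalized."""
    unnorm = [p * l for p, l in zip(prior, likelihood)]
    total = sum(unnorm)
    if total == 0.0:
        raise ValueError("observation has zero probability under the prior")
    return [u / total for u in unnorm]


def entropy(dist: List[float]) -> float:
    """Shannon entropy in nats; lower means a more certain cell."""
    return -sum(p * math.log(p) for p in dist if p > 0.0)


def map_entropy(belief: Dict[Cell, List[float]]) -> float:
    """Total uncertainty of the map, summed over cells."""
    return sum(entropy(d) for d in belief.values())


# Usage: a 2 x 2 map starting from a uniform belief over classes.
uniform = [1.0 / len(CLASSES)] * len(CLASSES)
belief = {(r, c): list(uniform) for r in range(2) for c in range(2)}
before = map_entropy(belief)

# Assumed sensor likelihood for cell (0, 1): the detector favors "mug".
belief[(0, 1)] = bayes_update(belief[(0, 1)], [0.1, 0.8, 0.1])
after = map_entropy(belief)
info_gain = before - after
```

The entropy drop `info_gain` is only meaningful if the per-cell probabilities are calibrated, which is the property the abstract emphasizes for learned updates.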

Autonomous Excavation of Challenging Terrain using Oscillatory Primitives and Adaptive Impedance Control

Sep 26, 2024

Abstract:This paper addresses the challenge of autonomous excavation of challenging terrains, in particular those that are prone to jamming and inter-particle adhesion when tackled by a standard penetrate-drag-scoop motion pattern. Inspired by human excavation strategies, our approach incorporates oscillatory rotation elements -- including swivel, twist, and dive motions -- to break up compacted, tangled grains and reduce jamming. We also present an adaptive impedance control method, the Reactive Attractor Impedance Controller (RAIC), that adapts a motion trajectory to unexpected forces during loading in a manner that tracks a trajectory closely when loads are low, but avoids excessive loads when significant resistance is met. Our method is evaluated on four terrains using a robotic arm, demonstrating improved excavation performance across multiple metrics, including volume scooped, protective stop rate, and trajectory completion percentage.

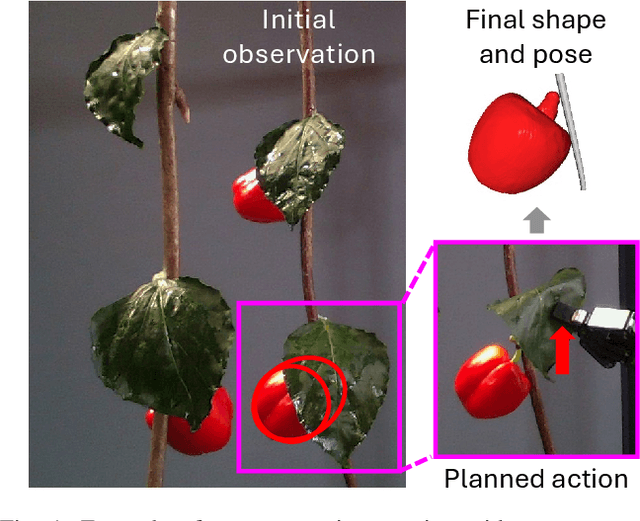

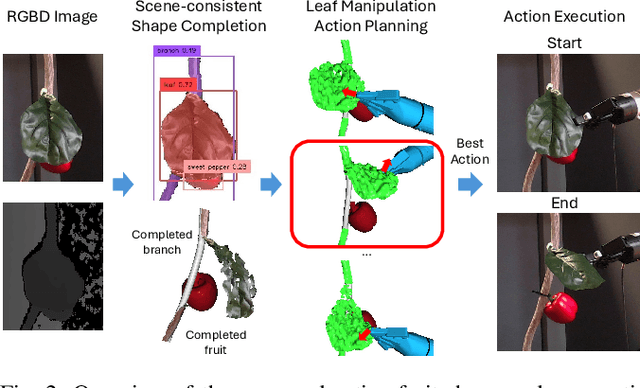

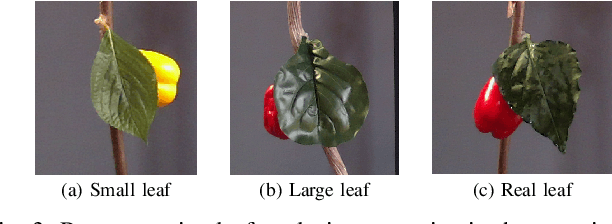

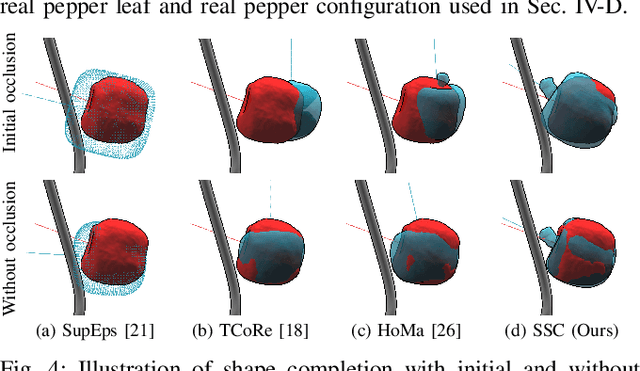

Safe Leaf Manipulation for Accurate Shape and Pose Estimation of Occluded Fruits

Sep 25, 2024

Abstract:Fruit monitoring plays an important role in crop management, and rising global fruit consumption combined with labor shortages necessitates automated monitoring with robots. However, occlusions from plant foliage often hinder accurate shape and pose estimation. Therefore, we propose an active fruit shape and pose estimation method that physically manipulates occluding leaves to reveal hidden fruits. This paper introduces a framework that plans robot actions to maximize visibility and minimize leaf damage. We developed a novel scene-consistent shape completion technique to improve fruit estimation under heavy occlusion and utilize a perception-driven deformation graph model to predict leaf deformation during planning. Experiments on artificial and real sweet pepper plants demonstrate that our method enables robots to safely move leaves aside, exposing fruits for accurate shape and pose estimation, outperforming baseline methods. Project page: https://shaoxiongyao.github.io/lmap-ssc/.

Few-shot Scooping Under Domain Shift via Simulated Maximal Deployment Gaps

Aug 06, 2024

Abstract:Autonomous lander missions on extraterrestrial bodies need to sample granular materials while coping with domain shifts, even when sampling strategies are extensively tuned on Earth. To tackle this challenge, this paper studies the few-shot scooping problem and proposes a vision-based adaptive scooping strategy that uses the deep kernel Gaussian process method trained with a novel meta-training strategy to learn online from very limited experience on out-of-distribution target terrains. Our Deep Kernel Calibration with Maximal Deployment Gaps (kCMD) strategy explicitly trains a deep kernel model to adapt to large domain shifts by creating simulated maximal deployment gaps from an offline training dataset and training models to overcome these deployment gaps during training. Employed in a Bayesian Optimization sequential decision-making framework, the proposed method allows the robot to perform high-quality scooping actions on out-of-distribution terrains after a few attempts, significantly outperforming non-adaptive methods proposed in the excavation literature as well as other state-of-the-art meta-learning methods. The proposed method also demonstrates zero-shot transfer capability, successfully adapting to the NASA OWLAT platform, which serves as a state-of-the-art simulator for potential future planetary missions. These results demonstrate the potential of training deep models with simulated deployment gaps for more generalizable meta-learning in high-capacity models. Furthermore, they highlight the promise of our method in autonomous lander sampling missions by enabling landers to overcome the deployment gap between Earth and extraterrestrial bodies.

AdaptiGraph: Material-Adaptive Graph-Based Neural Dynamics for Robotic Manipulation

Jul 10, 2024

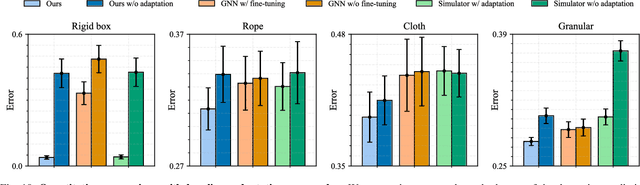

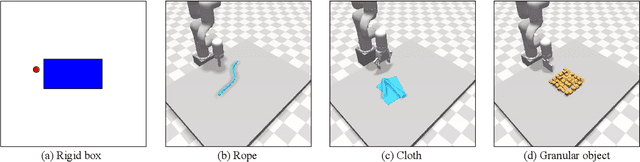

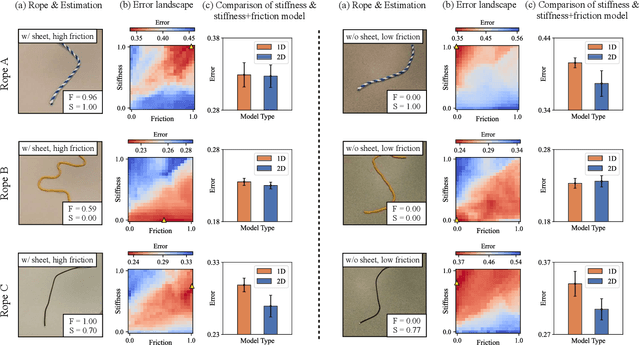

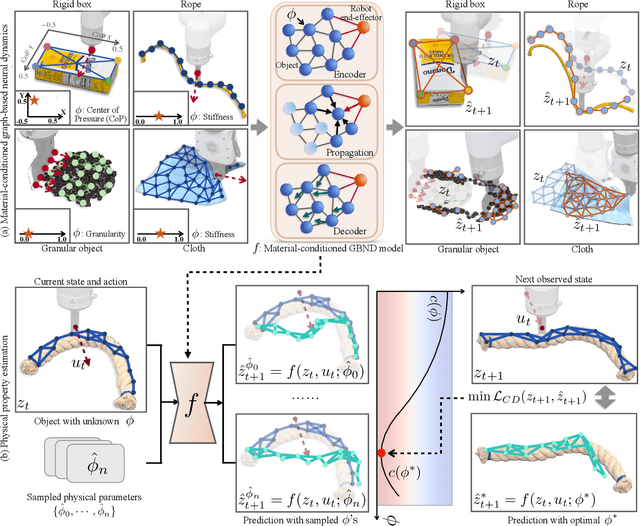

Abstract:Predictive models are a crucial component of many robotic systems. Yet, constructing accurate predictive models for a variety of deformable objects, especially those with unknown physical properties, remains a significant challenge. This paper introduces AdaptiGraph, a learning-based dynamics modeling approach that enables robots to predict, adapt to, and control a wide array of challenging deformable materials with unknown physical properties. AdaptiGraph leverages the highly flexible graph-based neural dynamics (GBND) framework, which represents material bits as particles and employs a graph neural network (GNN) to predict particle motion. Its key innovation is a unified physical property-conditioned GBND model capable of predicting the motions of diverse materials with varying physical properties without retraining. Upon encountering new materials during online deployment, AdaptiGraph utilizes a physical property optimization process for a few-shot adaptation of the model, enhancing its fit to the observed interaction data. The adapted models can precisely simulate the dynamics and predict the motion of various deformable materials, such as ropes, granular media, rigid boxes, and cloth, while adapting to different physical properties, including stiffness, granular size, and center of pressure. On prediction and manipulation tasks involving a diverse set of real-world deformable objects, our method exhibits superior prediction accuracy and task proficiency over non-material-conditioned and non-adaptive models. The project page is available at https://robopil.github.io/adaptigraph/ .

Characterizing the Complexity of Social Robot Navigation Scenarios

May 18, 2024

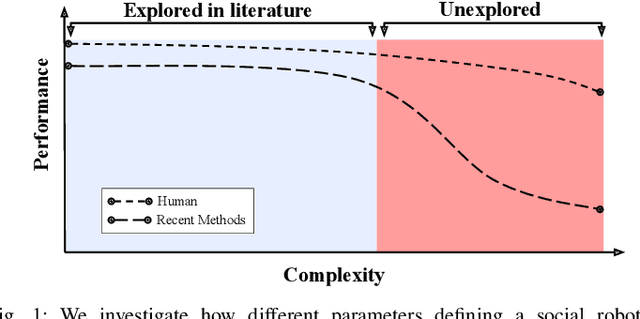

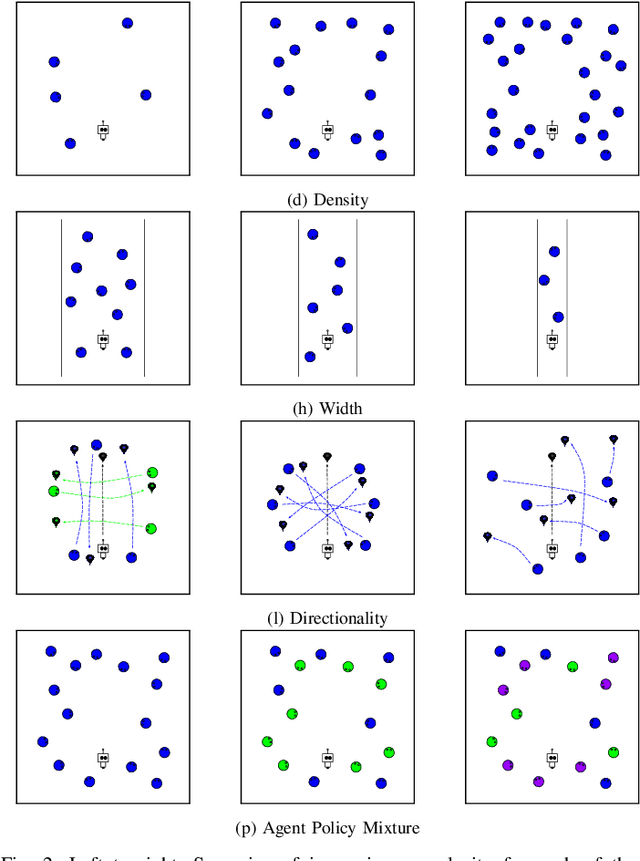

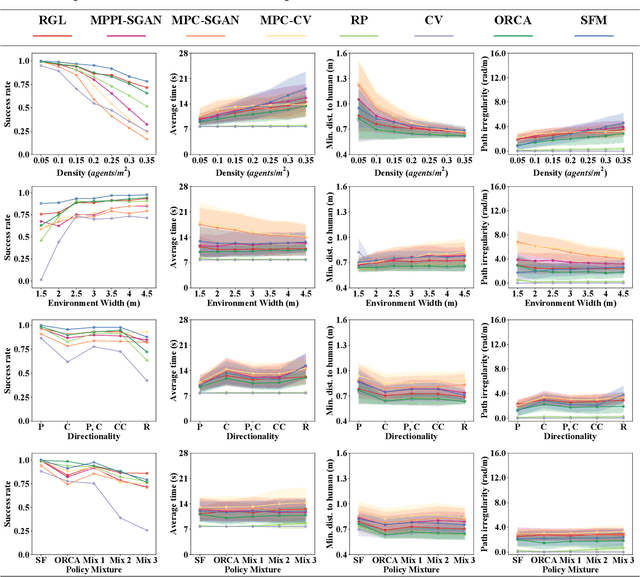

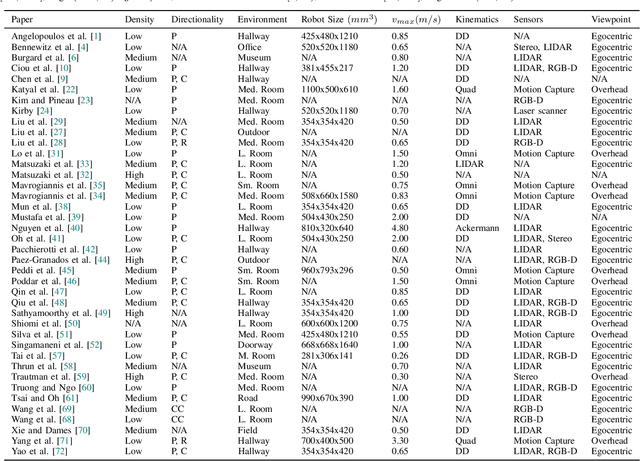

Abstract:Social robot navigation algorithms are often demonstrated in overly simplified scenarios, preventing the extraction of practical insights about their relevance to real-world domains. Our key insight is that an understanding of the inherent complexity of a social robot navigation scenario could help characterize the limitations of existing navigation algorithms and provide actionable directions for improvement. Through an exploration of recent literature, we identify a series of factors contributing to the complexity of a scenario, disambiguating between contextual and robot-related ones. We then conduct a simulation study investigating how manipulations of contextual factors impact the performance of a variety of navigation algorithms. We find that dense and narrow environments correlate most strongly with performance drops, while the heterogeneity of agent policies and directionality of interactions have a less pronounced effect. This motivates a shift towards developing and testing algorithms under higher-complexity settings.

Efficient Automatic Tuning for Data-driven Model Predictive Control via Meta-Learning

Mar 30, 2024

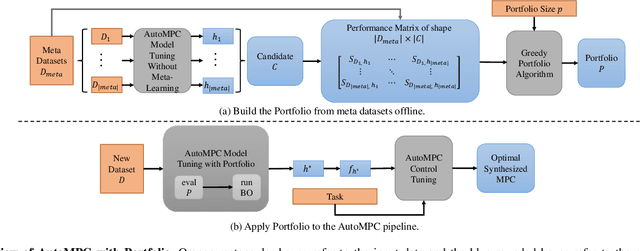

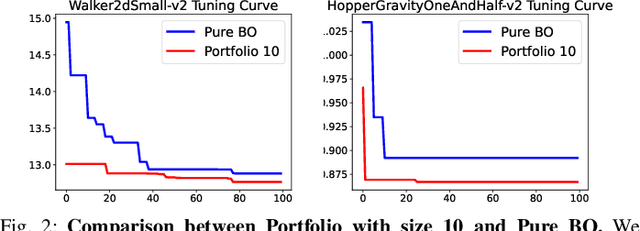

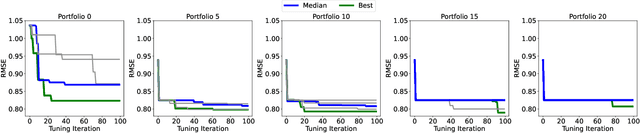

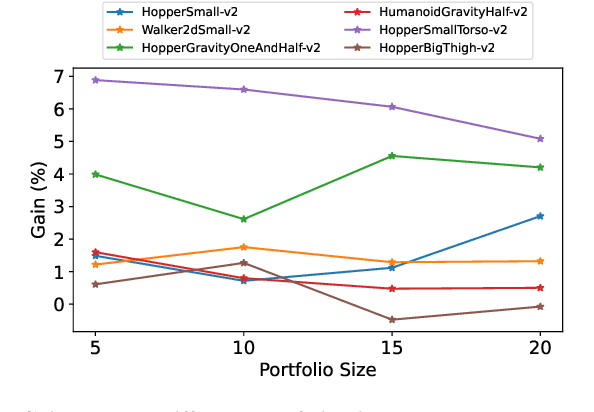

Abstract:AutoMPC is a Python package that automates and optimizes data-driven model predictive control. However, it can be computationally expensive and unstable when exploring large search spaces using pure Bayesian Optimization (BO). To address these issues, this paper proposes a meta-learning approach called Portfolio that improves AutoMPC's efficiency and stability by warmstarting BO. Portfolio optimizes initial designs for BO using a diverse set of configurations from previous tasks and stabilizes the tuning process by fixing initial configurations instead of selecting them randomly. Experimental results demonstrate that Portfolio outperforms pure BO in finding desirable solutions for AutoMPC within limited computational resources on 11 nonlinear control simulation benchmarks and one physical underwater soft robot dataset.
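
The warmstarting idea can be sketched in a few lines: evaluate a fixed portfolio of configurations first, in a fixed order, and only then hand control to the sequential optimizer. The sketch below does not use AutoMPC's API, and it does not implement Bayesian Optimization. As a loudly labeled assumption, a seeded local perturbation of the best configuration stands in for BO's surrogate-driven proposals. The names `warmstarted_search`, `make_local_proposer`, and the configuration keys are hypothetical.

```python
import random
from typing import Callable, Dict, List, Tuple

Config = Dict[str, float]
History = List[Tuple[float, Config]]


def warmstarted_search(
    objective: Callable[[Config], float],
    portfolio: List[Config],
    propose: Callable[[History], Config],
    budget: int,
) -> Tuple[float, Config, History]:
    """Minimize `objective`: portfolio configs first, then proposed ones."""
    if not portfolio:
        raise ValueError("portfolio must contain at least one configuration")
    history: History = []
    # Fixed initial designs: the same configs in the same order on every run.
    for config in portfolio[:budget]:
        history.append((objective(config), config))
    # Remaining budget goes to the sequential optimizer (stand-in below).
    while len(history) < budget:
        config = propose(history)
        history.append((objective(config), config))
    best_cost, best_config = min(history, key=lambda t: t[0])
    return best_cost, best_config, history


def make_local_proposer(rng: random.Random, scale: float = 0.1):
    """Stand-in for BO: perturb the best config seen so far (an assumption)."""
    def propose(history: History) -> Config:
        _, best = min(history, key=lambda t: t[0])
        return {k: v + rng.gauss(0.0, scale) for k, v in best.items()}
    return propose


# Usage with a toy quadratic tuning cost (hypothetical parameters).
def toy_cost(cfg: Config) -> float:
    return (cfg["gain"] - 1.0) ** 2 + (cfg["damping"] - 0.5) ** 2


portfolio = [{"gain": 0.8, "damping": 0.4}, {"gain": 2.0, "damping": 1.0}]
best_cost, best_config, history = warmstarted_search(
    toy_cost, portfolio, make_local_proposer(random.Random(0)), budget=20
)
```

Because the initial configurations are fixed rather than sampled, the first evaluations are identical across runs, which is the stabilizing effect the abstract attributes to Portfolio.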
