Lantao Liu

Navigating the Wild: Pareto-Optimal Visual Decision-Making in Image Space

Nov 11, 2025

Abstract:Navigating complex real-world environments requires semantic understanding and adaptive decision-making. Traditional reactive methods without maps often fail in cluttered settings, map-based approaches demand heavy mapping effort, and learning-based solutions rely on large datasets with limited generalization. To address these challenges, we present Pareto-Optimal Visual Navigation, a lightweight image-space framework that combines data-driven semantics, Pareto-optimal decision-making, and visual servoing for real-time navigation.
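As a rough illustration of what Pareto-optimal selection means in general, the sketch below filters a set of candidates down to the non-dominated ones. The two objectives and the candidate values are hypothetical and are not taken from the paper; the abstract does not specify how the framework scores or selects candidates.

```python
from typing import List, Sequence


def pareto_front(costs: Sequence[Sequence[float]]) -> List[int]:
    """Return indices of non-dominated candidates, assuming every objective is minimized."""
    front = []
    for i, ci in enumerate(costs):
        # Candidate i is dominated if some other candidate is no worse in
        # every objective and strictly better in at least one.
        dominated = any(
            all(a <= b for a, b in zip(cj, ci)) and any(a < b for a, b in zip(cj, ci))
            for j, cj in enumerate(costs)
            if j != i
        )
        if not dominated:
            front.append(i)
    return front


# Hypothetical (traversal cost, deviation from goal heading) pairs, made up for illustration.
candidates = [(0.2, 0.9), (0.4, 0.4), (0.9, 0.1), (0.5, 0.6), (0.9, 0.9)]
front = pareto_front(candidates)
print(front)
```

Candidates left on the front represent different trade-offs between the objectives; picking one of them still requires a preference or tie-breaking rule, which this sketch leaves out.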

AFRDA: Attentive Feature Refinement for Domain Adaptive Semantic Segmentation

Jul 23, 2025

Abstract:In Unsupervised Domain Adaptive Semantic Segmentation (UDA-SS), a model is trained on labeled source domain data (e.g., synthetic images) and adapted to an unlabeled target domain (e.g., real-world images) without access to target annotations. Existing UDA-SS methods often struggle to balance fine-grained local details with global contextual information, leading to segmentation errors in complex regions. To address this, we introduce the Adaptive Feature Refinement (AFR) module, which enhances segmentation accuracy by refining high-resolution features using semantic priors from low-resolution logits. AFR also integrates high-frequency components, which capture fine-grained structures and provide crucial boundary information, improving object delineation. Additionally, AFR adaptively balances local and global information through uncertainty-driven attention, reducing misclassifications. Its lightweight design allows seamless integration into HRDA-based UDA methods, leading to state-of-the-art segmentation performance. Our approach improves existing UDA-SS methods by 1.05% mIoU on GTA V → Cityscapes and 1.04% mIoU on Synthia → Cityscapes. The implementation of our framework is available at: https://github.com/Masrur02/AFRDA

Situationally-Aware Dynamics Learning

May 26, 2025

Abstract:Autonomous robots operating in complex, unstructured environments face significant challenges due to latent, unobserved factors that obscure their understanding of both their internal state and the external world. Addressing this challenge would enable robots to develop a more profound grasp of their operational context. To tackle this, we propose a novel framework for online learning of hidden state representations, with which the robots can adapt in real-time to uncertain and dynamic conditions that would otherwise be ambiguous and result in suboptimal or erroneous behaviors. Our approach is formalized as a Generalized Hidden Parameter Markov Decision Process, which explicitly models the influence of unobserved parameters on both transition dynamics and reward structures. Our core innovation lies in learning online the joint distribution of state transitions, which serves as an expressive representation of latent ego and environmental factors. This probabilistic approach supports the identification of, and adaptation to, different operational situations, improving robustness and safety. Through a multivariate extension of Bayesian Online Changepoint Detection, our method segments changes in the underlying data generating process governing the robot's dynamics. The robot's transition model is then informed with a symbolic representation of the current situation derived from the joint distribution of the latest state transitions, enabling adaptive and context-aware decision-making. To showcase the real-world effectiveness, we validate our approach in the challenging task of unstructured terrain navigation, where unmodeled and unmeasured terrain characteristics can significantly impact the robot's motion. Extensive experiments in both simulation and the real world reveal significant improvements in data efficiency, policy performance, and the emergence of safer, adaptive navigation strategies.

Action Flow Matching for Continual Robot Learning

Apr 25, 2025

Abstract:Continual learning in robotics seeks systems that can constantly adapt to changing environments and tasks, mirroring human adaptability. A key challenge is refining dynamics models, essential for planning and control, while addressing issues such as safe adaptation, catastrophic forgetting, outlier management, data efficiency, and balancing exploration with exploitation -- all within task and onboard resource constraints. Towards this goal, we introduce a generative framework leveraging flow matching for online robot dynamics model alignment. Rather than executing actions based on a misaligned model, our approach refines planned actions to better match those the robot would take if its model were well aligned. We find that by transforming the actions themselves rather than exploring with a misaligned model -- as is traditionally done -- the robot collects informative data more efficiently, thereby accelerating learning. Moreover, we validate that the method can handle an evolving and possibly imperfect model while reducing, if desired, the dependency on replay buffers or legacy model snapshots. We validate our approach using two platforms: an unmanned ground vehicle and a quadrotor. The results highlight the method's adaptability and efficiency, with a record 34.2% higher task success rate, demonstrating its potential towards enabling continual robot learning. Code: https://github.com/AlejandroMllo/action_flow_matching.

Neural Fidelity Calibration for Informative Sim-to-Real Adaptation

Apr 11, 2025

Abstract:Deep reinforcement learning can seamlessly transfer agile locomotion and navigation skills from the simulator to the real world. However, bridging the sim-to-real gap with domain randomization or adversarial methods often demands expert physics knowledge to ensure policy robustness. Even so, cutting-edge simulators may fall short of capturing every real-world detail, and the reconstructed environment may introduce errors due to various perception uncertainties. To address these challenges, we propose Neural Fidelity Calibration (NFC), a novel framework that employs conditional score-based diffusion models to calibrate simulator physical coefficients and residual fidelity domains online during robot execution. Specifically, the residual fidelity reflects the simulation model shift relative to the real-world dynamics and captures the uncertainty of the perceived environment, enabling us to sample realistic environments under the inferred distribution for policy fine-tuning. Our framework is informative and adaptive in three key ways: (a) we fine-tune the pretrained policy only under anomalous scenarios, (b) we build sequential NFC online with the pretrained NFC's proposal prior, reducing the diffusion model's training burden, and (c) when NFC uncertainty is high and may degrade policy improvement, we leverage optimistic exploration to enable hallucinated policy optimization. Our framework achieves superior simulator calibration precision compared to state-of-the-art methods across diverse robots with high-dimensional parametric spaces. We study the critical contribution of residual fidelity to policy improvement in simulation and real-world experiments. Notably, our approach demonstrates robust robot navigation under challenging real-world conditions, such as a broken wheel axle on snowy surfaces.

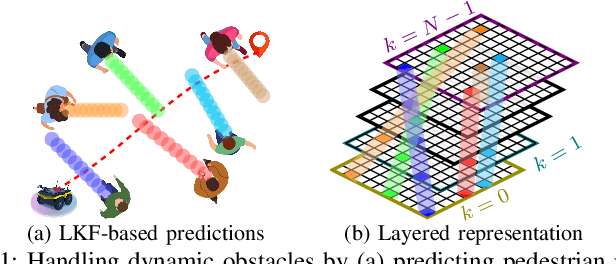

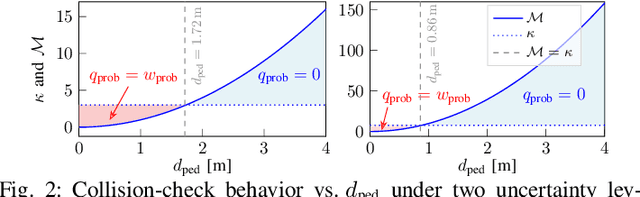

Chance-Constrained Sampling-Based MPC for Collision Avoidance in Uncertain Dynamic Environments

Jan 15, 2025

Abstract:Navigating safely in dynamic and uncertain environments is challenging due to uncertainties in perception and motion. This letter presents C2U-MPPI, a robust sampling-based Model Predictive Control (MPC) framework that addresses these challenges by leveraging the Unscented Model Predictive Path Integral (U-MPPI) control strategy with integrated probabilistic chance constraints, ensuring more reliable and efficient navigation under uncertainty. Unlike gradient-based MPC methods, our approach (i) avoids linearization of system dynamics and directly applies non-convex and nonlinear chance constraints, enabling more accurate and flexible optimization, and (ii) enhances computational efficiency by reformulating probabilistic constraints into a deterministic form and employing a layered dynamic obstacle representation, enabling real-time handling of multiple obstacles. Extensive experiments in simulated and real-world human-shared environments validate the effectiveness of our algorithm against baseline methods, showcasing its capability to generate feasible trajectories and control inputs that adhere to system dynamics and constraints in dynamic settings, enabled by an unscented-based sampling strategy and risk-sensitive trajectory evaluation. A supplementary video is available at: https://youtu.be/FptAhvJlQm8
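To give a sense of what "reformulating probabilistic constraints into a deterministic form" can look like, here is a minimal sketch of the textbook case: a linear constraint on a Gaussian random vector. This is an assumption made for illustration only; the abstract states that C2U-MPPI handles non-convex, nonlinear chance constraints, and its actual reformulation is not given here. The function name and all numbers are hypothetical.

```python
import math
from statistics import NormalDist


def chance_constraint_satisfied(a, mu, sigma, b, delta):
    """Check P(a^T x <= b) >= 1 - delta for x ~ N(mu, sigma), with 0 < delta < 1.

    For a Gaussian x, the probabilistic constraint is equivalent to the
    deterministic inequality  a^T mu + z * sqrt(a^T sigma a) <= b,
    where z is the (1 - delta) quantile of the standard normal.
    """
    n = len(a)
    mean = sum(a[i] * mu[i] for i in range(n))
    var = sum(a[i] * sigma[i][j] * a[j] for i in range(n) for j in range(n))
    z = NormalDist().inv_cdf(1.0 - delta)
    return mean + z * math.sqrt(var) <= b


# Hypothetical 2-D example: constrain the first coordinate to stay below b
# with at least 95% probability under unit covariance.
a = [1.0, 0.0]
mu = [0.0, 0.0]
sigma = [[1.0, 0.0], [0.0, 1.0]]
print(chance_constraint_satisfied(a, mu, sigma, b=2.0, delta=0.05))
print(chance_constraint_satisfied(a, mu, sigma, b=1.5, delta=0.05))
```

The deterministic form replaces a probability evaluation with a single inequality on the mean and covariance, which is what makes this kind of check cheap enough to apply to many sampled trajectories.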

Adaptive Diffusion Terrain Generator for Autonomous Uneven Terrain Navigation

Oct 14, 2024

Abstract:Model-free reinforcement learning has emerged as a powerful method for developing robust robot control policies capable of navigating through complex and unstructured terrains. The effectiveness of these methods hinges on two essential elements: (1) the use of massively parallel physics simulations to expedite policy training, and (2) an environment generator tasked with crafting sufficiently challenging yet attainable terrains to facilitate continuous policy improvement. Existing methods of environment generation often rely on heuristics constrained by a set of parameters, limiting the diversity and realism. In this work, we introduce the Adaptive Diffusion Terrain Generator (ADTG), a novel method that leverages Denoising Diffusion Probabilistic Models to dynamically expand existing training environments by adding more diverse and complex terrains adaptive to the current policy. ADTG guides the diffusion model's generation process through initial noise optimization, blending noise-corrupted terrains from existing training environments weighted by the policy's performance in each corresponding environment. By manipulating the noise corruption level, ADTG seamlessly transitions between generating similar terrains for policy fine-tuning and novel ones to expand training diversity. Our experiments show that the policy trained by ADTG outperforms policies trained on both procedurally generated and natural environments, along with popular navigation methods.

Context-Generative Default Policy for Bounded Rational Agent

Sep 17, 2024

Abstract:Bounded rational agents often make decisions by evaluating a finite selection of choices, typically derived from a reference point termed the 'default policy,' based on previous experience. However, the inherent rigidity of the static default policy presents significant challenges for agents operating in unknown environments that are not included in the agent's prior knowledge. In this work, we introduce a context-generative default policy that leverages the region observed by the robot to predict the unobserved part of the environment, thereby enabling the robot to adaptively adjust its default policy based on both the actual observed map and the imagined unobserved map. Furthermore, the adaptive nature of the bounded rationality framework enables the robot to manage unreliable or incorrect imaginations by selectively sampling a few trajectories in the vicinity of the default policy. Our approach utilizes a diffusion model for map prediction and sampling-based planning with B-spline trajectory optimization to generate the default policy. Extensive evaluations reveal that the context-generative policy outperforms the baseline methods in identifying and avoiding unseen obstacles. Additionally, real-world experiments conducted with the Crazyflie drones demonstrate the adaptability of our proposed method, even when acting in environments outside the domain of the training distribution.

Visual-Geometry GP-based Navigable Space for Autonomous Navigation

Jul 09, 2024

Abstract:Autonomous navigation in unknown environments is challenging and demands the consideration of both geometric and semantic information in order to parse the navigability of the environment. In this work, we propose a novel space modeling framework, Visual-Geometry Sparse Gaussian Process (VG-SGP), that simultaneously considers semantics and geometry of the scene. Our proposed approach can overcome the limitations of visual planners, which fail to recognize the geometry associated with the semantics, and of geometric planners, which completely overlook the semantic information that is critical in real-world navigation. The proposed method leverages dual Sparse Gaussian Processes in an integrated manner; the first is trained to forecast geometrically navigable spaces while the second predicts the semantically navigable areas. This integrated model is able to pinpoint the overlapping (geometric and semantic) navigable space. The simulation and real-world experiments demonstrate that the proposed VG-SGP model, coupled with our innovative navigation strategy, outperforms models solely reliant on visual or geometric navigation algorithms, highlighting a superior adaptive behavior.

POAM: Probabilistic Online Attentive Mapping for Efficient Robotic Information Gathering

Jun 06, 2024

Abstract:Gaussian Process (GP) models are widely used for Robotic Information Gathering (RIG) in exploring unknown environments due to their ability to model complex phenomena with non-parametric flexibility and accurately quantify prediction uncertainty. Previous work has developed informative planners and adaptive GP models to enhance the data efficiency of RIG by improving the robot's sampling strategy to focus on informative regions in non-stationary environments. However, computational efficiency becomes a bottleneck when using GP models in large-scale environments with limited computational resources. We propose a framework -- Probabilistic Online Attentive Mapping (POAM) -- that leverages the modeling strengths of the non-stationary Attentive Kernel while achieving constant-time computational complexity for online decision-making. POAM guides the optimization process via variational Expectation Maximization, providing constant-time update rules for inducing inputs, variational parameters, and hyperparameters. Extensive experiments in active bathymetric mapping tasks demonstrate that POAM significantly improves computational efficiency, model accuracy, and uncertainty quantification capability compared to existing online sparse GP models.
