Durgakant Pushp

Navigating the Wild: Pareto-Optimal Visual Decision-Making in Image Space

Nov 11, 2025

Abstract:Navigating complex real-world environments requires semantic understanding and adaptive decision-making. Traditional reactive methods without maps often fail in cluttered settings, map-based approaches demand heavy mapping effort, and learning-based solutions rely on large datasets with limited generalization. To address these challenges, we present Pareto-Optimal Visual Navigation, a lightweight image-space framework that combines data-driven semantics, Pareto-optimal decision-making, and visual servoing for real-time navigation.

AFRDA: Attentive Feature Refinement for Domain Adaptive Semantic Segmentation

Jul 23, 2025

Abstract:In Unsupervised Domain Adaptive Semantic Segmentation (UDA-SS), a model is trained on labeled source domain data (e.g., synthetic images) and adapted to an unlabeled target domain (e.g., real-world images) without access to target annotations. Existing UDA-SS methods often struggle to balance fine-grained local details with global contextual information, leading to segmentation errors in complex regions. To address this, we introduce the Adaptive Feature Refinement (AFR) module, which enhances segmentation accuracy by refining high-resolution features using semantic priors from low-resolution logits. AFR also integrates high-frequency components, which capture fine-grained structures and provide crucial boundary information, improving object delineation. Additionally, AFR adaptively balances local and global information through uncertainty-driven attention, reducing misclassifications. Its lightweight design allows seamless integration into HRDA-based UDA methods, leading to state-of-the-art segmentation performance. Our approach improves existing UDA-SS methods by 1.05% mIoU on GTA V → Cityscapes and 1.04% mIoU on Synthia → Cityscapes. The implementation of our framework is available at: https://github.com/Masrur02/AFRDA

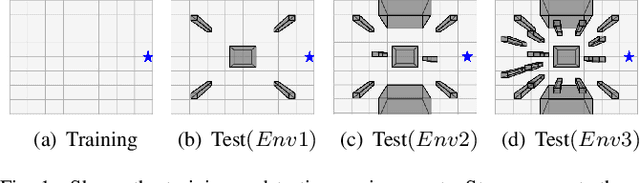

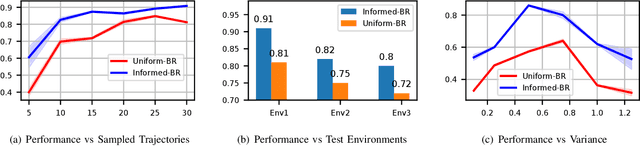

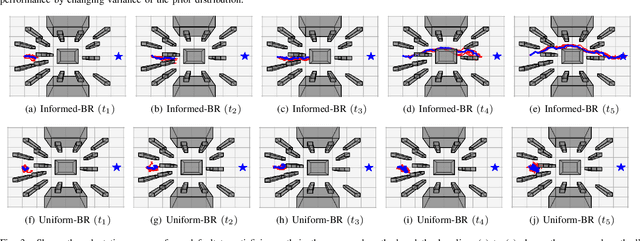

Context-Generative Default Policy for Bounded Rational Agent

Sep 17, 2024

Abstract:Bounded rational agents often make decisions by evaluating a finite selection of choices, typically derived from a reference point termed the 'default policy,' based on previous experience. However, the inherent rigidity of a static default policy presents significant challenges for agents operating in unknown environments that are not included in the agent's prior knowledge. In this work, we introduce a context-generative default policy that leverages the region observed by the robot to predict the unobserved part of the environment, thereby enabling the robot to adaptively adjust its default policy based on both the actual observed map and the *imagined* unobserved map. Furthermore, the adaptive nature of the bounded rationality framework enables the robot to manage unreliable or incorrect imaginations by selectively sampling a few trajectories in the vicinity of the default policy. Our approach utilizes a diffusion model for map prediction and sampling-based planning with B-spline trajectory optimization to generate the default policy. Extensive evaluations reveal that the context-generative policy outperforms the baseline methods in identifying and avoiding unseen obstacles. Additionally, real-world experiments conducted with Crazyflie drones demonstrate the adaptability of our proposed method, even when acting in environments outside the domain of the training distribution.

POVNav: A Pareto-Optimal Mapless Visual Navigator

Oct 21, 2023

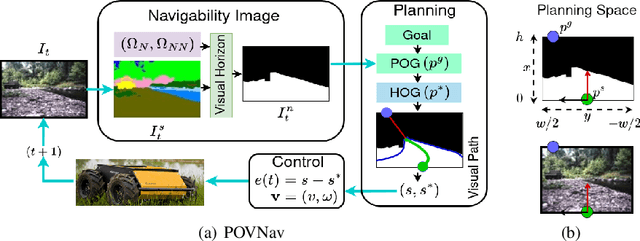

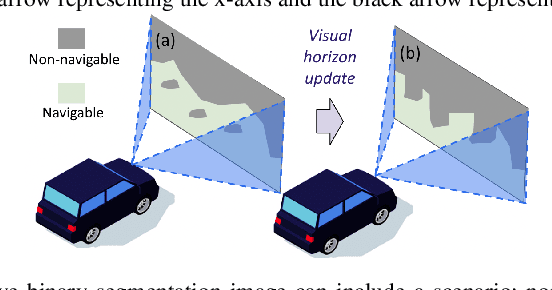

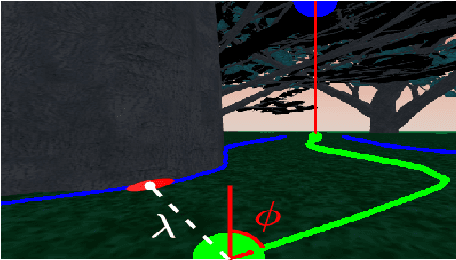

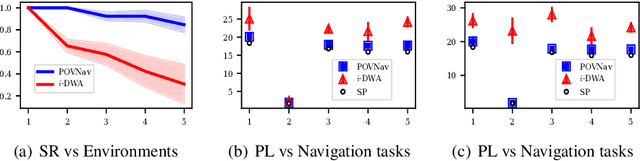

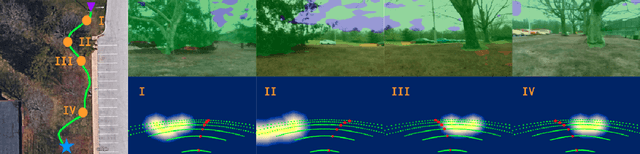

Abstract:Mapless navigation has emerged as a promising approach for enabling autonomous robots to navigate in environments where pre-existing maps may be inaccurate, outdated, or unavailable. In this work, we propose an image-based local representation of the environment immediately around a robot to parse navigability. We further develop a local planning and control framework, a Pareto-optimal mapless visual navigator (POVNav), to use this representation and enable autonomous navigation in various challenging and real-world environments. In POVNav, we choose a Pareto-optimal sub-goal in the image by evaluating all the navigable pixels, finding a safe visual path, and generating actions to follow the path using visual servo control. In addition to providing collision-free motion, our approach enables selective navigation behavior, such as restricting navigation to select terrain types, by only changing the navigability definition in the local representation. The ability of POVNav to navigate a robot to the goal using only a monocular camera without relying on a map makes it computationally light and easy to implement on various robotic platforms. Real-world experiments in diverse challenging environments, ranging from structured indoor environments to unstructured outdoor environments such as forest trails and roads after a heavy snowfall, using various image segmentation techniques demonstrate the remarkable efficacy of our proposed framework.
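The sub-goal selection step described in this abstract can be illustrated with a minimal sketch. The abstract does not state which objectives POVNav trades off, so the two costs below (offset from a goal direction in the image, and negative clearance from non-navigable pixels) are assumptions for illustration, as are the names `pareto_front` and `select_subgoal` and the rule used to pick one pixel from the Pareto front.

```python
from typing import List, Tuple

Pixel = Tuple[int, int]  # (row, col); row 0 is the top of the image


def pareto_front(costs: List[Tuple[float, float]]) -> List[int]:
    """Return indices of non-dominated cost pairs (both costs are minimized)."""
    front = []
    for i, (a1, a2) in enumerate(costs):
        dominated = any(
            b1 <= a1 and b2 <= a2 and (b1 < a1 or b2 < a2)
            for j, (b1, b2) in enumerate(costs)
            if j != i
        )
        if not dominated:
            front.append(i)
    return front


def select_subgoal(navigable: List[List[bool]], goal_col: int) -> Pixel:
    """Pick a sub-goal pixel from a binary navigability mask.

    Assumed objectives (not taken from the paper):
      1. goal cost: column offset from the goal direction plus row index,
         so pixels higher in the image (farther ahead) score better;
      2. safety cost: negative Manhattan clearance to the nearest
         non-navigable pixel.
    """
    rows, cols = len(navigable), len(navigable[0])
    free = [(r, c) for r in range(rows) for c in range(cols) if navigable[r][c]]
    blocked = [(r, c) for r in range(rows) for c in range(cols) if not navigable[r][c]]
    if not free:
        raise ValueError("no navigable pixels in the mask")

    costs = []
    for r, c in free:
        goal_cost = abs(c - goal_col) + r
        clearance = min(
            (abs(r - br) + abs(c - bc) for br, bc in blocked),
            default=rows + cols,  # no obstacles: use a value above any real distance
        )
        costs.append((float(goal_cost), float(-clearance)))

    front = pareto_front(costs)
    # Assumed tie-break: the front member with the smallest summed cost.
    best = min(front, key=lambda i: costs[i][0] + costs[i][1])
    return free[best]
```

Because only the navigability mask enters the selection, changing which terrain classes are marked `True` changes the behavior without touching the selection logic, which mirrors the selective navigation idea in the abstract.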

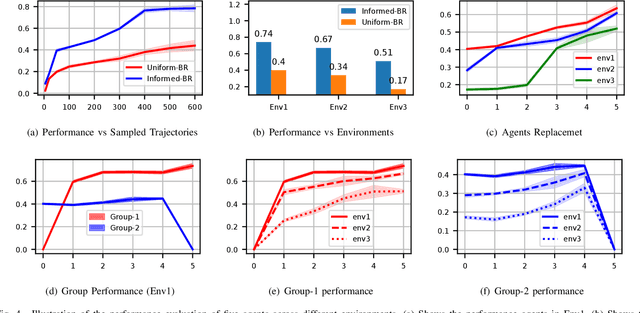

Coordination of Bounded Rational Drones through Informed Prior Policy

Jul 28, 2023

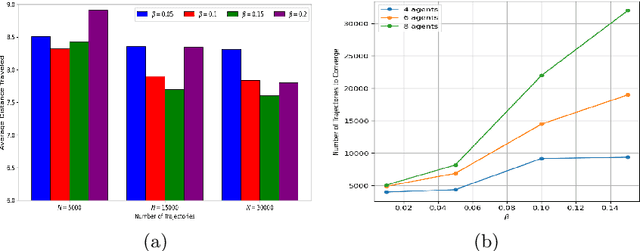

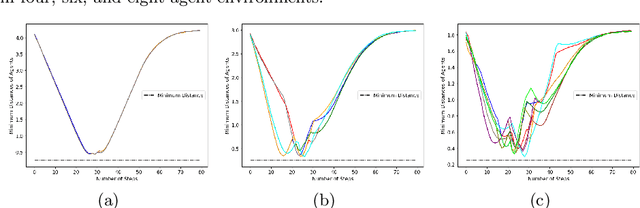

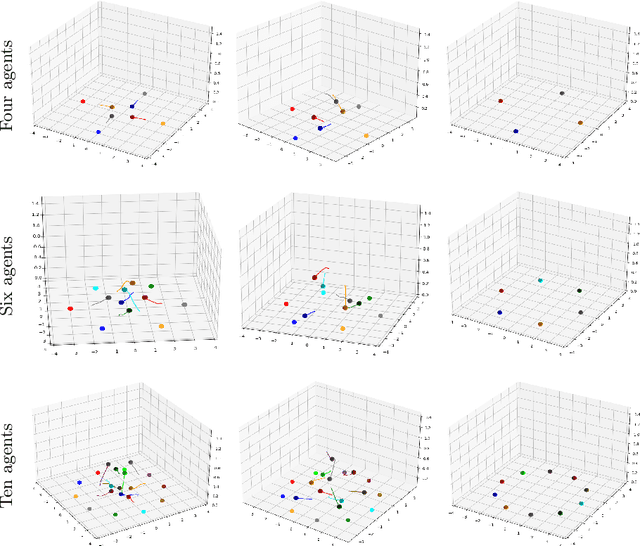

Abstract:Biological agents, such as humans and animals, are capable of making decisions out of a very large number of choices in a limited time. They can do so because they use their prior knowledge to find a solution that is not necessarily optimal but good enough for the given task. In this work, we study the motion coordination of multiple drones under this paradigm, Bounded Rationality (BR), to achieve cooperative motion planning tasks. Specifically, we design a prior policy that provides useful goal-directed navigation heuristics in familiar environments and is adaptive in unfamiliar ones via Reinforcement Learning augmented with an environment-dependent exploration noise. Integrating this prior policy in the game-theoretic bounded rationality framework allows agents to quickly make decisions in a group while considering other agents' computational constraints. Our investigation shows that agents with a well-informed prior policy increase the efficiency of the collective decision-making capability of the group. We have conducted rigorous experiments in simulation and in the real world to demonstrate that the ability of informed agents to navigate to the goal safely can guide the group to coordinate efficiently under the BR framework.

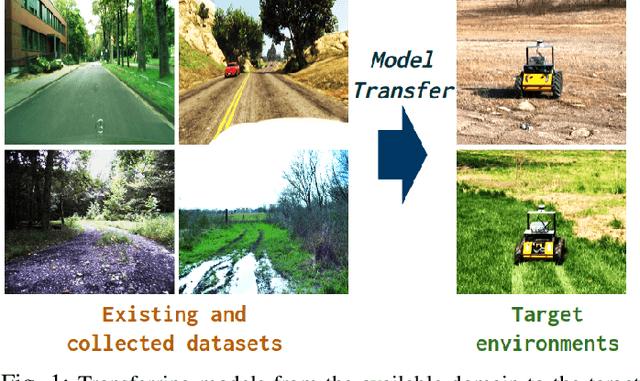

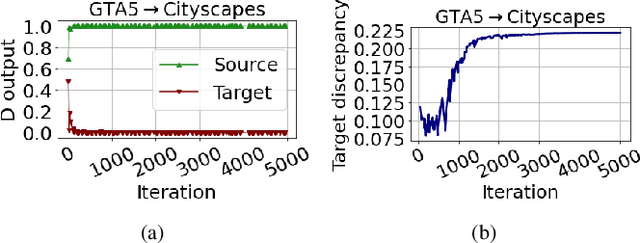

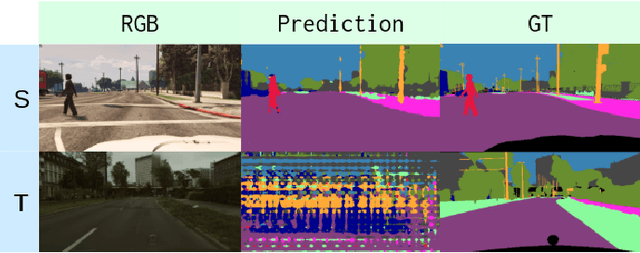

Pseudo-Trilateral Adversarial Training for Domain Adaptive Traversability Prediction

Jun 26, 2023

Abstract:Traversability prediction is a fundamental perception capability for autonomous navigation. Deep neural networks (DNNs) have been widely used to predict traversability during the last decade. The performance of DNNs is significantly boosted by exploiting a large amount of data. However, the diversity of data in different domains imposes significant gaps in the prediction performance. In this work, we make efforts to reduce the gaps by proposing a novel pseudo-trilateral adversarial model that adopts a coarse-to-fine alignment (CALI) to perform unsupervised domain adaptation (UDA). Our aim is to transfer the perception model with high data efficiency, eliminate the prohibitively expensive data labeling, and improve the generalization capability during the adaptation from easy-to-access source domains to various challenging target domains. Existing UDA methods usually adopt a bilateral zero-sum game structure. We prove that our CALI model, a pseudo-trilateral game structure, is advantageous over existing bilateral game structures. This proposed work bridges theoretical analyses and algorithm designs, leading to an efficient UDA model with easy and stable training. We further develop a variant of CALI, Informed CALI (ICALI), which is inspired by the recent success of mixup data augmentation techniques and mixes informative regions based on the results of CALI. This mixture step provides an explicit bridging between the two domains and exposes underperforming classes more during training. We show the superiority of our proposed models over multiple baselines in several challenging domain adaptation setups. To further validate the effectiveness of our proposed models, we then combine our perception model with a visual planner to build a navigation system and show the high reliability of our model in complex natural environments.

Decision-Making Among Bounded Rational Agents

Oct 17, 2022

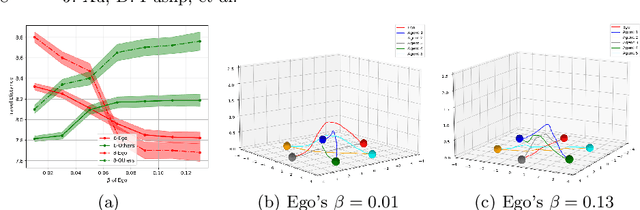

Abstract:When robots share the same workspace with other intelligent agents (e.g., other robots or humans), they must be able to reason about the behaviors of their neighboring agents while accomplishing the designated tasks. In practice, agents frequently do not exhibit absolutely rational behavior due to their limited computational resources. Thus, predicting the optimal agent behaviors is both impractical (because it demands prohibitive computational resources) and undesirable (because the prediction may be wrong). Motivated by this observation, we remove the assumption of perfectly rational agents and propose incorporating the concept of bounded rationality from an information-theoretic view into the game-theoretic framework. This allows the robots to reason about other agents' sub-optimal behaviors and act accordingly under their computational constraints. Specifically, bounded rationality directly models the agent's information processing ability, which is represented as the KL-divergence between nominal and optimized stochastic policies, and the solution to the bounded-optimal policy can be obtained by an efficient importance sampling approach. Using both simulated and real-world experiments in multi-robot navigation tasks, we demonstrate that the resulting framework allows the robots to reason about different levels of rational behaviors of other agents and compute a reasonable strategy under their computational constraints.
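The KL-constrained formulation mentioned in this abstract has a well-known closed form in information-theoretic bounded rationality: maximizing expected utility minus (1/β) times the KL-divergence from a nominal policy p gives q*(a) ∝ p(a)·exp(β·U(a)). The following is a minimal single-agent sketch of estimating an action under q* by self-normalized importance sampling from p. It is not the paper's multi-agent, game-theoretic solver; the function names, the scalar action, and the parameter `beta` (rationality level) are assumptions for illustration.

```python
import math
import random
from typing import Callable, List, Sequence


def bounded_rational_weights(utilities: Sequence[float], beta: float) -> List[float]:
    """Self-normalized importance weights w_i proportional to exp(beta * U_i).

    Samples are assumed to come from the nominal policy p, so the ratio
    q*(a) / p(a) reduces to exp(beta * U(a)) up to a constant.
    """
    u_max = max(utilities)  # subtract the max for numerical stability
    unnorm = [math.exp(beta * (u - u_max)) for u in utilities]
    total = sum(unnorm)
    return [w / total for w in unnorm]


def bounded_rational_action(
    sample_nominal: Callable[[random.Random], float],
    utility: Callable[[float], float],
    beta: float,
    n_samples: int,
    rng: random.Random,
) -> float:
    """Estimate the mean action under q* from n_samples nominal draws."""
    samples = [sample_nominal(rng) for _ in range(n_samples)]
    weights = bounded_rational_weights([utility(a) for a in samples], beta)
    return sum(w * a for w, a in zip(weights, samples))


if __name__ == "__main__":
    nominal = lambda rng: rng.gauss(0.0, 1.0)   # hypothetical nominal policy
    utility = lambda a: -(a - 1.0) ** 2         # hypothetical task utility
    for beta in (0.0, 5.0):
        action = bounded_rational_action(nominal, utility, beta, 2000, random.Random(0))
        print(f"beta={beta}: action={action:.3f}")
```

With `beta = 0` the agent simply follows the nominal policy; larger `beta` models more computational capacity and shifts the estimate toward the utility maximizer, while the number of samples stays fixed.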

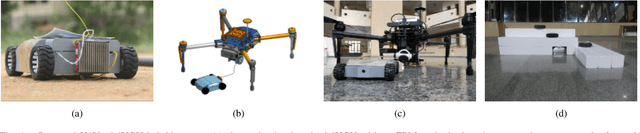

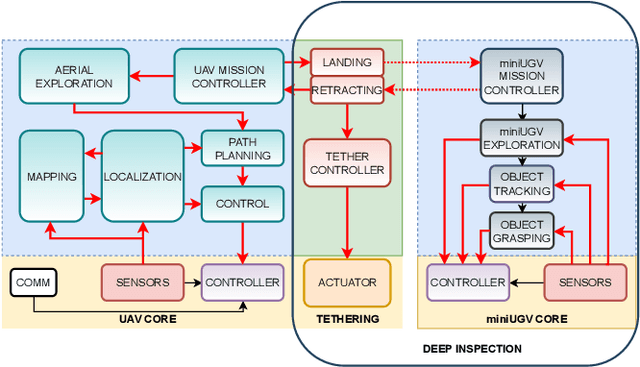

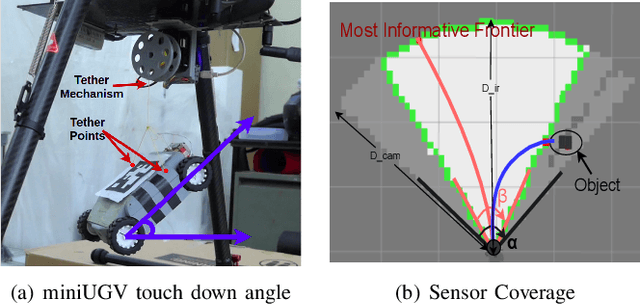

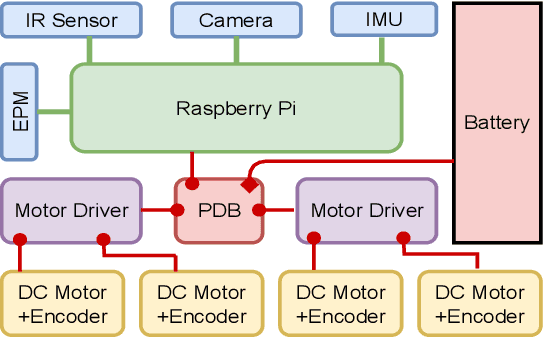

UAV-miniUGV Hybrid System for Hidden Area Exploration and Manipulation

Sep 23, 2022

Abstract:We propose a novel hybrid system (both hardware and software) of an Unmanned Aerial Vehicle (UAV) carrying a miniature Unmanned Ground Vehicle (miniUGV) to perform a complex search and manipulation task. This system leverages heterogeneous robots to accomplish a task that cannot be done using a single robot system. It enables the UAV to explore a hidden space with a narrow opening through which the miniUGV can easily enter and escape. The hidden space is assumed to be navigable for the miniUGV. The miniUGV uses Infrared (IR) sensors and a monocular camera to search for an object in the hidden space. The proposed system takes advantage of the camera's wider field of view (FOV) as well as the stochastic nature of object detection algorithms to guide the miniUGV in the hidden space to find the object. Upon finding the object, the miniUGV grabs it using visual servoing and then returns to its start point, from where the UAV retracts it and transports the object to a safe place. If no object is found in the hidden space, the UAV continues the aerial search. The tethered miniUGV gives the UAV the ability to act beyond its reach and perform a search and manipulation task that was not previously possible for either robot individually. The system has a wide range of applications, and we have demonstrated its feasibility through repeated experiments.

CALI: Coarse-to-Fine ALIgnments Based Unsupervised Domain Adaptation of Traversability Prediction for Deployable Autonomous Navigation

Apr 20, 2022

Abstract:Traversability prediction is a fundamental perception capability for autonomous navigation. The diversity of data in different domains imposes significant gaps in the prediction performance of the perception model. In this work, we make efforts to reduce the gaps by proposing a novel coarse-to-fine unsupervised domain adaptation (UDA) model, CALI. Our aim is to transfer the perception model with high data efficiency, eliminate the prohibitively expensive data labeling, and improve the generalization capability during the adaptation from easy-to-obtain source domains to various challenging target domains. We prove that a coarse alignment and a fine alignment can benefit each other, and we further design a first-coarse-then-fine alignment process. This proposed work bridges theoretical analyses and algorithm designs, leading to an efficient UDA model with easy and stable training. We show the advantages of our proposed model over multiple baselines in several challenging domain adaptation setups. To further validate the effectiveness of our model, we then combine our perception model with a visual planner to build a navigation system and show the high reliability of our model in complex natural environments where no labeled data is available.
