Mahmoud Ali

Chance-Constrained Sampling-Based MPC for Collision Avoidance in Uncertain Dynamic Environments

Jan 15, 2025

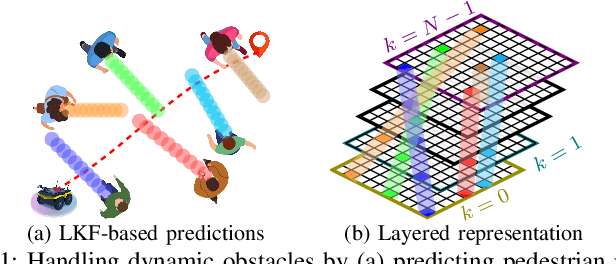

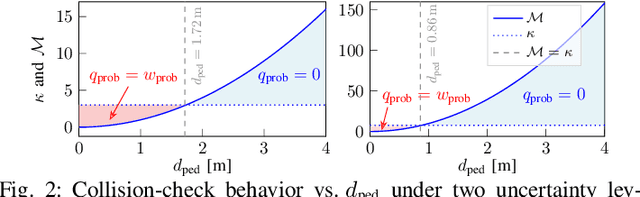

Abstract: Navigating safely in dynamic and uncertain environments is challenging due to uncertainties in perception and motion. This letter presents C2U-MPPI, a robust sampling-based Model Predictive Control (MPC) framework that addresses these challenges by leveraging the Unscented Model Predictive Path Integral (U-MPPI) control strategy with integrated probabilistic chance constraints, ensuring more reliable and efficient navigation under uncertainty. Unlike gradient-based MPC methods, our approach (i) avoids linearization of system dynamics and directly applies non-convex and nonlinear chance constraints, enabling more accurate and flexible optimization, and (ii) enhances computational efficiency by reformulating probabilistic constraints into a deterministic form and employing a layered dynamic obstacle representation, enabling real-time handling of multiple obstacles. Extensive experiments in simulated and real-world human-shared environments validate the effectiveness of our algorithm against baseline methods, showcasing its capability to generate feasible trajectories and control inputs that adhere to system dynamics and constraints in dynamic settings, enabled by an unscented-based sampling strategy and risk-sensitive trajectory evaluation. A supplementary video is available at: https://youtu.be/FptAhvJlQm8
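As a rough illustration of what "reformulating probabilistic constraints into a deterministic form" can mean, the textbook case of a linear constraint on a Gaussian state is shown below. This is only a sketch of the general idea and not necessarily the formulation used in C2U-MPPI, which the abstract describes as handling nonlinear, non-convex constraints without linearization. The symbols $a$, $b$, $\mu$, $\Sigma$, and $\delta$ are assumptions introduced here: for $x \sim \mathcal{N}(\mu, \Sigma)$, an allowed violation probability $\delta \in (0, 1)$, and the standard normal CDF $\Phi$,

$$
\Pr\left(a^\top x \le b\right) \ge 1 - \delta
\quad\Longleftrightarrow\quad
a^\top \mu + \Phi^{-1}(1 - \delta)\,\sqrt{a^\top \Sigma\, a} \le b .
$$

The right-hand side involves only the mean and covariance, so it can be checked per sample without estimating a probability.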

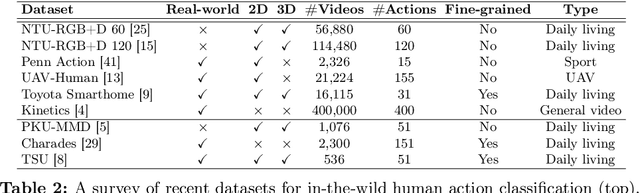

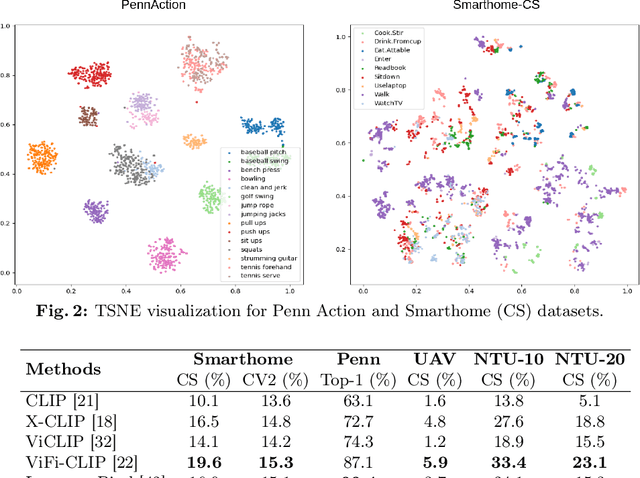

Are Visual-Language Models Effective in Action Recognition? A Comparative Study

Oct 22, 2024

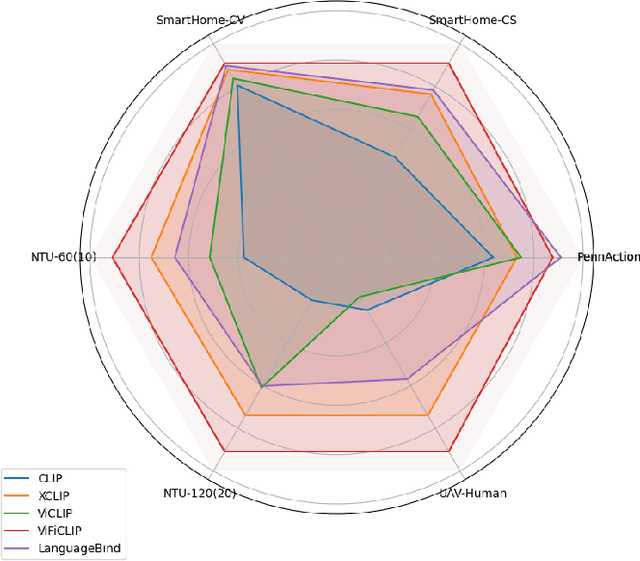

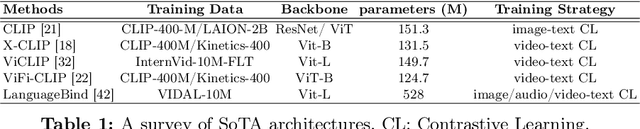

Abstract: Current vision-language foundation models, such as CLIP, have recently shown significant improvement in performance across various downstream tasks. However, whether such foundation models significantly improve more complex fine-grained action recognition tasks is still an open question. To answer this question and to better identify future research directions for human behavior analysis in the wild, this paper provides a large-scale study of, and insight into, current state-of-the-art vision foundation models by comparing their transfer ability on zero-shot and frame-wise action recognition tasks. Extensive experiments are conducted on recent fine-grained, human-centric action recognition datasets (e.g., Toyota Smarthome, Penn Action, UAV-Human, TSU, Charades), covering both action classification and segmentation.

Weakly-supervised Autism Severity Assessment in Long Videos

Jul 12, 2024

Abstract: Autism Spectrum Disorder (ASD) is a diverse collection of neurobiological conditions marked by challenges in social communication and reciprocal interactions, as well as repetitive and stereotypical behaviors. Atypical behavior patterns in a long, untrimmed video can serve as biomarkers for children with ASD. In this paper, we propose a video-based weakly-supervised method that takes spatio-temporal features of long videos to learn typical and atypical behaviors for autism detection. On top of that, we propose a shallow TCN-MLP network, which is designed to further categorize the severity score. We evaluate our method on actual evaluation videos of children with autism collected and annotated (for severity score) by clinical professionals. Experimental results demonstrate the effectiveness of behavioral biomarkers that could help clinicians in autism spectrum analysis.

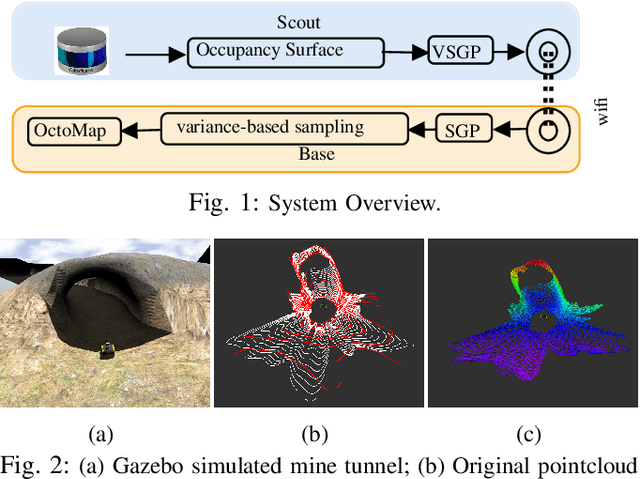

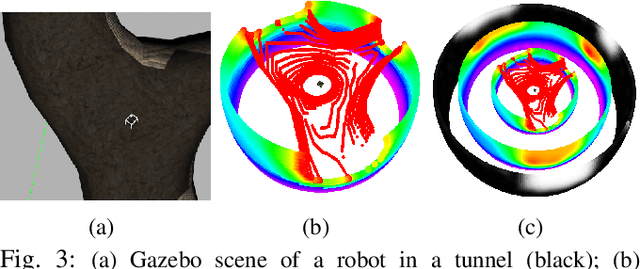

Visual-Geometry GP-based Navigable Space for Autonomous Navigation

Jul 09, 2024

Abstract: Autonomous navigation in unknown environments is challenging and demands the consideration of both geometric and semantic information in order to parse the navigability of the environment. In this work, we propose a novel space modeling framework, Visual-Geometry Sparse Gaussian Process (VG-SGP), that simultaneously considers semantics and geometry of the scene. Our proposed approach can overcome the limitations of visual planners, which fail to recognize the geometry associated with the semantics, and of geometric planners, which completely overlook the semantic information that is critical in real-world navigation. The proposed method leverages dual Sparse Gaussian Processes in an integrated manner; the first is trained to forecast geometrically navigable spaces while the second predicts the semantically navigable areas. This integrated model is able to pinpoint the overlapping (geometric and semantic) navigable space. The simulation and real-world experiments demonstrate that the proposed VG-SGP model, coupled with our innovative navigation strategy, outperforms models that rely solely on visual or geometric navigation algorithms, exhibiting superior adaptive behavior.

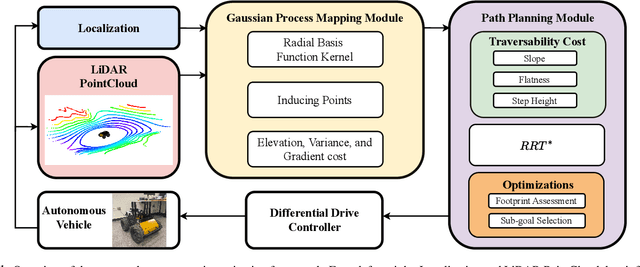

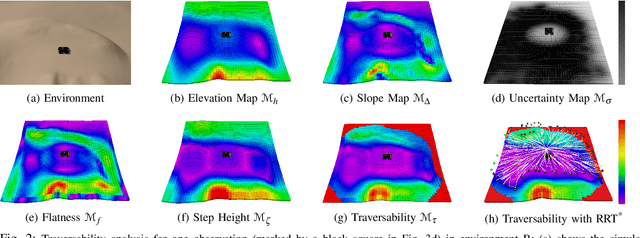

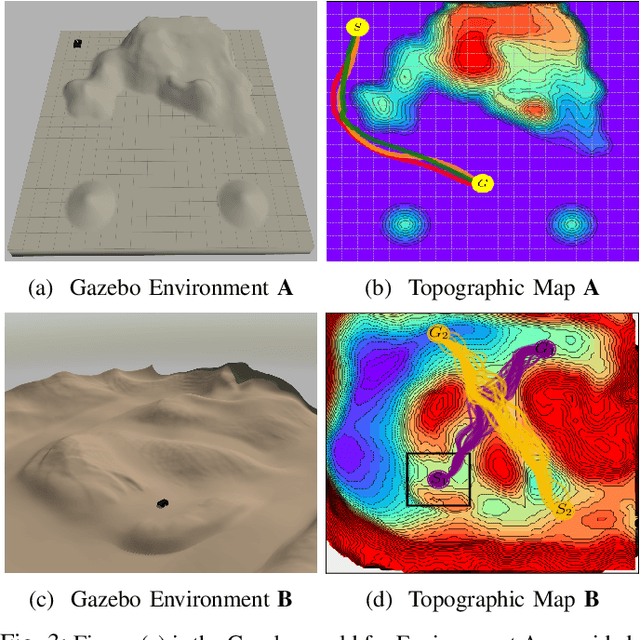

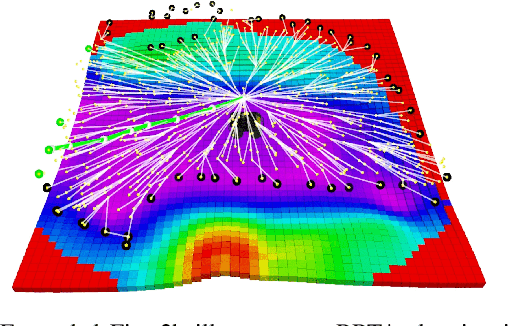

Gaussian Process-based Traversability Analysis for Terrain Mapless Navigation

Mar 27, 2024

Abstract: Efficient navigation through uneven terrain remains a challenging endeavor for autonomous robots. We propose a new geometric-based uneven terrain mapless navigation framework combining a Sparse Gaussian Process (SGP) local map with a Rapidly-Exploring Random Tree* (RRT*) planner. Our approach begins with the generation of a high-resolution SGP local map, providing an interpolated representation of the robot's immediate environment. This map captures crucial environmental variations, including height, uncertainties, and slope characteristics. Subsequently, we construct a traversability map based on the SGP representation to guide our planning process. The RRT* planner efficiently generates real-time navigation paths, avoiding untraversable terrain in pursuit of the goal. This combination of SGP-based terrain interpretation and RRT* planning enables ground robots to safely navigate environments with varying elevations and steep obstacles. We evaluate the performance of our proposed approach through robust simulation testing, highlighting its effectiveness in achieving safe and efficient navigation compared to existing methods.
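To give a feel for how a traversability map might be derived from an interpolated height map, here is a minimal sketch that thresholds terrain slope on a regular grid. It is an assumption-laden illustration, not the paper's method: the function name `traversability_mask`, the grid representation, and the 20-degree default threshold are all hypothetical, and the abstract's uncertainty term is ignored.

```python
import numpy as np

def traversability_mask(height, cell_size, max_slope_deg=20.0):
    """Mark grid cells whose local slope is at or below a threshold.

    height:        (H, W) array of terrain elevations in meters
    cell_size:     grid resolution in meters (same along both axes)
    max_slope_deg: hypothetical slope limit, in degrees
    """
    # Finite-difference gradients along rows (y) and columns (x).
    dz_dy, dz_dx = np.gradient(height, cell_size)
    # Slope angle of the steepest direction at each cell.
    slope_deg = np.degrees(np.arctan(np.hypot(dz_dx, dz_dy)))
    return slope_deg <= max_slope_deg

# Usage: a flat patch is traversable everywhere, a steep ramp nowhere.
flat = np.zeros((4, 4))
ramp = 2.0 * np.tile(np.arange(4.0), (4, 1))  # rises 2 m per 1 m cell
flat_ok = traversability_mask(flat, cell_size=1.0)
ramp_ok = traversability_mask(ramp, cell_size=1.0)
```

A planner such as RRT* could then reject samples and edges that fall on cells where the mask is false.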

Autonomous Mapless Navigation on Uneven Terrains

Feb 21, 2024

Abstract: We propose a new method for autonomous navigation in uneven terrains by utilizing a sparse Gaussian Process (SGP) based local perception model. The SGP local perception model is trained on local ranging observations (pointcloud) to learn the terrain elevation profile and extract the feasible navigation subgoals around the robot. Subsequently, a cost function, which prioritizes the safety of the robot in terms of keeping the robot's roll and pitch angles bounded within a specified range, is used to select a safety-aware subgoal that leads the robot to its final destination. The algorithm is designed to run in real-time and is intensively evaluated in simulation and real-world experiments. The results compellingly demonstrate that our proposed algorithm consistently navigates uneven terrains with high efficiency and surpasses the performance of other planners. The code and video can be found here: https://rb.gy/3ov2r8

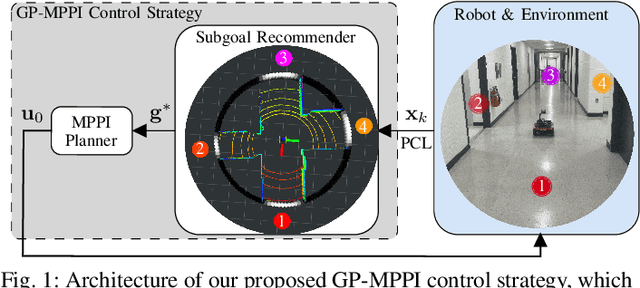

GP-guided MPPI for Efficient Navigation in Complex Unknown Cluttered Environments

Jul 28, 2023

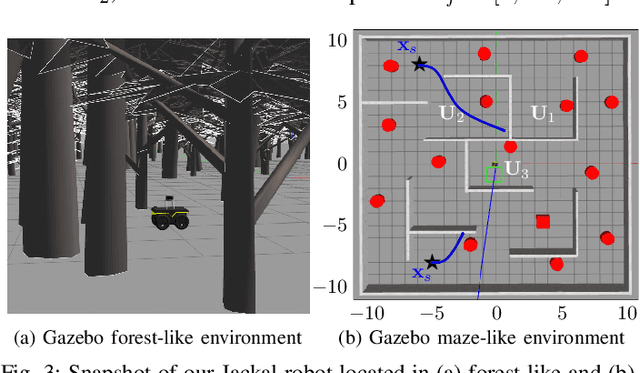

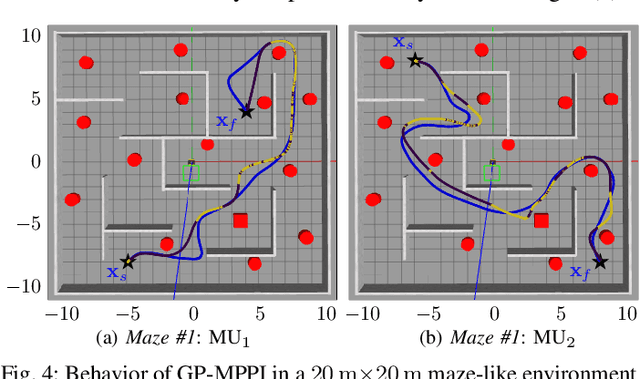

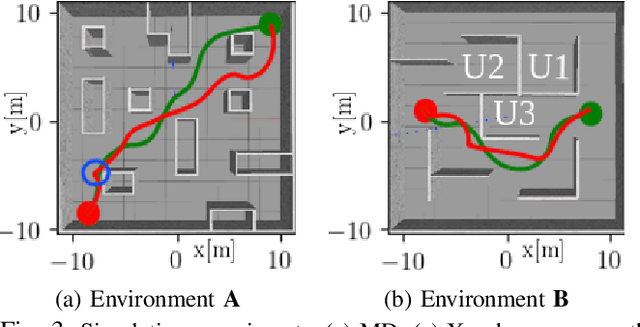

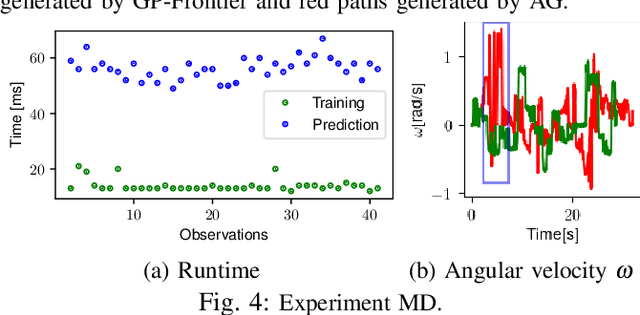

Abstract: Robotic navigation in unknown, cluttered environments with limited sensing capabilities poses significant challenges in robotics. Local trajectory optimization methods, such as Model Predictive Path Integral (MPPI), are a promising solution to this challenge. However, global guidance is required to ensure effective navigation, especially when encountering challenging environmental conditions or navigating beyond the planning horizon. This study presents the GP-MPPI, an online learning-based control strategy that integrates MPPI with a local perception model based on Sparse Gaussian Process (SGP). The key idea is to leverage the learning capability of SGP to construct a variance (uncertainty) surface, which enables the robot to learn about the navigable space surrounding it, identify a set of suggested subgoals, and ultimately recommend the optimal subgoal that minimizes a predefined cost function to the local MPPI planner. Afterward, MPPI computes the optimal control sequence that satisfies the robot and collision avoidance constraints. Such an approach eliminates the necessity of a global map of the environment or an offline training process. We validate the efficiency and robustness of our proposed control strategy through both simulated and real-world experiments of 2D autonomous navigation tasks in complex unknown environments, demonstrating its superiority in guiding the robot safely towards its desired goal while avoiding obstacles and escaping entrapment in local minima. The GPU implementation of GP-MPPI, including the supplementary video, is available at https://github.com/IhabMohamed/GP-MPPI.
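For readers unfamiliar with MPPI, the core step is a cost-weighted average of sampled control perturbations. The sketch below shows that generic update in NumPy under stated assumptions; it is not the GP-MPPI GPU implementation linked above, and the function name `mppi_update` and the temperature parameter `lam` are hypothetical names chosen for illustration.

```python
import numpy as np

def mppi_update(u_nominal, noise, costs, lam=1.0):
    """One generic MPPI-style update of a nominal control sequence.

    u_nominal: (T, m) nominal control sequence
    noise:     (K, T, m) sampled perturbations, one per rollout
    costs:     (K,) total trajectory cost of each rollout
    lam:       temperature; smaller values favor the best rollouts more
    """
    # Shift by the minimum cost so the exponentials stay well scaled.
    weights = np.exp(-(costs - costs.min()) / lam)
    weights /= weights.sum()
    # Weighted average of perturbations, added to the nominal controls.
    u_new = u_nominal + np.tensordot(weights, noise, axes=1)
    return u_new, weights

# Usage: two rollouts, the first one far cheaper than the second.
u0 = np.zeros((3, 2))
eps = np.stack([np.ones((3, 2)), -np.ones((3, 2))])
u1, w = mppi_update(u0, eps, costs=np.array([0.0, 100.0]))
```

In this toy call nearly all the weight goes to the cheaper rollout, so the updated sequence moves toward its perturbation.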

GP-Frontier for Local Mapless Navigation

Jul 21, 2023

Abstract: We propose a new frontier concept called the Gaussian Process Frontier (GP-Frontier) that can be used to locally navigate a robot towards a goal without building a map. The GP-Frontier is built on the uncertainty assessment of an efficient variant of the sparse Gaussian Process. Based only on local range-sensing measurements, the GP-Frontier can be used for navigation in both known and unknown environments. The proposed method is validated through intensive evaluations, and the results show that the GP-Frontier can navigate the robot in a safe and persistent way, i.e., the robot moves in the most open space (thus reducing the risk of collision) without relying on a map or a path planner.

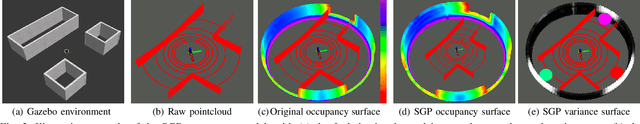

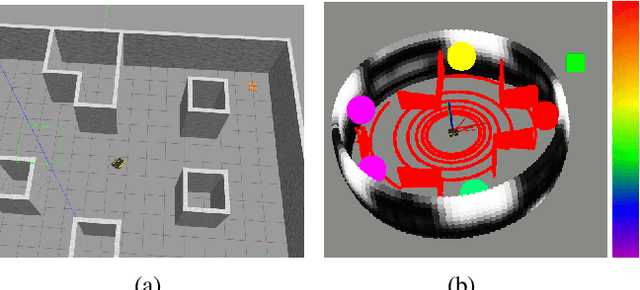

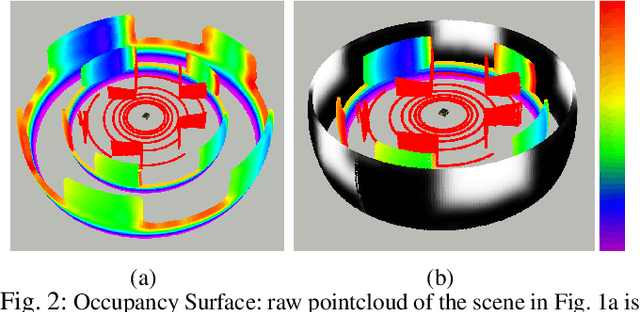

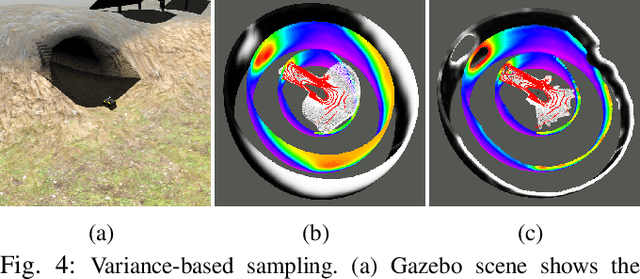

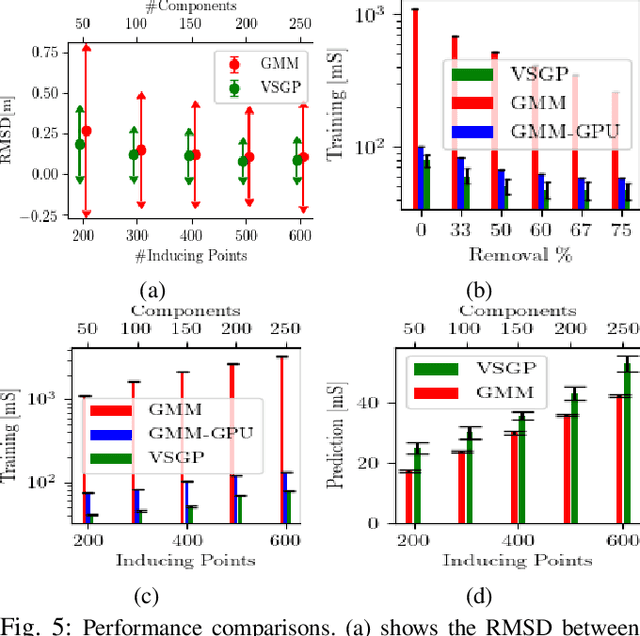

Light-Weight Pointcloud Representation with Sparse Gaussian Process

Jan 26, 2023

Abstract: This paper presents a framework to represent high-fidelity pointcloud sensor observations for efficient communication and storage. The proposed approach exploits Sparse Gaussian Process to encode the pointcloud into a compact form. Our approach represents both the free space and the occupied space using only one model (one 2D Sparse Gaussian Process) instead of the existing two-model framework (two 3D Gaussian Mixture Models). We achieve this by proposing a variance-based sampling technique that effectively discriminates between the free and occupied space. The new representation has a smaller memory footprint and can be transmitted across limited-bandwidth communication channels. The framework is extensively evaluated in simulation and it is also demonstrated using a real mobile robot equipped with a 3D LiDAR. Our method results in a 70 to 100 times reduction in the communication rate compared to sending the raw pointcloud.
