William Edwards

Transformers Use Causal World Models in Maze-Solving Tasks

Dec 16, 2024

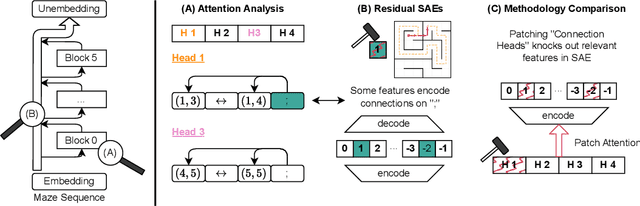

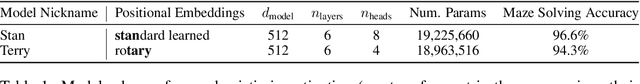

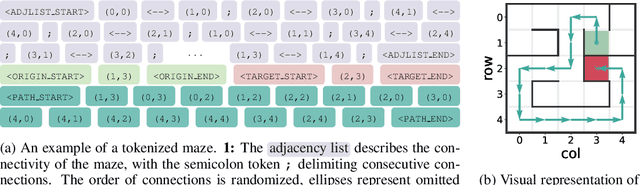

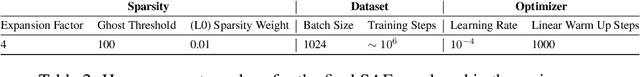

Abstract: Recent studies in interpretability have explored the inner workings of transformer models trained on tasks across various domains, often discovering that these networks naturally develop surprisingly structured representations. When such representations comprehensively reflect the task domain's structure, they are commonly referred to as "World Models" (WMs). In this work, we discover such WMs in transformers trained on maze tasks. In particular, by employing Sparse Autoencoders (SAEs) and analyzing attention patterns, we examine the construction of WMs and demonstrate consistency between the circuit analysis and the SAE feature-based analysis. We intervene upon the isolated features to confirm their causal role and, in doing so, find asymmetries between certain types of interventions. Surprisingly, we find that models are able to reason with respect to a greater number of active features than they see during training, even if attempting to specify these in the input token sequence would lead the model to fail. Furthermore, we observe that varying positional encodings can alter how WMs are encoded in a model's residual stream. By analyzing the causal role of these WMs in a toy domain, we hope to make progress toward an understanding of emergent structure in the representations acquired by Transformers, leading to the development of more interpretable and controllable AI systems.

Efficient Automatic Tuning for Data-driven Model Predictive Control via Meta-Learning

Mar 30, 2024

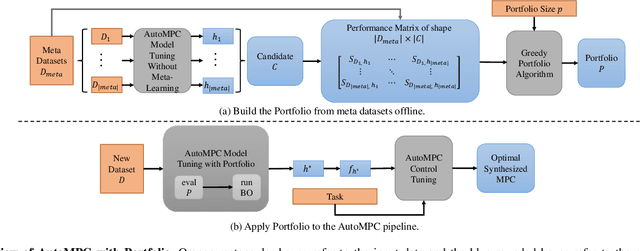

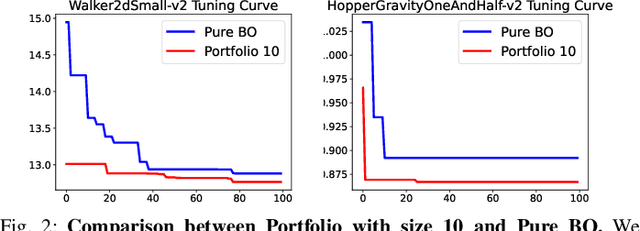

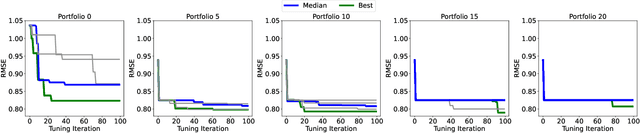

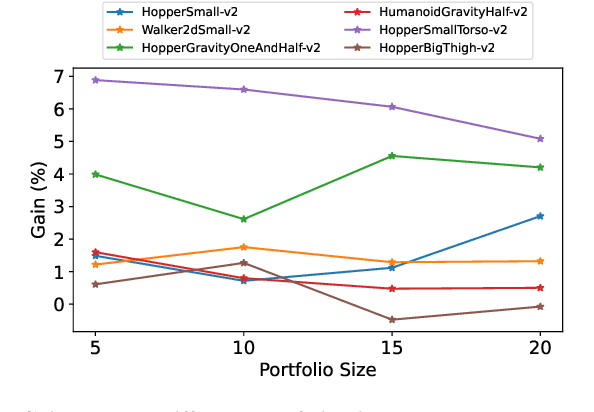

Abstract: AutoMPC is a Python package that automates and optimizes data-driven model predictive control. However, it can be computationally expensive and unstable when exploring large search spaces using pure Bayesian Optimization (BO). To address these issues, this paper proposes a meta-learning approach called Portfolio that improves AutoMPC's efficiency and stability by warm-starting BO. Portfolio optimizes initial designs for BO using a diverse set of configurations from previous tasks and stabilizes the tuning process by fixing initial configurations instead of selecting them randomly. Experimental results demonstrate that Portfolio outperforms pure BO in finding desirable solutions for AutoMPC within limited computational resources on 11 nonlinear control simulation benchmarks and 1 physical underwater soft robot dataset.
