Nils Dengler

EvidMTL: Evidential Multi-Task Learning for Uncertainty-Aware Semantic Surface Mapping from Monocular RGB Images

Mar 06, 2025

Abstract:For scene understanding in unstructured environments, an accurate and uncertainty-aware metric-semantic mapping is required to enable informed action selection by autonomous systems. Existing mapping methods often suffer from overconfident semantic predictions, and sparse and noisy depth sensing, leading to inconsistent map representations. In this paper, we therefore introduce EvidMTL, a multi-task learning framework that uses evidential heads for depth estimation and semantic segmentation, enabling uncertainty-aware inference from monocular RGB images. To enable uncertainty-calibrated evidential multi-task learning, we propose a novel evidential depth loss function that jointly optimizes the belief strength of the depth prediction in conjunction with evidential segmentation loss. Building on this, we present EvidKimera, an uncertainty-aware semantic surface mapping framework, which uses evidential depth and semantics prediction for improved 3D metric-semantic consistency. We train and evaluate EvidMTL on NYUDepthV2 and assess its zero-shot performance on ScanNetV2, demonstrating superior uncertainty estimation compared to conventional approaches while maintaining comparable depth estimation and semantic segmentation. In zero-shot mapping tests on ScanNetV2, EvidKimera outperforms Kimera in semantic surface mapping accuracy and consistency, highlighting the benefits of uncertainty-aware mapping and underscoring its potential for real-world robotic applications.

Map Space Belief Prediction for Manipulation-Enhanced Mapping

Feb 28, 2025

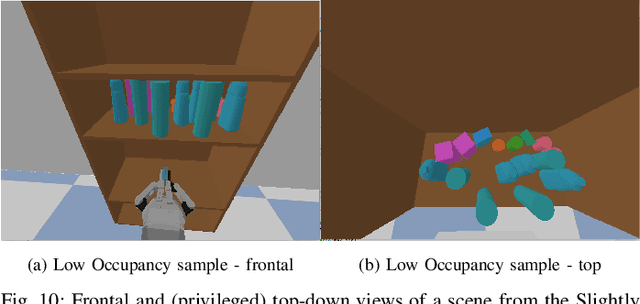

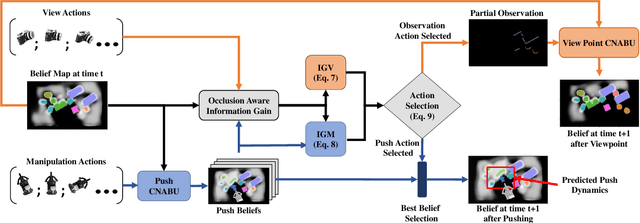

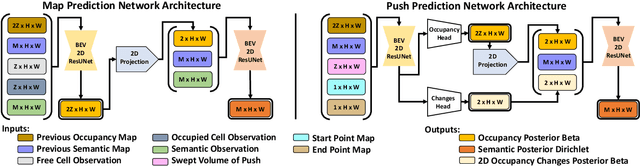

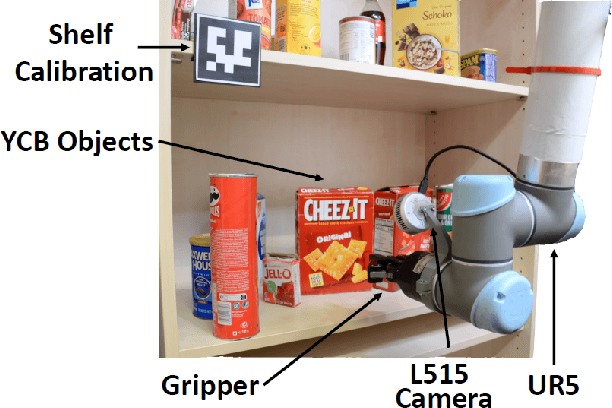

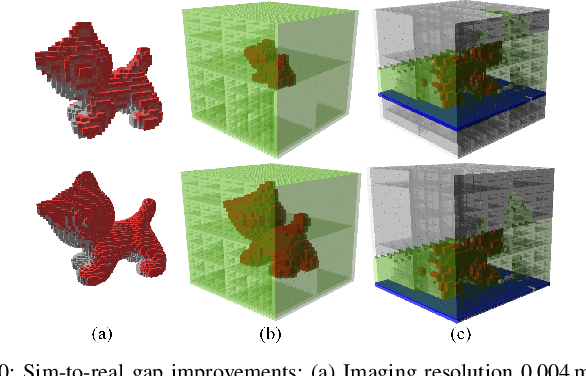

Abstract:Searching for objects in cluttered environments requires selecting efficient viewpoints and manipulation actions to remove occlusions and reduce uncertainty in object locations, shapes, and categories. In this work, we address the problem of manipulation-enhanced semantic mapping, where a robot has to efficiently identify all objects in a cluttered shelf. Although Partially Observable Markov Decision Processes (POMDPs) are standard for decision-making under uncertainty, representing unstructured interactive worlds remains challenging in this formalism. To tackle this, we define a POMDP whose belief is summarized by a metric-semantic grid map and propose a novel framework that uses neural networks to perform map-space belief updates to reason efficiently and simultaneously about object geometries, locations, categories, occlusions, and manipulation physics. Further, to enable accurate information gain analysis, the learned belief updates should maintain calibrated estimates of uncertainty. Therefore, we propose Calibrated Neural-Accelerated Belief Updates (CNABUs) to learn a belief propagation model that generalizes to novel scenarios and provides confidence-calibrated predictions for unknown areas. Our experiments show that our novel POMDP planner improves map completeness and accuracy over existing methods in challenging simulations and successfully transfers to real-world cluttered shelves in zero-shot fashion.

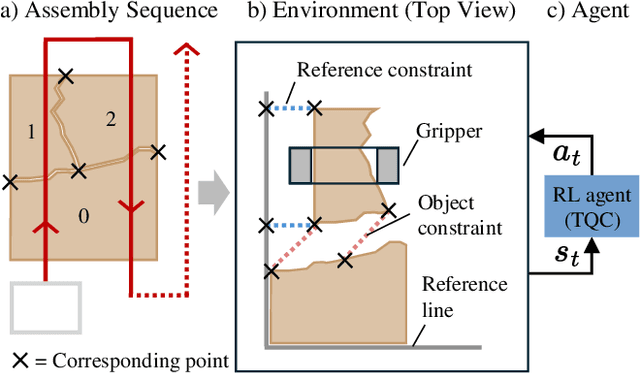

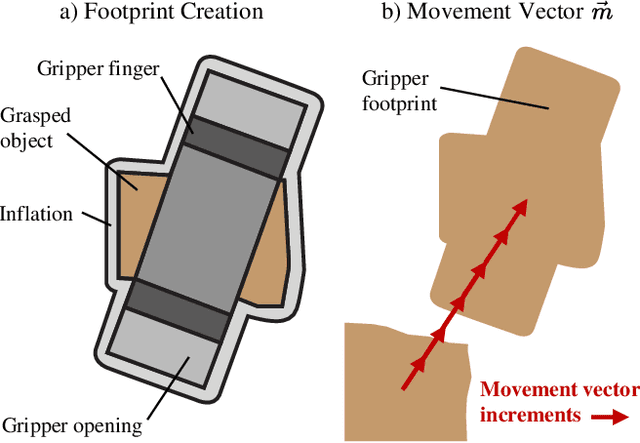

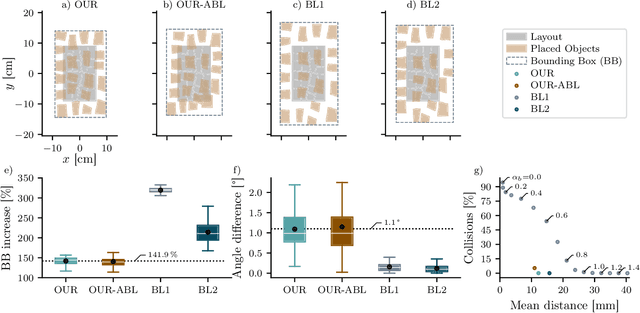

Constrained Object Placement Using Reinforcement Learning

Apr 16, 2024

Abstract:Close and precise placement of irregularly shaped objects requires a skilled robotic system. Particularly challenging is the manipulation of objects that have sensitive top surfaces and a fixed set of neighbors. To avoid damaging the surface, they have to be grasped from the side, and during placement, their neighbor relations have to be maintained. In this work, we train a reinforcement learning agent that generates smooth end-effector motions to place objects as close as possible next to each other. During the placement, our agent considers neighbor constraints defined in a given layout of the objects while trying to avoid collisions. Our approach learns to place compact object assemblies without the need for predefined spacing between objects as required by traditional methods. We thoroughly evaluated our approach using a two-finger gripper mounted to a robotic arm with six degrees of freedom. The results show that our agent outperforms two baseline approaches in terms of object assembly compactness, thereby reducing the needed space to place the objects according to the given neighbor constraints. On average, our approach reduces the distances between all placed objects by at least 60%, with fewer collisions at the same compactness compared to both baselines.

Learning Goal-Directed Object Pushing in Cluttered Scenes with Location-Based Attention

Mar 26, 2024

Abstract:Non-prehensile planar pushing is a challenging task due to its underactuated nature with hybrid dynamics, where a robot needs to reason about an object's long-term behaviour and contact-switching, while being robust to contact uncertainty. The presence of clutter in the environment further complicates this task, introducing the need to include more sophisticated spatial analysis to avoid collisions. Building upon prior work on reinforcement learning (RL) with multimodal categorical exploration for planar pushing, in this paper we incorporate location-based attention to enable robust navigation through clutter. Unlike previous RL literature addressing this obstacle avoidance pushing task, our framework requires no predefined global paths and considers the target orientation of the manipulated object. Our results demonstrate that the learned policies successfully navigate through a wide range of complex obstacle configurations, including dynamic obstacles, with smooth motions, achieving the desired target object pose. We also validate the transferability of the learned policies to robotic hardware using the KUKA iiwa robot arm.

RHINO-VR Experience: Teaching Mobile Robotics Concepts in an Interactive Museum Exhibit

Mar 22, 2024

Abstract:In 1997, the very first tour guide robot RHINO was deployed in a museum in Germany. With the ability to navigate autonomously through the environment, the robot gave tours to over 2,000 visitors. Today, RHINO itself has become an exhibit and is no longer operational. In this paper, we present RHINO-VR, an interactive museum exhibit using virtual reality (VR) that allows museum visitors to experience the historical robot RHINO in operation in a virtual museum. RHINO-VR, unlike static exhibits, enables users to familiarize themselves with basic mobile robotics concepts without the fear of damaging the exhibit. In the virtual environment, the user is able to interact with RHINO by pointing to a location to which the robot should navigate and observing the corresponding actions of the robot. To include other visitors who cannot use the VR, we provide an external observation view to make RHINO visible to them. We evaluated our system by measuring the frame rate of the VR simulation, comparing the generated virtual 3D models with the originals, and conducting a user study. The user study showed that RHINO-VR improved the visitors' understanding of the robot's functionality and that they would recommend experiencing the VR exhibit to others.

Safe Multi-Agent Reinforcement Learning for Formation Control without Individual Reference Targets

Dec 20, 2023

Abstract:In recent years, formation control of unmanned vehicles has received considerable interest, driven by the progress in autonomous systems and the imperative for multiple vehicles to carry out diverse missions. In this paper, we address the problem of behavior-based formation control of mobile robots, where we use safe multi-agent reinforcement learning (MARL) to ensure the safety of the robots by eliminating all collisions during training and execution. To ensure safety, we implemented distributed model predictive control safety filters to override unsafe actions. We focus on achieving behavior-based formation without having individual reference targets for the robots, and instead use targets for the centroid of the formation. This formulation facilitates the deployment of formation control on real robots and improves the scalability of our approach to more robots. The task cannot be addressed through optimization-based controllers without specific individual reference targets for the robots and information about the relative locations of each robot to the others. That is why, for our formulation, we use MARL to train the robots. Moreover, in order to account for the interactions between the agents, we use attention-based critics to improve the training process. We train the agents in simulation and later demonstrate the resulting behavior of our approach on real Turtlebot robots. We show that despite the agents having very limited information, we can still safely achieve the desired behavior.
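To make the idea of a centroid target concrete, the following is a minimal sketch and not the authors' implementation. It assumes 2D robot positions given as `(x, y)` tuples; the function names `centroid` and `centroid_error` are hypothetical and chosen only for illustration. It shows how a single shared error signal can be computed from the formation's centroid rather than from per-robot reference targets.

```python
import math

def centroid(positions):
    """Mean (x, y) position of all robots in the formation."""
    n = len(positions)
    cx = sum(x for x, _ in positions) / n
    cy = sum(y for _, y in positions) / n
    return (cx, cy)

def centroid_error(positions, target):
    """Euclidean distance between the formation centroid and its target.

    Illustrative assumption: one shared target for the whole formation,
    no individual reference target per robot.
    """
    cx, cy = centroid(positions)
    return math.hypot(target[0] - cx, target[1] - cy)

# Three robots and one target for the formation as a whole.
robots = [(0.0, 0.0), (2.0, 0.0), (1.0, 3.0)]
goal = (4.0, 5.0)
error = centroid_error(robots, goal)
```

Because the error depends only on the mean position, adding a robot changes the input list but not the form of the objective, which is one way to read the scalability claim in the abstract.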

DawnIK: Decentralized Collision-Aware Inverse Kinematics Solver for Heterogeneous Multi-Arm Systems

Jul 24, 2023

Abstract:Although inverse kinematics of serial manipulators is a well-studied problem, challenges still exist in finding smooth feasible solutions that are also collision aware. Furthermore, with collaborative and service robots gaining traction, different robotic systems have to work in close proximity. This means that the current inverse kinematics approaches have to not only avoid collisions with themselves but also collisions with other robot arms. Therefore, we present a novel approach to compute inverse kinematics for serial manipulators that take into account different constraints while trying to reach a desired end-effector position and/or orientation that avoids collisions with themselves and other arms. Unlike other constraint-based approaches, we neither perform expensive inverse Jacobian computations nor do we require arms with redundant degrees of freedom. Instead, we formulate different constraints as weighted cost functions to be optimized by a non-linear optimization solver. Our approach is superior to the state-of-the-art CollisionIK in terms of collision avoidance in the presence of multiple arms in confined spaces with no detected collisions at all in all the experimental scenarios. When the probability of collision is low, our approach shows better performance at trajectory tracking as well. Additionally, our approach is capable of simultaneous yet decentralized control of multiple arms for trajectory tracking in intersecting workspace without any collisions.

One-Shot View Planning for Fast and Complete Unknown Object Reconstruction

Apr 03, 2023

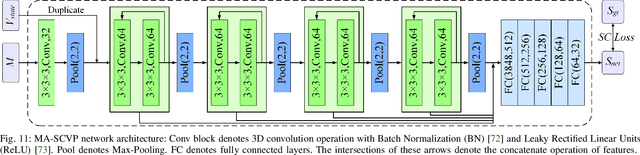

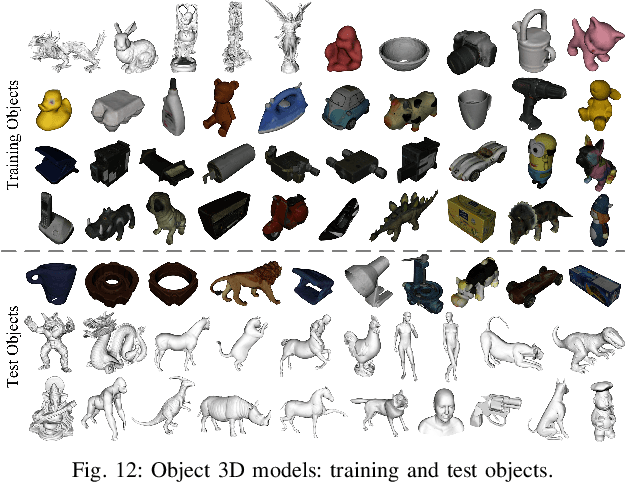

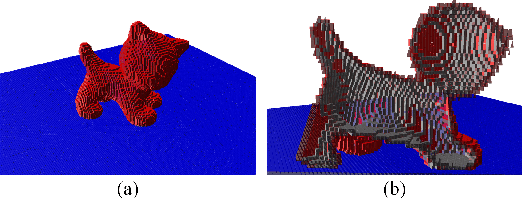

Abstract:Current view planning (VP) systems usually adopt an iterative pipeline with next-best-view (NBV) methods that can autonomously perform 3D reconstruction of unknown objects. However, they are slowed down by local path planning, which is improved by our previously proposed set-covering-based network SCVP using one-shot view planning and global path planning. In this work, we propose a combined pipeline that selects a few NBVs before activating the network to improve model completeness. However, this pipeline will result in more views than expected because the SCVP has not been trained on multiview scenarios. To reduce the overall number of views and paths required, we propose a multiview-activated architecture MA-SCVP and an efficient dataset sampling method for view planning based on a long-tail distribution. Ablation studies confirm the optimal network architecture, the sampling method and the number of samples, the NBV method and the number of NBVs in our combined pipeline. Comparative experiments support the claim that our system achieves faster and more complete reconstruction than state-of-the-art systems. For the reference of the community, we make the source code public.

Viewpoint Push Planning for Mapping of Unknown Confined Spaces

Mar 06, 2023

Abstract:Viewpoint planning is an important task in any application where objects or scenes need to be viewed from different angles to achieve sufficient coverage. The mapping of confined spaces such as shelves is an especially challenging task since objects occlude each other and the scene can only be observed from the front, thus with limited possible viewpoints. In this paper, we propose a deep reinforcement learning framework that generates promising views aiming at reducing the map entropy. Additionally, the pipeline extends standard viewpoint planning by predicting adequate minimally invasive push actions to uncover occluded objects and increase the visible space. Using a 2.5D occupancy height map as state representation that can be efficiently updated, our system decides whether to plan a new viewpoint or perform a push. To learn feasible pushes, we use a neural network to sample push candidates on the map and have human experts manually label them to indicate whether the sampled push is a good action to perform. As simulated and real-world experimental results with a robotic arm show, our system is able to significantly increase the mapped space compared to different baselines, while the executed push actions highly benefit the viewpoint planner with only minor changes to the object configuration.
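As a rough illustration of what "map entropy" can mean for an occupancy map, the sketch below sums the binary Shannon entropy over all cells of a grid of occupancy probabilities. This is a common textbook definition and an assumption here; the abstract does not state the exact formulation used in the paper. The function names `cell_entropy` and `map_entropy` are hypothetical.

```python
import math

def cell_entropy(p):
    """Binary Shannon entropy (in bits) of one cell's occupancy probability."""
    if p <= 0.0 or p >= 1.0:
        return 0.0  # a fully known cell carries no uncertainty
    return -(p * math.log2(p) + (1.0 - p) * math.log2(1.0 - p))

def map_entropy(grid):
    """Sum of per-cell entropies over a 2D grid of occupancy probabilities."""
    return sum(cell_entropy(p) for row in grid for p in row)

# Two unknown cells (p = 0.5) and two known cells (free and occupied).
before = map_entropy([[0.5, 0.5], [0.0, 1.0]])
# After observing one of the unknown cells as free.
after = map_entropy([[0.0, 0.5], [0.0, 1.0]])
```

Under this definition, a view or push that reveals previously unknown cells lowers the total, so the difference `before - after` can serve as a simple measure of how informative an action was.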

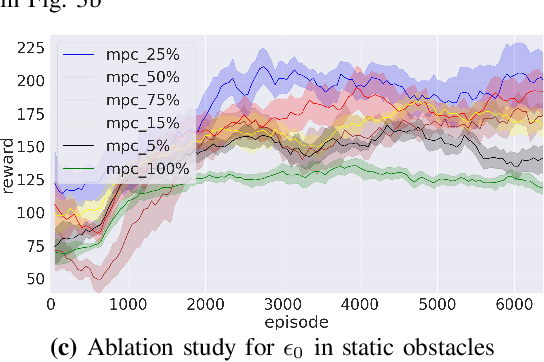

Handling Sparse Rewards in Reinforcement Learning Using Model Predictive Control

Oct 04, 2022

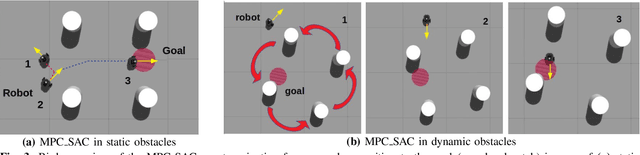

Abstract:Reinforcement learning (RL) has recently shown great success in various domains. Yet, the design of the reward function requires detailed domain expertise and tedious fine-tuning to ensure that agents are able to learn the desired behaviour. Using a sparse reward conveniently mitigates these challenges. However, the sparse reward represents a challenge on its own, often resulting in unsuccessful training of the agent. In this paper, we therefore address the sparse reward problem in RL. Our goal is to find an effective alternative to reward shaping, without using costly human demonstrations, that would also be applicable to a wide range of domains. Hence, we propose to use model predictive control (MPC) as an experience source for training RL agents in sparse reward environments. Without the need for reward shaping, we successfully apply our approach in the field of mobile robot navigation both in simulation and real-world experiments with a Kobuki Turtlebot 2. We furthermore demonstrate great improvement over pure RL algorithms in terms of success rate as well as number of collisions and timeouts. Our experiments show that MPC as an experience source improves the agent's learning process for a given task in the case of sparse rewards.
