Siqi Zhou

University of Toronto Institute for Aerospace Studies, Technical University of Munich

Addressing Relative Degree Issues in Control Barrier Function Synthesis with Physics-Informed Neural Networks

Apr 08, 2025

Abstract:In robotics, control barrier function (CBF)-based safety filters are commonly used to enforce state constraints. A critical challenge arises when the relative degree of the CBF varies across the state space. This variability can create regions within the safe set where the control input becomes unconstrained. When implemented as a safety filter, this may result in chattering near the safety boundary and ultimately compromise system safety. To address this issue, we propose a novel approach for CBF synthesis by formulating it as solving a set of boundary value problems. The solutions to the boundary value problems are determined using physics-informed neural networks (PINNs). Our approach ensures that the synthesized CBFs maintain a constant relative degree across the set of admissible states, thereby preventing unconstrained control scenarios. We illustrate the approach in simulation and further verify it through real-world quadrotor experiments, demonstrating its effectiveness in preserving desired system safety properties.
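To make the relative degree issue concrete, here is a minimal sketch of the standard closed-form CBF safety filter with a single affine constraint, `L_f h + L_g h u >= -alpha h` (the usual minimum-norm CBF-QP). It is not the PINN-based synthesis proposed in the paper; the function name `cbf_filter` and the toy system `x_dot = (x - 0.5) u` with `h(x) = 1 - x` are hypothetical choices for illustration. Where `L_g h` vanishes (here at `x = 0.5`), the input drops out of the constraint and the filter cannot act.

```python
from typing import List, Sequence, Tuple


def cbf_filter(
    u_ref: Sequence[float],
    lf_h: float,
    lg_h: Sequence[float],
    h: float,
    alpha: float = 1.0,
    eps: float = 1e-9,
) -> Tuple[List[float], bool]:
    """Minimally modify u_ref so that lf_h + lg_h . u >= -alpha * h.

    Returns (u, input_affects_constraint). The flag is False when lg_h is
    (numerically) zero, i.e. the input does not appear in the CBF condition.
    """
    norm_sq = sum(g * g for g in lg_h)
    if norm_sq < eps:
        # Relative degree > 1 at this state: the filter cannot constrain u.
        return list(u_ref), False
    # Positive slack means the reference input already satisfies the condition.
    slack = lf_h + sum(g * u for g, u in zip(lg_h, u_ref)) + alpha * h
    if slack >= 0.0:
        return list(u_ref), True
    # Project u_ref onto the half-space {u : lf_h + lg_h . u >= -alpha * h}.
    step = -slack / norm_sq
    return [u + step * g for u, g in zip(u_ref, lg_h)], True


# Hypothetical toy system x_dot = (x - 0.5) * u with h(x) = 1 - x,
# so lf_h = 0 and lg_h = -(x - 0.5).
for x in (0.9, 0.5):
    u, active = cbf_filter([5.0], lf_h=0.0, lg_h=[-(x - 0.5)], h=1.0 - x)
    print(f"x={x}: u={u}, input affects constraint: {active}")
```

At `x = 0.9` the reference input is scaled back to satisfy the condition with equality; at `x = 0.5` the input passes through untouched because the constraint no longer depends on it, which is the kind of unconstrained region the abstract describes.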

SwarmGPT-Primitive: A Language-Driven Choreographer for Drone Swarms Using Safe Motion Primitive Composition

Dec 11, 2024

Abstract:Catalyzed by advancements in hardware and software, drone performances are increasingly making their mark in the entertainment industry. However, designing smooth and safe choreographies for drone swarms is complex and often requires expert domain knowledge. In this work, we introduce SwarmGPT-Primitive, a language-based choreographer that integrates the reasoning capabilities of large language models (LLMs) with safe motion planning to facilitate deployable drone swarm choreographies. The LLM composes choreographies for a given piece of music by utilizing a library of motion primitives; the language-based choreographer is augmented with an optimization-based safety filter, which certifies the choreography for real-world deployment by making minimal adjustments when feasibility and safety constraints are violated. The overall SwarmGPT-Primitive framework decouples choreographic design from safe motion planning, which allows non-expert users to re-prompt and refine compositions without concerns about compliance with constraints such as avoiding collisions or downwash effects or satisfying actuation limits. We demonstrate our approach through simulations and experiments with swarms of up to 20 drones performing choreographies designed based on various songs, highlighting the system's ability to generate effective and synchronized drone choreographies for real-world deployment.
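Certification starts by detecting where a candidate choreography violates its constraints. The sketch below covers only that checking step, not the optimization that makes the minimal adjustments: it flags drone pairs that come too close, using an ellipsoidal distance that stretches the vertical axis as a simple, commonly used downwash model, and drones that exceed a speed limit between consecutive waypoints. The function names, the `z_scale` factor, and all limits are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]
Violation = Tuple[str, int, int, int]  # (kind, frame index, drone i, drone j)


def ellipsoidal_distance(a: Point, b: Point, z_scale: float) -> float:
    """Vertical offsets are divided by z_scale, so with z_scale > 1 vertically
    stacked drones need z_scale times more separation (simple downwash model)."""
    dx, dy, dz = a[0] - b[0], a[1] - b[1], (a[2] - b[2]) / z_scale
    return math.sqrt(dx * dx + dy * dy + dz * dz)


def find_violations(frames: Sequence[Sequence[Point]], dt: float, d_min: float,
                    v_max: float, z_scale: float = 2.0) -> List[Violation]:
    """frames[k][i] is the waypoint of drone i at time step k (spacing dt seconds)."""
    violations: List[Violation] = []
    # Pairwise separation at every frame.
    for k, frame in enumerate(frames):
        for i in range(len(frame)):
            for j in range(i + 1, len(frame)):
                if ellipsoidal_distance(frame[i], frame[j], z_scale) < d_min:
                    violations.append(("separation", k, i, j))
    # Average speed of each drone between consecutive frames.
    for k in range(1, len(frames)):
        for i, (prev, curr) in enumerate(zip(frames[k - 1], frames[k])):
            if math.dist(prev, curr) / dt > v_max:
                violations.append(("speed", k, i, i))
    return violations


frames = [[(0.0, 0.0, 1.0), (1.0, 0.0, 1.0)], [(0.0, 0.0, 1.0), (0.2, 0.0, 1.0)]]
print(find_violations(frames, dt=1.0, d_min=0.5, v_max=1.0))
```

A safety filter in the sense of the abstract would then solve for the smallest waypoint changes that make this list empty.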

Semantically Safe Robot Manipulation: From Semantic Scene Understanding to Motion Safeguards

Oct 19, 2024

Abstract:Ensuring safe interactions in human-centric environments requires robots to understand and adhere to constraints recognized by humans as "common sense" (e.g., "moving a cup of water above a laptop is unsafe as the water may spill" or "rotating a cup of water is unsafe as it can lead to pouring its content"). Recent advances in computer vision and machine learning have enabled robots to acquire a semantic understanding of and reason about their operating environments. While extensive literature on safe robot decision-making exists, semantic understanding is rarely integrated into these formulations. In this work, we propose a semantic safety filter framework to certify robot inputs with respect to semantically defined constraints (e.g., unsafe spatial relationships, behaviours, and poses) and geometrically defined constraints (e.g., environment-collision and self-collision constraints). In our proposed approach, given perception inputs, we build a semantic map of the 3D environment and leverage the contextual reasoning capabilities of large language models to infer semantically unsafe conditions. These semantically unsafe conditions are then mapped to safe actions through a control barrier certification formulation. We evaluated our semantic safety filter approach in teleoperated tabletop manipulation tasks and pick-and-place tasks, demonstrating its effectiveness in incorporating semantic constraints to ensure safe robot operation beyond collision avoidance.
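As a hypothetical illustration of mapping a semantic rule such as "do not move a cup of water above a laptop" to a control barrier constraint, the sketch below keeps the cup's horizontal position outside a disk around the laptop, `h(p) = ||p_xy - c_xy||^2 - r^2`, assuming the cup follows single-integrator dynamics `p_dot = u` (a commanded velocity). The disk model, the dynamics, and all names are assumptions; the paper's actual constraint formulation may differ.

```python
from typing import Sequence, Tuple


def not_above_barrier(p: Sequence[float], center: Sequence[float], radius: float):
    """h >= 0 when the cup's horizontal projection is outside the laptop disk."""
    dx, dy = p[0] - center[0], p[1] - center[1]
    h = dx * dx + dy * dy - radius * radius
    grad = (2.0 * dx, 2.0 * dy, 0.0)  # height does not enter this constraint
    return h, grad


def filter_velocity(u_ref: Sequence[float], p: Sequence[float],
                    center: Sequence[float], radius: float,
                    alpha: float = 1.0) -> Tuple[float, ...]:
    """Minimally change u_ref so that grad_h . u >= -alpha * h (single integrator)."""
    h, grad = not_above_barrier(p, center, radius)
    slack = sum(g * u for g, u in zip(grad, u_ref)) + alpha * h
    norm_sq = sum(g * g for g in grad)
    # The gradient vanishes only at the disk centre, deep inside the unsafe region.
    if slack >= 0.0 or norm_sq == 0.0:
        return tuple(u_ref)
    step = -slack / norm_sq
    return tuple(u + step * g for u, g in zip(u_ref, grad))


# Cup 0.5 m from a laptop centred at the origin (0.3 m radius), commanded toward it.
print(filter_velocity((-1.0, 0.0, 0.0), (0.5, 0.0, 0.2), (0.0, 0.0), 0.3))
```

The approaching velocity is slowed rather than reversed, and a command moving the cup away from the laptop passes through unchanged.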

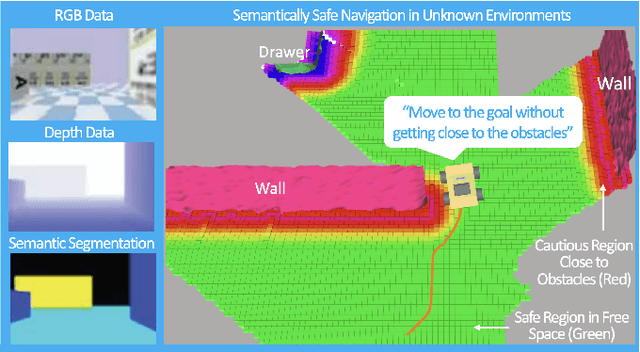

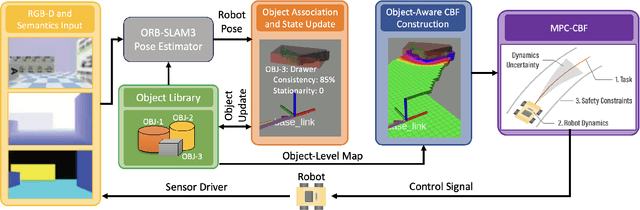

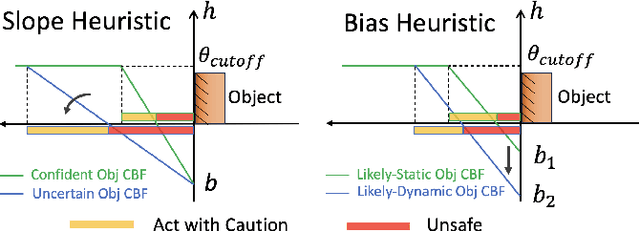

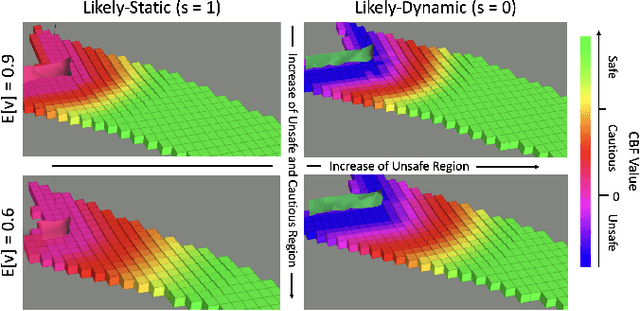

Closing the Perception-Action Loop for Semantically Safe Navigation in Semi-Static Environments

Apr 22, 2024

Abstract:Autonomous robots navigating in changing environments demand adaptive navigation strategies for safe long-term operation. While many modern control paradigms offer theoretical guarantees, they often assume known extrinsic safety constraints, overlooking challenges when deployed in real-world environments where objects can appear, disappear, and shift over time. In this paper, we present a closed-loop perception-action pipeline that bridges this gap. Our system encodes an online-constructed dense map, along with object-level semantic and consistency estimates into a control barrier function (CBF) to regulate safe regions in the scene. A model predictive controller (MPC) leverages the CBF-based safety constraints to adapt its navigation behaviour, which is particularly crucial when potential scene changes occur. We test the system in simulations and real-world experiments to demonstrate the impact of semantic information and scene change handling on robot behavior, validating the practicality of our approach.
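A common way to use a CBF inside an MPC is to impose the discrete-time condition `h(x_{k+1}) >= (1 - gamma) h(x_k)`, with `0 < gamma <= 1`, at every step of the prediction horizon. The sketch below only checks this condition along a predicted 2D path for a single circular region; the circular barrier, `gamma`, and the function names are assumptions, and the paper's map-based CBF with semantic and consistency estimates is not reproduced here.

```python
from typing import Sequence, Tuple

Point2 = Tuple[float, float]


def circle_barrier(p: Point2, center: Point2, radius: float) -> float:
    """h(p) >= 0 outside a circular unsafe region."""
    return (p[0] - center[0]) ** 2 + (p[1] - center[1]) ** 2 - radius ** 2


def satisfies_dcbf(path: Sequence[Point2], center: Point2, radius: float,
                   gamma: float) -> bool:
    """Check h(x_{k+1}) >= (1 - gamma) * h(x_k) along a predicted path."""
    hs = [circle_barrier(p, center, radius) for p in path]
    return all(h_next >= (1.0 - gamma) * h for h, h_next in zip(hs, hs[1:]))


# A straight approach toward an obstacle of radius 1 at the origin.
path = [(3.0, 0.0), (2.5, 0.0), (2.0, 0.0)]
print(satisfies_dcbf(path, (0.0, 0.0), 1.0, gamma=0.5))  # True
print(satisfies_dcbf(path, (0.0, 0.0), 1.0, gamma=0.2))  # False
```

A smaller `gamma` only lets `h` decay slowly, so the same approach is rejected for `gamma = 0.2`. In an MPC this condition would enter the optimization as a constraint on the predicted states rather than as a post-hoc check.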

Is Data All That Matters? The Role of Control Frequency for Learning-Based Sampled-Data Control of Uncertain Systems

Mar 14, 2024

Abstract:Learning models or control policies from data has become a powerful tool to improve the performance of uncertain systems. While a strong focus has been placed on increasing the amount and quality of data to improve performance, data can never fully eliminate uncertainty, making feedback necessary to ensure stability and performance. We show that the control frequency at which the input is recalculated is a crucial design parameter, yet it has hardly been considered before. We address this gap by combining probabilistic model learning and sampled-data control. We use Gaussian processes (GPs) to learn a continuous-time model and compute a corresponding discrete-time controller. The result is an uncertain sampled-data control system, for which we derive robust stability conditions. We formulate semidefinite programs to compute the minimum control frequency required for stability and to optimize performance. As a result, our approach enables us to study the effect of both control frequency and data on stability and closed-loop performance. We show in numerical simulations of a quadrotor that performance can be improved by increasing either the amount of data or the control frequency, and that we can trade off one for the other. For example, by increasing the control frequency by 33%, we can reduce the number of data points by half while still achieving similar performance.
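The role of the sampling period already shows up on a scalar linear system. The sketch below discretizes `x_dot = a x + b u` with a zero-order hold at period `T`, applies the feedback `u_k = -k x_k`, and searches for the largest stable period, requiring stability for several sampled values of the uncertain parameter `a` (a crude stand-in for a GP confidence region). It replaces the paper's semidefinite programs with a grid search and is only an illustration; all names and numbers are hypothetical.

```python
import math
from typing import Sequence


def closed_loop_pole(a: float, b: float, k: float, T: float) -> float:
    """Discrete-time pole of x_dot = a*x + b*u under zero-order hold and u_k = -k*x_k."""
    ad = math.exp(a * T)
    bd = (ad - 1.0) / a * b if a != 0.0 else b * T
    return ad - bd * k


def is_stable(a_samples: Sequence[float], b: float, k: float, T: float) -> bool:
    """Stable if the pole lies inside the unit circle for every sampled a."""
    return all(abs(closed_loop_pole(a, b, k, T)) < 1.0 for a in a_samples)


def max_sampling_period(a_samples: Sequence[float], b: float, k: float,
                        T_max: float = 5.0, steps: int = 100_000) -> float:
    """Largest grid period T such that every grid period up to T is stable."""
    best = 0.0
    for i in range(1, steps + 1):
        T = T_max * i / steps
        if not is_stable(a_samples, b, k, T):
            break
        best = T
    return best


T_nominal = max_sampling_period([1.0], b=1.0, k=3.0)
T_robust = max_sampling_period([0.8, 1.0, 1.2], b=1.0, k=3.0)
print(f"nominal: T < {T_nominal:.3f} s, robust: T < {T_robust:.3f} s")
```

For `a = 1`, `b = 1`, `k = 3` the discrete pole is `3 - 2 e^T`, so the nominal bound is `T < ln 2` (about 0.69 s, i.e. a control frequency of roughly 1.44 Hz or more). Adding more uncertain samples of `a` can only shrink the admissible period.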

Control-Barrier-Aided Teleoperation with Visual-Inertial SLAM for Safe MAV Navigation in Complex Environments

Mar 07, 2024

Abstract:In this paper, we consider a Micro Aerial Vehicle (MAV) system teleoperated by a non-expert and introduce a perceptive safety filter that leverages Control Barrier Functions (CBFs) in conjunction with Visual-Inertial Simultaneous Localization and Mapping (VI-SLAM) and dense 3D occupancy mapping to guarantee safe navigation in complex and unstructured environments. Our system relies solely on onboard IMU measurements, stereo infrared images, and depth images and autonomously corrects teleoperated inputs when they are deemed unsafe. We define a point in 3D space as unsafe if it satisfies either of two conditions: (i) it is occupied by an obstacle, or (ii) it remains unmapped. At each time step, an occupancy map of the environment is updated by the VI-SLAM by fusing the onboard measurements, and a CBF is constructed to parameterize the (un)safe region in the 3D space. Given the CBF and state feedback from the VI-SLAM module, a safety filter computes a certified reference that best matches the teleoperation input while satisfying the safety constraint encoded by the CBF. In contrast to existing perception-based safe control frameworks, we directly close the perception-action loop and demonstrate the full capability of safe control in combination with real-time VI-SLAM without any external infrastructure or prior knowledge of the environment. We verify the efficacy of the perceptive safety filter in real-time MAV experiments using exclusively onboard sensing and computation and show that the teleoperated MAV is able to safely navigate through unknown environments despite arbitrary inputs sent by the teleoperator.
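The unsafe-set definition above (occupied or unmapped) can be turned into a barrier value in a simple way. The sketch below computes `h(p)` as the distance from a 2D position to the nearest non-free cell centre minus the robot radius, treating occupied and unknown cells alike. The brute-force search, the cell encoding, the indexing convention (`grid[i][j]` covers x from index `i` and y from index `j`), and the function name are assumptions for illustration; the paper's CBF construction from the VI-SLAM occupancy map may differ.

```python
import math
from typing import Sequence, Tuple

FREE, OCCUPIED, UNKNOWN = 0, 1, -1  # hypothetical cell encoding


def barrier_from_grid(grid: Sequence[Sequence[int]], p: Tuple[float, float],
                      resolution: float, robot_radius: float) -> float:
    """h(p) = distance to the nearest non-free cell centre minus the robot radius.

    Both occupied and unmapped cells count as unsafe; h is infinite if the
    whole grid is free.
    """
    best = math.inf
    for i, row in enumerate(grid):
        for j, cell in enumerate(row):
            if cell != FREE:
                cx, cy = (i + 0.5) * resolution, (j + 0.5) * resolution
                best = min(best, math.hypot(p[0] - cx, p[1] - cy))
    return best - robot_radius


grid = [
    [FREE, FREE, FREE],
    [FREE, FREE, FREE],
    [FREE, FREE, UNKNOWN],  # an unmapped cell is treated as unsafe
]
print(barrier_from_grid(grid, (0.5, 0.5), resolution=1.0, robot_radius=0.5))
```

A real-time implementation would typically use a distance transform instead of scanning every cell.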

An EnKF-LSTM Assimilation Algorithm for Crop Growth Model

Mar 06, 2024

Abstract:Accurate and timely prediction of crop growth is of great significance for ensuring crop yields, and researchers have developed several crop models for predicting crop growth. However, there are large differences between the simulation results obtained by the crop models and the actual results. In this paper, we therefore propose combining the simulation results with collected crop data through data assimilation to improve prediction accuracy. Specifically, we propose an EnKF-LSTM data assimilation method for various crops that combines an ensemble Kalman filter with an LSTM neural network, which effectively avoids the overfitting problem of existing data assimilation methods and eliminates the uncertainty of the measured data. The proposed EnKF-LSTM method was verified, and compared with other data assimilation methods, using datasets collected by sensor equipment deployed on a farm.
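For readers unfamiliar with the ensemble Kalman filter, the sketch below shows a textbook stochastic EnKF analysis step for a scalar state that is measured directly. Each ensemble member (for example, one crop-model simulation) is moved toward a perturbed copy of the observation by a Kalman gain computed from the ensemble spread. The LSTM component of the proposed method is not shown, and the function name and numbers are hypothetical.

```python
import random
from statistics import mean, variance
from typing import List


def enkf_update(ensemble: List[float], observation: float, obs_var: float,
                rng: random.Random) -> List[float]:
    """Stochastic EnKF analysis step for a scalar state with observation operator H = 1."""
    p = variance(ensemble)        # forecast variance estimated from the ensemble
    gain = p / (p + obs_var)      # scalar Kalman gain
    obs_std = obs_var ** 0.5
    # Each member sees its own perturbed observation so the analysis spread
    # matches the Kalman posterior variance in expectation.
    return [x + gain * (observation + rng.gauss(0.0, obs_std) - x) for x in ensemble]


rng = random.Random(0)
forecast = [rng.gauss(10.0, 2.0) for _ in range(500)]  # hypothetical model forecasts
analysis = enkf_update(forecast, observation=14.0, obs_var=1.0, rng=rng)
print(round(mean(forecast), 2), round(mean(analysis), 2))
```

With forecast variance near 4 and observation variance 1, the gain is about 0.8, so the analysis mean lands near 13.2 and the ensemble spread shrinks to roughly 0.8.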

Safe Multi-Agent Reinforcement Learning for Formation Control without Individual Reference Targets

Dec 20, 2023

Abstract:In recent years, formation control of unmanned vehicles has received considerable interest, driven by progress in autonomous systems and the need for multiple vehicles to carry out diverse missions. In this paper, we address the problem of behavior-based formation control of mobile robots, where we use safe multi-agent reinforcement learning (MARL) to ensure the safety of the robots by eliminating all collisions during training and execution. To this end, we implement distributed model predictive control safety filters that override unsafe actions. We focus on achieving behavior-based formation without individual reference targets for the robots, and instead use targets for the centroid of the formation. This formulation facilitates the deployment of formation control on real robots and improves the scalability of our approach to more robots. Optimization-based controllers cannot address this task without individual reference targets for the robots and information about the location of each robot relative to the others; we therefore use MARL to train the robots. Moreover, to account for the interactions between the agents, we use attention-based critics to improve the training process. We train the agents in simulation and then demonstrate the resulting behavior on real Turtlebot robots. We show that, despite the agents having very limited information, we can still safely achieve the desired behavior.
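Because the robots receive a target only for the formation centroid, a shared objective can be expressed in terms of the centroid alone. The sketch below is a hypothetical reward term of that kind (the negative distance between the centroid and its target); the paper's actual reward, observations, and MPC safety filter are not reproduced.

```python
import math
from typing import Sequence, Tuple

Point2 = Tuple[float, float]


def centroid(positions: Sequence[Point2]) -> Point2:
    """Mean position of all robots in the formation."""
    n = len(positions)
    return (sum(p[0] for p in positions) / n, sum(p[1] for p in positions) / n)


def centroid_reward(positions: Sequence[Point2], target: Point2) -> float:
    """Shared reward: negative distance between the formation centroid and its target."""
    cx, cy = centroid(positions)
    return -math.hypot(cx - target[0], cy - target[1])


robots = [(0.0, 0.0), (2.0, 0.0), (1.0, 3.0)]
print(centroid(robots), centroid_reward(robots, (4.0, 5.0)))  # (1.0, 1.0) -5.0
```

Since many individual placements share the same centroid, a term like this leaves the robots free to organize themselves, which is why an individual-target controller cannot be applied directly.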

Optimized Control Invariance Conditions for Uncertain Input-Constrained Nonlinear Control Systems

Dec 15, 2023

Abstract:Providing safety guarantees for learning-based controllers is important for real-world applications. One approach to realizing safety for arbitrary control policies is safety filtering. If necessary, the filter modifies control inputs to ensure that the trajectories of a closed-loop system stay within a given state constraint set for all future time, referred to as the set being positive invariant or the system being safe. Under the assumption of fully known dynamics, safety can be certified using control barrier functions (CBFs). However, the dynamics model is often either unknown or only partially known in practice. Learning-based methods have been proposed to approximate the CBF condition for unknown or uncertain systems from data; however, these techniques do not account for input constraints and, as a result, may not yield a valid CBF condition to render the safe set invariant. In this work, we study conditions that guarantee control invariance of the system under input constraints and propose an optimization problem to reduce the conservativeness of CBF-based safety filters. Building on these theoretical insights, we further develop a probabilistic learning approach that allows us to build a safety filter that guarantees safety for uncertain, input-constrained systems with high probability. We demonstrate the efficacy of our proposed approach in simulation and real-world experiments on a quadrotor and show that we can achieve safe closed-loop behavior for a learned system while satisfying state and input constraints.
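Under input constraints, a CBF condition only helps if some admissible input satisfies it. For control-affine dynamics `x_dot = f(x) + g(x) u` with box bounds `u_min <= u <= u_max`, the largest achievable value of `L_g h u` is attained componentwise at a bound, so feasibility at a given state can be checked in closed form; `h` is a valid CBF for that input set when the check holds at every state of the safe set. This sketch assumes a linear class-K function `alpha h` and box-shaped bounds, and it does not include the paper's optimization for reducing conservativeness or its probabilistic learning approach. The function names are hypothetical.

```python
from typing import Sequence


def max_lgh_u(lg_h: Sequence[float], u_min: Sequence[float],
              u_max: Sequence[float]) -> float:
    """Maximum of lg_h . u over the box u_min <= u <= u_max (attained at a vertex)."""
    return sum(max(g * lo, g * hi) for g, lo, hi in zip(lg_h, u_min, u_max))


def cbf_condition_feasible(lf_h: float, lg_h: Sequence[float], h: float,
                           u_min: Sequence[float], u_max: Sequence[float],
                           alpha: float) -> bool:
    """True if some admissible input satisfies lf_h + lg_h . u >= -alpha * h."""
    return lf_h + max_lgh_u(lg_h, u_min, u_max) >= -alpha * h


# Drift pushes toward the boundary faster than the bounded input can counteract.
print(cbf_condition_feasible(-3.0, [1.0], 0.5, [-1.0], [1.0], alpha=1.0))  # False
print(cbf_condition_feasible(-3.0, [1.0], 0.5, [-1.0], [1.0], alpha=5.0))  # True
```

The two calls differ only in `alpha`, which shows how the choice of class-K function decides whether the condition is satisfiable at a given state under the same input bounds.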

Swarm-GPT: Combining Large Language Models with Safe Motion Planning for Robot Choreography Design

Dec 02, 2023

Abstract:This paper presents Swarm-GPT, a system that integrates large language models (LLMs) with safe swarm motion planning, offering an automated and novel approach to deployable drone swarm choreography. Swarm-GPT enables users to automatically generate synchronized drone performances through natural language instructions. With an emphasis on safety and creativity, Swarm-GPT addresses a critical gap in the field of drone choreography by integrating the creative power of generative models with the effectiveness and safety of model-based planning algorithms. This goal is achieved by prompting the LLM to generate a unique set of waypoints based on extracted audio data. A trajectory planner processes these waypoints to guarantee collision-free and feasible motion. Results can be viewed in simulation prior to execution and modified through dynamic re-prompting. Sim-to-real transfer experiments demonstrate Swarm-GPT's ability to accurately replicate simulated drone trajectories, with a mean sim-to-real root mean square error (RMSE) of 28.7 mm. To date, Swarm-GPT has been successfully showcased at three live events, exemplifying safe real-world deployment of pre-trained models.
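A sim-to-real RMSE of this kind can be computed as follows, assuming time-aligned simulated and measured positions in metres: the RMSE of the Euclidean position error for each drone, then averaged over drones. The averaging scheme and function names are assumptions; the abstract does not specify how the mean was taken.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]


def rmse(sim: Sequence[Point], real: Sequence[Point]) -> float:
    """Root mean square of the Euclidean position error over time-aligned samples."""
    if len(sim) != len(real) or not sim:
        raise ValueError("trajectories must be non-empty and time-aligned")
    return math.sqrt(sum(math.dist(p, q) ** 2 for p, q in zip(sim, real)) / len(sim))


def mean_rmse(sim_swarm: Sequence[Sequence[Point]],
              real_swarm: Sequence[Sequence[Point]]) -> float:
    """Average of the per-drone RMSE values."""
    per_drone: List[float] = [rmse(s, r) for s, r in zip(sim_swarm, real_swarm)]
    return sum(per_drone) / len(per_drone)


sim = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
real = [(0.0, 0.0, 0.003), (1.0, 0.004, 0.0)]  # hypothetical measurements
print(f"{rmse(sim, real) * 1000:.2f} mm")
```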
