João Moura

Online Estimation and Manipulation of Articulated Objects

Jan 04, 2026Abstract:From refrigerators to kitchen drawers, humans interact with articulated objects effortlessly every day while completing household chores. For automating these tasks, service robots must be capable of manipulating arbitrary articulated objects. Recent deep learning methods have been shown to predict valuable priors on the affordance of articulated objects from vision. In contrast, many other works estimate object articulations by observing the articulation motion, but this requires the robot to already be capable of manipulating the object. In this article, we propose a novel approach combining these methods by using a factor graph for online estimation of articulation which fuses learned visual priors and proprioceptive sensing during interaction into an analytical model of articulation based on Screw Theory. With our method, a robotic system makes an initial prediction of articulation from vision before touching the object, and then quickly updates the estimate from kinematic and force sensing during manipulation. We evaluate our method extensively in both simulations and real-world robotic manipulation experiments. We demonstrate several closed-loop estimation and manipulation experiments in which the robot was capable of opening previously unseen drawers. In real hardware experiments, the robot achieved a 75% success rate for autonomous opening of unknown articulated objects.

Fast Flow-based Visuomotor Policies via Conditional Optimal Transport Couplings

May 02, 2025Abstract:Diffusion and flow matching policies have recently demonstrated remarkable performance in robotic applications by accurately capturing multimodal robot trajectory distributions. However, their computationally expensive inference, due to the numerical integration of an ODE or SDE, limits their applicability as real-time controllers for robots. We introduce a methodology that utilizes conditional Optimal Transport couplings between noise and samples to enforce straight solutions in the flow ODE for robot action generation tasks. We show that naively coupling noise and samples fails in conditional tasks and propose incorporating condition variables into the coupling process to improve few-step performance. The proposed few-step policy achieves a 4% higher success rate with a 10x speed-up compared to Diffusion Policy on a diverse set of simulation tasks. Moreover, it produces high-quality and diverse action trajectories within 1-2 steps on a set of real-world robot tasks. Our method also retains the same training complexity as Diffusion Policy and vanilla Flow Matching, in contrast to distillation-based approaches.

Learning Visuotactile Estimation and Control for Non-prehensile Manipulation under Occlusions

Dec 17, 2024

Abstract:Manipulation without grasping, known as non-prehensile manipulation, is essential for dexterous robots in contact-rich environments, but presents many challenges relating with underactuation, hybrid-dynamics, and frictional uncertainty. Additionally, object occlusions in a scenario of contact uncertainty and where the motion of the object evolves independently from the robot becomes a critical problem, which previous literature fails to address. We present a method for learning visuotactile state estimators and uncertainty-aware control policies for non-prehensile manipulation under occlusions, by leveraging diverse interaction data from privileged policies trained in simulation. We formulate the estimator within a Bayesian deep learning framework, to model its uncertainty, and then train uncertainty-aware control policies by incorporating the pre-learned estimator into the reinforcement learning (RL) loop, both of which lead to significantly improved estimator and policy performance. Therefore, unlike prior non-prehensile research that relies on complex external perception set-ups, our method successfully handles occlusions after sim-to-real transfer to robotic hardware with a simple onboard camera. See our video: https://youtu.be/hW-C8i_HWgs.

An Efficient Representation of Whole-body Model Predictive Control for Online Compliant Dual-arm Mobile Manipulation

Oct 30, 2024

Abstract:Dual-arm mobile manipulators can transport and manipulate large-size objects with simple end-effectors. To interact with dynamic environments with strict safety and compliance requirements, achieving whole-body motion planning online while meeting various hard constraints for such highly redundant mobile manipulators poses a significant challenge. We tackle this challenge by presenting an efficient representation of whole-body motion trajectories within our bilevel model-based predictive control (MPC) framework. We utilize B\'ezier-curve parameterization to represent the optimized collision-free trajectories of two collaborating end-effectors in the first MPC, facilitating fast long-horizon object-oriented motion planning in SE(3) while considering approximated feasibility constraints. This approach is further applied to parameterize whole-body trajectories in the second MPC for whole-body motion generation with predictive admittance control in a relatively short horizon while satisfying whole-body hard constraints. This representation enables two MPCs with continuous properties, thereby avoiding inaccurate model-state transition and dense decision-variable settings in existing MPCs using the discretization method. It strengthens the online execution of the bilevel MPC framework in high-dimensional space and facilitates the generation of consistent commands for our hybrid position/velocity-controlled robot. The simulation comparisons and real-world experiments demonstrate the efficiency and robustness of this approach in various scenarios for static and dynamic obstacle avoidance, and compliant interaction control with the manipulated object and external disturbances.

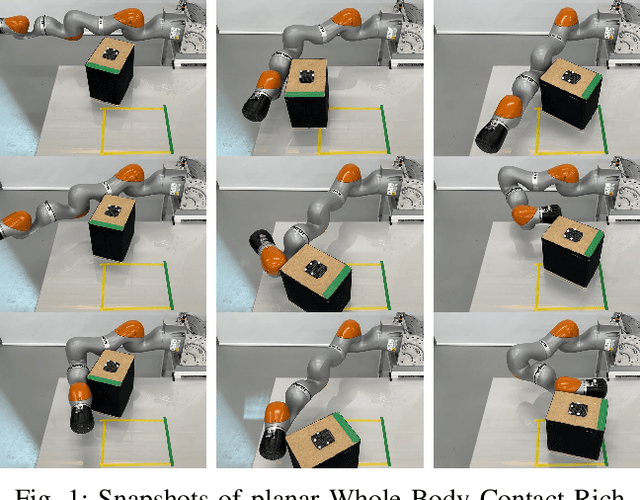

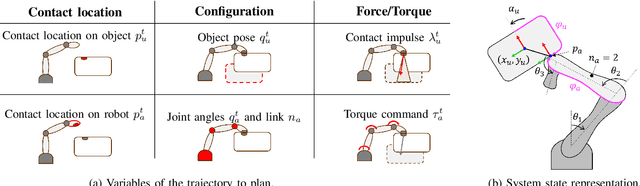

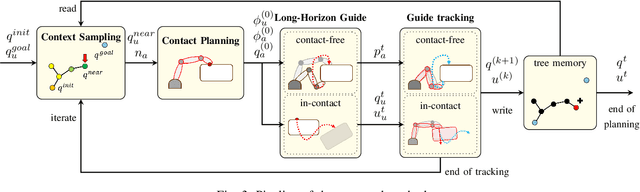

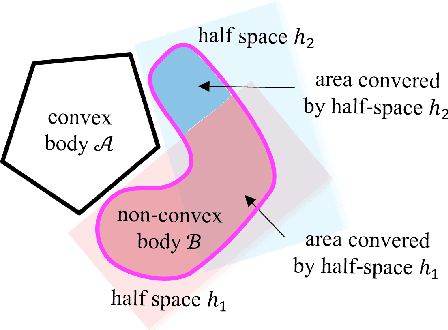

Explicit Contact Optimization in Whole-Body Contact-Rich Manipulation

Aug 28, 2024

Abstract:Humans can exploit contacts anywhere on their body surface to manipulate large and heavy items, objects normally out of reach or multiple objects at once. However, such manipulation through contacts using the whole surface of the body remains extremely challenging to achieve on robots. This can be labelled as Whole-Body Contact-Rich Manipulation (WBCRM) problem. In addition to the high-dimensionality of the Contact-Rich Manipulation problem due to the combinatorics of contact modes, admitting contact creation anywhere on the body surface adds complexity, which hinders planning of manipulation within a reasonable time. We address this computational problem by formulating the contact and motion planning of planar WBCRM as hierarchical continuous optimization problems. To enable this formulation, we propose a novel continuous explicit representation of the robot surface, that we believe to be foundational for future research using continuous optimization for WBCRM. Our results demonstrate a significant improvement of convergence, planning time and feasibility - with, on the average, 99% less iterations and 96% reduction in time to find a solution over considered scenarios, without recourse to prone-to-failure trajectory refinement steps.

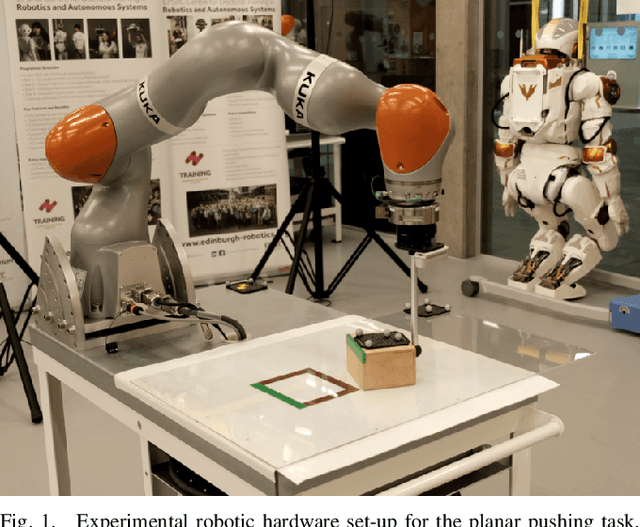

Learning Goal-Directed Object Pushing in Cluttered Scenes with Location-Based Attention

Mar 26, 2024Abstract:Non-prehensile planar pushing is a challenging task due to its underactuated nature with hybrid-dynamics, where a robot needs to reason about an object's long-term behaviour and contact-switching, while being robust to contact uncertainty. The presence of clutter in the environment further complicates this task, introducing the need to include more sophisticated spatial analysis to avoid collisions. Building upon prior work on reinforcement learning (RL) with multimodal categorical exploration for planar pushing, in this paper we incorporate location-based attention to enable robust navigation through clutter. Unlike previous RL literature addressing this obstacle avoidance pushing task, our framework requires no predefined global paths and considers the target orientation of the manipulated object. Our results demonstrate that the learned policies successfully navigate through a wide range of complex obstacle configurations, including dynamic obstacles, with smooth motions, achieving the desired target object pose. We also validate the transferability of the learned policies to robotic hardware using the KUKA iiwa robot arm.

Impact-Aware Bimanual Catching of Large-Momentum Objects

Mar 25, 2024

Abstract:This paper investigates one of the most challenging tasks in dynamic manipulation -- catching large-momentum moving objects. Beyond the realm of quasi-static manipulation, dealing with highly dynamic objects can significantly improve the robot's capability of interacting with its surrounding environment. Yet, the inevitable motion mismatch between the fast moving object and the approaching robot will result in large impulsive forces, which lead to the unstable contacts and irreversible damage to both the object and the robot. To address the above problems, we propose an online optimization framework to: 1) estimate and predict the linear and angular motion of the object; 2) search and select the optimal contact locations across every surface of the object to mitigate impact through sequential quadratic programming (SQP); 3) simultaneously optimize the end-effector motion, stiffness, and contact force for both robots using multi-mode trajectory optimization (MMTO); and 4) realise the impact-aware catching motion on the compliant robotic system based on indirect force controller. We validate the impulse distribution, contact selection, and impact-aware MMTO algorithms in simulation and demonstrate the benefits of the proposed framework in real-world experiments including catching large-momentum moving objects with well-defined motion, constrained motion and free-flying motion.

Online Estimation of Articulated Objects with Factor Graphs using Vision and Proprioceptive Sensing

Sep 28, 2023Abstract:From dishwashers to cabinets, humans interact with articulated objects every day, and for a robot to assist in common manipulation tasks, it must learn a representation of articulation. Recent deep learning learning methods can provide powerful vision-based priors on the affordance of articulated objects from previous, possibly simulated, experiences. In contrast, many works estimate articulation by observing the object in motion, requiring the robot to already be interacting with the object. In this work, we propose to use the best of both worlds by introducing an online estimation method that merges vision-based affordance predictions from a neural network with interactive kinematic sensing in an analytical model. Our work has the benefit of using vision to predict an articulation model before touching the object, while also being able to update the model quickly from kinematic sensing during the interaction. In this paper, we implement a full system using shared autonomy for robotic opening of articulated objects, in particular objects in which the articulation is not apparent from vision alone. We implemented our system on a real robot and performed several autonomous closed-loop experiments in which the robot had to open a door with unknown joint while estimating the articulation online. Our system achieved an 80% success rate for autonomous opening of unknown articulated objects.

Few-Shot Learning of Force-Based Motions From Demonstration Through Pre-training of Haptic Representation

Sep 08, 2023

Abstract:In many contact-rich tasks, force sensing plays an essential role in adapting the motion to the physical properties of the manipulated object. To enable robots to capture the underlying distribution of object properties necessary for generalising learnt manipulation tasks to unseen objects, existing Learning from Demonstration (LfD) approaches require a large number of costly human demonstrations. Our proposed semi-supervised LfD approach decouples the learnt model into an haptic representation encoder and a motion generation decoder. This enables us to pre-train the first using large amount of unsupervised data, easily accessible, while using few-shot LfD to train the second, leveraging the benefits of learning skills from humans. We validate the approach on the wiping task using sponges with different stiffness and surface friction. Our results demonstrate that pre-training significantly improves the ability of the LfD model to recognise physical properties and generate desired wiping motions for unseen sponges, outperforming the LfD method without pre-training. We validate the motion generated by our semi-supervised LfD model on the physical robot hardware using the KUKA iiwa robot arm. We also validate that the haptic representation encoder, pre-trained in simulation, captures the properties of real objects, explaining its contribution to improving the generalisation of the downstream task.

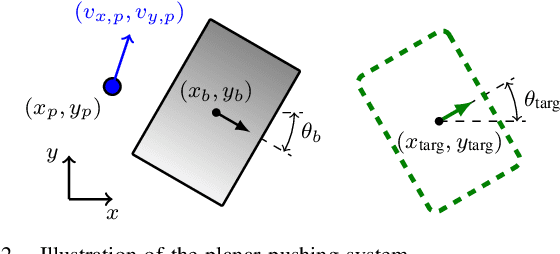

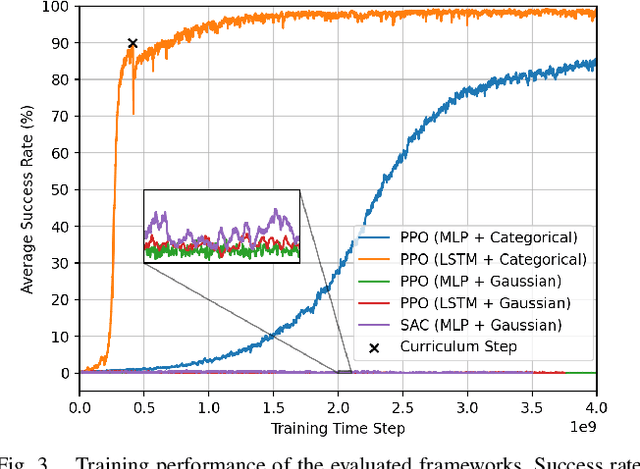

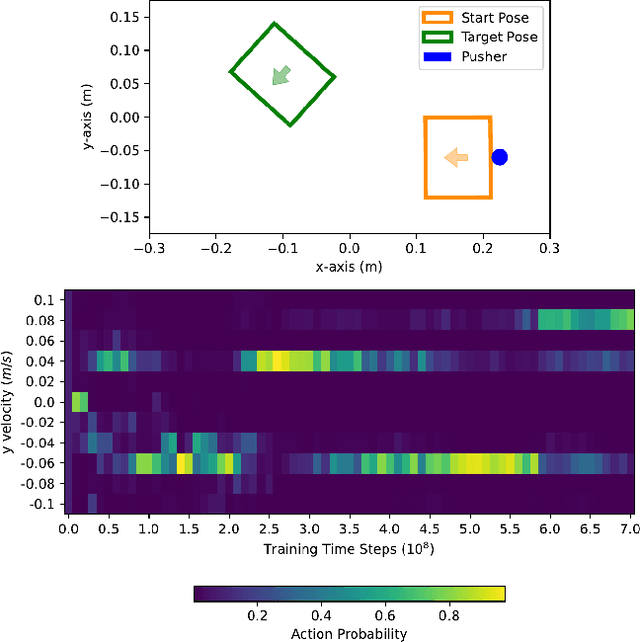

Nonprehensile Planar Manipulation through Reinforcement Learning with Multimodal Categorical Exploration

Aug 04, 2023

Abstract:Developing robot controllers capable of achieving dexterous nonprehensile manipulation, such as pushing an object on a table, is challenging. The underactuated and hybrid-dynamics nature of the problem, further complicated by the uncertainty resulting from the frictional interactions, requires sophisticated control behaviors. Reinforcement Learning (RL) is a powerful framework for developing such robot controllers. However, previous RL literature addressing the nonprehensile pushing task achieves low accuracy, non-smooth trajectories, and only simple motions, i.e. without rotation of the manipulated object. We conjecture that previously used unimodal exploration strategies fail to capture the inherent hybrid-dynamics of the task, arising from the different possible contact interaction modes between the robot and the object, such as sticking, sliding, and separation. In this work, we propose a multimodal exploration approach through categorical distributions, which enables us to train planar pushing RL policies for arbitrary starting and target object poses, i.e. positions and orientations, and with improved accuracy. We show that the learned policies are robust to external disturbances and observation noise, and scale to tasks with multiple pushers. Furthermore, we validate the transferability of the learned policies, trained entirely in simulation, to a physical robot hardware using the KUKA iiwa robot arm. See our supplemental video: https://youtu.be/vTdva1mgrk4.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge