Andrew Stratton

How Human Motion Prediction Quality Shapes Social Robot Navigation Performance in Constrained Spaces

Jan 14, 2026

Abstract:Motivated by the vision of integrating mobile robots closer to humans in warehouses, hospitals, manufacturing plants, and the home, we focus on robot navigation in dynamic and spatially constrained environments. Ensuring human safety, comfort, and efficiency in such settings requires that robots are endowed with a model of how humans move around them. Human motion prediction around robots is especially challenging due to the stochasticity of human behavior, differences in user preferences, and data scarcity. In this work, we perform a methodical investigation of the effects of human motion prediction quality on robot navigation performance, as well as human productivity and impressions. We design a scenario involving robot navigation among two human subjects in a constrained workspace and instantiate it in a user study ($N=80$) involving two different robot platforms, conducted across two sites from different world regions. Key findings include evidence that: 1) the widely adopted average displacement error is not a reliable predictor of robot navigation performance and human impressions; 2) the common assumption of human cooperation breaks down in constrained environments, with users often not reciprocating robot cooperation, and causing performance degradations; 3) more efficient robot navigation often comes at the expense of human efficiency and comfort.

Characterizing the Complexity of Social Robot Navigation Scenarios

May 18, 2024

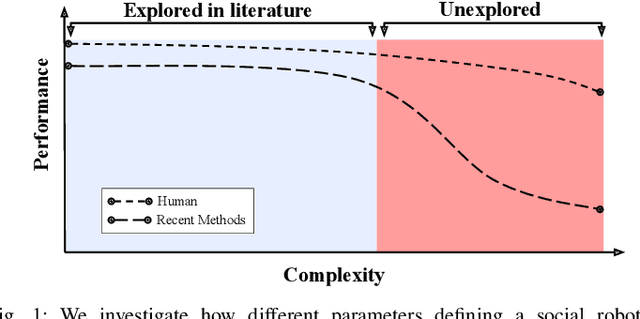

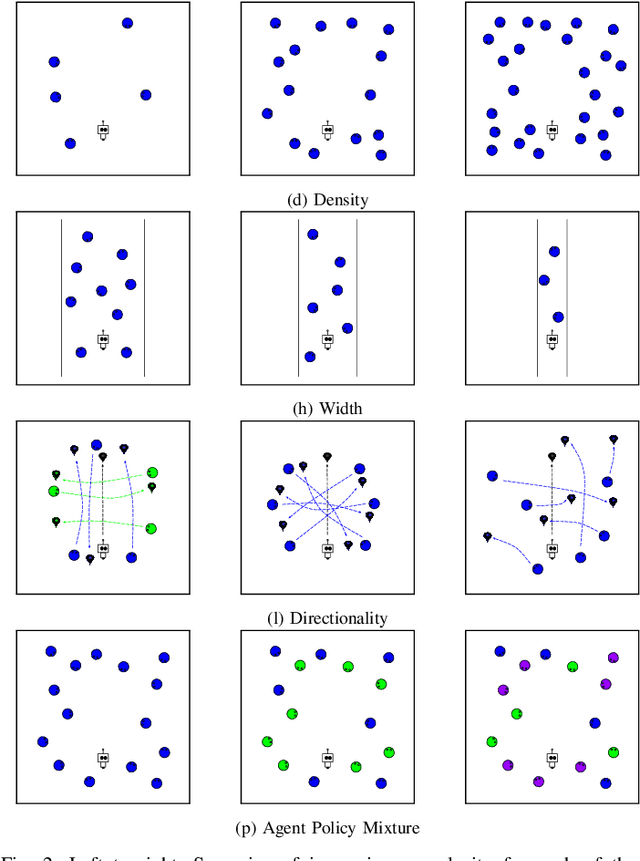

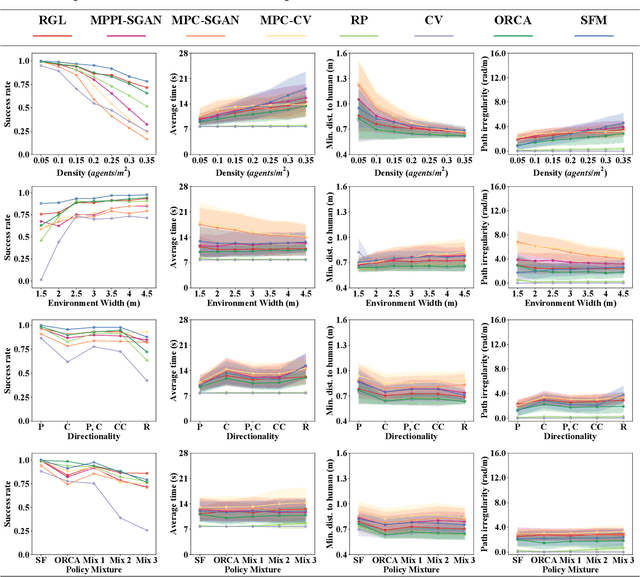

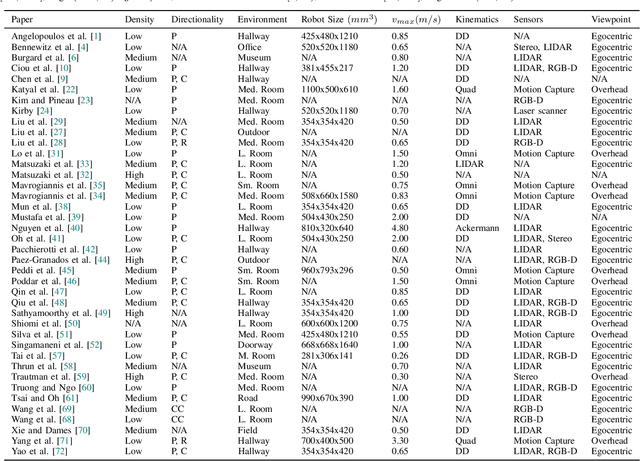

Abstract:Social robot navigation algorithms are often demonstrated in overly simplified scenarios, prohibiting the extraction of practical insights about their relevance to real-world domains. Our key insight is that an understanding of the inherent complexity of a social robot navigation scenario could help characterize the limitations of existing navigation algorithms and provide actionable directions for improvement. Through an exploration of recent literature, we identify a series of factors contributing to the complexity of a scenario, disambiguating between contextual and robot-related ones. We then conduct a simulation study investigating how manipulations of contextual factors impact the performance of a variety of navigation algorithms. We find that dense and narrow environments correlate most strongly with performance drops, while the heterogeneity of agent policies and directionality of interactions have a less pronounced effect. This motivates a shift towards developing and testing algorithms under higher-complexity settings.

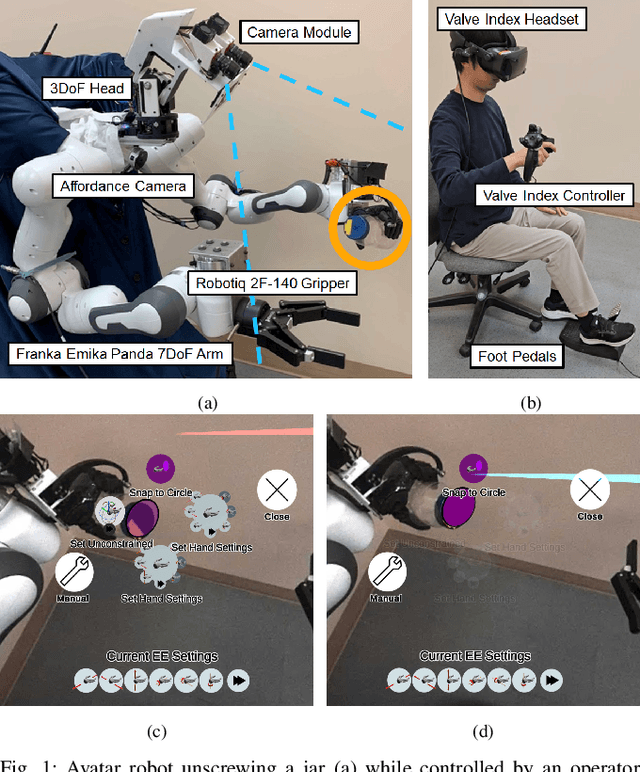

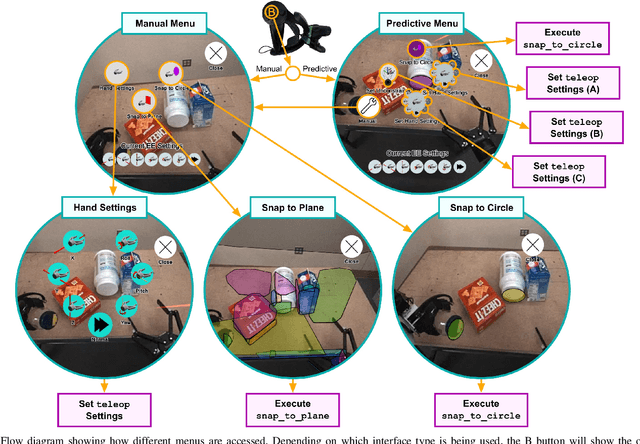

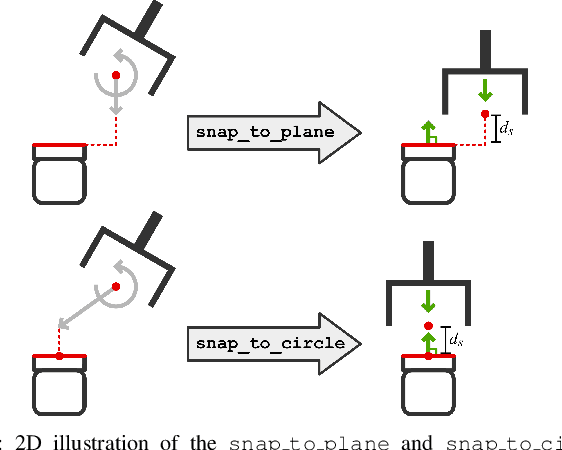

Integrating Open-World Shared Control in Immersive Avatars

Jan 05, 2024

Abstract:Teleoperated avatar robots allow people to transport their manipulation skills to environments that may be difficult or dangerous to work in. Current systems are able to give operators direct control of many components of the robot to immerse them in the remote environment, but operators still struggle to complete tasks as competently as they could in person. We present a framework for incorporating open-world shared control into avatar robots to combine the benefits of direct and shared control. This framework preserves the fluency of our avatar interface by minimizing obstructions to the operator's view and using the same interface for direct, shared, and fully autonomous control. In a human subjects study (N=19), we find that operators using this framework complete a range of tasks significantly more quickly and reliably than those who do not.
