Patrick Lancaster

DexterityGen: Foundation Controller for Unprecedented Dexterity

Feb 06, 2025

Abstract:Teaching robots dexterous manipulation skills, such as tool use, presents a significant challenge. Current approaches can be broadly categorized into two strategies: human teleoperation (for imitation learning) and sim-to-real reinforcement learning. The first approach is difficult because it is hard for humans to produce safe and dexterous motions on a different embodiment without touch feedback. The second, RL-based approach struggles with the domain gap and involves highly task-specific reward engineering on complex tasks. Our key insight is that RL is effective at learning low-level motion primitives, while humans excel at providing coarse motion commands for complex, long-horizon tasks. Therefore, the optimal solution might be a combination of both approaches. In this paper, we introduce DexterityGen (DexGen), which uses RL to pretrain large-scale dexterous motion primitives, such as in-hand rotation or translation. We then leverage this learned dataset to train a dexterous foundational controller. In the real world, we use human teleoperation as a prompt to the controller to produce highly dexterous behavior. We evaluate the effectiveness of DexGen in both simulation and the real world, demonstrating that it is a general-purpose controller that can realize input dexterous manipulation commands and that it improves stability by 10-100x, measured as the duration of holding objects, across diverse tasks. Notably, with DexGen we demonstrate unprecedented dexterous skills, including diverse object reorientation and dexterous use of tools such as a pen, syringe, and screwdriver, for the first time.

Sparsh: Self-supervised touch representations for vision-based tactile sensing

Oct 31, 2024

Abstract:In this work, we introduce general purpose touch representations for the increasingly accessible class of vision-based tactile sensors. Such sensors have led to many recent advances in robot manipulation as they markedly complement vision, yet solutions today often rely on task- and sensor-specific handcrafted perception models. Collecting real data at scale with task-centric ground truth labels, like contact forces and slip, is a challenge further compounded by sensors of various form factors that differ in aspects like lighting and gel markings. To tackle this, we turn to self-supervised learning (SSL), which has demonstrated remarkable performance in computer vision. We present Sparsh, a family of SSL models that can support various vision-based tactile sensors, alleviating the need for custom labels through pre-training on 460k+ tactile images with masking and self-distillation in pixel and latent spaces. We also build TacBench to facilitate standardized benchmarking across sensors and models, comprising six tasks ranging from comprehending tactile properties to enabling physical perception and manipulation planning. In evaluations, we find that SSL pre-training for touch representation outperforms task- and sensor-specific end-to-end training by 95.1% on average over TacBench, and Sparsh (DINO) and Sparsh (IJEPA) are the most competitive, indicating the merits of learning in latent space for tactile images. Project page: https://sparsh-ssl.github.io/

MoDem-V2: Visuo-Motor World Models for Real-World Robot Manipulation

Sep 25, 2023

Abstract:Robotic systems that aspire to operate in uninstrumented real-world environments must perceive the world directly via onboard sensing. Vision-based learning systems aim to eliminate the need for environment instrumentation by building an implicit understanding of the world based on raw pixels, but navigating the contact-rich high-dimensional search space from solely sparse visual reward signals significantly exacerbates the challenge of exploration. The applicability of such systems is thus typically restricted to simulated or heavily engineered environments since agent exploration in the real-world without the guidance of explicit state estimation and dense rewards can lead to unsafe behavior and safety faults that are catastrophic. In this study, we isolate the root causes behind these limitations to develop a system, called MoDem-V2, capable of learning contact-rich manipulation directly in the uninstrumented real world. Building on the latest algorithmic advancements in model-based reinforcement learning (MBRL), demo-bootstrapping, and effective exploration, MoDem-V2 can acquire contact-rich dexterous manipulation skills directly in the real world. We identify key ingredients for leveraging demonstrations in model learning while respecting real-world safety considerations -- exploration centering, agency handover, and actor-critic ensembles. We empirically demonstrate the contribution of these ingredients in four complex visuo-motor manipulation problems in both simulation and the real world. To the best of our knowledge, our work presents the first successful system for demonstration-augmented visual MBRL trained directly in the real world. Visit https://sites.google.com/view/modem-v2 for videos and more details.

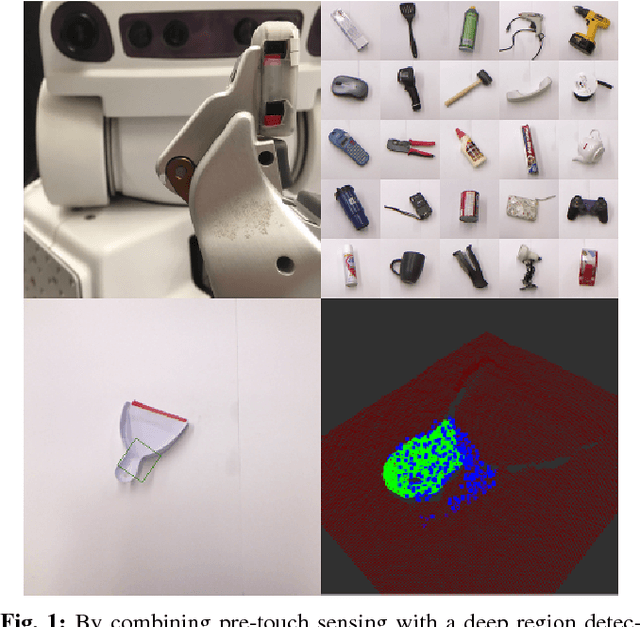

Improved Object Pose Estimation via Deep Pre-touch Sensing

Apr 09, 2022

Abstract:For certain manipulation tasks, object pose estimation from head-mounted cameras may not be sufficiently accurate. This is at least in part due to our inability to perfectly calibrate the coordinate frames of today's high degree of freedom robot arms that link the head to the end-effectors. We present a novel framework combining pre-touch sensing and deep learning to more accurately estimate pose in an efficient manner. The use of pre-touch sensing allows our method to localize the object directly with respect to the robot's end effector, thereby avoiding error caused by miscalibration of the arms. Instead of requiring the robot to scan the entire object with its pre-touch sensor, we use a deep neural network to detect object regions that contain distinctive geometric features. By focusing pre-touch sensing on these regions, the robot can more efficiently gather the information necessary to adjust its original pose estimate. Our region detection network was trained using a new dataset containing objects of widely varying geometries and has been labeled in a scalable fashion that is free from human bias. This dataset is applicable to any task that involves a pre-touch sensor gathering geometric information, and has been made publicly available. We evaluate our framework by having the robot re-estimate the pose of a number of objects of varying geometries. Compared to two simpler region proposal methods, we find that our deep neural network performs significantly better. In addition, we find that after a sequence of scans, objects can typically be localized to within 0.5 cm of their true position. We also observe that the original pose estimate can often be significantly improved after collecting a single quick scan.

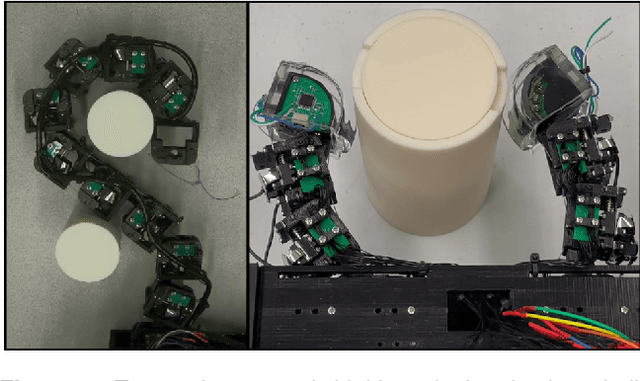

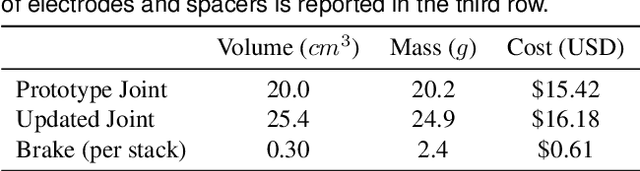

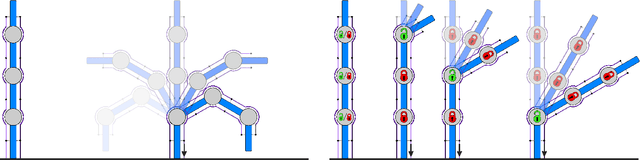

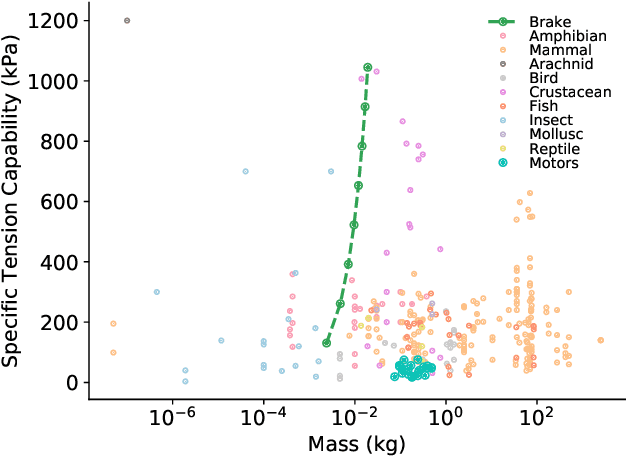

Electrostatic Brakes Enable Individual Joint Control of Underactuated, Highly Articulated Robots

Apr 05, 2022

Abstract:Highly articulated organisms serve as blueprints for incredibly dexterous mechanisms, but building similarly capable robotic counterparts has been hindered by the difficulties of developing electromechanical actuators with both the high strength and compactness of biological muscle. We develop a stackable electrostatic brake that has comparable specific tension and weight to that of muscles and integrate it into a robotic joint. Compared to electromechanical motors, our brake-equipped joint is four times lighter and one thousand times more power efficient while exerting similar holding torques. Our joint design enables a ten degree-of-freedom robot equipped with only one motor to manipulate multiple objects simultaneously. We also show that the use of brakes allows a two-fingered robot to perform in-hand re-positioning of an object 45% more quickly and with 53% lower positioning error than without brakes. Relative to fully actuated robots, our findings suggest that robots equipped with such electrostatic brakes will have lower weight, volume, and power consumption yet retain the ability to reach arbitrary joint configurations.

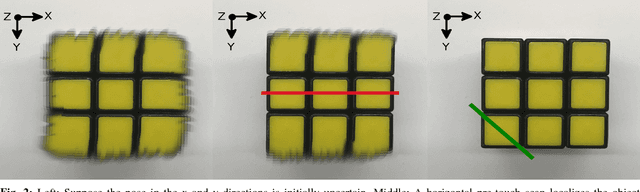

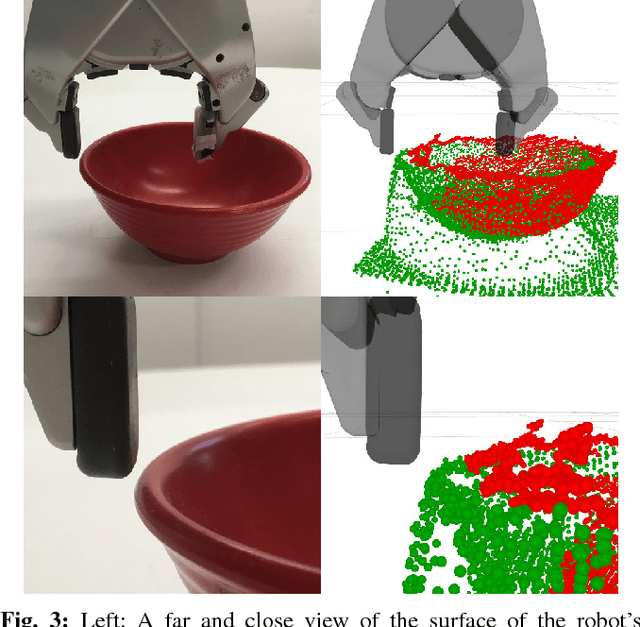

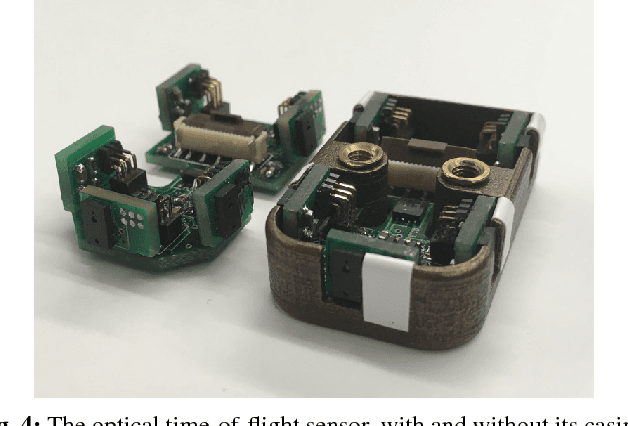

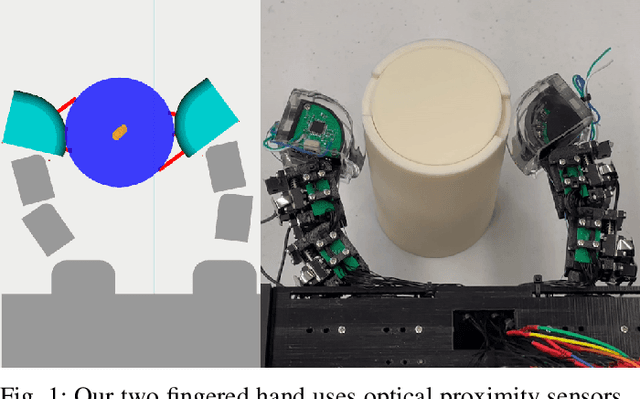

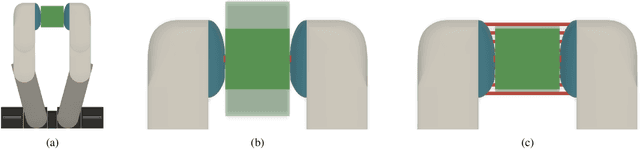

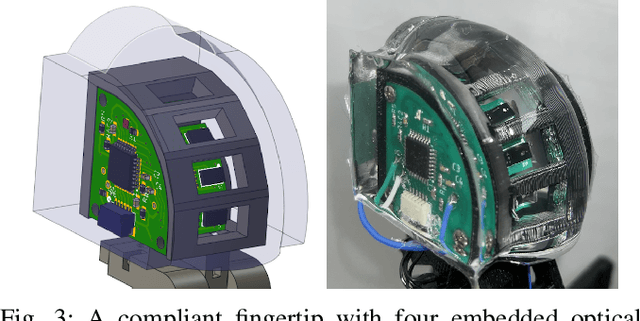

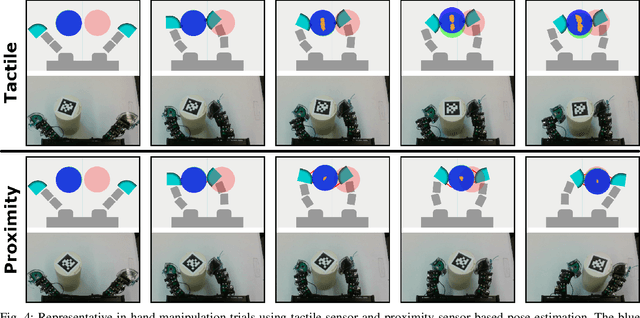

Optical Proximity Sensing for Pose Estimation During In-Hand Manipulation

Apr 05, 2022

Abstract:During in-hand manipulation, robots must be able to continuously estimate the pose of the object in order to generate appropriate control actions. The performance of algorithms for pose estimation hinges on the robot's sensors being able to detect discriminative geometric object features, but previous sensing modalities are unable to make such measurements robustly. The robot's fingers can occlude the view of environment- or robot-mounted image sensors, and tactile sensors can only measure at the local areas of contact. Motivated by fingertip-embedded proximity sensors' robustness to occlusion and ability to measure beyond the local areas of contact, we present the first evaluation of proximity sensor based pose estimation for in-hand manipulation. We develop a novel two-fingered hand with fingertip-embedded optical time-of-flight proximity sensors as a testbed for pose estimation during planar in-hand manipulation. Here, the in-hand manipulation task consists of the robot moving a cylindrical object from one end of its workspace to the other. We demonstrate, with statistical significance, that proximity-sensor based pose estimation via particle filtering during in-hand manipulation: a) exhibits 50% lower average pose error than a tactile-sensor based baseline; b) empowers a model predictive controller to achieve 30% lower final positioning error compared to when using tactile-sensor based pose estimates.
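The abstract above names particle filtering over proximity readings as its estimator. As a rough illustration of that general technique only, and not the authors' implementation, here is a minimal planar sketch. The cylinder radius, the three-sensor layout, the noise levels, and every function name are hypothetical choices made for the example.

```python
import math
import random

# All constants below are assumptions for illustration, not values from the paper.
RADIUS = 0.02                                        # cylinder radius in metres
SENSORS = [(-0.05, 0.0), (0.05, 0.0), (0.0, 0.06)]   # hypothetical sensor positions


def expected_range(pose, sensor):
    """Distance from a sensor to the cylinder surface for a candidate (x, y) pose."""
    return math.hypot(pose[0] - sensor[0], pose[1] - sensor[1]) - RADIUS


def predict(particles, motion, noise_std, rng):
    """Shift each particle by the commanded motion plus Gaussian process noise."""
    return [
        (x + motion[0] + rng.gauss(0.0, noise_std),
         y + motion[1] + rng.gauss(0.0, noise_std))
        for x, y in particles
    ]


def update(particles, readings, meas_std):
    """Return normalized weights from a Gaussian likelihood of the range readings."""
    log_w = []
    for p in particles:
        total = 0.0
        for sensor, z in zip(SENSORS, readings):
            err = z - expected_range(p, sensor)
            total += -0.5 * (err / meas_std) ** 2
        log_w.append(total)
    peak = max(log_w)                       # subtract the max for numerical stability
    weights = [math.exp(w - peak) for w in log_w]
    norm = sum(weights)
    return [w / norm for w in weights]


def resample(particles, weights, rng):
    """Multinomial resampling: draw particles in proportion to their weights."""
    return rng.choices(particles, weights=weights, k=len(particles))


def estimate(particles):
    """Mean of the particle set, used as the pose estimate."""
    n = len(particles)
    return (sum(p[0] for p in particles) / n, sum(p[1] for p in particles) / n)


def run_filter(true_start, motion, steps, n_particles=500, seed=0):
    """Simulate a cylinder moving by `motion` each step and track it."""
    rng = random.Random(seed)
    particles = [(rng.uniform(-0.03, 0.03), rng.uniform(-0.03, 0.03))
                 for _ in range(n_particles)]
    true_pose = true_start
    for _ in range(steps):
        true_pose = (true_pose[0] + motion[0], true_pose[1] + motion[1])
        # Simulated noisy range readings from each sensor.
        readings = [expected_range(true_pose, s) + rng.gauss(0.0, 0.001)
                    for s in SENSORS]
        particles = predict(particles, motion, 0.002, rng)
        weights = update(particles, readings, 0.002)
        particles = resample(particles, weights, rng)
    return true_pose, estimate(particles)
```

Calling `run_filter((-0.02, 0.0), (0.004, 0.0), 10)` simulates the cylinder crossing a small workspace and returns the simulated true pose together with the filter's estimate. A third, non-collinear sensor is included here only because two collinear range sensors leave a mirror ambiguity in this simplified model.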

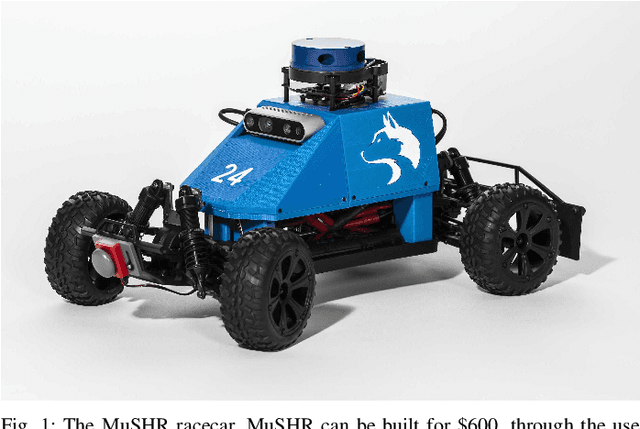

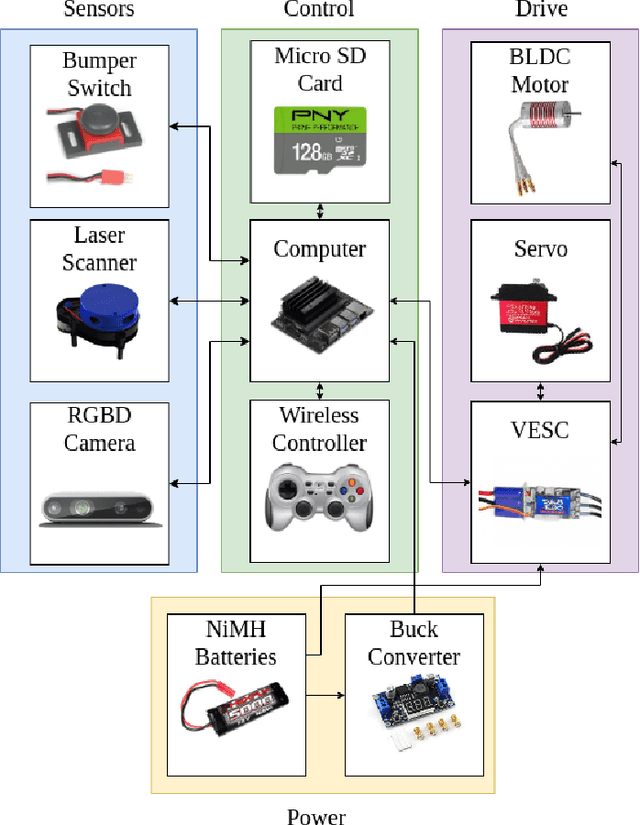

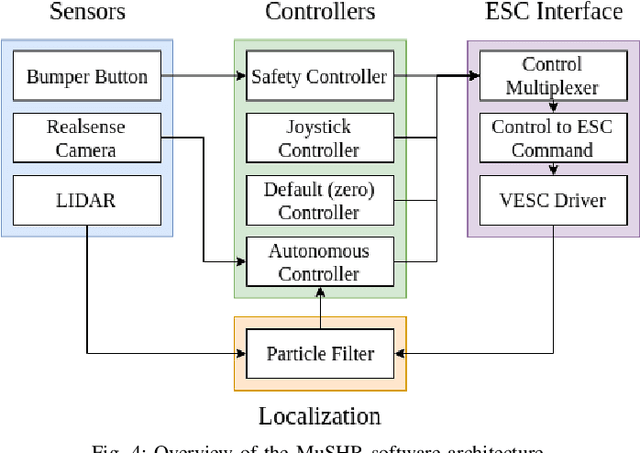

MuSHR: A Low-Cost, Open-Source Robotic Racecar for Education and Research

Aug 22, 2019

Abstract:We present MuSHR, the Multi-agent System for non-Holonomic Racing. MuSHR is a low-cost, open-source robotic racecar platform for education and research, developed by the Personal Robotics Lab in the Paul G. Allen School of Computer Science & Engineering at the University of Washington. MuSHR aspires to contribute towards democratizing the field of robotics as a low-cost platform that can be built and deployed by following detailed, open documentation and do-it-yourself tutorials. A set of demos and lab assignments developed for the Mobile Robots course at the University of Washington provide guided, hands-on experience with the platform, and milestones for further development. MuSHR is a valuable asset for academic research labs, robotics instructors, and robotics enthusiasts.
