Implicit Communication in Human-Robot Collaborative Transport


Feb 05, 2025

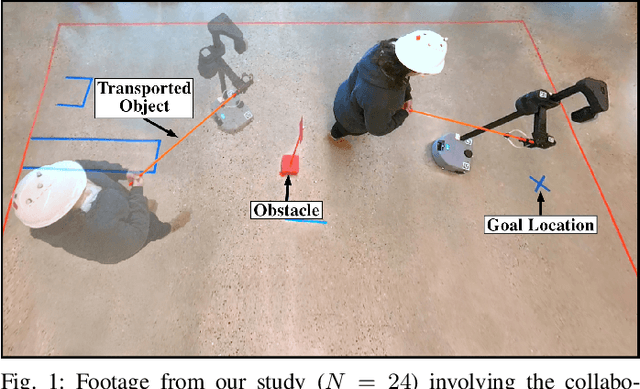

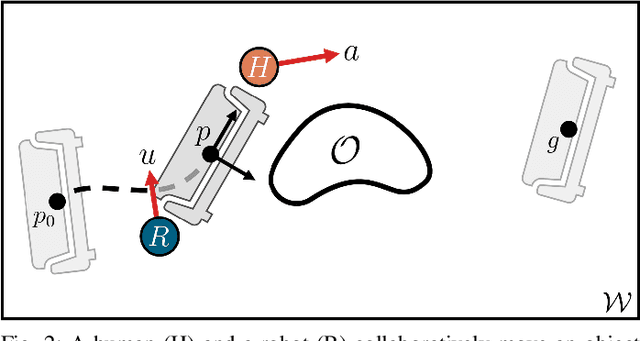

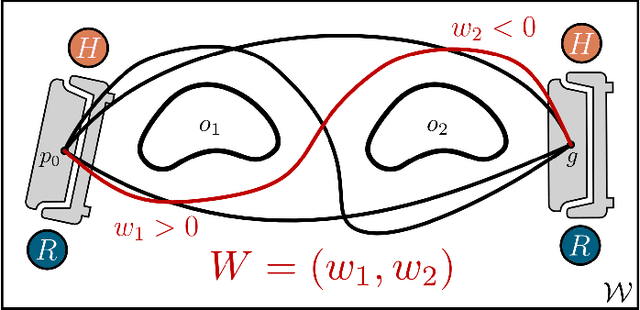

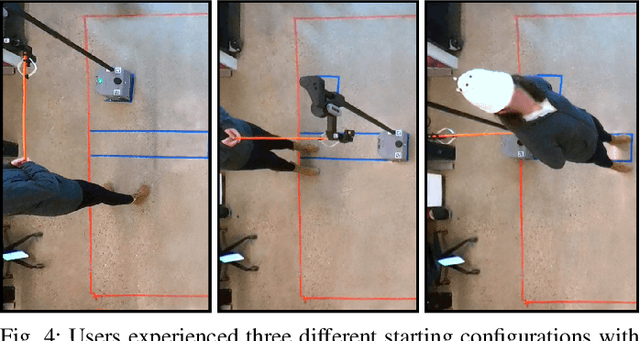

We focus on human-robot collaborative transport, in which a robot and a user collaboratively move an object to a goal pose. In the absence of explicit communication, this problem is challenging because it demands tight implicit coordination between two heterogeneous agents with very different sensing, actuation, and reasoning capabilities. Our key insight is that the two agents can coordinate fluently by encoding subtle, communicative signals into actions that affect the state of the transported object. To this end, we design an inference mechanism that probabilistically maps observations of joint actions executed by the two agents to a set of joint strategies of workspace traversal. Based on this mechanism, we define a cost representing the human's uncertainty over the unfolding traversal strategy and introduce it into a model predictive controller that balances uncertainty minimization against efficiency maximization. We deploy our framework on a mobile manipulator (Hello Robot Stretch) and evaluate it in a within-subjects lab study (N=24). We show that our framework enables greater team performance and leads the robot to be perceived as a significantly more fluent and competent partner compared to baselines lacking a communicative mechanism.
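The pipeline described in the abstract (infer a belief over traversal strategies from observed actions, then trade off the uncertainty of that belief against efficiency) can be illustrated with a small sketch. This is not the paper's implementation: the strategy names (`left`, `right`), the Gaussian-style `action_likelihood`, the use of Shannon entropy as the uncertainty cost, the `weight` parameter, and the one-step lookahead standing in for a full model predictive controller are all assumptions made for illustration.

```python
import math


def update_belief(prior, likelihoods):
    """Bayes rule over a discrete set of strategies (dicts keyed by strategy)."""
    unnormalized = {s: prior[s] * likelihoods[s] for s in prior}
    total = sum(unnormalized.values())
    return {s: v / total for s, v in unnormalized.items()}


def entropy(belief):
    """Shannon entropy (in nats) of a discrete belief; assumed uncertainty cost."""
    return -sum(p * math.log(p) for p in belief.values() if p > 0.0)


def action_likelihood(action, nominal, beta=4.0):
    """Hypothetical likelihood: actions near a strategy's nominal action are more probable."""
    dx = action[0] - nominal[0]
    dy = action[1] - nominal[1]
    return math.exp(-beta * (dx * dx + dy * dy))


def choose_action(prior, candidates, nominal_actions, goal_dir, weight=1.0):
    """One-step lookahead: minimize weight * entropy(posterior) - progress toward the goal."""
    best, best_cost = None, math.inf
    for action in candidates:
        lik = {s: action_likelihood(action, nominal_actions[s]) for s in prior}
        posterior = update_belief(prior, lik)
        # Efficiency term: negative projection of the action on the goal direction.
        efficiency_cost = -(action[0] * goal_dir[0] + action[1] * goal_dir[1])
        cost = weight * entropy(posterior) + efficiency_cost
        if cost < best_cost:
            best, best_cost = action, cost
    return best
```

With a uniform prior over two strategies, setting `weight=0` makes the sketch pick the action that heads straight for the goal, while a positive `weight` favors an action that commits visibly to one strategy, which is the kind of communicative behavior the abstract describes.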
