Hebert Azevedo-Sa

Using Trust for Heterogeneous Human-Robot Team Task Allocation

Oct 08, 2021

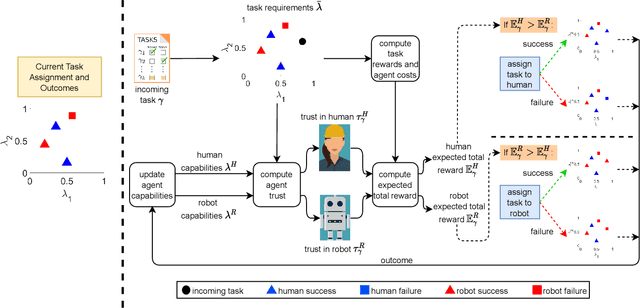

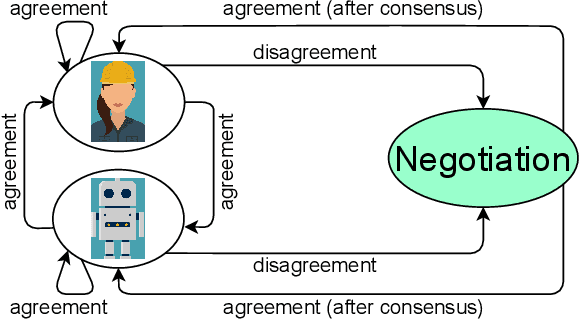

Abstract: Human-robot teams can perform better across various tasks than human-only and robot-only teams. However, such improvements cannot be realized without proper task allocation. Trust is an important factor in teaming relationships and can be used in the task allocation strategy. Despite its importance, most existing task allocation strategies do not incorporate trust. This paper reviews selected studies on trust and task allocation. We also summarize and discuss how a bi-directional trust model can be used for a task allocation strategy. The bi-directional trust model represents both task requirements and agents in terms of capabilities, and can be used to predict trust for both existing and new tasks. Our task allocation approach uses the predicted trust in each agent and the expected total reward to assign tasks. Finally, we present some directions for future work, including incorporating the human's trust and the human's capacity into task allocation, and adding a negotiation phase for resolving task disagreements.
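To illustrate how predicted trust and expected reward can jointly drive assignment, the following is a minimal sketch, not the authors' algorithm. It assumes each agent's capability beliefs are uniform intervals on [0, 1], that trust is the probability of meeting every requirement (capability dimensions treated as independent), and that each task goes greedily to the agent with the highest trust times reward. The agent names, task names, reward values, and the functions `prob_meets`, `predicted_trust`, and `allocate` are all hypothetical.

```python
from typing import Dict, List, Optional, Tuple

# Hypothetical representation: a capability belief is the (lower, upper)
# interval of a uniform distribution on [0, 1].
Belief = Tuple[float, float]


def prob_meets(belief: Belief, requirement: float) -> float:
    """P(capability >= requirement) under a uniform belief."""
    lower, upper = belief
    if requirement <= lower:
        return 1.0
    if requirement >= upper:
        return 0.0
    return (upper - requirement) / (upper - lower)


def predicted_trust(beliefs: List[Belief], requirements: List[float]) -> float:
    """Product of per-dimension probabilities (independence assumed)."""
    trust = 1.0
    for belief, requirement in zip(beliefs, requirements):
        trust *= prob_meets(belief, requirement)
    return trust


def allocate(agents: Dict[str, List[Belief]],
             tasks: Dict[str, List[float]],
             rewards: Dict[Tuple[str, str], float]) -> Tuple[Dict[str, str], float]:
    """Greedily give each task to the agent with the highest expected reward.

    Expected reward of (agent, task) = predicted trust * reward on success.
    Returns the assignment and its expected total reward.
    Ties go to the agent listed first; agent capacity is ignored.
    """
    if not agents:
        raise ValueError("at least one agent is required")
    assignment: Dict[str, str] = {}
    total = 0.0
    for task, requirements in tasks.items():
        best_agent: Optional[str] = None
        best_value = float("-inf")
        for agent, beliefs in agents.items():
            value = predicted_trust(beliefs, requirements) * rewards[(agent, task)]
            if value > best_value:
                best_agent, best_value = agent, value
        assert best_agent is not None
        assignment[task] = best_agent
        total += best_value
    return assignment, total


agents = {"human": [(0.5, 1.0), (0.5, 1.0)], "robot": [(0.75, 1.0), (0.0, 0.5)]}
tasks = {"inspect": [0.5, 0.25], "lift": [0.75, 0.75]}
rewards = {("human", "inspect"): 1.0, ("robot", "inspect"): 3.0,
           ("human", "lift"): 2.0, ("robot", "lift"): 2.0}
print(allocate(agents, tasks, rewards))
# ({'inspect': 'robot', 'lift': 'human'}, 2.0)
```

Here the robot gets "inspect" despite lower trust (0.5 vs. 1.0) because its reward is higher, while "lift" goes to the human because the robot's belief rules out meeting the second requirement. A greedy per-task rule is only one simple choice; an allocation that respects agent capacity would need a constrained optimization instead.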

A Unified Bi-directional Model for Natural and Artificial Trust in Human-Robot Collaboration

Jun 04, 2021

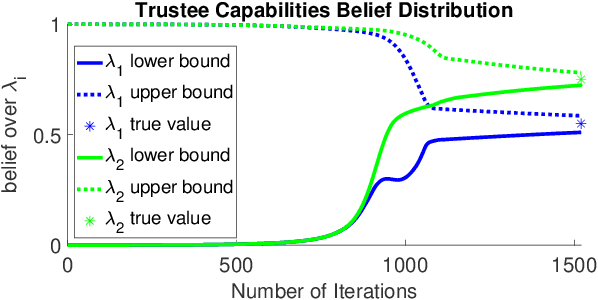

Abstract: We introduce a novel capabilities-based, bi-directional, multi-task trust model that can be used for trust prediction from either a human or a robotic trustor agent. Tasks are represented in terms of their capability requirements, while trustee agents are characterized by their individual capabilities. Trustee agents' capabilities are not deterministic; they are represented by belief distributions. For each task to be executed, a higher level of trust is assigned to trustee agents who have demonstrated that their capabilities exceed the task's requirements. We report results of an online experiment with 284 participants, revealing that our model outperforms existing models for multi-task trust prediction from a human trustor. We also present simulations of the model for determining trust from a robotic trustor. Our model is useful for control authority allocation applications that involve human-robot teams.
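The core idea (capability beliefs compared against task requirements) can be sketched as follows. This is a minimal illustration under stated assumptions, not the published model's trust function: it assumes each capability belief is uniform on an interval in [0, 1], that trust is the probability that every capability meets its requirement, and that capability dimensions are independent. Updating the beliefs from observed task outcomes is not shown. The class `CapabilityBelief`, the function `predict_trust`, and the example values are hypothetical; nothing in the sketch depends on whether the trustor is a human or a robot.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class CapabilityBelief:
    """Belief about one capability of a trustee agent.

    Assumption for this sketch: the belief is uniform on [lower, upper],
    with capabilities and requirements normalized to [0, 1].
    """
    lower: float
    upper: float

    def prob_meets(self, requirement: float) -> float:
        """Probability that the capability is at least `requirement`."""
        if requirement <= self.lower:
            return 1.0
        if requirement >= self.upper:
            return 0.0
        return (self.upper - requirement) / (self.upper - self.lower)


def predict_trust(beliefs: Sequence[CapabilityBelief],
                  requirements: Sequence[float]) -> float:
    """Trust in a trustee for one task, as a probability in [0, 1].

    Assumes capability dimensions are independent, so the per-dimension
    probabilities of meeting each requirement are multiplied.
    """
    if len(beliefs) != len(requirements):
        raise ValueError("one belief is needed per capability requirement")
    trust = 1.0
    for belief, requirement in zip(beliefs, requirements):
        trust *= belief.prob_meets(requirement)
    return trust


# Hypothetical trustee with two capability dimensions.
robot = [CapabilityBelief(0.5, 1.0), CapabilityBelief(0.25, 0.75)]
print(predict_trust(robot, [0.5, 0.5]))   # 1.0 * 0.5 -> 0.5
print(predict_trust(robot, [0.25, 0.9]))  # 0.9 exceeds the belief's upper bound -> 0.0
```

Because a new task is just another requirement vector, the same function yields a trust prediction for tasks the trustee has never performed, which is what makes a capabilities-based representation attractive for multi-task settings.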

Using Trust in Automation to Enhance Driver-Autonomous Vehicle Interaction and Improve Team Performance

Jun 03, 2021

Abstract: Trust in robots has been gathering attention from multiple directions, as it is especially relevant to theoretical descriptions of human-robot interaction. It is essential for reaching high acceptance and usage rates of robotic technologies in society, as well as for enabling effective human-robot teaming. Researchers have been trying to model the development of trust in robots to improve the overall rapport between humans and robots. Unfortunately, miscalibration of trust in automation is a common issue that jeopardizes the effectiveness of automation use. It happens when a user's trust level is not appropriate to the capabilities of the automation being used. Users can under-trust the automation, failing to use functionalities that the machine can perform correctly because of a lack of trust, or over-trust it, using the machine in situations where its capabilities are not adequate because of an excess of trust. The main objective of this work is to examine the development of drivers' trust in automated driving systems (ADSs). We aim to model how risk factors (e.g., false alarms and misses from the ADS) and the short-term interactions associated with these risk factors influence the dynamics of drivers' trust in the ADS. The driving context facilitates the instrumentation needed to measure trusting behaviors, such as drivers' eye movements and the usage time of automated features. Our findings indicate that drivers' trusting behaviors can be reliably characterized and that their trust levels can consequently be estimated. We expect that these techniques will enable the design of ADSs that adapt their behaviors in an attempt to adjust drivers' trust levels. This capability could help avoid under- and over-trust, which could harm drivers' safety or performance.
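The under-trust and over-trust definitions above amount to comparing a trust level against the automation's actual capability. A minimal sketch of that comparison, assuming both quantities can be placed on a common [0, 1] scale, is shown below; the scale, the function name `classify_calibration`, and the `tolerance` band are hypothetical. In the work itself trust is estimated from behaviors such as eye movements and usage time, which this sketch does not model.

```python
def classify_calibration(trust: float, capability: float,
                         tolerance: float = 0.1) -> str:
    """Compare a user's trust with the automation's actual capability.

    Both values are assumed to be normalized to [0, 1]; `tolerance` is a
    hypothetical band within which trust counts as calibrated.
    """
    for name, value in (("trust", trust), ("capability", capability)):
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"{name} must be in [0, 1], got {value}")
    gap = trust - capability
    if gap > tolerance:
        return "over-trust"   # relying on the ADS beyond what it can handle
    if gap < -tolerance:
        return "under-trust"  # not using features the ADS performs correctly
    return "calibrated"


print(classify_calibration(0.9, 0.5))   # over-trust
print(classify_calibration(0.2, 0.8))   # under-trust
print(classify_calibration(0.5, 0.55))  # calibrated
```

An ADS that adapts its behavior to adjust drivers' trust, as envisioned above, would in effect try to move this label back to "calibrated".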
