Daniel Szafir

Robotic VLA Benefits from Joint Learning with Motion Image Diffusion

Dec 19, 2025

Abstract:Vision-Language-Action (VLA) models have achieved remarkable progress in robotic manipulation by mapping multimodal observations and instructions directly to actions. However, they typically mimic expert trajectories without predictive motion reasoning, which limits their ability to reason about what actions to take. To address this limitation, we propose joint learning with motion image diffusion, a novel strategy that enhances VLA models with motion reasoning capabilities. Our method extends the VLA architecture with a dual-head design: while the action head predicts action chunks as in vanilla VLAs, an additional motion head, implemented as a Diffusion Transformer (DiT), predicts optical-flow-based motion images that capture future dynamics. The two heads are trained jointly, enabling the shared VLM backbone to learn representations that couple robot control with motion knowledge. This joint learning builds temporally coherent and physically grounded representations without modifying the inference pathway of standard VLAs, thereby maintaining test-time latency. Experiments in both simulation and real-world environments demonstrate that joint learning with motion image diffusion improves the success rate of pi-series VLAs to 97.5% on the LIBERO benchmark and 58.0% on the RoboTwin benchmark, yielding a 23% improvement in real-world performance and validating its effectiveness in enhancing the motion reasoning capability of large-scale VLAs.

Practice Makes Perfect: A Study of Digital Twin Technology for Assembly and Problem-solving using Lunar Surface Telerobotics

May 19, 2025

Abstract:Robotic systems that can traverse planetary or lunar surfaces to collect environmental data and perform physical manipulation tasks, such as assembling equipment or conducting mining operations, are envisioned to form the backbone of future human activities in space. However, the environmental conditions in which these robots, or "rovers," operate present challenges toward achieving fully autonomous solutions, meaning that rover missions will require some degree of human teleoperation or supervision for the foreseeable future. As a result, human operators require training to successfully direct rovers and avoid costly errors or mission failures, as well as the ability to recover from any issues that arise on the fly during mission activities. While analog environments, such as JPL's Mars Yard, can help with such training by simulating surface environments in the real world, access to such resources may be rare and expensive. As an alternative or supplement to such physical analogs, we explore the design and evaluation of a virtual reality digital twin system to train human teleoperation of robotic rovers with mechanical arms for space mission activities. We conducted an experiment with 24 human operators to investigate how our digital twin system can support human teleoperation of rovers in both pre-mission training and in real-time problem solving in a mock lunar mission in which users directed a physical rover in the context of deploying dipole radio antennas. We found that operators who first trained with the digital twin showed a 28% decrease in mission completion time, an 85% decrease in unrecoverable errors, as well as improved mental markers, including decreased cognitive load and increased situation awareness.

ReBot: Scaling Robot Learning with Real-to-Sim-to-Real Robotic Video Synthesis

Mar 15, 2025

Abstract:Vision-language-action (VLA) models present a promising paradigm by training policies directly on real robot datasets like Open X-Embodiment. However, the high cost of real-world data collection hinders further data scaling, thereby restricting the generalizability of VLAs. In this paper, we introduce ReBot, a novel real-to-sim-to-real approach for scaling real robot datasets and adapting VLA models to target domains, which is the last-mile deployment challenge in robot manipulation. Specifically, ReBot replays real-world robot trajectories in simulation to diversify manipulated objects (real-to-sim), and integrates the simulated movements with inpainted real-world background to synthesize physically realistic and temporally consistent robot videos (sim-to-real). Our approach has several advantages: 1) it enjoys the benefit of real data to minimize the sim-to-real gap; 2) it leverages the scalability of simulation; and 3) it can generalize a pretrained VLA to a target domain with fully automated data pipelines. Extensive experiments in both simulation and real-world environments show that ReBot significantly enhances the performance and robustness of VLAs. For example, in SimplerEnv with the WidowX robot, ReBot improved the in-domain performance of Octo by 7.2% and OpenVLA by 21.8%, and out-of-domain generalization by 19.9% and 9.4%, respectively. For real-world evaluation with a Franka robot, ReBot increased the success rates of Octo by 17% and OpenVLA by 20%. More information can be found at: https://yuffish.github.io/rebot/

BOSS: Benchmark for Observation Space Shift in Long-Horizon Task

Feb 21, 2025

Abstract:Robotics has long sought to develop visual-servoing robots capable of completing previously unseen long-horizon tasks. Hierarchical approaches offer a pathway for achieving this goal by executing skill combinations arranged by a task planner, with each visuomotor skill pre-trained using a specific imitation learning (IL) algorithm. However, even in simple long-horizon tasks like skill chaining, hierarchical approaches often struggle due to a problem we identify as Observation Space Shift (OSS), where the sequential execution of preceding skills causes shifts in the observation space, disrupting the performance of subsequent individually trained skill policies. To validate OSS and evaluate its impact on long-horizon tasks, we introduce BOSS (a Benchmark for Observation Space Shift). BOSS comprises three distinct challenges: "Single Predicate Shift", "Accumulated Predicate Shift", and "Skill Chaining", each designed to assess a different aspect of OSS's negative effect. We evaluated several recent popular IL algorithms on BOSS, including three Behavioral Cloning methods and the Visual Language Action model OpenVLA. Even on the simplest challenge, we observed average performance drops of 67%, 35%, 34%, and 54%, respectively, when comparing skill performance with and without OSS. Additionally, we investigate a potential solution to OSS that scales up the training data for each skill with a larger and more visually diverse set of demonstrations, with our results showing it is not sufficient to resolve OSS. The project page is: https://boss-benchmark.github.io/

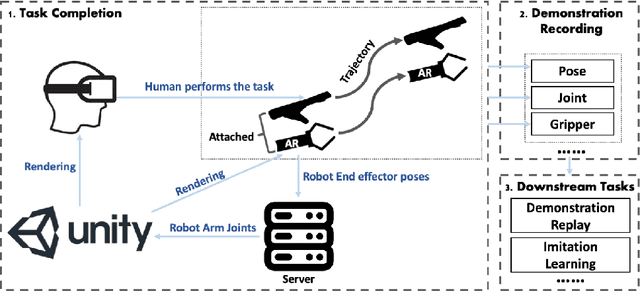

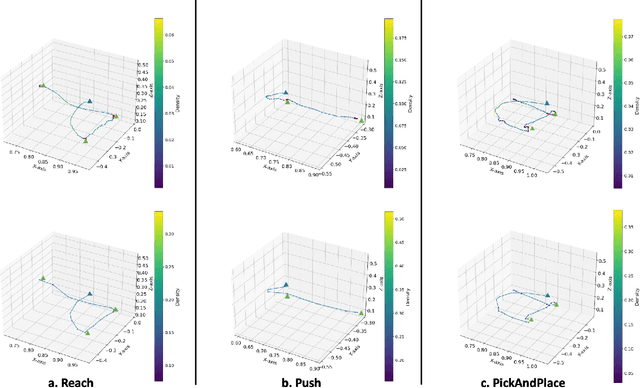

ARCADE: Scalable Demonstration Collection and Generation via Augmented Reality for Imitation Learning

Oct 21, 2024

Abstract:Robot Imitation Learning (IL) is a crucial technique in robot learning, where agents learn by mimicking human demonstrations. However, IL encounters scalability challenges stemming from both non-user-friendly demonstration collection methods and the extensive time required to amass a sufficient number of demonstrations for effective training. In response, we introduce the Augmented Reality for Collection and generAtion of DEmonstrations (ARCADE) framework, designed to scale up demonstration collection for robot manipulation tasks. Our framework combines two key capabilities: 1) it leverages AR to make demonstration collection as simple as users performing daily tasks using their hands, and 2) it enables the automatic generation of additional synthetic demonstrations from a single human-derived demonstration, significantly reducing user effort and time. We assess ARCADE's performance on a real Fetch robot across three robotics tasks: 3-Waypoints-Reach, Push, and Pick-And-Place. Using our framework, we were able to rapidly train a policy using vanilla Behavioral Cloning (BC), a classic IL algorithm, which excelled across these three tasks. We also deploy ARCADE on a real household task, Pouring-Water, achieving an 80% success rate.

Augmented Reality Demonstrations for Scalable Robot Imitation Learning

Mar 20, 2024

Abstract:Robot Imitation Learning (IL) is a widely used method for training robots to perform manipulation tasks that involve mimicking human demonstrations to acquire skills. However, its practicality has been limited due to its requirement that users be trained in operating real robot arms to provide demonstrations. This paper presents an innovative solution: an Augmented Reality (AR)-assisted framework for demonstration collection, empowering non-roboticist users to produce demonstrations for robot IL using devices like the HoloLens 2. Our framework facilitates scalable and diverse demonstration collection for real-world tasks. We validate our approach with experiments on three classical robotics tasks: reach, push, and pick-and-place. The real robot performs each task successfully while replaying demonstrations collected via AR.

Paper index: Designing an introductory HRI course (workshop at HRI 2024)

Mar 04, 2024

Abstract:Human-robot interaction is now an established discipline. Dozens of HRI courses exist at universities worldwide, and some institutions even offer degrees in HRI. However, although many students are being taught HRI, there is no agreed-upon curriculum for an introductory HRI course. In this workshop, we aimed to reach community consensus on what should be covered in such a course. Through interactive activities like panels, breakout discussions, and syllabus design, workshop participants explored the many topics and pedagogical approaches for teaching HRI. This collection of articles submitted to the workshop provides examples of HRI courses being offered worldwide.

TOBY: A Tool for Exploring Data in Academic Survey Papers

Jun 13, 2023

Abstract:This paper describes TOBY, a visualization tool that helps a user explore the contents of an academic survey paper. The visualization consists of four components: a hierarchical view of taxonomic data in the survey, a document similarity view in the space of taxonomic classes, a network view of citations, and a new paper recommendation tool. In this paper, we will discuss these features in the context of three separate deployments of the tool.

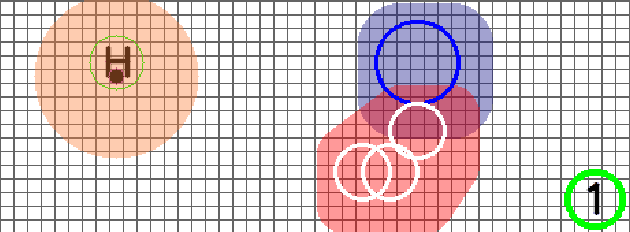

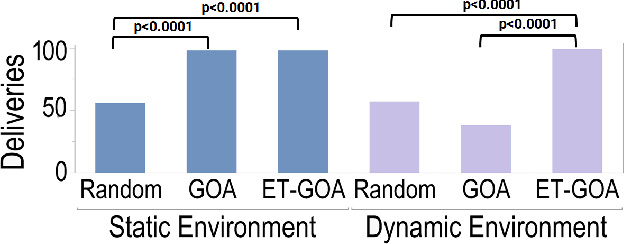

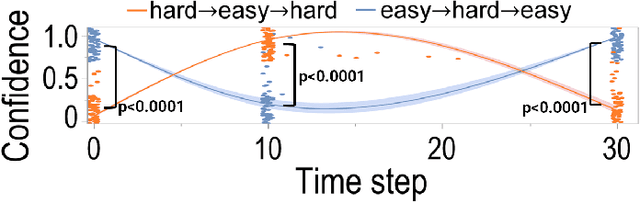

Dynamic Competency Self-Assessment for Autonomous Agents

Mar 03, 2023

Abstract:As autonomous robots are deployed in increasingly complex environments, platform degradation, environmental uncertainties, and deviations from validated operation conditions can make it difficult for human partners to understand robot capabilities and limitations. The ability for a robot to self-assess its competency in dynamic and uncertain environments will be a crucial next step in successful human-robot teaming. This work presents and evaluates an Event-Triggered Generalized Outcome Assessment (ET-GOA) algorithm for autonomous agents to dynamically assess task confidence during execution. The algorithm uses a fast online statistical test of the agent's observations and its model predictions to decide when competency assessment is needed. We provide experimental results using ET-GOA to generate competency reports during a simulated delivery task and suggest future research directions for self-assessing agents.

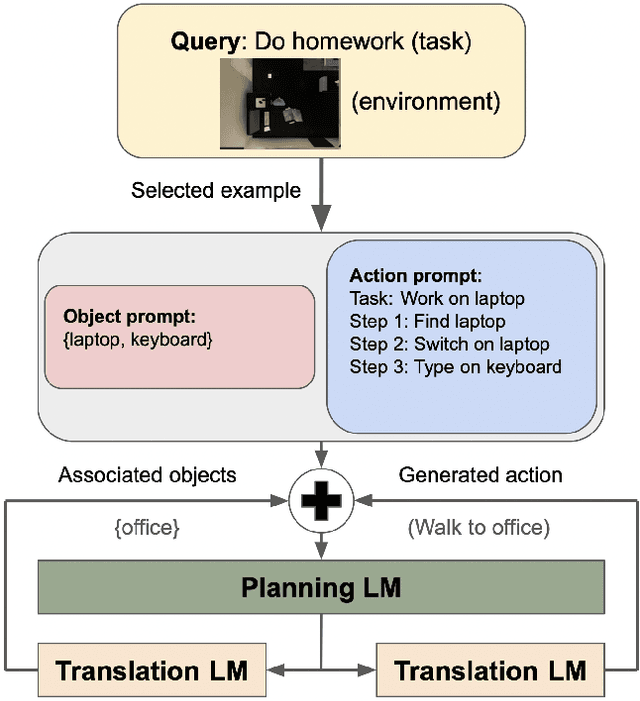

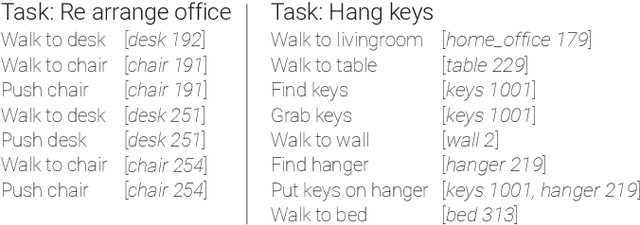

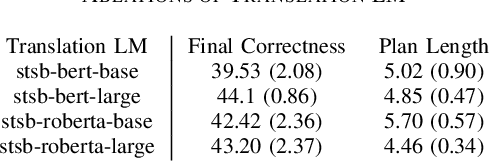

Generating Executable Action Plans with Environmentally-Aware Language Models

Oct 10, 2022

Abstract:Large Language Models (LLMs) trained using massive text datasets have recently shown promise in generating action plans for robotic agents from high level text queries. However, these models typically do not consider the robot's environment, resulting in generated plans that may not actually be executable due to ambiguities in the planned actions or environmental constraints. In this paper, we propose an approach to generate environmentally-aware action plans that can be directly mapped to executable agent actions. Our approach involves integrating environmental objects and object relations as additional inputs into LLM action plan generation to provide the system with an awareness of its surroundings, resulting in plans where each generated action is mapped to objects present in the scene. We also design a novel scoring function that, along with generating the action steps and associating them with objects, helps the system disambiguate among object instances and take into account their states. We evaluate our approach using the VirtualHome simulator and the ActivityPrograms knowledge base. Our results show that the action plans generated from our system outperform prior work in terms of their correctness and executability by 5.3% and 8.9% respectively.
