Nisar R. Ahmed

Rao-Blackwellized POMDP Planning

Sep 24, 2024

Abstract: Partially Observable Markov Decision Processes (POMDPs) provide a structured framework for decision-making under uncertainty, but their application requires efficient belief updates. Sequential Importance Resampling Particle Filters (SIRPF), also known as Bootstrap Particle Filters, are commonly used as belief updaters in large approximate POMDP solvers, but they face challenges such as particle deprivation and high computational costs as the system's state dimension grows. To address these issues, this study introduces Rao-Blackwellized POMDP (RB-POMDP) approximate solvers and outlines generic methods to apply Rao-Blackwellization in both belief updates and online planning. We compare the performance of SIRPF and Rao-Blackwellized Particle Filters (RBPF) in a simulated localization problem where an agent navigates toward a target in a GPS-denied environment using POMCPOW and RB-POMCPOW planners. Our results not only confirm that RBPFs maintain accurate belief approximations over time with fewer particles, but, more surprisingly, RBPFs combined with quadrature-based integration improve planning quality significantly compared to SIRPF-based planning under the same computational limits.
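
For readers unfamiliar with the baseline, the following is a minimal sketch of one bootstrap (SIR) particle filter belief update: propagate, weight, resample. It is not the paper's implementation. The 1-D random-walk model, the noise levels, and the names `bootstrap_pf_step`, `transition`, and `likelihood` are all assumptions made for illustration.

```python
import math
import random


def bootstrap_pf_step(particles, action, observation, transition, likelihood, rng):
    """One SIR update: propagate, weight by the observation, resample."""
    # 1. Propagate every particle through the stochastic transition model.
    predicted = [transition(s, action, rng) for s in particles]
    # 2. Weight each propagated particle by the observation likelihood.
    weights = [likelihood(observation, s) for s in predicted]
    if sum(weights) == 0.0:
        # Particle deprivation: no particle explains the observation.
        raise ValueError("all particle weights are zero")
    # 3. Resample with replacement in proportion to the weights.
    return rng.choices(predicted, weights=weights, k=len(predicted))


# Hypothetical 1-D toy model (an assumption, not taken from the paper).
def transition(state, action, rng):
    return state + action + rng.gauss(0.0, 0.1)


def likelihood(observation, state):
    # Unnormalized Gaussian likelihood with standard deviation 0.2.
    return math.exp(-0.5 * ((observation - state) / 0.2) ** 2)


rng = random.Random(0)
particles = [rng.uniform(-5.0, 5.0) for _ in range(500)]
true_state = 0.0
for _ in range(10):
    true_state += 0.5 + rng.gauss(0.0, 0.1)
    observation = true_state + rng.gauss(0.0, 0.2)
    particles = bootstrap_pf_step(
        particles, 0.5, observation, transition, likelihood, rng
    )

# Posterior mean estimate from the resampled (equally weighted) particles.
estimate = sum(particles) / len(particles)
```

The zero-weight check marks where particle deprivation shows up in practice. A Rao-Blackwellized filter would instead marginalize part of the state analytically and sample only the remainder, which this sketch does not attempt.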

"A Good Bot Always Knows Its Limitations": Assessing Autonomous System Decision-making Competencies through Factorized Machine Self-confidence

Jul 29, 2024

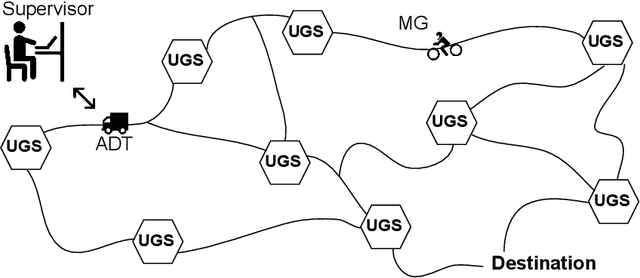

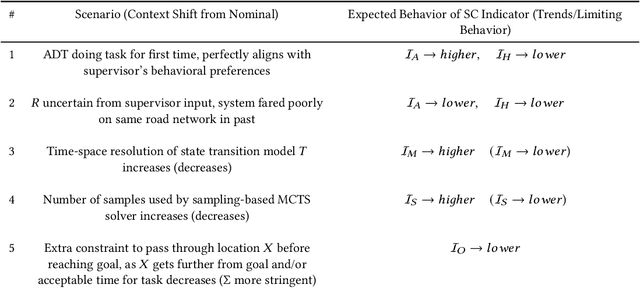

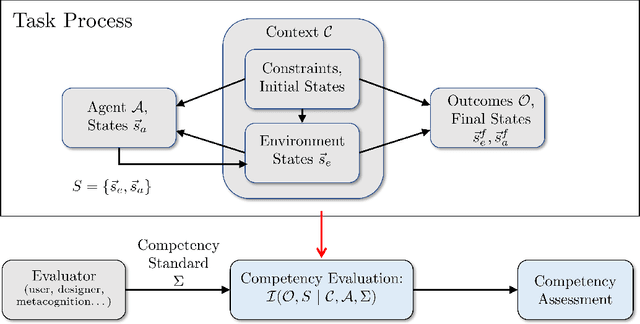

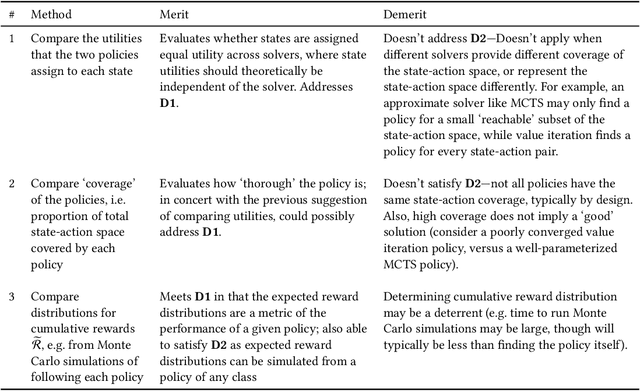

Abstract: How can intelligent machines assess their competencies in completing tasks? This question has come into focus for autonomous systems that algorithmically reason and make decisions under uncertainty. It is argued here that machine self-confidence -- a form of meta-reasoning based on self-assessments of an agent's knowledge about the state of the world and itself, as well as its ability to reason about and execute tasks -- leads to many eminently computable and useful competency indicators for such agents. This paper presents a culmination of work on this concept in the form of a computational framework called Factorized Machine Self-confidence (FaMSeC), which provides an engineering-focused holistic description of factors driving an algorithmic decision-making process, including outcome assessment, solver quality, model quality, alignment quality, and past experience. In FaMSeC, self-confidence indicators are derived from hierarchical 'problem-solving statistics' embedded within broad classes of probabilistic decision-making algorithms such as Markov decision processes. The problem-solving statistics are obtained by evaluating and grading probabilistic exceedance margins with respect to given competency standards, which are specified for each decision-making competency factor by the informee (e.g. a non-expert user or an expert system designer). This approach allows 'algorithmic goodness of fit' evaluations to be easily incorporated into the design of many kinds of autonomous agents via human-interpretable competency self-assessment reports. Detailed descriptions and running application examples for a Markov decision process agent show how two FaMSeC factors (outcome assessment and solver quality) can be practically computed and reported for a range of possible tasking contexts through novel use of meta-utility functions, behavior simulations, and surrogate prediction models.

Deep Modeling of Non-Gaussian Aleatoric Uncertainty

May 30, 2024

Abstract: Deep learning offers promising new ways to accurately model aleatoric uncertainty in robotic estimation systems, particularly when the uncertainty distributions do not conform to traditional assumptions of being fixed and Gaussian. In this study, we formulate and evaluate three fundamental deep learning approaches for conditional probability density modeling to quantify non-Gaussian aleatoric uncertainty: parametric, discretized, and generative modeling. We systematically compare the respective strengths and weaknesses of these three methods on simulated non-Gaussian densities as well as on real-world terrain-relative navigation data. Our results show that these deep learning methods can accurately capture complex uncertainty patterns, highlighting their potential for improving the reliability and robustness of estimation systems.

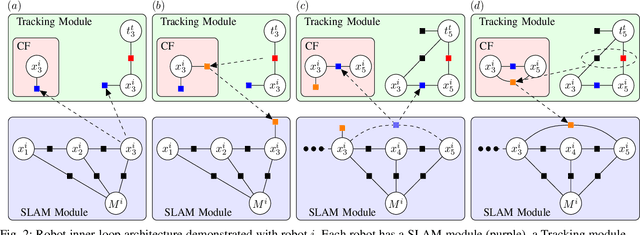

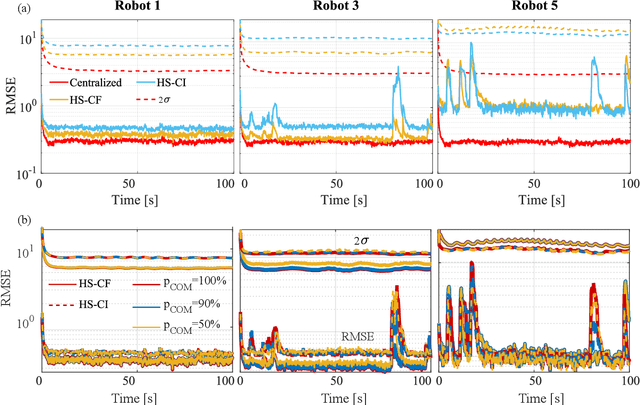

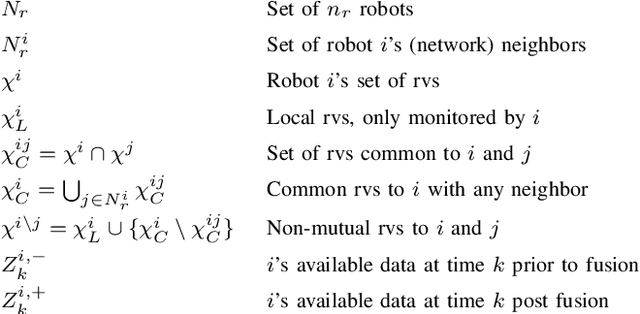

Scalable Factor Graph-Based Heterogeneous Bayesian DDF for Dynamic Systems

Jan 29, 2024

Abstract: Heterogeneous Bayesian decentralized data fusion captures the set of problems in which two robots must combine two probability density functions over non-equal, but overlapping sets of random variables. In the context of multi-robot dynamic systems, this enables robots to take a "divide and conquer" approach to reason and share data over complementary tasks instead of over the full joint state space. For example, in a target tracking application, this allows robots to track different subsets of targets and share data on only common targets. This paper presents a framework by which robots can each use a local factor graph to represent relevant partitions of a complex global joint probability distribution, thus allowing them to avoid reasoning over the entirety of a more complex model and saving communication as well as computation costs. From a theoretical point of view, this paper makes contributions by casting the heterogeneous decentralized fusion problem in terms of a factor graph, analyzing the challenges that arise due to dynamic filtering, and then developing a new conservative filtering algorithm that ensures statistical correctness. From a practical point of view, we show how this framework can be used to represent different multi-robot applications and then test it with simulations and hardware experiments to validate and demonstrate its statistical conservativeness, applicability, and robustness to real-world challenges.

Using Surprise Index for Competency Assessment in Autonomous Decision-Making

Dec 14, 2023

Abstract: This paper considers the problem of evaluating an autonomous system's competency in performing a task, particularly when working in dynamic and uncertain environments. The inherent opacity of machine learning models, often described from the user's perspective as a 'black box', poses a challenge. To overcome this, we propose using a measure called the surprise index, which leverages available measurement data to quantify whether the dynamic system performs as expected. We show that the surprise index can be computed in closed form for dynamic systems when the joint distribution of the observed evidence in a probabilistic model follows a multivariate Gaussian marginal distribution. We then apply it to a nonlinear spacecraft maneuver problem, where actions are chosen by a reinforcement learning agent, and show that it can indicate how well the trajectory follows the required orbit.
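
To make the closed-form claim concrete, here is a minimal sketch that is not taken from the paper. It assumes the surprise index is the total probability of all outcomes no more likely than the observed evidence. For an n-dimensional Gaussian, that region is everything at or beyond the evidence's Mahalanobis distance, so the index equals the chi-square survival function at the squared distance with n degrees of freedom. The function names are hypothetical.

```python
import math


def chi2_sf(x, dof):
    """Chi-square survival function P(X > x) for integer dof >= 1."""
    h = x / 2.0
    # Base cases of the regularized upper incomplete gamma function Q(a, h).
    if dof % 2 == 0:
        a, q = 1.0, math.exp(-h)
    else:
        a, q = 0.5, math.erfc(math.sqrt(h))
    # Recurrence: Q(a + 1, h) = Q(a, h) + h**a * exp(-h) / Gamma(a + 1).
    while a < dof / 2.0:
        q += h ** a * math.exp(-h) / math.gamma(a + 1.0)
        a += 1.0
    return q


def cholesky(a):
    """Lower-triangular Cholesky factor of a symmetric positive-definite matrix."""
    n = len(a)
    low = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = a[i][j] - sum(low[i][k] * low[j][k] for k in range(j))
            low[i][j] = math.sqrt(s) if i == j else s / low[j][j]
    return low


def surprise_index(evidence, mean, cov):
    """Probability mass of outcomes no more likely than `evidence` under N(mean, cov)."""
    n = len(mean)
    low = cholesky(cov)
    resid = [e - m for e, m in zip(evidence, mean)]
    # Forward substitution solves low @ z = resid; then z . z is the
    # squared Mahalanobis distance of the evidence from the mean.
    z = []
    for i in range(n):
        z.append((resid[i] - sum(low[i][j] * z[j] for j in range(i))) / low[i][i])
    d2 = sum(v * v for v in z)
    return chi2_sf(d2, n)


cov = [[2.0, 0.5], [0.5, 1.0]]
si_expected = surprise_index([0.1, 0.0], [0.0, 0.0], cov)    # near the mean
si_surprising = surprise_index([4.0, -3.0], [0.0, 0.0], cov)  # far from the mean
```

Under this definition, a value near 1 means the evidence is about as likely as anything the model predicts, and a value near 0 flags evidence the model considers very unlikely.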

Exploiting Structure for Optimal Multi-Agent Bayesian Decentralized Estimation

Jul 20, 2023

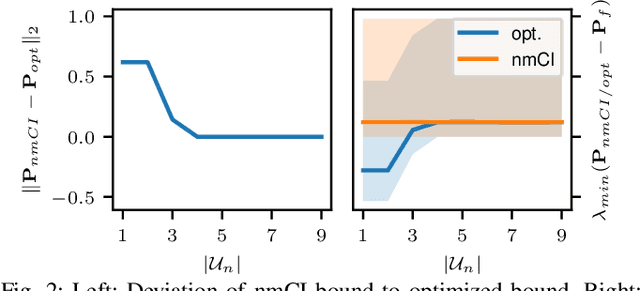

Abstract: A key challenge in Bayesian decentralized data fusion is the 'rumor propagation' or 'double counting' phenomenon, where previously sent data circulates back to its sender. It is often addressed by approximate methods like covariance intersection (CI), which takes a weighted average of the estimates to compute the bound. The problem is that this bound is not tight, i.e., the estimate is often over-conservative. In this paper, we show that by exploiting the probabilistic independence structure in multi-agent decentralized fusion problems, a tighter bound can be found using (i) an extension of the CI algorithm that uses multiple (non-monolithic) weighting factors instead of the single (monolithic) factor in the original CI and (ii) a general optimization scheme that is able to compute optimal bounds and fully exploit an arbitrary dependency structure. We compare our methods and show that on a simple problem, they converge to the same solution. We then test our new non-monolithic CI algorithm on a large-scale target tracking simulation and show that it achieves a tighter bound and a more accurate estimate compared to the original monolithic CI.
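
For reference, the standard (monolithic) CI rule mentioned above can be written as follows. This is the textbook form, not the paper's extension. The symbols $\hat{x}_a, P_a$ and $\hat{x}_b, P_b$ (the two estimates and their covariances) and the single weight $\omega$ are notation assumed here for illustration.

```latex
\begin{aligned}
P_{CI}^{-1} &= \omega\, P_a^{-1} + (1-\omega)\, P_b^{-1}, \qquad \omega \in [0,1],\\
P_{CI}^{-1}\,\hat{x}_{CI} &= \omega\, P_a^{-1}\hat{x}_a + (1-\omega)\, P_b^{-1}\hat{x}_b .
\end{aligned}
```

The weight $\omega$ is typically chosen to minimize a scalar measure of $P_{CI}$, such as its trace or determinant. The fused covariance is a conservative bound for any unknown cross-correlation between the two estimates, which is why it can be looser than necessary when part of the dependency structure is actually known.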

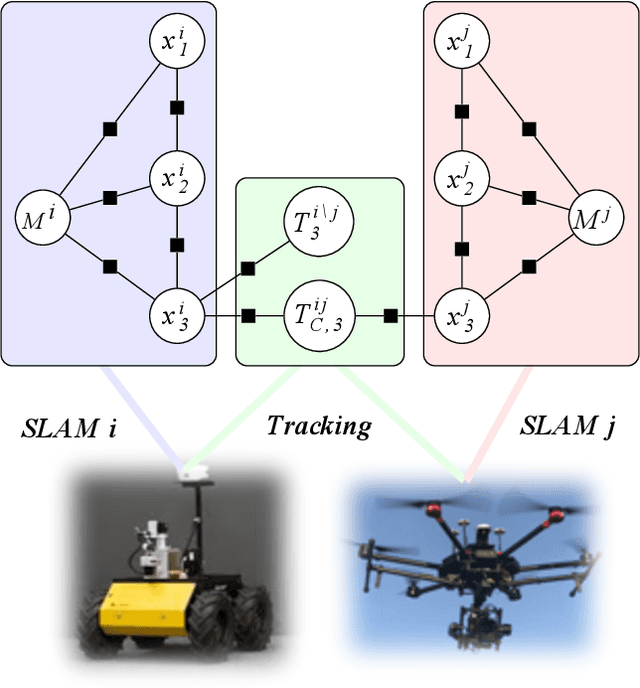

Towards Decentralized Heterogeneous Multi-Robot SLAM and Target Tracking

Jun 07, 2023

Abstract: In many robotics problems, there is a significant gain in collaborative information sharing between multiple robots, for exploration, search and rescue, tracking multiple targets, or mapping large environments. One of the key implicit assumptions when solving cooperative multi-robot problems is that all robots use the same (homogeneous) underlying algorithm. However, in practice, we want to allow collaboration between robots that possess different capabilities and therefore must rely on heterogeneous algorithms. We present a system architecture and the supporting theory to enable collaboration in a decentralized network of robots, where each robot relies on different estimation algorithms. To develop our approach, we focus on multi-robot simultaneous localization and mapping (SLAM) with multi-target tracking. Our theoretical framework builds on our idea of exploiting the conditional independence structure inherent to many robotics applications to separate each robot's local inference (estimation) tasks and fuse only relevant parts of their non-equal, but overlapping probability density functions (pdfs). We present a new decentralized graph-based approach to the multi-robot SLAM and tracking problem. We leverage factor graphs to split the problem into different parts for efficient data sharing between robots in the network while enabling robots to use different local sparse landmark/dense/metric-semantic SLAM algorithms.

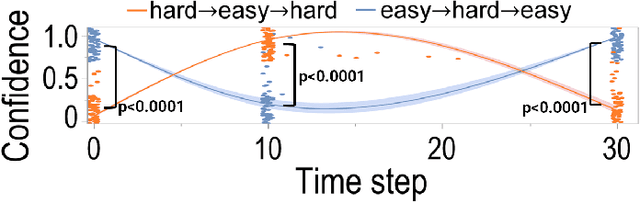

Dynamic Competency Self-Assessment for Autonomous Agents

Mar 03, 2023

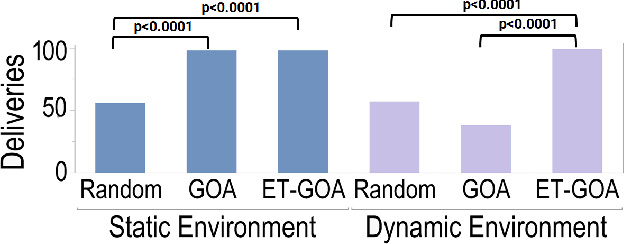

Abstract: As autonomous robots are deployed in increasingly complex environments, platform degradation, environmental uncertainties, and deviations from validated operation conditions can make it difficult for human partners to understand robot capabilities and limitations. The ability for a robot to self-assess its competency in dynamic and uncertain environments will be a crucial next step in successful human-robot teaming. This work presents and evaluates an Event-Triggered Generalized Outcome Assessment (ET-GOA) algorithm for autonomous agents to dynamically assess task confidence during execution. The algorithm uses a fast online statistical test of the agent's observations and its model predictions to decide when competency assessment is needed. We provide experimental results using ET-GOA to generate competency reports during a simulated delivery task and suggest future research directions for self-assessing agents.

Learning to Forecast Aleatoric and Epistemic Uncertainties over Long Horizon Trajectories

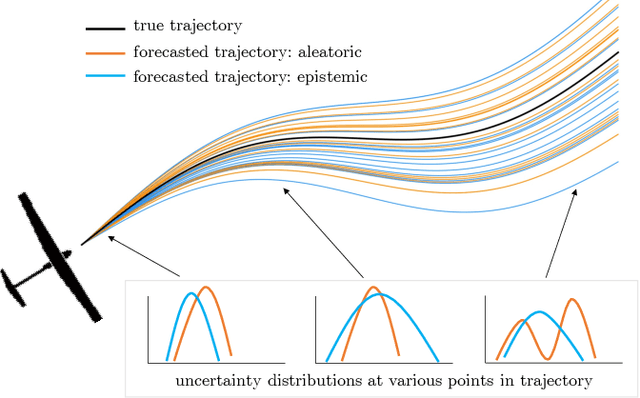

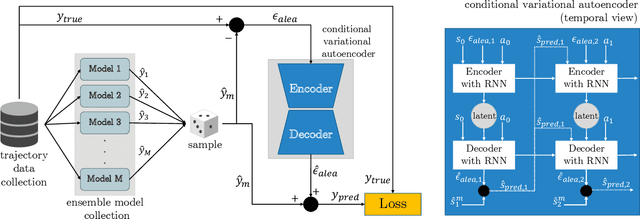

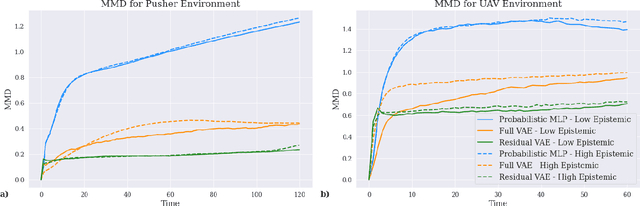

Feb 17, 2023

Abstract: Giving autonomous agents the ability to forecast their own outcomes and uncertainty will allow them to communicate their competencies and be used more safely. We accomplish this by using a learned world model of the agent system to forecast full agent trajectories over long time horizons. Real-world systems involve significant sources of both aleatoric and epistemic uncertainty that compound and interact over time in the trajectory forecasts. We develop a deep generative world model that quantifies aleatoric uncertainty while incorporating the effects of epistemic uncertainty during the learning process. We show on two reinforcement learning problems that our uncertainty model produces calibrated outcome uncertainty estimates over the full trajectory horizon.

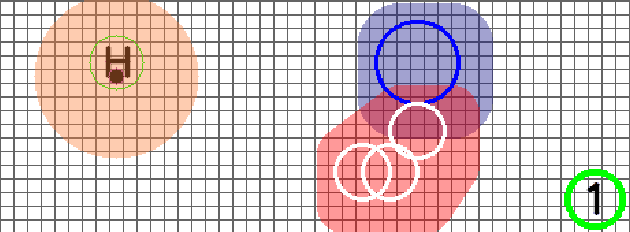

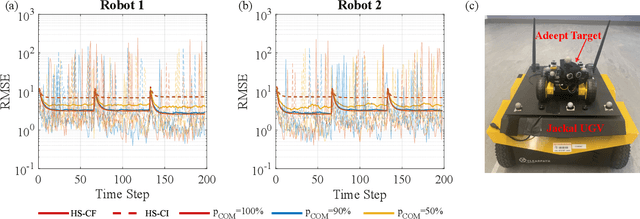

Heterogeneous Bayesian Decentralized Data Fusion: An Empirical Study

Sep 17, 2022

Abstract: In multi-robot applications, inference over large state spaces can often be divided into smaller overlapping sub-problems that can then be collaboratively solved in parallel over 'separate' subsets of states. To this end, the factor graph decentralized data fusion (FG-DDF) framework was developed to analyze and exploit conditional independence in heterogeneous Bayesian decentralized fusion problems, in which robots update and fuse pdfs over different locally overlapping random states. This allows robots to efficiently use smaller probabilistic models and sparse message passing to accurately and scalably fuse relevant local parts of a larger global joint state pdf, while accounting for data dependencies between robots. Whereas prior work required limiting assumptions about network connectivity and model linearity, this paper relaxes these to empirically explore the applicability and robustness of FG-DDF in more general settings. We develop a new heterogeneous fusion rule which generalizes the homogeneous covariance intersection algorithm, and test it in multi-robot tracking and localization scenarios with non-linear motion/observation models under communication dropout. Simulation and linear hardware experiments show that, in practice, the FG-DDF continues to provide consistent filtered estimates under these more practical operating conditions, while reducing computation and communication costs by more than 95%, thus enabling the design of scalable real-world multi-robot systems.
