Learning to Forecast Aleatoric and Epistemic Uncertainties over Long Horizon Trajectories


Feb 17, 2023

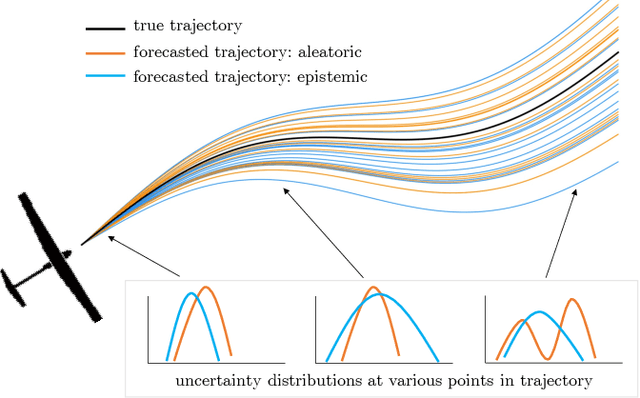

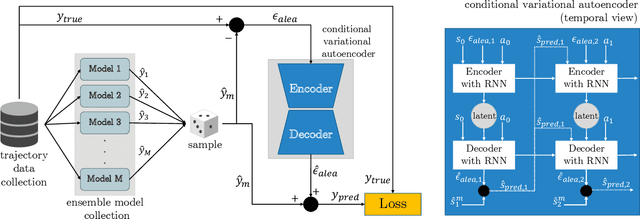

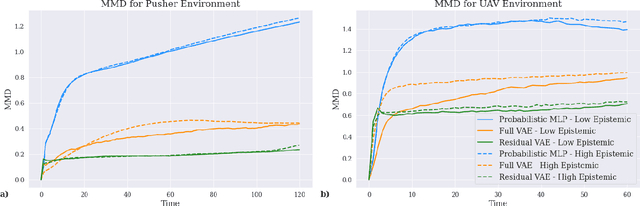

Giving autonomous agents the ability to forecast their own outcomes, and the uncertainty in those outcomes, will allow them to communicate their competencies and be used more safely. We accomplish this by using a learned world model of the agent system to forecast full agent trajectories over long time horizons. Real-world systems involve significant sources of both aleatoric and epistemic uncertainty, which compound and interact over time in the trajectory forecasts. We develop a deep generative world model that quantifies aleatoric uncertainty while incorporating the effects of epistemic uncertainty during the learning process. We show on two reinforcement learning problems that our uncertainty model produces calibrated outcome uncertainty estimates over the full trajectory horizon.
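To make the distinction between the two uncertainty types concrete, the following is a minimal sketch and not the paper's deep generative world model. It assumes a hypothetical ensemble of 1-D linear stochastic models (`a_members`, `noise_std`, and both function names are illustrative choices), rolls each member forward, and splits the forecast variance at every step using the law of total variance: the average within-member variance stands in for aleatoric uncertainty, and the variance of member means stands in for epistemic uncertainty.

```python
import numpy as np


def rollout_ensemble(a_members, noise_std, x0, horizon, n_samples, rng):
    """Sample trajectories from each (hypothetical) ensemble member.

    Member k follows x[t+1] = a_k * x[t] + eps, eps ~ N(0, noise_std**2).
    Returns an array of shape (members, samples, horizon + 1).
    """
    a = np.asarray(a_members, dtype=float)[:, None]   # (K, 1)
    x = np.full((a.shape[0], n_samples), float(x0))   # (K, S)
    traj = [x]
    for _ in range(horizon):
        # The process noise is the aleatoric source in this toy setup.
        x = a * x + noise_std * rng.standard_normal(x.shape)
        traj.append(x)
    return np.stack(traj, axis=-1)


def decompose_variance(traj):
    """Split per-step forecast variance via the law of total variance."""
    aleatoric = traj.var(axis=1).mean(axis=0)   # E_k[ Var(x_t | member k) ]
    epistemic = traj.mean(axis=1).var(axis=0)   # Var_k( E[x_t | member k] )
    return aleatoric, epistemic


rng = np.random.default_rng(0)
# Disagreement between the a_k values plays the role of model (epistemic) uncertainty.
traj = rollout_ensemble([0.90, 0.95, 1.00], noise_std=0.1, x0=1.0,
                        horizon=50, n_samples=2000, rng=rng)
aleatoric, epistemic = decompose_variance(traj)

# Variance of all samples pooled across members, per time step.
total = traj.reshape(-1, traj.shape[-1]).var(axis=0)

for t in (1, 10, 50):
    print(f"t={t:2d}  aleatoric={aleatoric[t]:.4f}  epistemic={epistemic[t]:.4f}")
```

In this toy setup both components grow with the forecast horizon, which illustrates why the two sources compound and interact in long-horizon trajectory forecasts. With equal sample counts per member, the two terms sum exactly to the pooled variance `total`.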
