Rebecca Russell

Deep Modeling of Non-Gaussian Aleatoric Uncertainty

May 30, 2024

Abstract: Deep learning offers promising new ways to accurately model aleatoric uncertainty in robotic estimation systems, particularly when the uncertainty distributions do not conform to traditional assumptions of being fixed and Gaussian. In this study, we formulate and evaluate three fundamental deep learning approaches for conditional probability density modeling to quantify non-Gaussian aleatoric uncertainty: parametric, discretized, and generative modeling. We systematically compare the respective strengths and weaknesses of these three methods on simulated non-Gaussian densities as well as on real-world terrain-relative navigation data. Our results show that these deep learning methods can accurately capture complex uncertainty patterns, highlighting their potential for improving the reliability and robustness of estimation systems.
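Of the three approaches named above, the discretized one is the easiest to illustrate in a few lines. The sketch below is not taken from the paper: it assumes a scalar target, a fixed set of bin edges, and a model that outputs one unnormalized score per bin, and it evaluates the negative log-likelihood of a piecewise-constant density. The function name `discretized_nll` and its arguments are hypothetical.

```python
import numpy as np

def discretized_nll(logits, y, edges):
    """Mean negative log-likelihood of targets y under a piecewise-constant density.

    logits: (N, K) unnormalized scores, one per bin (assumed model output)
    y:      (N,) scalar targets
    edges:  (K + 1,) increasing bin edges covering the target range
    """
    logits = np.asarray(logits, dtype=float)
    y = np.asarray(y, dtype=float)
    edges = np.asarray(edges, dtype=float)
    # Numerically stable log-softmax over bins gives log bin probabilities.
    shifted = logits - logits.max(axis=1, keepdims=True)
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))
    # Locate each target's bin; clip so values on the outer edges stay in range.
    idx = np.clip(np.searchsorted(edges, y, side="right") - 1, 0, len(edges) - 2)
    widths = np.diff(edges)
    # Density = bin probability / bin width, so the log density subtracts log width.
    log_density = log_probs[np.arange(len(y)), idx] - np.log(widths[idx])
    return -log_density.mean()
```

With equal scores on four equal-width bins spanning [0, 1], the implied density is uniform, so the loss is zero. Sharper or multimodal densities show up as unequal bin probabilities, which is what lets this form represent non-Gaussian shapes.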

Surrogate Neural Networks for Efficient Simulation-based Trajectory Planning Optimization

Mar 30, 2023

Abstract: This paper presents a novel methodology that uses surrogate models in the form of neural networks to reduce the computation time of simulation-based optimization of a reference trajectory. Simulation-based optimization is necessary when there is no analytical form of the system accessible, only input-output data that can be used to create a surrogate model of the simulation. Like many high-fidelity simulations, this trajectory planning simulation is highly nonlinear and computationally expensive, making it challenging to optimize iteratively. Through gradient descent optimization, our approach finds the optimal reference trajectory for landing a hypersonic vehicle. In contrast to the large datasets used to create the surrogate models in prior literature, our methodology is specifically designed to minimize the number of simulation executions required by the gradient descent optimizer. We demonstrated this methodology to be more efficient than the standard practice of hand-tuning the inputs through trial-and-error or randomly sampling the input parameter space. Because the input values to the simulation are intelligently selected, our approach reaches better simulation outcomes more rapidly and with higher accuracy. Optimizing the hypersonic vehicle's reference trajectory is very challenging due to the simulation's extreme nonlinearity, but even so, this novel approach found a 74% better-performing reference trajectory compared to nominal, and the numerical results clearly show a substantial reduction in computation time for designing future trajectories.
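The general loop described above (fit a surrogate to a few simulation runs, descend the surrogate's gradient, then spend one more simulation run at the proposed input) can be sketched as follows. This is an illustration under loud assumptions, not the paper's implementation: a quadratic polynomial stands in for the neural network surrogate, the input is one-dimensional, and `simulate` is a hypothetical cheap stand-in for the expensive simulation.

```python
import numpy as np

def simulate(x):
    # Hypothetical stand-in for an expensive black-box simulation (lower is better).
    return (x - 1.5) ** 2 + 0.3 * np.sin(3.0 * x)

def optimize_with_surrogate(sim, x_init, rounds=8, gd_steps=200, lr=0.05,
                            bounds=(-1.0, 4.0)):
    """Return (best input, best output, number of simulation calls)."""
    xs = [float(x) for x in x_init]
    ys = [float(sim(x)) for x in xs]
    for _ in range(rounds):
        # Assumption: quadratic least-squares fit replaces the neural surrogate.
        coeffs = np.polyfit(xs, ys, deg=2)
        grad = np.polyder(coeffs)
        # Gradient descent on the surrogate only, starting from the best input so far.
        x = xs[int(np.argmin(ys))]
        for _ in range(gd_steps):
            x = float(np.clip(x - lr * np.polyval(grad, x), *bounds))
        # One real simulation call per round, at the surrogate's proposed input.
        xs.append(x)
        ys.append(float(sim(x)))
    best = int(np.argmin(ys))
    return xs[best], ys[best], len(ys)

x_best, y_best, n_calls = optimize_with_surrogate(simulate, [-1.0, 1.0, 4.0])
```

The point of the design is the call budget: the inner descent loop runs hundreds of steps but never touches the simulation, so the total number of simulation executions is the initial sample count plus one per round.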

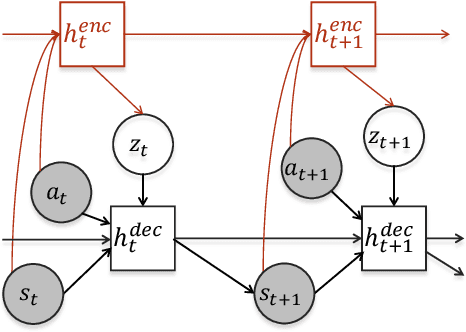

Learning to Forecast Aleatoric and Epistemic Uncertainties over Long Horizon Trajectories

Feb 17, 2023

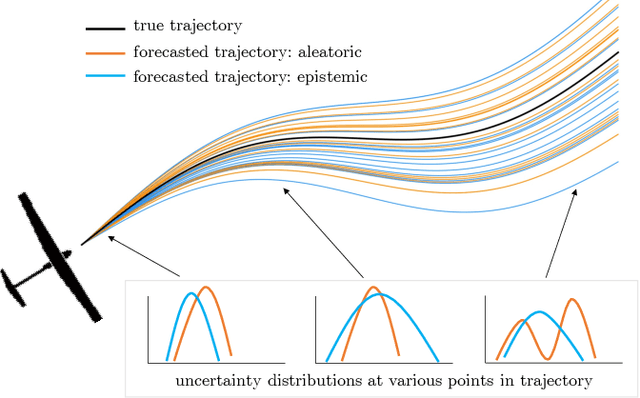

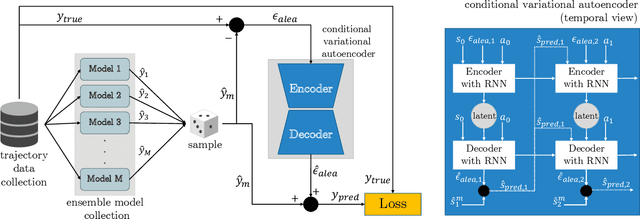

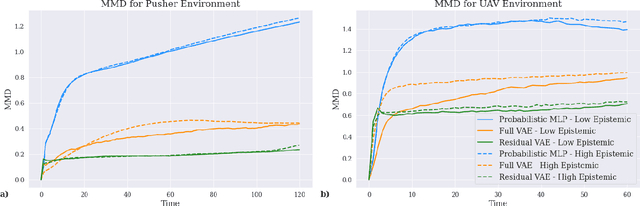

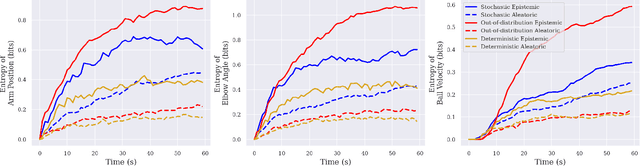

Abstract: Giving autonomous agents the ability to forecast their own outcomes and uncertainty will allow them to communicate their competencies and be used more safely. We accomplish this by using a learned world model of the agent system to forecast full agent trajectories over long time horizons. Real-world systems involve significant sources of both aleatoric and epistemic uncertainty that compound and interact over time in the trajectory forecasts. We develop a deep generative world model that quantifies aleatoric uncertainty while incorporating the effects of epistemic uncertainty during the learning process. We show on two reinforcement learning problems that our uncertainty model produces calibrated outcome uncertainty estimates over the full trajectory horizon.

Learning and Understanding a Disentangled Feature Representation for Hidden Parameters in Reinforcement Learning

Nov 29, 2022

Abstract: Hidden parameters are latent variables in reinforcement learning (RL) environments that are constant over the course of a trajectory. Understanding what, if any, hidden parameters affect a particular environment can aid both the development and appropriate usage of RL systems. We present an unsupervised method to map RL trajectories into a feature space where distance represents the relative difference in system behavior due to hidden parameters. Our approach disentangles the effects of hidden parameters by leveraging a recurrent neural network (RNN) world model as used in model-based RL. First, we alter the standard world model training algorithm to isolate the hidden parameter information in the world model memory. Then, we use a metric learning approach to map the RNN memory into a space with a distance metric approximating a bisimulation metric with respect to the hidden parameters. The resulting disentangled feature space can be used to meaningfully relate trajectories to each other and analyze the hidden parameter. We demonstrate our approach on four hidden parameters across three RL environments. Finally, we present two methods to help identify and understand the effects of hidden parameters on systems.

Symmetry Detection in Trajectory Data for More Meaningful Reinforcement Learning Representations

Nov 29, 2022

Abstract: Knowledge of the symmetries of reinforcement learning (RL) systems can be used to create compressed and semantically meaningful representations of a low-level state space. We present a method of automatically detecting RL symmetries directly from raw trajectory data without requiring active control of the system. Our method generates candidate symmetries and trains a recurrent neural network (RNN) to discriminate between the original trajectories and the transformed trajectories for each candidate symmetry. The RNN discriminator's accuracy for each candidate reveals how symmetric the system is under that transformation. This information can be used to create high-level representations that are invariant to all symmetries on a dataset level and to communicate properties of the RL behavior to users. We show in experiments on two simulated RL use cases (a pusher robot and a UAV flying in wind) that our method can determine the symmetries underlying both the environment physics and the trained RL policy.

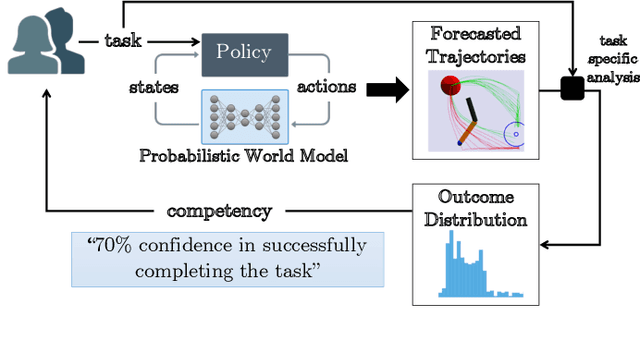

Uncertainty Quantification for Competency Assessment of Autonomous Agents

Jun 21, 2022

Abstract: For safe and reliable deployment in the real world, autonomous agents must elicit appropriate levels of trust from human users. One method to build trust is to have agents assess and communicate their own competencies for performing given tasks. Competency depends on the uncertainties affecting the agent, making accurate uncertainty quantification vital for competency assessment. In this work, we show how ensembles of deep generative models can be used to quantify the agent's aleatoric and epistemic uncertainties when forecasting task outcomes as part of competency assessment.
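One common way to separate the two uncertainty types with an ensemble is a variance decomposition based on the law of total variance. The abstract does not say whether the paper uses this exact decomposition, so the sketch below should be read as an assumption: each ensemble member is taken to output a predictive mean and variance for a scalar outcome, and `decompose_uncertainty` is a hypothetical helper name.

```python
import numpy as np

def decompose_uncertainty(member_means, member_vars):
    """Split ensemble predictive variance into aleatoric and epistemic parts.

    member_means, member_vars: arrays of shape (M, ...) holding each of the
    M ensemble members' predictive mean and variance (assumed model outputs).
    """
    member_means = np.asarray(member_means, dtype=float)
    member_vars = np.asarray(member_vars, dtype=float)
    # Aleatoric: the noise the members themselves predict, averaged over members.
    aleatoric = member_vars.mean(axis=0)
    # Epistemic: disagreement between members about the predicted mean.
    epistemic = member_means.var(axis=0)
    # Law of total variance: the two parts sum to the ensemble's total variance.
    return aleatoric, epistemic, aleatoric + epistemic
```

For example, two members with means 0 and 2 and variances 1 and 3 give an aleatoric variance of 2, an epistemic variance of 1, and a total of 3. Epistemic variance shrinks as members agree, which typically happens with more training data, while aleatoric variance does not.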

Competency Assessment for Autonomous Agents using Deep Generative Models

Mar 23, 2022

Abstract: For autonomous agents to act as trustworthy partners to human users, they must be able to reliably communicate their competency for the tasks they are asked to perform. Towards this objective, we develop probabilistic world models based on deep generative modelling that allow for the simulation of agent trajectories and accurate calculation of tasking outcome probabilities. By combining the strengths of conditional variational autoencoders with recurrent neural networks, the deep generative world model can probabilistically forecast trajectories over long horizons to task completion. We show how these forecasted trajectories can be used to calculate outcome probability distributions, which enable the precise assessment of agent competency for specific tasks and initial settings.
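Turning sampled trajectories into outcome probabilities can be as simple as a Monte Carlo count. The sketch below assumes the trajectories have already been sampled from some generative world model and that a task-specific rule labels each one. Both the `outcome_probabilities` helper and the example `classify` rule are hypothetical and not taken from the paper.

```python
import numpy as np

def outcome_probabilities(trajectories, classify):
    """Estimate outcome probabilities as label frequencies over sampled trajectories.

    trajectories: iterable of sampled trajectories (any format classify accepts)
    classify:     function mapping one trajectory to an outcome label (string)
    """
    labels = [classify(t) for t in trajectories]
    names, counts = np.unique(labels, return_counts=True)
    # Relative frequency of each label is the Monte Carlo probability estimate.
    return {str(name): float(count) / len(labels) for name, count in zip(names, counts)}

# Illustrative rule: a trajectory succeeds if its final state reaches 2 or more.
samples = [np.array([0, 1, 2]), np.array([0, -1, -2]),
           np.array([0, 1, 3]), np.array([0, 0, 1])]
probs = outcome_probabilities(samples, lambda t: "success" if t[-1] >= 2 else "failure")
```

Here two of the four sampled trajectories end at 2 or above, so both outcomes receive probability 0.5. The estimate sharpens as more trajectories are sampled from the world model.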

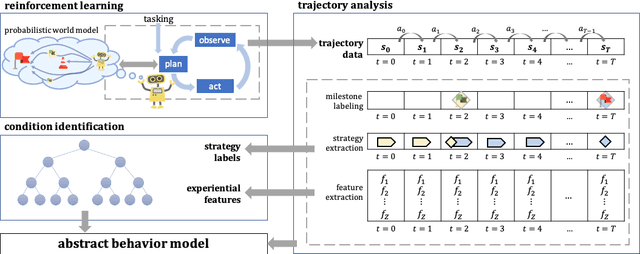

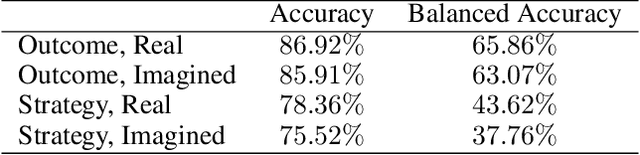

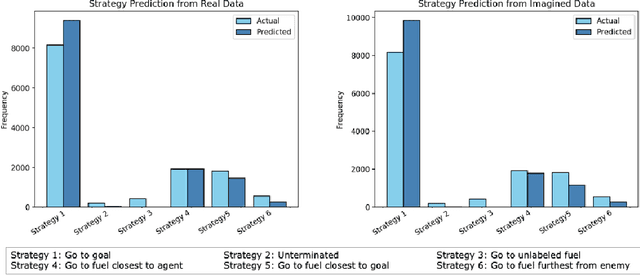

Explaining Conditions for Reinforcement Learning Behaviors from Real and Imagined Data

Nov 17, 2020

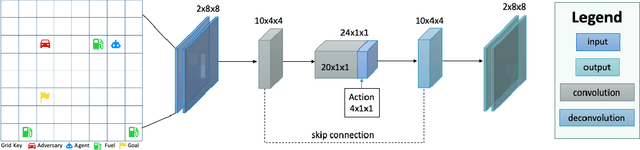

Abstract: The deployment of reinforcement learning (RL) in the real world comes with challenges in calibrating user trust and expectations. As a step toward developing RL systems that are able to communicate their competencies, we present a method of generating human-interpretable abstract behavior models that identify the experiential conditions leading to different task execution strategies and outcomes. Our approach consists of extracting experiential features from state representations, abstracting strategy descriptors from trajectories, and training an interpretable decision tree that identifies the conditions most predictive of different RL behaviors. We demonstrate our method on trajectory data generated from interactions with the environment and on imagined trajectory data that comes from a trained probabilistic world model in a model-based RL setting.

Real-Time Object Pose Estimation with Pose Interpreter Networks

Aug 03, 2018

Abstract: In this work, we introduce pose interpreter networks for 6-DoF object pose estimation. In contrast to other CNN-based approaches to pose estimation that require expensively annotated object pose data, our pose interpreter network is trained entirely on synthetic pose data. We use object masks as an intermediate representation to bridge real and synthetic data. We show that when combined with a segmentation model trained on RGB images, our synthetically trained pose interpreter network is able to generalize to real data. Our end-to-end system for object pose estimation runs in real-time (20 Hz) on live RGB data, without using depth information or ICP refinement.

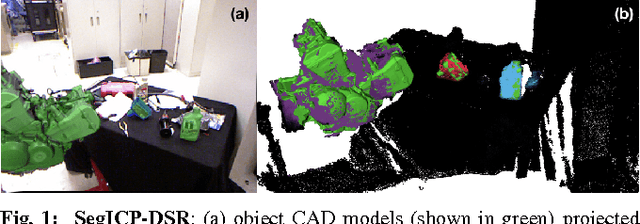

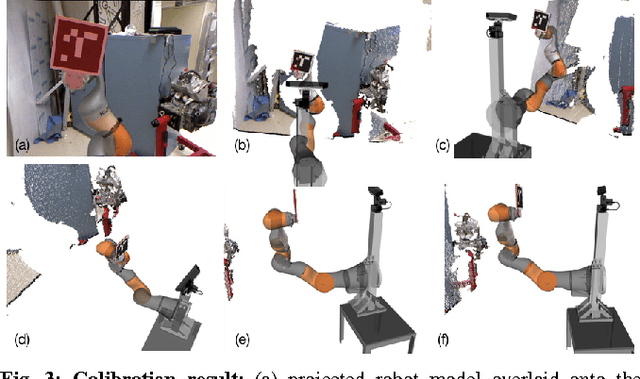

SegICP-DSR: Dense Semantic Scene Reconstruction and Registration

Nov 06, 2017

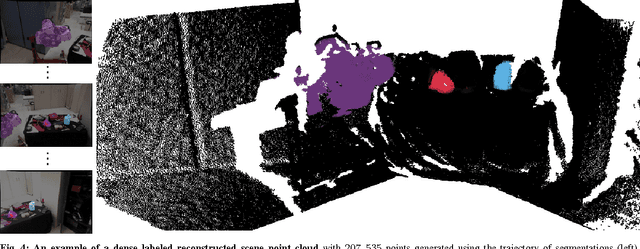

Abstract: To enable autonomous robotic manipulation in unstructured environments, we present SegICP-DSR, a real-time, dense, semantic scene reconstruction and pose estimation algorithm that achieves mm-level pose accuracy and standard deviation (7.9 mm, σ = 7.6 mm and 1.7 deg, σ = 0.7 deg) and successfully identified the object pose in 97% of test cases. This represents a 29% increase in accuracy, and a 14% increase in success rate compared to SegICP in cluttered, unstructured environments. The performance increase of SegICP-DSR arises from (1) improved deep semantic segmentation under adversarial training, (2) precise automated calibration of the camera intrinsic and extrinsic parameters, (3) viewpoint-specific ray-casting of the model geometry, and (4) dense semantic ElasticFusion point clouds for registration. We benchmark the performance of SegICP-DSR on thousands of pose-annotated video frames and demonstrate its accuracy and efficacy on two tight-tolerance grasping and insertion tasks using a KUKA LBR iiwa robotic arm.
