SegICP-DSR: Dense Semantic Scene Reconstruction and Registration

Paper and Code

Nov 06, 2017

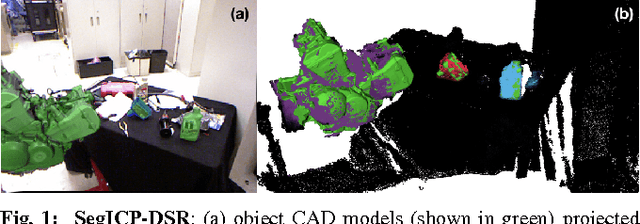

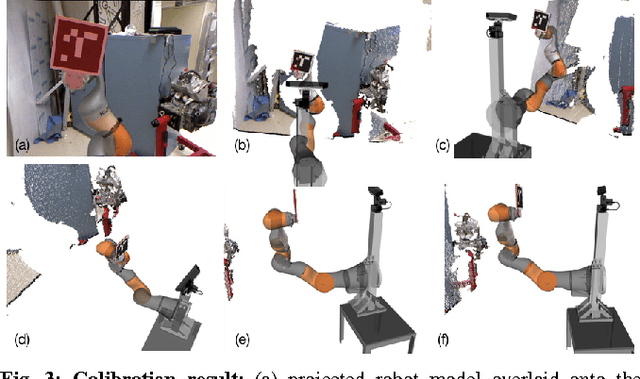

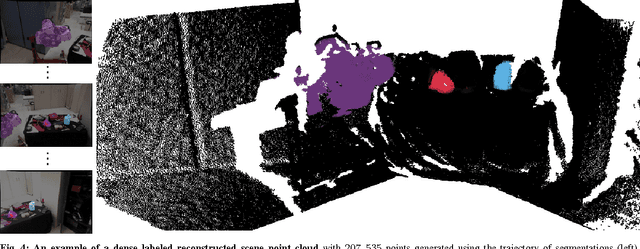

To enable autonomous robotic manipulation in unstructured environments, we present SegICP-DSR, a real-time, dense, semantic scene reconstruction and pose estimation algorithm that achieves mm-level pose accuracy and standard deviation (7.9 mm, σ = 7.6 mm and 1.7 deg, σ = 0.7 deg) and successfully identifies the object pose in 97% of test cases. This represents a 29% increase in accuracy and a 14% increase in success rate compared to SegICP in cluttered, unstructured environments. The performance increase of SegICP-DSR arises from (1) improved deep semantic segmentation under adversarial training, (2) precise automated calibration of the camera intrinsic and extrinsic parameters, (3) viewpoint-specific ray-casting of the model geometry, and (4) dense semantic ElasticFusion point clouds for registration. We benchmark the performance of SegICP-DSR on thousands of pose-annotated video frames and demonstrate its accuracy and efficacy on two tight-tolerance grasping and insertion tasks using a KUKA LBR iiwa robotic arm.
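The pose errors quoted above are a translation error in millimetres and a rotation error in degrees. The abstract does not define how they are computed, so the following is only a minimal sketch of one common convention: Euclidean distance between the estimated and ground-truth positions, and the angle of the relative rotation between the two orientations. The function names `pose_error` and `rot_z` and the sample values are hypothetical and are not taken from the paper or its code.

```python
import math

def pose_error(R_est, t_est, R_gt, t_gt):
    """Return (translation error, rotation error in degrees).

    R_* are 3x3 rotation matrices given as nested lists; t_* are 3-vectors.
    The translation error is in the same unit as t_* (e.g. mm).
    This is an assumed metric, not necessarily the one used in the paper.
    """
    # Euclidean distance between the two positions.
    t_err = math.dist(t_est, t_gt)
    # trace(R_est^T @ R_gt) equals the element-wise product summed over all entries.
    trace = sum(R_est[i][j] * R_gt[i][j] for i in range(3) for j in range(3))
    # Angle of the relative rotation; clamp to guard against rounding error.
    cos_angle = max(-1.0, min(1.0, (trace - 1.0) / 2.0))
    return t_err, math.degrees(math.acos(cos_angle))

def rot_z(deg):
    """Rotation matrix for a rotation of `deg` degrees about the z axis."""
    c, s = math.cos(math.radians(deg)), math.sin(math.radians(deg))
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]

# Hypothetical example: estimate is offset by (3, 4, 0) mm and rotated 2 deg about z.
identity = rot_z(0.0)
t_err_mm, r_err_deg = pose_error(rot_z(2.0), (3.0, 4.0, 0.0), identity, (0.0, 0.0, 0.0))
print(f"translation error: {t_err_mm:.2f} mm, rotation error: {r_err_deg:.2f} deg")
```

Averaging these two quantities over annotated frames would yield figures of the same kind as the mean and σ values reported in the abstract, under the assumed metric.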
