Jay M. Wong

SegICP-DSR: Dense Semantic Scene Reconstruction and Registration

Nov 06, 2017

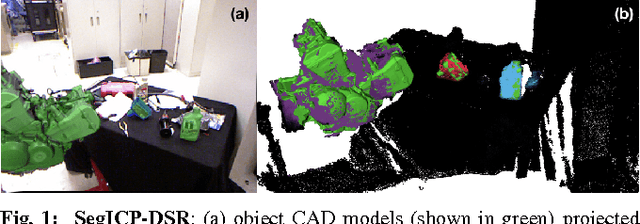

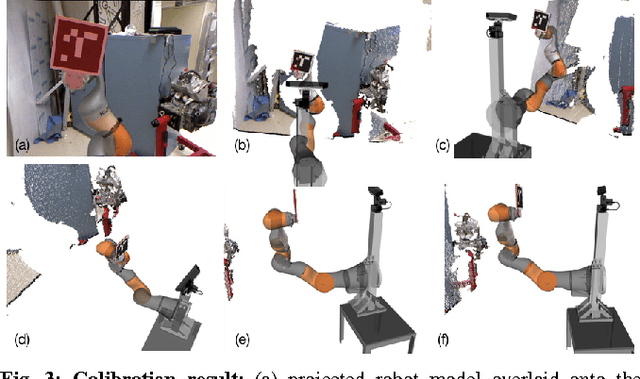

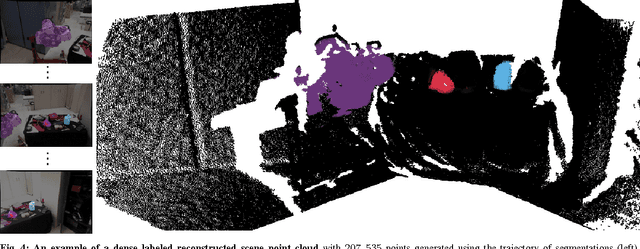

Abstract: To enable autonomous robotic manipulation in unstructured environments, we present SegICP-DSR, a real-time, dense, semantic scene reconstruction and pose estimation algorithm that achieves mm-level pose accuracy and standard deviation (7.9 mm, $\sigma$ = 7.6 mm and 1.7 deg, $\sigma$ = 0.7 deg) and successfully identified the object pose in 97% of test cases. This represents a 29% increase in accuracy and a 14% increase in success rate compared to SegICP in cluttered, unstructured environments. The performance increase of SegICP-DSR arises from (1) improved deep semantic segmentation under adversarial training, (2) precise automated calibration of the camera intrinsic and extrinsic parameters, (3) viewpoint-specific ray-casting of the model geometry, and (4) dense semantic ElasticFusion point clouds for registration. We benchmark the performance of SegICP-DSR on thousands of pose-annotated video frames and demonstrate its accuracy and efficacy on two tight-tolerance grasping and insertion tasks using a KUKA LBR iiwa robotic arm.

SegICP: Integrated Deep Semantic Segmentation and Pose Estimation

Sep 05, 2017

Abstract: Recent robotic manipulation competitions have highlighted that sophisticated robots still struggle to achieve fast and reliable perception of task-relevant objects in complex, realistic scenarios. To improve these systems' perceptive speed and robustness, we present SegICP, a novel integrated solution to object recognition and pose estimation. SegICP couples convolutional neural networks and multi-hypothesis point cloud registration to achieve both robust pixel-wise semantic segmentation as well as accurate and real-time 6-DOF pose estimation for relevant objects. Our architecture achieves 1 cm position error and $<5^\circ$ angle error in real time without an initial seed. We evaluate and benchmark SegICP against an annotated dataset generated by motion capture.

Towards Lifelong Self-Supervision: A Deep Learning Direction for Robotics

Nov 01, 2016

Abstract: Despite outstanding success in vision amongst other domains, many of the recent deep learning approaches have evident drawbacks for robots. This manuscript surveys recent work in the literature that pertains to applying deep learning systems to the robotics domain, either as a means of estimation or as a tool to resolve motor commands directly from raw percepts. These recent advances are only one piece of the puzzle. We suggest that deep learning as a tool alone is insufficient in building a unified framework to acquire general intelligence. For this reason, we complement our survey with insights from cognitive development and refer to ideas from classical control theory, producing an integrated direction for a lifelong learning architecture.
