Aastha Acharya

Deep Modeling of Non-Gaussian Aleatoric Uncertainty

May 30, 2024

Abstract: Deep learning offers promising new ways to accurately model aleatoric uncertainty in robotic estimation systems, particularly when the uncertainty distributions do not conform to traditional assumptions of being fixed and Gaussian. In this study, we formulate and evaluate three fundamental deep learning approaches for conditional probability density modeling to quantify non-Gaussian aleatoric uncertainty: parametric, discretized, and generative modeling. We systematically compare the respective strengths and weaknesses of these three methods on simulated non-Gaussian densities as well as on real-world terrain-relative navigation data. Our results show that these deep learning methods can accurately capture complex uncertainty patterns, highlighting their potential for improving the reliability and robustness of estimation systems.
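
To make the difference between the parametric and discretized approaches concrete, here is a minimal standard-library sketch, assuming a one-dimensional target and network outputs that are simply passed in as lists. The helper names `gmm_nll` and `binned_nll` are hypothetical and not taken from the paper. A Gaussian mixture stands in for the parametric family, a softmax over equal-width bins stands in for the discretized family, and the generative approach (which represents the density only through samples) is not shown.

```python
import math

def gmm_nll(y, weights, means, stds):
    """Negative log-likelihood of scalar y under a 1-D Gaussian mixture.

    In a parametric model, a network would output (weights, means, stds)
    conditioned on the input; here they are passed in directly.
    """
    density = 0.0
    for w, mu, s in zip(weights, means, stds):
        z = (y - mu) / s
        density += w * math.exp(-0.5 * z * z) / (s * math.sqrt(2.0 * math.pi))
    return -math.log(density)

def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]

def binned_nll(y, logits, lo, hi):
    """NLL of y under a piecewise-constant density on equal-width bins over [lo, hi).

    In a discretized model, a network would output one logit per bin.
    """
    n_bins = len(logits)
    width = (hi - lo) / n_bins
    idx = int((y - lo) // width)
    if not 0 <= idx < n_bins:
        raise ValueError("y falls outside the discretized support")
    probs = softmax(logits)
    # Dividing a bin's probability mass by its width gives a density value.
    return -math.log(probs[idx] / width)

# A bimodal target: a two-component mixture assigns y = 1.0 a lower NLL
# than a single wide Gaussian centered between the modes.
print(gmm_nll(1.0, [1.0], [0.0], [1.0]))
print(gmm_nll(1.0, [0.5, 0.5], [-1.0, 1.0], [0.3, 0.3]))
print(binned_nll(2.5, [0.0, 0.0, 0.0, 0.0], 0.0, 4.0))
```

The trade-off the abstract alludes to is visible here: the mixture needs a chosen number of components, while the binned density needs a fixed support and bin resolution.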

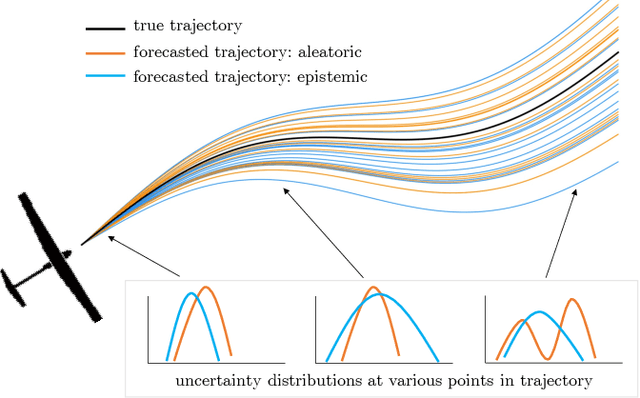

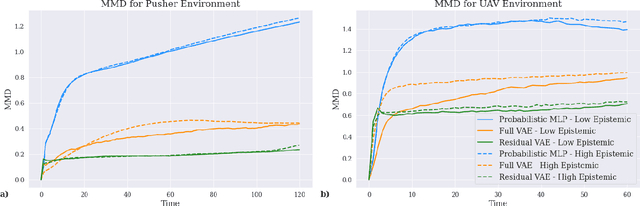

Learning to Forecast Aleatoric and Epistemic Uncertainties over Long Horizon Trajectories

Feb 17, 2023

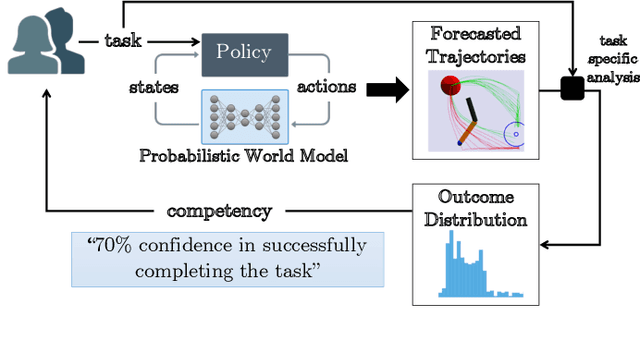

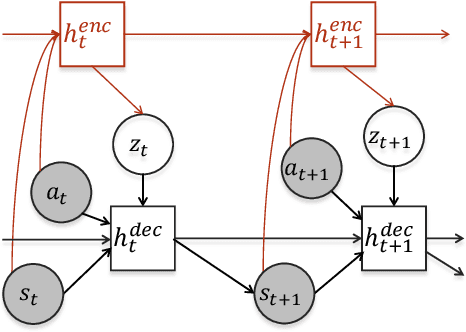

Abstract: Giving autonomous agents the ability to forecast their own outcomes and uncertainty will allow them to communicate their competencies and be used more safely. We accomplish this by using a learned world model of the agent system to forecast full agent trajectories over long time horizons. Real-world systems involve significant sources of both aleatoric and epistemic uncertainty that compound and interact over time in the trajectory forecasts. We develop a deep generative world model that quantifies aleatoric uncertainty while incorporating the effects of epistemic uncertainty during the learning process. We show on two reinforcement learning problems that our uncertainty model produces calibrated outcome uncertainty estimates over the full trajectory horizon.
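
The abstract does not say which calibration metric is used. One common check for sample-based forecasts is interval coverage: for a calibrated forecaster, the observed value should fall inside the central 90% interval of the forecast samples about 90% of the time. The sketch below assumes scalar outcomes and uses a synthetic Gaussian stand-in for the world model; `interval_coverage` and `empirical_quantile` are hypothetical helpers, not the paper's code.

```python
import random

def empirical_quantile(sorted_samples, q):
    """Nearest-rank quantile of an already sorted list, for q in [0, 1]."""
    idx = round(q * (len(sorted_samples) - 1))
    return sorted_samples[idx]

def interval_coverage(sample_sets, observed, level=0.9):
    """Fraction of observed values inside the central `level` interval of their samples.

    sample_sets[i] holds forecast samples for one (trajectory, time step) pair and
    observed[i] is the value that actually occurred there. A calibrated forecaster
    should return a fraction close to `level`.
    """
    tail = (1.0 - level) / 2.0
    hits = 0
    for samples, y in zip(sample_sets, observed):
        s = sorted(samples)
        lo = empirical_quantile(s, tail)
        hi = empirical_quantile(s, 1.0 - tail)
        if lo <= y <= hi:
            hits += 1
    return hits / len(observed)

# Synthetic demo: samples drawn from the same N(0, 1) as the outcomes should
# score near 0.9; samples shrunk 5x (overconfident) should score far lower.
rng = random.Random(0)
forecasts = [[rng.gauss(0.0, 1.0) for _ in range(400)] for _ in range(300)]
outcomes = [rng.gauss(0.0, 1.0) for _ in range(300)]
overconfident = [[0.2 * x for x in s] for s in forecasts]
print(interval_coverage(forecasts, outcomes))
print(interval_coverage(overconfident, outcomes))
```

To check calibration over the full horizon rather than in aggregate, the same function can be applied separately to the pairs belonging to each forecast step.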

AAAI 2022 Fall Symposium: Lessons Learned for Autonomous Assessment of Machine Abilities

Jan 13, 2023

Abstract: Modern civilian and military systems have created a demand for sophisticated intelligent autonomous machines capable of operating in uncertain, dynamic environments. Such systems are realizable thanks in large part to major advances in perception and decision-making techniques, which in turn have been propelled forward by modern machine learning tools. However, these newer forms of intelligent autonomy raise questions about when and how communicating the operational intent of autonomous agents, and assessing their actual versus supposed capabilities, affect overall performance. This symposium examines the possibilities for enabling intelligent autonomous systems to self-assess and communicate their ability to effectively execute assigned tasks, as well as to reason about the overall limits of their competencies and maintain operability within those limits. The symposium brings together researchers working in this burgeoning area to share lessons learned, identify major theoretical and practical challenges encountered so far, and explore potential avenues for future research and real-world applications.

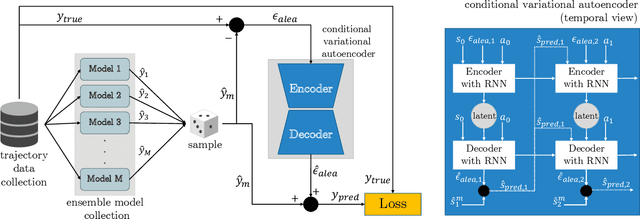

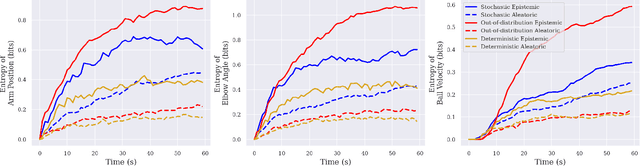

Uncertainty Quantification for Competency Assessment of Autonomous Agents

Jun 21, 2022

Abstract: For safe and reliable deployment in the real world, autonomous agents must elicit appropriate levels of trust from human users. One method to build trust is to have agents assess and communicate their own competencies for performing given tasks. Competency depends on the uncertainties affecting the agent, making accurate uncertainty quantification vital for competency assessment. In this work, we show how ensembles of deep generative models can be used to quantify the agent's aleatoric and epistemic uncertainties when forecasting task outcomes as part of competency assessment.
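
The abstract does not spell out how the ensemble separates the two uncertainty types. A widely used decomposition applies the law of total variance to ensemble samples: the average spread within each member is read as aleatoric, and the spread of the member means is read as epistemic. The sketch below assumes scalar outcomes and equal sample counts per member (which makes the identity exact with population variances); `decompose_uncertainty` is a hypothetical name, and the Gaussian samplers are placeholders for trained generative models.

```python
import random
import statistics

def decompose_uncertainty(member_samples):
    """Split the predictive variance of a scalar outcome into two parts.

    member_samples[m] holds samples drawn from ensemble member m. Uses
        Var(y) = E_m[Var(y | m)] + Var_m(E[y | m])
    with population variances, which is exact for equal-sized sample lists.
    """
    means = [statistics.fmean(s) for s in member_samples]
    variances = [statistics.pvariance(s) for s in member_samples]
    aleatoric = statistics.fmean(variances)  # average spread within each member
    epistemic = statistics.pvariance(means)  # disagreement between members
    return aleatoric, epistemic

# Placeholder ensemble: five Gaussian samplers with slightly different means,
# standing in for generative models that disagree a little after training.
rng = random.Random(0)
members = [[rng.gauss(mu, 1.0) for _ in range(1000)] for mu in (0.0, 0.3, -0.2, 0.5, 0.1)]
alea, epi = decompose_uncertainty(members)
print(f"aleatoric ~ {alea:.3f}, epistemic ~ {epi:.3f}")
```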

Competency Assessment for Autonomous Agents using Deep Generative Models

Mar 23, 2022

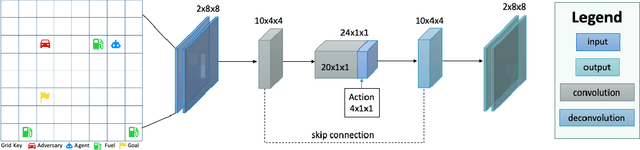

Abstract: For autonomous agents to act as trustworthy partners to human users, they must be able to reliably communicate their competency for the tasks they are asked to perform. Towards this objective, we develop probabilistic world models based on deep generative modelling that allow for the simulation of agent trajectories and accurate calculation of tasking outcome probabilities. By combining the strengths of conditional variational autoencoders with recurrent neural networks, the deep generative world model can probabilistically forecast trajectories over long horizons to task completion. We show how these forecasted trajectories can be used to calculate outcome probability distributions, which enable the precise assessment of agent competency for specific tasks and initial settings.
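
Turning sampled trajectories into an outcome probability distribution amounts to Monte Carlo estimation: roll the generative model forward many times from the same initial setting, label each trajectory with its outcome, and normalize the counts. In the sketch below, `sample_next_state` is a hypothetical noisy-drift placeholder for one sampling step of a trained CVAE + RNN world model, and the goal/hazard task and outcome labels are likewise invented for illustration.

```python
import random

def sample_next_state(state, rng):
    """Hypothetical stand-in for one sampling step of a learned world model.

    A trained CVAE + RNN would sample the next state conditioned on the
    trajectory so far; here a noisy drift in +x plays that role.
    """
    x, y = state
    return (x + 0.1 + rng.gauss(0.0, 0.2), y + rng.gauss(0.0, 0.2))

def rollout(initial_state, horizon, rng):
    """Forecast one full trajectory by repeatedly sampling the next state."""
    trajectory = [initial_state]
    for _ in range(horizon):
        trajectory.append(sample_next_state(trajectory[-1], rng))
    return trajectory

def classify_outcome(trajectory, goal_x=2.0, hazard_y=1.0):
    """Label a trajectory by the first terminal event it reaches (hypothetical task)."""
    for x, y in trajectory:
        if abs(y) > hazard_y:
            return "hazard"
        if x >= goal_x:
            return "success"
    return "timeout"

def outcome_distribution(initial_state, n_rollouts=2000, horizon=30, seed=0):
    """Monte Carlo estimate of task-outcome probabilities from sampled trajectories."""
    rng = random.Random(seed)
    counts = {"success": 0, "hazard": 0, "timeout": 0}
    for _ in range(n_rollouts):
        counts[classify_outcome(rollout(initial_state, horizon, rng))] += 1
    return {name: c / n_rollouts for name, c in counts.items()}

print(outcome_distribution((0.0, 0.0)))
```

Because each probability is a sample proportion, its standard error shrinks roughly as one over the square root of `n_rollouts`, which is the main knob for how precise the competency estimate can be.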

Explaining Conditions for Reinforcement Learning Behaviors from Real and Imagined Data

Nov 17, 2020

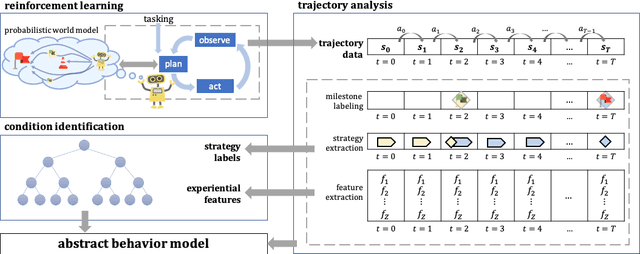

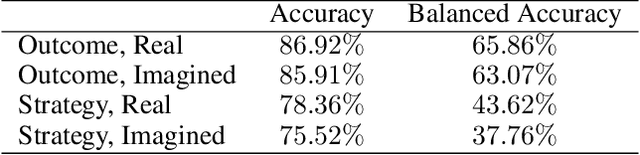

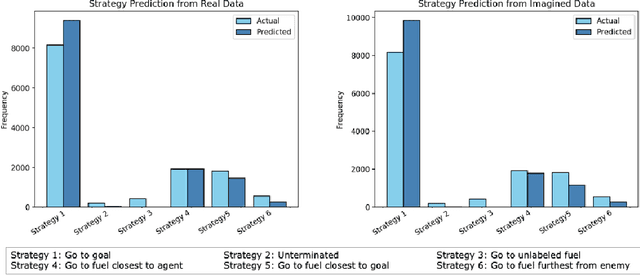

Abstract: The deployment of reinforcement learning (RL) in the real world comes with challenges in calibrating user trust and expectations. As a step toward developing RL systems that are able to communicate their competencies, we present a method of generating human-interpretable abstract behavior models that identify the experiential conditions leading to different task execution strategies and outcomes. Our approach consists of extracting experiential features from state representations, abstracting strategy descriptors from trajectories, and training an interpretable decision tree that identifies the conditions most predictive of different RL behaviors. We demonstrate our method on trajectory data generated from interactions with the environment and on imagined trajectory data that comes from a trained probabilistic world model in a model-based RL setting.
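
The abstract names the final step (an interpretable decision tree over experiential features that predicts strategy descriptors) but not its implementation. As a hedged, dependency-free sketch, the CART-style learner below greedily picks the feature threshold that minimizes weighted Gini impurity and prints the result as if/else rules. The feature names (`dist_to_obstacle`, `fuel`) and strategy labels (`detour`, `direct`) are hypothetical; in practice a library implementation such as scikit-learn's `DecisionTreeClassifier` would be the more likely choice.

```python
from collections import Counter

def gini(labels):
    """Gini impurity of a non-empty list of class labels."""
    n = len(labels)
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())

def best_split(rows, labels, features):
    """Return the (feature, threshold) that most reduces weighted Gini impurity, or None."""
    best, best_score = None, gini(labels)
    n = len(rows)
    for f in features:
        for t in sorted({r[f] for r in rows}):
            left = [lab for r, lab in zip(rows, labels) if r[f] <= t]
            right = [lab for r, lab in zip(rows, labels) if r[f] > t]
            if not left or not right:
                continue
            score = (len(left) * gini(left) + len(right) * gini(right)) / n
            if score < best_score:
                best, best_score = (f, t), score
    return best

def build_tree(rows, labels, features, depth=0, max_depth=2):
    """Grow a depth-limited tree; leaves hold the majority strategy label."""
    split = best_split(rows, labels, features) if depth < max_depth else None
    if split is None:
        return Counter(labels).most_common(1)[0][0]
    f, t = split
    left = [(r, lab) for r, lab in zip(rows, labels) if r[f] <= t]
    right = [(r, lab) for r, lab in zip(rows, labels) if r[f] > t]
    return (f, t,
            build_tree([r for r, _ in left], [lab for _, lab in left], features, depth + 1, max_depth),
            build_tree([r for r, _ in right], [lab for _, lab in right], features, depth + 1, max_depth))

def print_rules(tree, indent=""):
    """Print the tree as nested human-readable if/else rules."""
    if not isinstance(tree, tuple):
        print(f"{indent}-> {tree}")
        return
    f, t, left, right = tree
    print(f"{indent}if {f} <= {t}:")
    print_rules(left, indent + "    ")
    print(f"{indent}else:")
    print_rules(right, indent + "    ")

# Hypothetical experiential features per episode and the strategy observed in each.
episodes = [
    {"dist_to_obstacle": 0.5, "fuel": 0.9},
    {"dist_to_obstacle": 0.8, "fuel": 0.4},
    {"dist_to_obstacle": 3.0, "fuel": 0.7},
    {"dist_to_obstacle": 4.0, "fuel": 0.2},
]
strategies = ["detour", "detour", "direct", "direct"]
print_rules(build_tree(episodes, strategies, ["dist_to_obstacle", "fuel"]))
```

Limiting `max_depth` keeps the extracted rules short enough for a human to read, which is the point of using a tree here rather than a more accurate but opaque classifier.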