Nicholas Conlon

Dynamic Competency Self-Assessment for Autonomous Agents

Mar 03, 2023

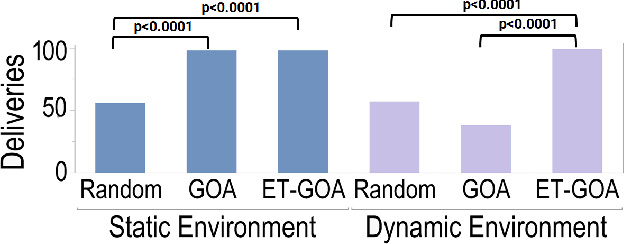

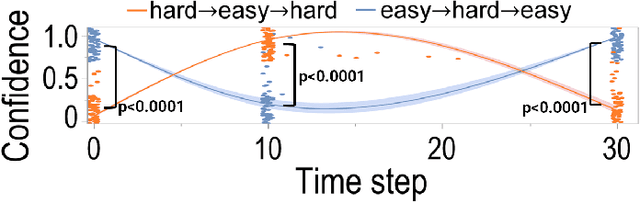

Abstract: As autonomous robots are deployed in increasingly complex environments, platform degradation, environmental uncertainties, and deviations from validated operation conditions can make it difficult for human partners to understand robot capabilities and limitations. The ability for a robot to self-assess its competency in dynamic and uncertain environments will be a crucial next step in successful human-robot teaming. This work presents and evaluates an Event-Triggered Generalized Outcome Assessment (ET-GOA) algorithm for autonomous agents to dynamically assess task confidence during execution. The algorithm uses a fast online statistical test of the agent's observations and its model predictions to decide when competency assessment is needed. We provide experimental results using ET-GOA to generate competency reports during a simulated delivery task and suggest future research directions for self-assessing agents.

AAAI 2022 Fall Symposium: Lessons Learned for Autonomous Assessment of Machine Abilities

Jan 13, 2023

Abstract: Modern civilian and military systems have created a demand for sophisticated intelligent autonomous machines capable of operating in uncertain dynamic environments. Such systems are realizable thanks in large part to major advances in perception and decision-making techniques, which in turn have been propelled forward by modern machine learning tools. However, these newer forms of intelligent autonomy raise questions about when and how communicating the operational intent of autonomous agents, and assessments of their actual vs. supposed capabilities, affects overall performance. This symposium examines the possibilities for enabling intelligent autonomous systems to self-assess and communicate their ability to effectively execute assigned tasks, as well as reason about the overall limits of their competencies and maintain operability within those limits. The symposium brings together researchers working in this burgeoning area to share lessons learned, identify major theoretical and practical challenges encountered so far, and discuss potential avenues for future research and real-world applications.

Investigating the Effects of Robot Proficiency Self-Assessment on Trust and Performance

Mar 19, 2022

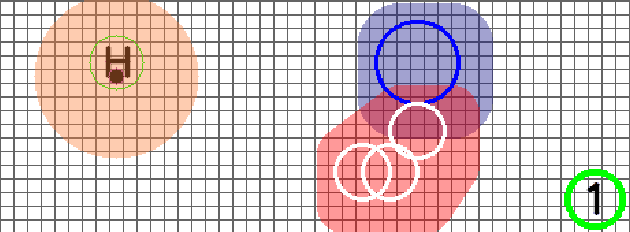

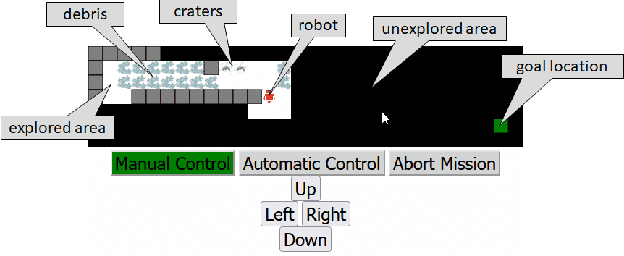

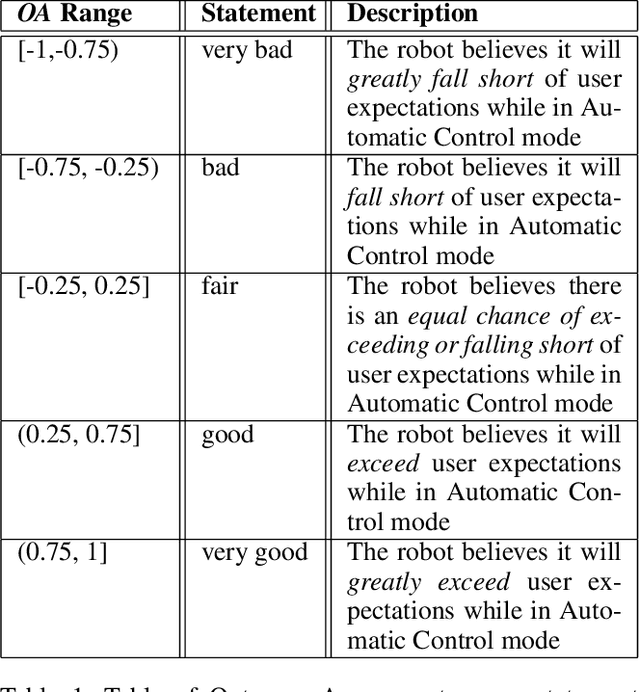

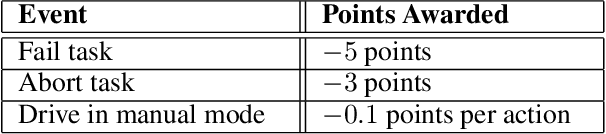

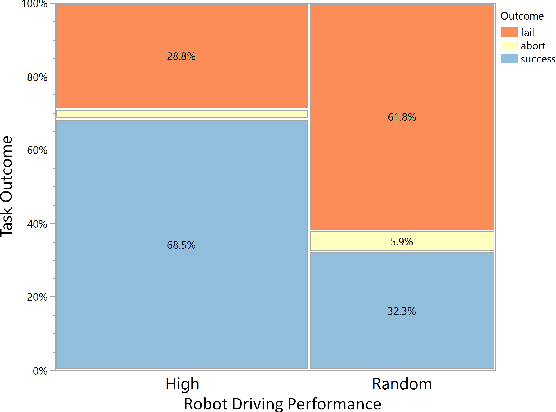

Abstract: Human-robot teams will soon be expected to accomplish complex tasks in high-risk and uncertain environments. Here, the human may not necessarily be a robotics expert, but will need to establish a baseline understanding of the robot's abilities in order to appropriately utilize and rely on the robot. This willingness to rely, also known as trust, is based partly on the human's belief in the robot's proficiency at a given task. If trust is too high, the human may push the robot beyond its capabilities. If trust is too low, the human may not utilize it when they otherwise could have, wasting precious resources. In this work, we develop and execute an online human-subjects study to investigate how robot proficiency self-assessment reports based on Factorized Machine Self-Confidence affect operator trust and task performance in a grid world navigation task. Additionally, we present and analyze a metric for trust level assessment, which measures the allocation of control between an operator and robot when the human teammate is free to switch between teleoperation and autonomous control. Our results show that an a priori robot self-assessment report aligns operator trust with robot proficiency, and leads to performance improvements and small increases in self-reported trust.
