Christopher Reale

Learning and Understanding a Disentangled Feature Representation for Hidden Parameters in Reinforcement Learning

Nov 29, 2022

Abstract:Hidden parameters are latent variables in reinforcement learning (RL) environments that are constant over the course of a trajectory. Understanding what, if any, hidden parameters affect a particular environment can aid both the development and appropriate usage of RL systems. We present an unsupervised method to map RL trajectories into a feature space where distance represents the relative difference in system behavior due to hidden parameters. Our approach disentangles the effects of hidden parameters by leveraging a recurrent neural network (RNN) world model as used in model-based RL. First, we alter the standard world model training algorithm to isolate the hidden parameter information in the world model memory. Then, we use a metric learning approach to map the RNN memory into a space with a distance metric approximating a bisimulation metric with respect to the hidden parameters. The resulting disentangled feature space can be used to meaningfully relate trajectories to each other and analyze the hidden parameter. We demonstrate our approach on four hidden parameters across three RL environments. Finally, we present two methods to help identify and understand the effects of hidden parameters on systems.

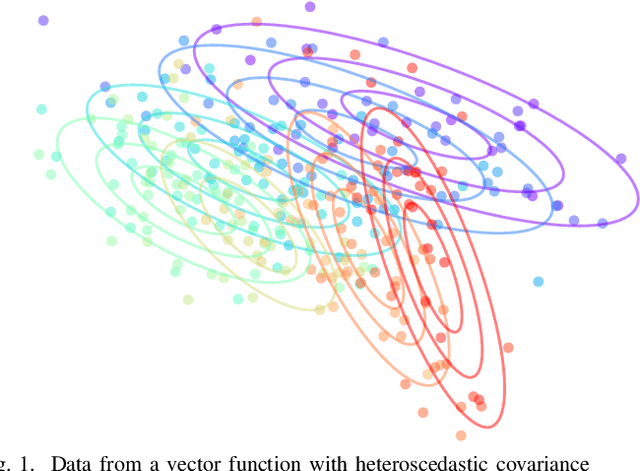

Multivariate Uncertainty in Deep Learning

Oct 31, 2019

Abstract:Deep learning is increasingly used for state estimation problems such as tracking, navigation, and pose estimation. The uncertainties associated with these measurements are typically assumed to be a fixed covariance matrix. For many scenarios this assumption is inaccurate, leading to worse subsequent filtered state estimates. We show how to model multivariate uncertainty for regression problems with neural networks, incorporating both aleatoric and epistemic sources of heteroscedastic uncertainty. We train a deep uncertainty covariance matrix model in two ways: directly using a multivariate Gaussian density loss function, and indirectly using end-to-end training through a Kalman filter. We experimentally show in a visual tracking problem the large impact that accurate multivariate uncertainty quantification can have on Kalman filter estimation for both in-domain and out-of-domain evaluation data.
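As a rough illustration of the "multivariate Gaussian density loss" mentioned in the abstract, the sketch below computes the negative log-likelihood of a target under a predicted mean and covariance. The abstract does not say how the covariance is parameterized. The Cholesky-factor form used here (a lower-triangular `L` with positive diagonal, so that the covariance is `L @ L.T`) is an assumption chosen because it keeps the covariance positive definite, and the function name `gaussian_nll` is hypothetical.

```python
import numpy as np

def gaussian_nll(y, mu, L):
    """Negative log-likelihood of y under N(mu, L @ L.T).

    Assumption (not from the abstract): the covariance is supplied as a
    lower-triangular Cholesky factor L with a strictly positive diagonal.
    """
    d = y.shape[0]
    residual = y - mu
    # Solve L z = residual, so that z @ z equals the squared Mahalanobis distance.
    z = np.linalg.solve(L, residual)
    # log det(L @ L.T) = 2 * sum(log(diag(L)))
    log_det = 2.0 * np.sum(np.log(np.diag(L)))
    return 0.5 * (z @ z + log_det + d * np.log(2.0 * np.pi))

# Example: a 2-D target with a correlated predicted covariance.
y = np.array([1.0, -1.0])
mu = np.zeros(2)
L = np.array([[2.0, 0.0],
              [0.5, 1.0]])
loss = gaussian_nll(y, mu, L)
```

In a training setting, a network would typically output `mu` and the entries of `L` for each input, and this quantity would be averaged over a batch. That usage is a common pattern rather than a detail confirmed by the abstract.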

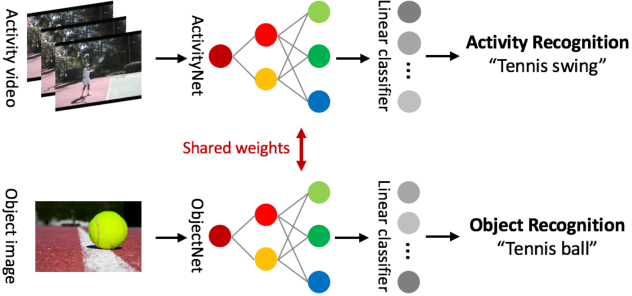

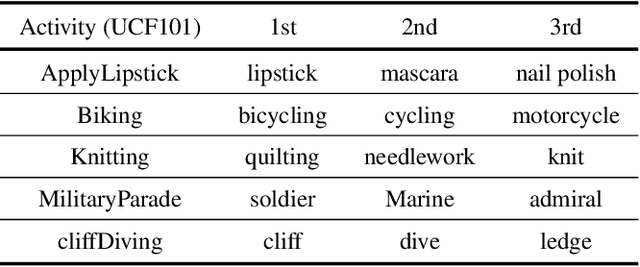

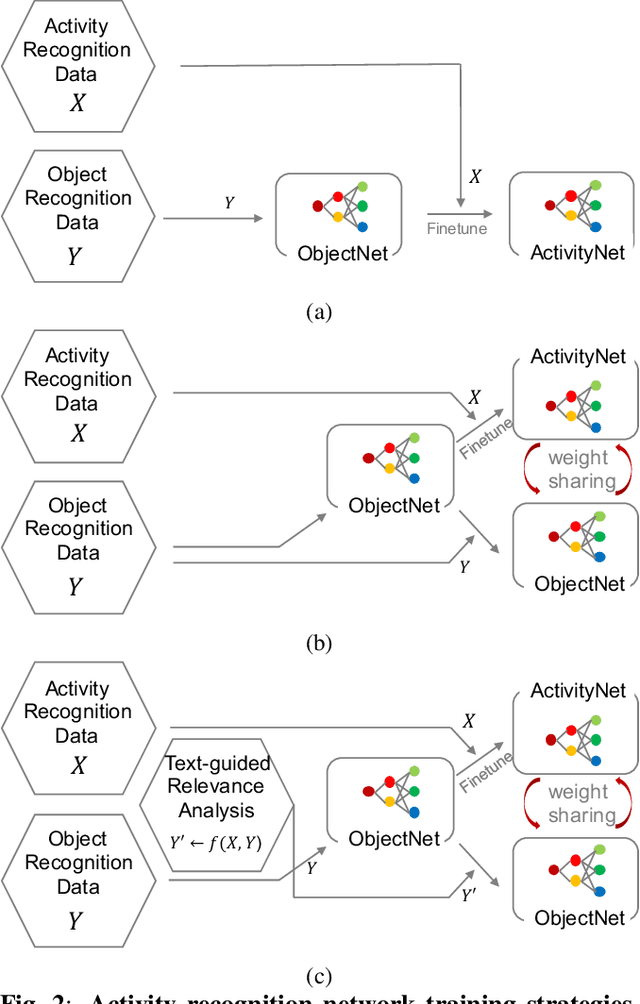

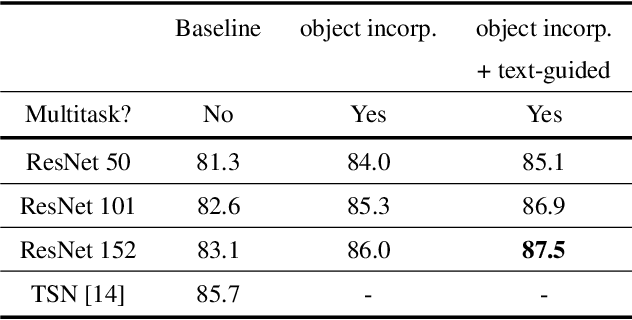

Object and Text-guided Semantics for CNN-based Activity Recognition

May 04, 2018

Abstract:Many previous methods have demonstrated the importance of considering semantically relevant objects for carrying out video-based human activity recognition, yet none of the methods have harvested the power of large text corpora to relate the objects and the activities to be transferred into learning a unified deep convolutional neural network. We present a novel activity recognition CNN which co-learns the object recognition task in an end-to-end multitask learning scheme to improve upon the baseline activity recognition performance. We further improve upon the multitask learning approach by exploiting a text-guided semantic space to select the most relevant objects with respect to the target activities. To the best of our knowledge, we are the first to investigate this approach.
