Wafa Johal

LIG

HoRD: Robust Humanoid Control via History-Conditioned Reinforcement Learning and Online Distillation

Feb 05, 2026

Abstract:Humanoid robots can suffer significant performance drops under small changes in dynamics, task specifications, or environment setup. We propose HoRD, a two-stage learning framework for robust humanoid control under domain shift. First, we train a high-performance teacher policy via history-conditioned reinforcement learning, where the policy infers latent dynamics context from recent state--action trajectories to adapt online to diverse randomized dynamics. Second, we perform online distillation to transfer the teacher's robust control capabilities into a transformer-based student policy that operates on sparse root-relative 3D joint keypoint trajectories. By combining history-conditioned adaptation with online distillation, HoRD enables a single policy to adapt zero-shot to unseen domains without per-domain retraining. Extensive experiments show HoRD outperforms strong baselines in robustness and transfer, especially under unseen domains and external perturbations. Code and project page are available at https://tonywang-0517.github.io/hord/.

Going Down the Abstraction Stream with Augmented Reality and Tangible Robots: the Case of Vector Instruction

Apr 20, 2025

Abstract:Despite being used in many engineering and scientific areas such as physics and mathematics and often taught in high school, graphical vector addition turns out to be a topic prone to misconceptions, even in university-level physics classes. To improve the learning experience and the resulting understanding of vectors, we propose to investigate how concreteness fading implemented with the use of augmented reality and tangible robots could help learners to build a strong representation of vector addition. We design a gamified learning environment consisting of three concreteness fading stages and conduct an experiment with 30 participants. Our results show a positive learning gain. We extensively analyze the behavior of the participants to understand the usage of the technological tools -- augmented reality and tangible robots -- during the learning scenario. Finally, we discuss how the combination of these tools shows real advantages in implementing the concreteness fading paradigm. Our work provides empirical insights into how users utilize concrete visualizations conveyed by a haptic-enabled robot and augmented reality in a learning scenario.

Gazing at Failure: Investigating Human Gaze in Response to Robot Failure in Collaborative Tasks

Feb 24, 2025

Abstract:Robots are prone to making errors, which can negatively impact their credibility as teammates during collaborative tasks with human users. Detecting and recovering from these failures is crucial for maintaining an effective level of trust from users. However, robots may fail without being aware of it. One way to detect such failures could be by analysing humans' non-verbal behaviours and reactions to failures. This study investigates how human gaze dynamics can signal a robot's failure and examines how different types of failures affect people's perception of the robot. We conducted a user study with 27 participants collaborating with a robotic mobile manipulator to solve tangram puzzles. The robot was programmed to experience two types of failures -- executional and decisional -- occurring either at the beginning or end of the task, with or without acknowledgement of the failure. Our findings reveal that the type and timing of the robot's failure significantly affect participants' gaze behaviour and perception of the robot. Specifically, executional failures led to more gaze shifts and increased focus on the robot, while decisional failures resulted in lower entropy in gaze transitions among areas of interest, particularly when the failure occurred at the end of the task. These results highlight that gaze can serve as a reliable indicator of robot failures and their types, and could also be used to predict the appropriate recovery actions.
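The abstract above refers to entropy in gaze transitions among areas of interest (AOIs) without giving a formula. As a rough illustration only, the sketch below computes the Shannon entropy of the empirical distribution of AOI-to-AOI transitions. This is one common simplification and is an assumption here, not necessarily the measure used in the study; the function name `transition_entropy` and the AOI labels are hypothetical.

```python
import math
from collections import Counter


def transition_entropy(aoi_sequence):
    """Shannon entropy (bits) of observed AOI-to-AOI gaze transitions.

    Assumption: a transition is any consecutive pair of fixations that
    land on different AOIs; repeated fixations on one AOI are ignored.
    """
    transitions = [
        (src, dst)
        for src, dst in zip(aoi_sequence, aoi_sequence[1:])
        if src != dst
    ]
    if not transitions:
        return 0.0
    counts = Counter(transitions)
    total = len(transitions)
    # Sum -p * log2(p) over each distinct transition type.
    return -sum((c / total) * math.log2(c / total) for c in counts.values())


# Hypothetical fixation sequence over three AOIs.
fixations = ["puzzle", "robot", "puzzle", "robot", "workspace", "robot"]
print(round(transition_entropy(fixations), 3))
```

Under this definition, lower values mean gaze moves along fewer, more repetitive paths between AOIs, while higher values mean transitions are spread more evenly across AOI pairs.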

Can you pass that tool?: Implications of Indirect Speech in Physical Human-Robot Collaboration

Feb 17, 2025

Abstract:Indirect speech acts (ISAs) are a natural pragmatic feature of human communication, allowing requests to be conveyed implicitly while maintaining subtlety and flexibility. Although advancements in speech recognition have enabled natural language interactions with robots through direct, explicit commands--providing clarity in communication--the rise of large language models presents the potential for robots to interpret ISAs. However, empirical evidence on the effects of ISAs on human-robot collaboration (HRC) remains limited. To address this, we conducted a Wizard-of-Oz study (N=36), engaging a participant and a robot in collaborative physical tasks. Our findings indicate that robots capable of understanding ISAs significantly improve humans' perceived robot anthropomorphism, team performance, and trust. However, the effectiveness of ISAs is task- and context-dependent, thus requiring careful use. These results highlight the importance of appropriately integrating direct and indirect requests in HRC to enhance collaborative experiences and task performance.

ROSAnnotator: A Web Application for ROSBag Data Analysis in Human-Robot Interaction

Jan 13, 2025

Abstract:Human-robot interaction (HRI) is an interdisciplinary field that utilises both quantitative and qualitative methods. While ROSBags, a file format within the Robot Operating System (ROS), offer an efficient means of collecting temporally synched multimodal data in empirical studies with real robots, there is a lack of tools specifically designed to integrate qualitative coding and analysis functions with ROSBags. To address this gap, we developed ROSAnnotator, a web-based application that incorporates a multimodal Large Language Model (LLM) to support both manual and automated annotation of ROSBag data. ROSAnnotator currently facilitates video, audio, and transcription annotations and provides an open interface for custom ROS messages and tools. By using ROSAnnotator, researchers can streamline the qualitative analysis process, create a more cohesive analysis pipeline, and quickly access statistical summaries of annotations, thereby enhancing the overall efficiency of HRI data analysis. https://github.com/CHRI-Lab/ROSAnnotator

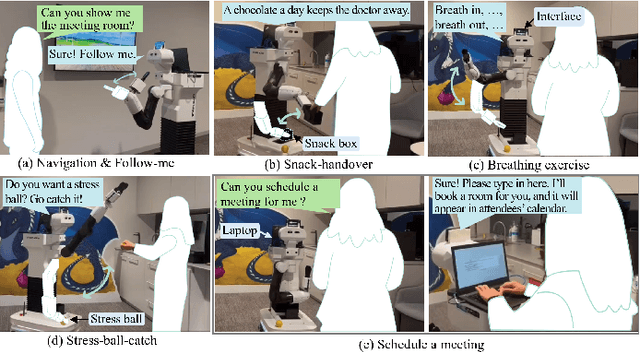

OfficeMate: Pilot Evaluation of an Office Assistant Robot

Jan 09, 2025

Abstract:Office Assistant Robots (OARs) offer a promising solution to proactively provide in-situ support to enhance employee well-being and productivity in office spaces. We introduce OfficeMate, a social OAR designed to assist with practical tasks, foster social interaction, and promote health and well-being. Through a pilot evaluation with seven participants in an office environment, we found that users see potential in OARs for reducing stress and promoting healthy habits and value the robot's ability to provide companionship and physical activity reminders in the office space. However, concerns regarding privacy, communication, and the robot's interaction timing were also raised. The feedback highlights the need to carefully consider the robot's appearance and behaviour to ensure it enhances user experience and aligns with office social norms. We believe these insights will better inform the development of adaptive, intelligent OAR systems for future office space integration.

Assisting MoCap-Based Teleoperation of Robot Arm using Augmented Reality Visualisations

Jan 09, 2025

Abstract:Teleoperating a robot arm involves the human operator positioning the robot's end-effector or programming each joint. Whereas humans can control their own arms easily by integrating visual and proprioceptive feedback, it is challenging to control an external robot arm in the same way, due to its inconsistent orientation and appearance. We explore teleoperating a robot arm through motion-capture (MoCap) of the human operator's arm with the assistance of augmented reality (AR) visualisations. We investigate how AR helps teleoperation by visualising a virtual reference of the human arm alongside the robot arm to help users understand the movement mapping. We found that the AR overlay of a humanoid arm on the robot in the same orientation helped users learn the control. We discuss findings and future work on MoCap-based robot teleoperation.

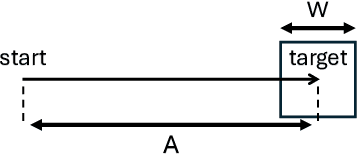

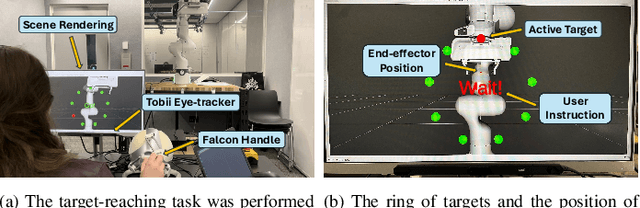

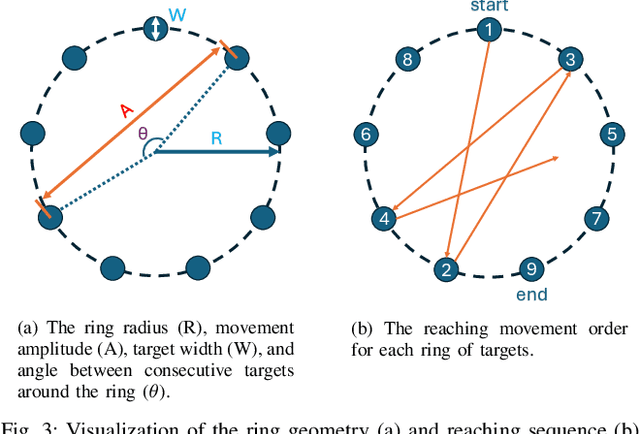

Using Fitts' Law to Benchmark Assisted Human-Robot Performance

Dec 06, 2024

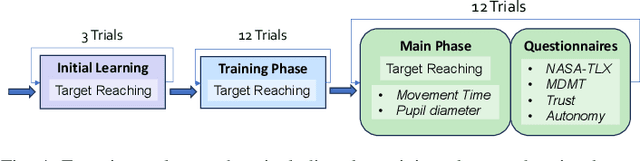

Abstract:Shared control systems aim to combine human and robot abilities to improve task performance. However, achieving optimal performance requires that the robot's level of assistance adjusts the operator's cognitive workload in response to the task difficulty. Understanding and dynamically adjusting this balance is crucial to maximizing efficiency and user satisfaction. In this paper, we propose a novel benchmarking method for shared control systems based on Fitts' Law to formally parameterize the difficulty level of a target-reaching task. With this we systematically quantify and model the effect of task difficulty (i.e. size and distance of target) and robot autonomy on task performance and operators' cognitive load and trust levels. Our empirical results (N=24) not only show that both task difficulty and robot autonomy influence task performance, but also that the performance can be modelled using these parameters, which may allow for the generalization of this relationship across more diverse setups. We also found that the users' perceived cognitive load and trust were influenced by these factors. Given the challenges in directly measuring cognitive load in real-time, our adapted Fitts' model presents a potential alternative approach to estimate cognitive load through determining the difficulty level of the task, with the assumption that greater task difficulty results in higher cognitive load levels. We hope that these insights and our proposed framework inspire future works to further investigate the generalizability of the method, ultimately enabling the benchmarking and systematic assessment of shared control quality and user impact, which will aid in the development of more effective and adaptable systems.
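The abstract above parameterizes task difficulty by target size and distance via Fitts' Law but does not state the formula. As a minimal sketch, the snippet below uses the widely used Shannon formulation, ID = log2(D / W + 1), with the linear movement-time model MT = a + b * ID. The choice of formulation, the function names, and the coefficient values are assumptions for illustration; they are not taken from the paper, whose adapted model also accounts for robot autonomy.

```python
import math


def index_of_difficulty(distance, width):
    """Fitts' index of difficulty in bits (Shannon formulation).

    distance: distance to the target centre; width: target size along
    the movement axis. Both must use the same unit.
    """
    if distance < 0 or width <= 0:
        raise ValueError("distance must be >= 0 and width must be > 0")
    return math.log2(distance / width + 1.0)


def predicted_movement_time(distance, width, a, b):
    """Linear Fitts model MT = a + b * ID.

    a (seconds) and b (seconds per bit) are empirical coefficients that
    would be fitted to data; the values used below are hypothetical.
    """
    return a + b * index_of_difficulty(distance, width)


# A target 300 mm away and 100 mm wide gives log2(4) = 2 bits.
print(index_of_difficulty(300, 100))
print(predicted_movement_time(300, 100, a=0.2, b=0.1))
```

In a benchmarking setting, `a` and `b` would typically be fitted separately per condition (for example, per autonomy level) so that the fitted slopes can be compared across conditions.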

CelluloTactix: Towards Empowering Collaborative Online Learning through Tangible Haptic Interaction with Cellulo Robots

Apr 18, 2024

Abstract:Online learning has soared in popularity in the educational landscape of COVID-19 and carries the benefits of increased flexibility and access to far-away training resources. However, it also restricts communication between peers and teachers, limits physical interactions and confines learning to the computer screen and keyboard. In this project, we designed a novel way to engage students in collaborative online learning by using haptic-enabled tangible robots, Cellulo. We built a library which connects two robots remotely for a learning activity based around the structure of a biological cell. To discover how separate modes of haptic feedback might differentially affect collaboration, two modes of haptic force-feedback were implemented (haptic co-location and haptic consensus). With a case study, we found that the haptic co-location mode seemed to stimulate collectivist behaviour to a greater extent than the haptic consensus mode, which was associated with individualism and less interaction. While the haptic co-location mode seemed to encourage information pooling, participants using the haptic consensus mode tended to focus more on technical co-ordination. This work introduces a novel system that can provide interesting insights on how to integrate haptic feedback into collaborative remote learning activities in future.

Paper index: Designing an introductory HRI course (workshop at HRI 2024)

Mar 04, 2024

Abstract:Human-robot interaction is now an established discipline. Dozens of HRI courses exist at universities worldwide, and some institutions even offer degrees in HRI. However, although many students are being taught HRI, there is no agreed-upon curriculum for an introductory HRI course. In this workshop, we aimed to reach community consensus on what should be covered in such a course. Through interactive activities like panels, breakout discussions, and syllabus design, workshop participants explored the many topics and pedagogical approaches for teaching HRI. This collection of articles submitted to the workshop provides examples of HRI courses being offered worldwide.
