Jonathan Eden

Prospect Theory in Physical Human-Robot Interaction: A Pilot Study of Probability Perception

Dec 09, 2025

Abstract:Understanding how humans respond to uncertainty is critical for designing safe and effective physical human-robot interaction (pHRI), as physically working with robots introduces multiple sources of uncertainty, including trust, comfort, and perceived safety. Conventional pHRI control frameworks typically build on optimal control theory, which assumes that human actions minimize a cost function; however, human behavior under uncertainty often departs from such optimal patterns. To address this gap, additional understanding of human behavior under uncertainty is needed. This pilot study implemented a physically coupled target-reaching task in which the robot delivered assistance or disturbances with systematically varied probabilities (10% to 90%). Analysis of participants' force inputs and decision-making strategies revealed two distinct behavioral clusters: a "trade-off" group that modulated their physical responses according to disturbance likelihood, and an "always-compensate" group characterized by strong risk aversion irrespective of probability. These findings provide empirical evidence that human decision-making in pHRI is highly individualized and that the perceived probability can differ from its true value. Accordingly, the study highlights the need for more interpretable behavioral models, such as cumulative prospect theory (CPT), to more accurately capture these behaviors and inform the design of future adaptive robot controllers.
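As a rough illustration of how perceived probability can differ from its true value, the sketch below evaluates the one-parameter probability weighting function from Tversky and Kahneman's 1992 formulation of CPT over the 10% to 90% range used in the task. The abstract does not say which weighting form, if any, the study fitted, so both the function `tk_weight` and the value `gamma = 0.61` (their reported estimate for gains) are assumptions chosen only for illustration.

```python
def tk_weight(p: float, gamma: float = 0.61) -> float:
    """Tversky-Kahneman (1992) probability weighting function.

    gamma < 1 gives the inverse-S shape: small probabilities are
    overweighted and large probabilities are underweighted.
    The default gamma is an illustrative assumption, not a value
    taken from the pilot study.
    """
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must lie in [0, 1]")
    num = p ** gamma
    return num / (num + (1.0 - p) ** gamma) ** (1.0 / gamma)


# Compare true and weighted probabilities over the range used in the task.
for p in (0.1, 0.3, 0.5, 0.7, 0.9):
    print(f"true p = {p:.1f} -> decision weight = {tk_weight(p):.3f}")
```

Under this assumed form, a 10% disturbance probability receives a decision weight above 0.1 and a 90% probability a weight below 0.9, which is the kind of distortion a CPT-based model would need to capture per participant.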

Exploring Interference between Concurrent Skin Stretches

Mar 26, 2025

Abstract:Proprioception is essential for coordinating human movements and enhancing the performance of assistive robotic devices. Skin stretch feedback, which closely aligns with natural proprioception mechanisms, presents a promising method for conveying proprioceptive information. To better understand the impact of interference on skin stretch perception, we conducted a user study with 30 participants that evaluated the effect of two simultaneous skin stretches on user perception. We observed that when participants experience simultaneous skin stretch stimuli, a masking effect occurs which deteriorates perception performance in the collocated skin stretch configurations. However, the perceived workload stays the same. These findings show that interference can affect the perception of skin stretch such that multi-channel skin stretch feedback designs should avoid locating modules in close proximity.

Using Fitts' Law to Benchmark Assisted Human-Robot Performance

Dec 06, 2024

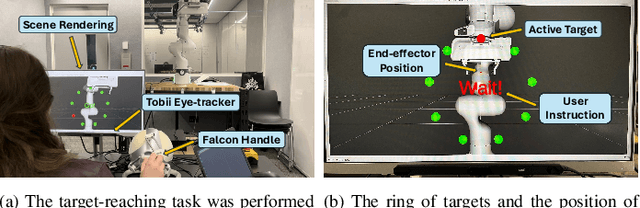

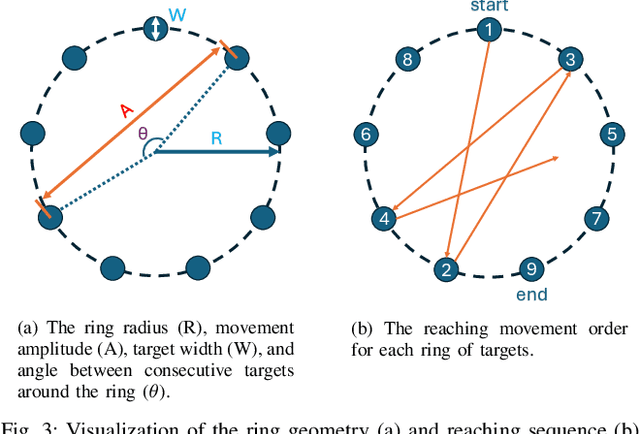

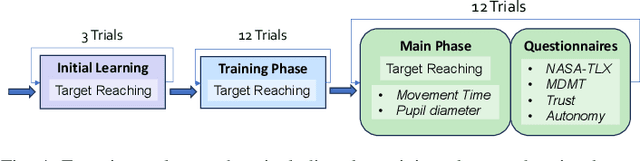

Abstract:Shared control systems aim to combine human and robot abilities to improve task performance. However, achieving optimal performance requires that the robot's level of assistance adjusts the operator's cognitive workload in response to the task difficulty. Understanding and dynamically adjusting this balance is crucial to maximizing efficiency and user satisfaction. In this paper, we propose a novel benchmarking method for shared control systems based on Fitts' Law to formally parameterize the difficulty level of a target-reaching task. With this we systematically quantify and model the effect of task difficulty (i.e. size and distance of target) and robot autonomy on task performance and operators' cognitive load and trust levels. Our empirical results (N=24) not only show that both task difficulty and robot autonomy influence task performance, but also that the performance can be modelled using these parameters, which may allow for the generalization of this relationship across more diverse setups. We also found that the users' perceived cognitive load and trust were influenced by these factors. Given the challenges in directly measuring cognitive load in real-time, our adapted Fitts' model presents a potential alternative approach to estimate cognitive load through determining the difficulty level of the task, with the assumption that greater task difficulty results in higher cognitive load levels. We hope that these insights and our proposed framework inspire future works to further investigate the generalizability of the method, ultimately enabling the benchmarking and systematic assessment of shared control quality and user impact, which will aid in the development of more effective and adaptable systems.
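For readers unfamiliar with how Fitts' Law parameterizes difficulty from target size and distance, here is a minimal sketch. It uses the common Shannon formulation of the index of difficulty, ID = log2(D/W + 1), with a linear movement-time model MT = a + b * ID. The abstract does not give the form of the adapted model, so the formulation, the function names, and the coefficients `a` and `b` are hypothetical placeholders rather than the paper's fitted values.

```python
import math


def index_of_difficulty(distance: float, width: float) -> float:
    """Shannon formulation of Fitts' index of difficulty, in bits."""
    if distance < 0 or width <= 0:
        raise ValueError("distance must be >= 0 and width must be > 0")
    return math.log2(distance / width + 1.0)


def predicted_movement_time(distance: float, width: float,
                            a: float = 0.2, b: float = 0.15) -> float:
    """Linear Fitts model MT = a + b * ID.

    a (seconds) and b (seconds per bit) are hypothetical values for
    illustration; in practice they are fitted to measured data.
    """
    return a + b * index_of_difficulty(distance, width)


# A target 30 units away and 10 units wide has ID = log2(4) = 2 bits.
print(index_of_difficulty(30, 10))
print(predicted_movement_time(30, 10))
```

In a benchmarking setting of the kind described, one would presumably fit `a` and `b` separately for each autonomy level and compare the resulting curves, but that procedure is an assumption here and not a description of the paper's analysis.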

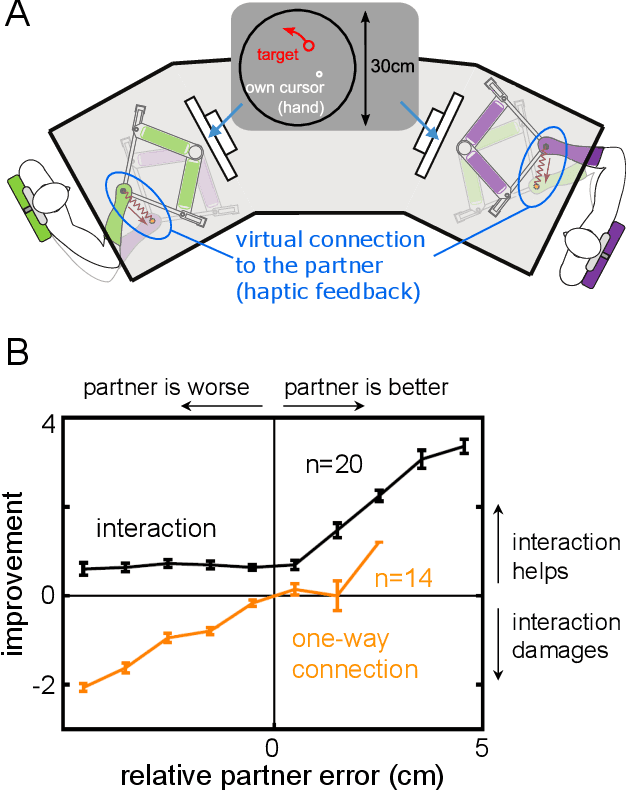

Interacting humans and robots can improve sensory prediction by adapting their viscoelasticity

Oct 17, 2024

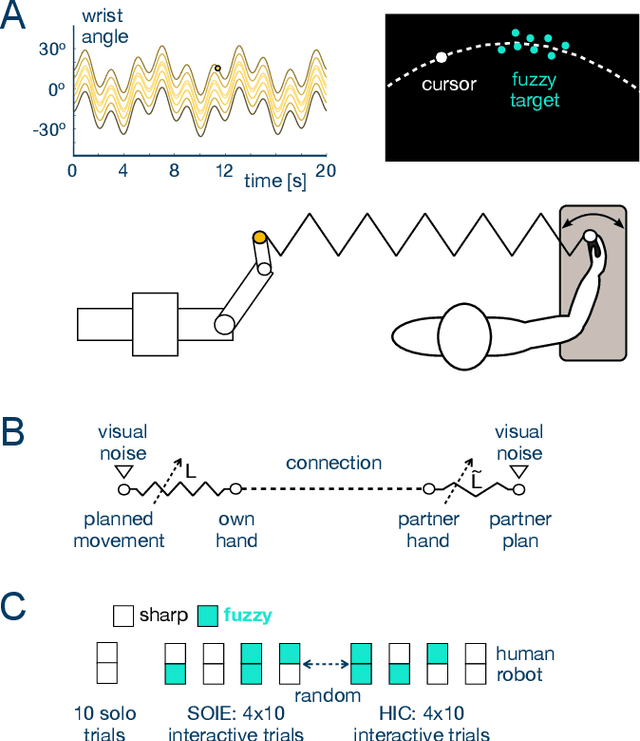

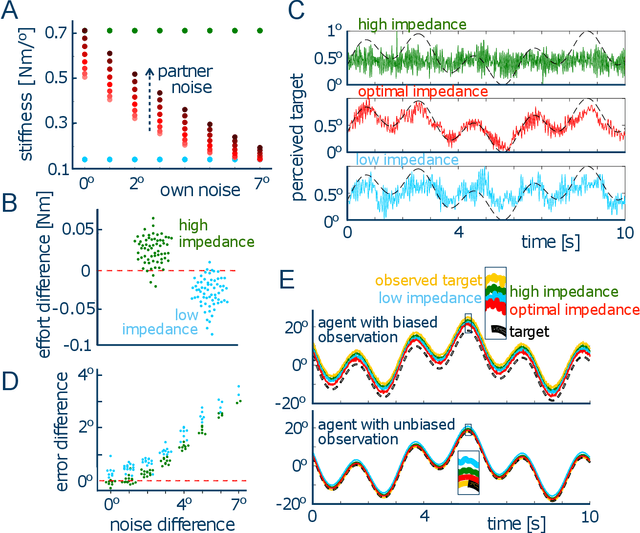

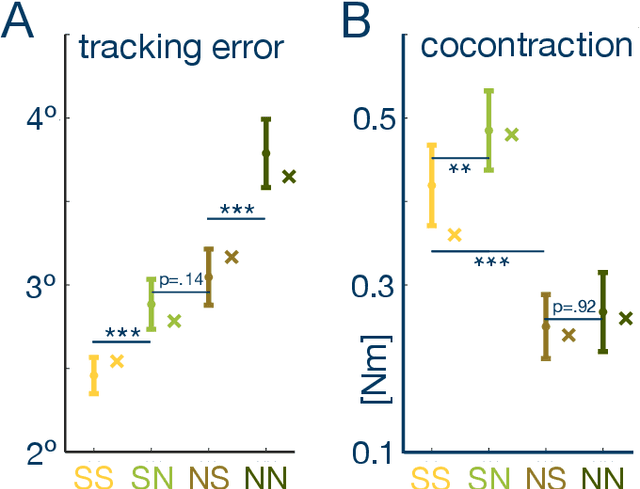

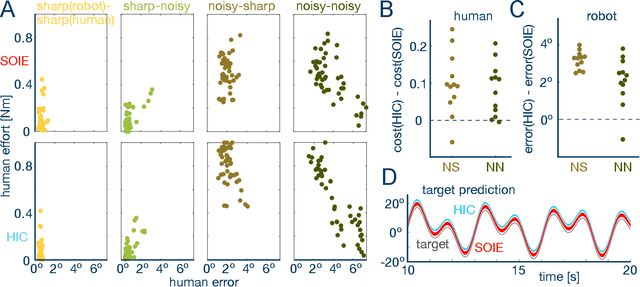

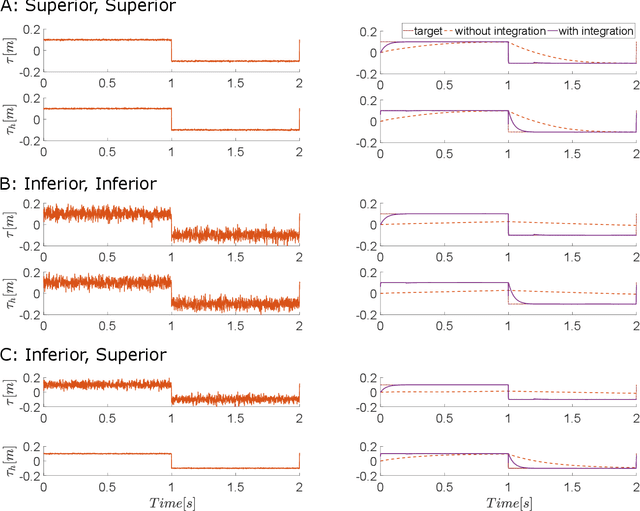

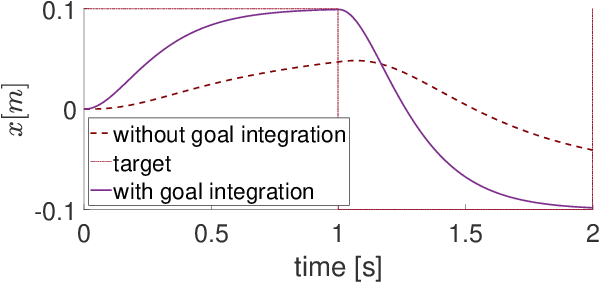

Abstract:To manipulate objects or dance together, humans and robots exchange energy and haptic information. While the exchange of energy in human-robot interaction has been extensively investigated, the underlying exchange of haptic information is not well understood. Here, we develop a computational model of the mechanical and sensory interactions between agents that can tune their viscoelasticity while considering their sensory and motor noise. The resulting stochastic-optimal-information-and-effort (SOIE) controller predicts how the exchange of haptic information and the performance can be improved by adjusting viscoelasticity. This controller was first implemented on a robot-robot experiment with a tracking task which showed its superior performance when compared to either stiff or compliant control. Importantly, the optimal controller also predicts how connected humans alter their muscle activation to improve haptic communication, with differentiated viscoelasticity adjustment to their own sensing noise and haptic perturbations. A human-robot experiment then illustrated the applicability of this optimal control strategy for robots, yielding improved tracking performance and effective haptic communication as the robot adjusted its viscoelasticity according to its own and the user's noise characteristics. The proposed SOIE controller may thus be used to improve haptic communication and collaboration of humans and robots.

Exploring the Effects of Shared Autonomy on Cognitive Load and Trust in Human-Robot Interaction

Feb 05, 2024

Abstract:Teleoperation is increasingly recognized as a viable solution for deploying robots in hazardous environments. Controlling a robot to perform a complex or demanding task may overload operators resulting in poor performance. To design a robot controller to assist the human in executing such challenging tasks, a comprehensive understanding of the interplay between the robot's autonomous behavior and the operator's internal state is essential. In this paper, we investigate the relationships between robot autonomy and both the human user's cognitive load and trust levels, and the potential existence of three-way interactions in the robot-assisted execution of the task. Our user study (N=24) results indicate that while autonomy level influences the teleoperator's perceived cognitive load and trust, there is no clear interaction between these factors. Instead, these elements appear to operate independently, thus highlighting the need to consider both cognitive load and trust as distinct but interrelated factors in varying the robot autonomy level in shared-control settings. This insight is crucial for the development of more effective and adaptable assistive robotic systems.

Mechanical features based object recognition

Oct 14, 2022

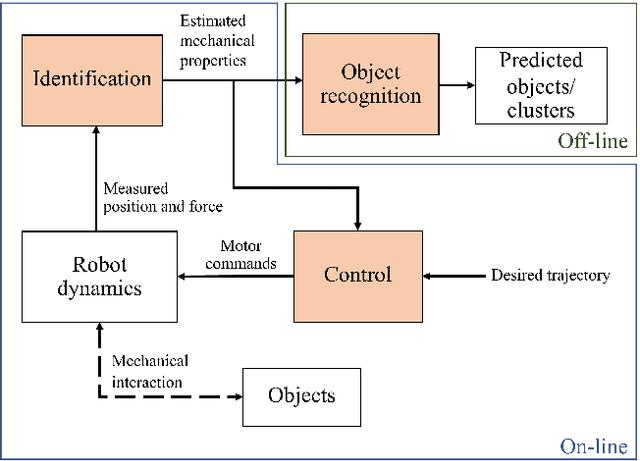

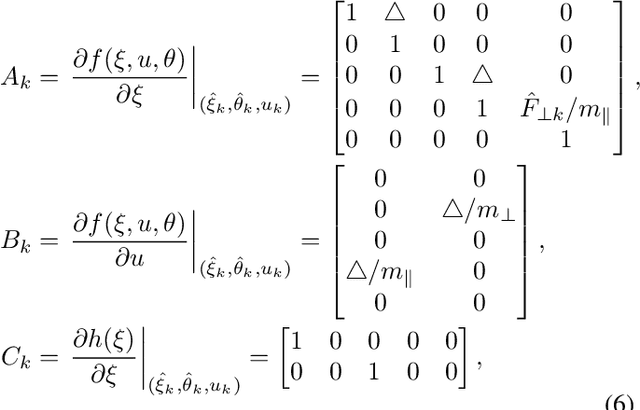

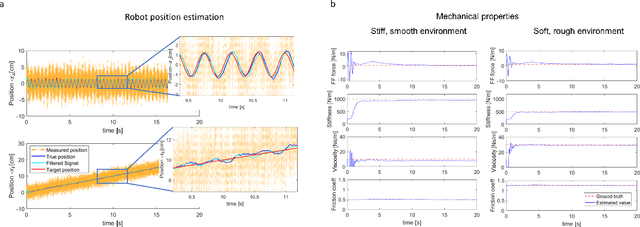

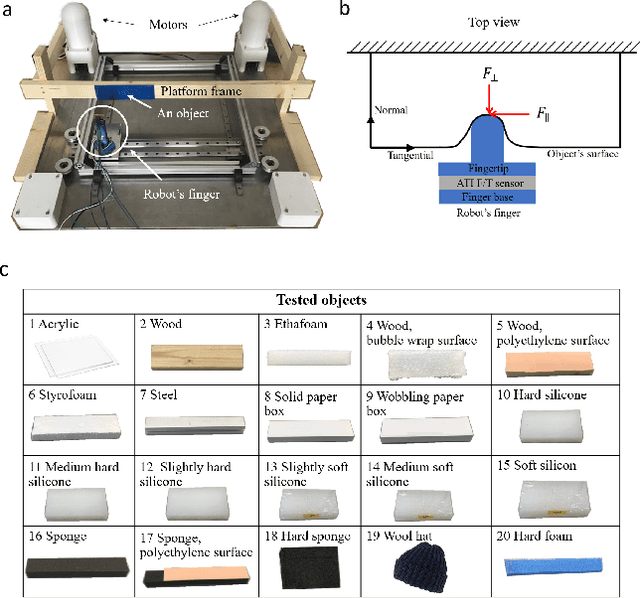

Abstract:Current robotic haptic object recognition relies on statistical measures derived from movement-dependent interaction signals such as force, vibration or position. Mechanical properties that can be identified from these signals are intrinsic object properties that may yield a more robust object representation. Therefore, this paper proposes an object recognition framework using multiple representative mechanical properties: the coefficient of restitution, stiffness, viscosity and friction coefficient. These mechanical properties are identified in real-time using a dual Kalman filter, then used to classify objects. The proposed framework was tested with a robot identifying 20 objects through haptic exploration. The results demonstrate the technique's effectiveness and efficiency, and that all four mechanical properties are required for best recognition, yielding a rate of 98.18 ± 0.424%. Clustering with Gaussian mixture models further shows that using these mechanical properties results in superior recognition as compared to using statistical parameters of the interaction signals.

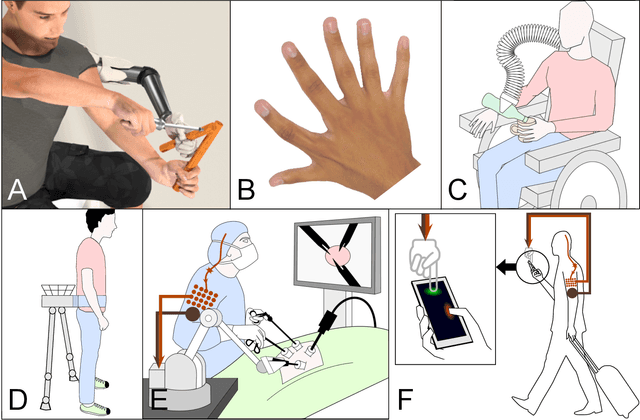

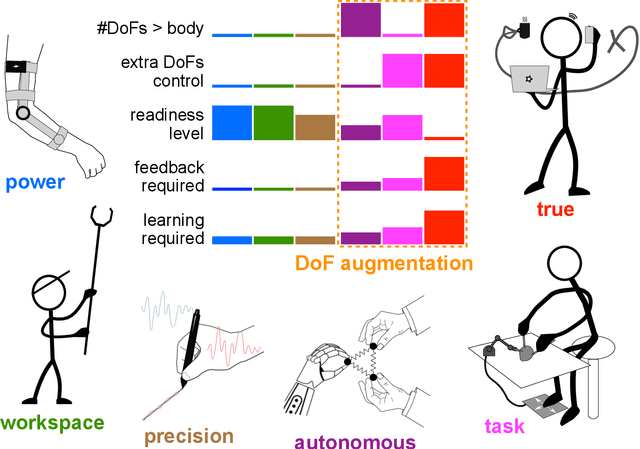

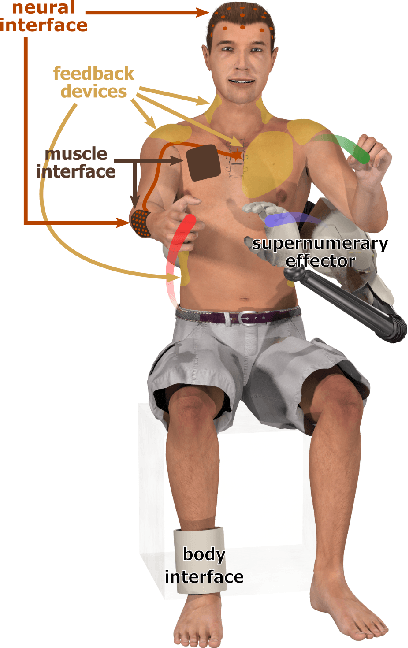

Human movement augmentation and how to make it a reality

Jun 15, 2021

Abstract:Augmenting the body with artificial limbs controlled concurrently with the natural limbs has long appeared in science fiction, but recent technological and neuroscientific advances have begun to make this vision possible. By allowing individuals to achieve otherwise impossible actions, this movement augmentation could revolutionize medical and industrial applications and profoundly change the way humans interact with their environment. Here, we construct a movement augmentation taxonomy through what is augmented and how it is achieved. With this framework, we analyze augmentation that extends the number of degrees-of-freedom, discuss critical features of effective augmentation such as physiological control signals, sensory feedback and learning, and propose a vision for the field.

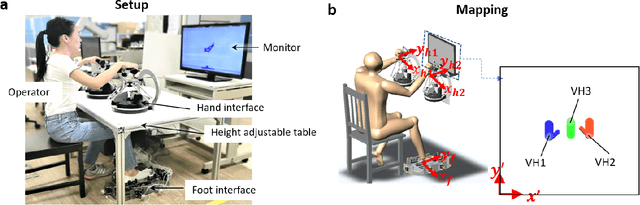

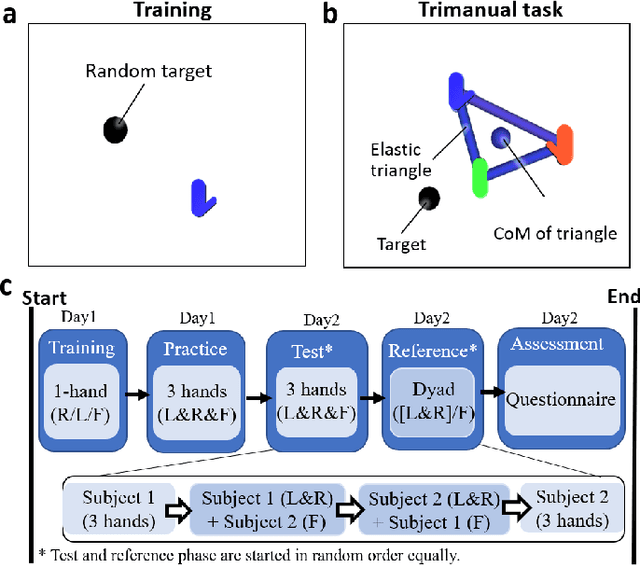

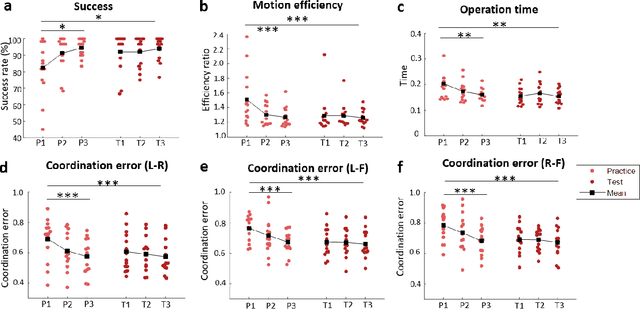

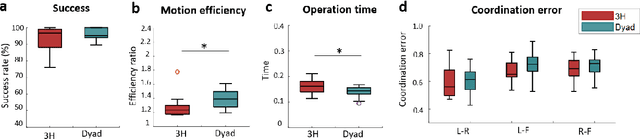

Trimanipulation: Evaluation of human performance in a 3-handed coordination task

Apr 13, 2021

Abstract:Many teleoperation tasks require three or more tools working together, which requires the cooperation of multiple operators. The effectiveness of such schemes may be limited by communication. Trimanipulation by a single operator using an artificial third arm controlled together with their natural arms is a promising solution to this issue. Foot-controlled interfaces have previously shown the capability to be used for the continuous control of robot arms. However, the use of such interfaces for controlling a supernumerary robotic limb (SRL) in coordination with the natural limbs is not well understood. In this paper, a teleoperation task imitating physically coupled hands in a virtual reality scene was conducted with 14 subjects to evaluate human performance during trimanipulation. The participants were required to move three limbs together in a coordinated way, mimicking three arms holding a shared physical object. It was found that, after a short practice session, three-hand trimanipulation using a single subject's hands and foot was still slower than dyad operation; however, it showed a similar success rate and higher motion efficiency than two-person cooperation.

Improving Tracking through Human-Robot Sensory Augmentation

Feb 17, 2020

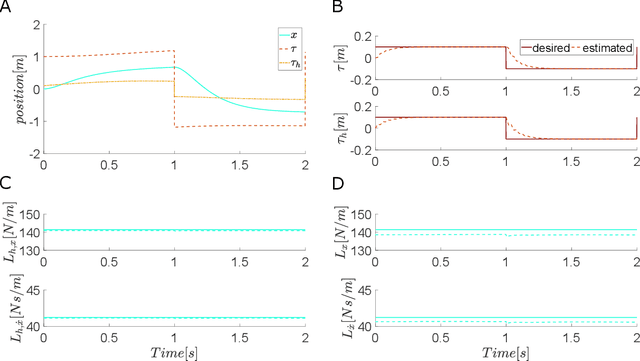

Abstract:This paper introduces human-robot sensory augmentation and illustrates it on a tracking task, where performance can be improved by the exchange of sensory information between the robot and its human user. It was recently found that during interaction between humans, the partners use each other's sensory information to improve their own sensing, thus also their performance and learning. In this paper, we develop a computational model of this unique human ability, and use it to build a novel control framework for human-robot interaction. The human partner's control is formulated as a feedback control with unknown control gains and desired trajectory. A Kalman filter is used to estimate first the control gains and then the desired trajectory. The estimated human partner's desired trajectory is used as augmented sensory information about the system and combined with the robot's measurement to estimate an uncertain target trajectory. Simulations and an implementation of the presented framework on a robotic interface validate the proposed observer-predictor pair for a tracking task. The results obtained using this robot demonstrate how the human user's control can be identified, and exhibit similar benefits of this sensory augmentation as was observed between interacting humans.
