Yanpei Huang

Haptic feedback of front car motion can improve driving control

Jul 29, 2024

Abstract: This study investigates the role of haptic feedback in a car-following scenario, where information about the motion of the front vehicle is provided through a virtual elastic connection with it. Using a robotic interface in a simulated driving environment, we examined the impact of varying levels of such haptic feedback on the driver's ability to follow the road while avoiding obstacles. The results of an experiment with 15 subjects indicate that haptic feedback from the front car's motion can significantly improve driving control (i.e., reduce motion jerk and deviation from the road) and reduce mental load (evaluated via questionnaire). This suggests that haptic communication, as observed between physically interacting humans, can be leveraged to improve safety and efficiency in automated driving systems, warranting further testing in real driving scenarios.

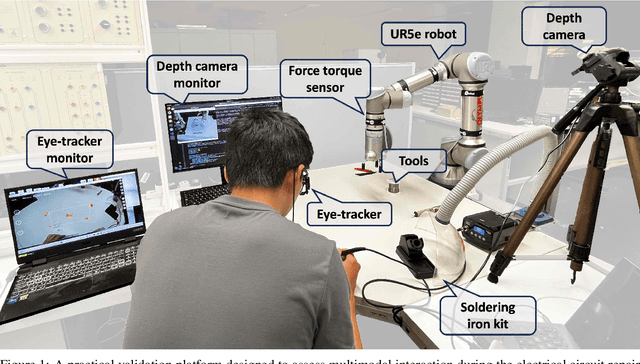

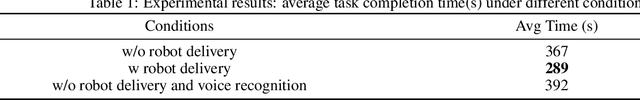

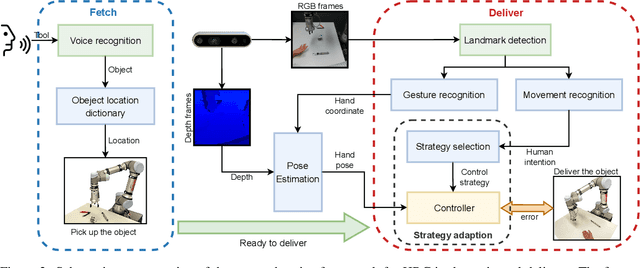

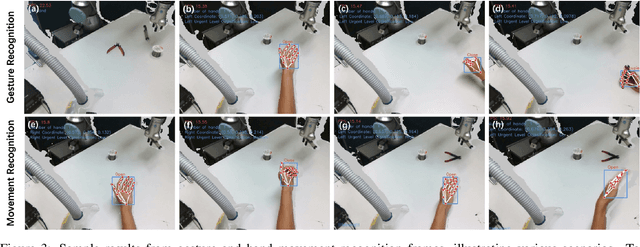

Dynamic Hand Gesture-Featured Human Motor Adaptation in Tool Delivery using Voice Recognition

Sep 20, 2023

Abstract: Human-robot collaboration has given users higher efficiency in interactive tasks. Nevertheless, most collaborative schemes rely on complicated human-machine interfaces, which might lack the requisite intuitiveness compared with natural limb control. We also expect to understand human intent with low training data requirements. In response to these challenges, this paper introduces a human-robot collaborative framework that integrates hand gesture and dynamic movement recognition, voice recognition, and a switchable control adaptation strategy. These modules provide a user-friendly approach that enables the robot to deliver tools as the user needs them, especially when the user is working with both hands. Users can therefore focus on their task execution without additional training in the use of human-machine interfaces, while the robot interprets their intuitive gestures. The proposed multimodal interaction framework is implemented on the UR5e robot platform equipped with a RealSense D435i camera, and its effectiveness is assessed through a circuit board soldering task. The experimental results demonstrate strong performance in hand gesture recognition: the static hand gesture recognition module achieves an accuracy of 94.3%, while the dynamic motion recognition module reaches 97.6% accuracy. Compared with human solo manipulation, the proposed approach facilitates higher efficiency tool delivery, without significantly distracting from human intents.

Shared Control for Bimanual Telesurgery with Optimized Robotic Partner

Jul 12, 2021

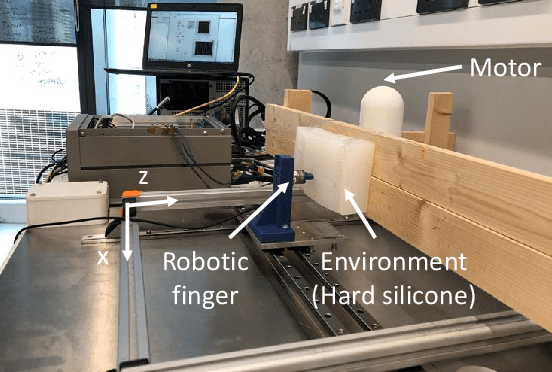

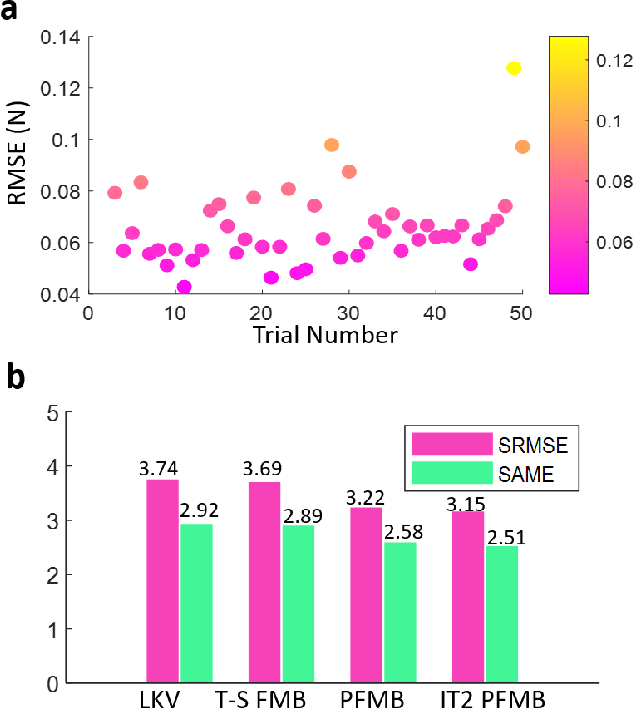

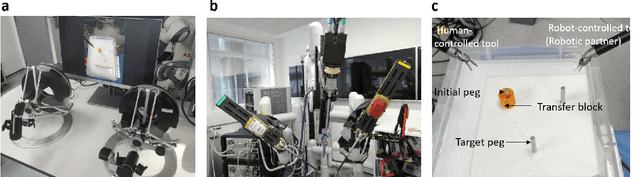

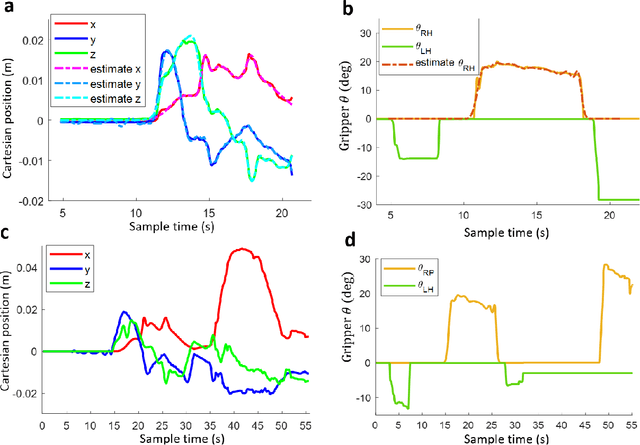

Abstract: Traditional telesurgery relies on the surgeon's full control of the robot on the patient's side, which tends to increase surgeon fatigue and may reduce the efficiency of the operation. This paper introduces a Robotic Partner (RP) to facilitate intuitive bimanual telesurgery, aiming at reducing the surgeon workload and enhancing surgeon-assisted capability. An interval type-2 polynomial fuzzy-model-based learning algorithm is employed to extract expert domain knowledge from surgeons and reflect environmental interaction information. Based on this, a bimanual shared control is developed to interact with the other robot teleoperated by the surgeon, understanding their control and providing assistance. As prior information of the environment model is not required, it reduces reliance on force sensors in control design. Experimental results on the DaVinci Surgical System show that the RP could assist peg-transfer tasks and reduce the surgeon's workload by 51% in force-sensor-free scenarios.

Trimanipulation: Evaluation of human performance in a 3-handed coordination task

Apr 13, 2021

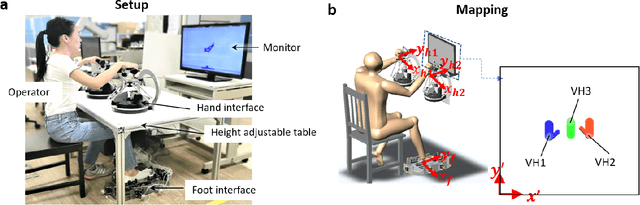

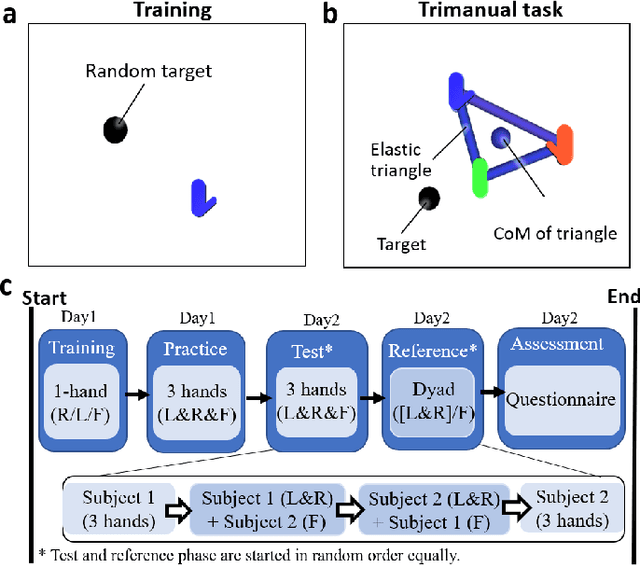

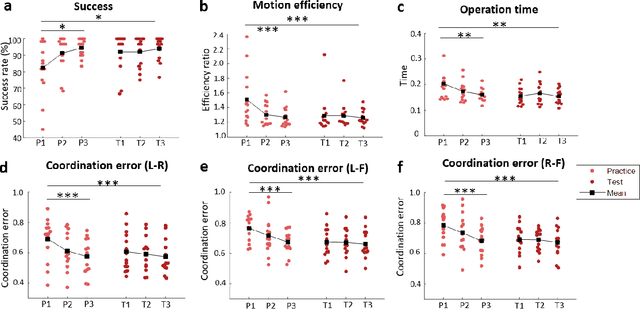

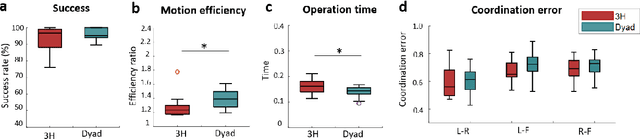

Abstract: Many teleoperation tasks require three or more tools working together, which in turn requires the cooperation of multiple operators. The effectiveness of such schemes may be limited by communication. Trimanipulation by a single operator using an artificial third arm controlled together with their natural arms is a promising solution to this issue. Foot-controlled interfaces have previously been shown to be capable of continuous control of robot arms. However, the use of such interfaces for controlling a supernumerary robotic limb (SRL) in coordination with the natural limbs is not well understood. In this paper, a teleoperation task imitating physically coupled hands in a virtual reality scene was conducted with 14 subjects to evaluate human performance during trimanipulation. The participants were required to move three limbs together in a coordinated way, mimicking three arms holding a shared physical object. It was found that, after a short practice session, three-hand trimanipulation using a single subject's hands and foot was still slower than dyad operation. However, it showed a similar success rate and higher motion efficiency than two-person cooperation.

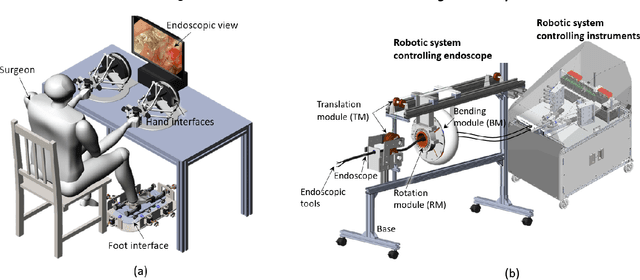

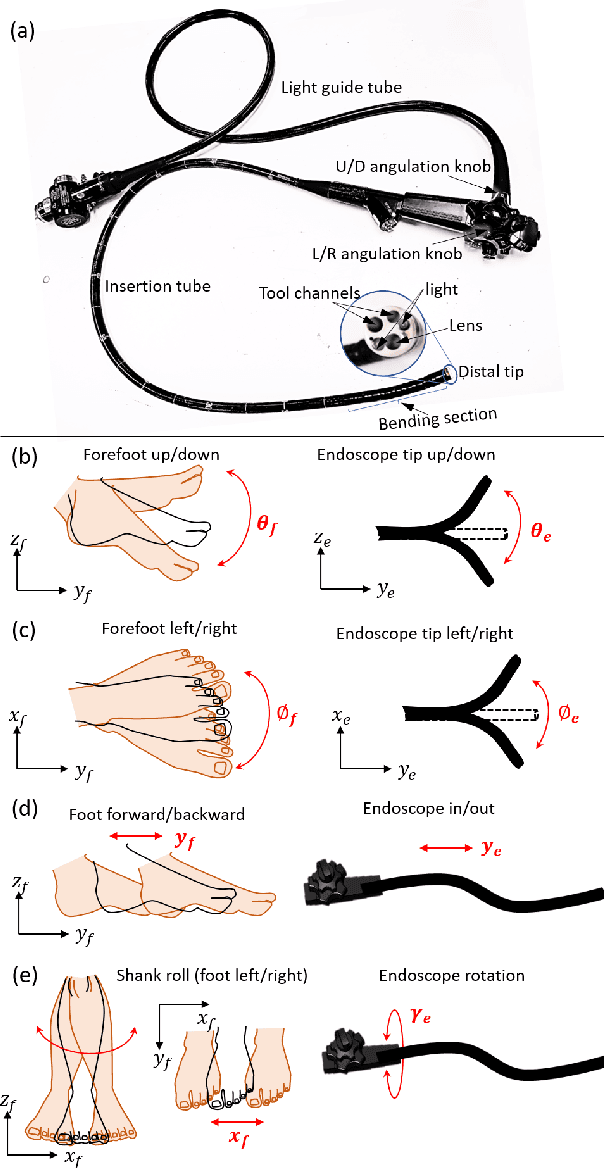

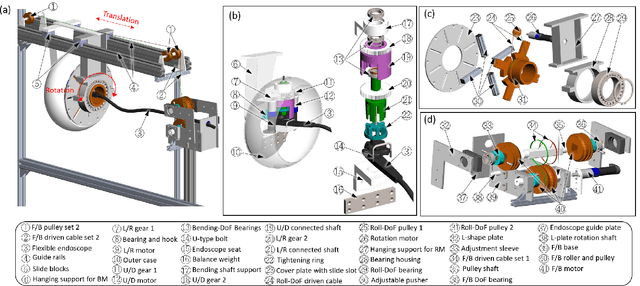

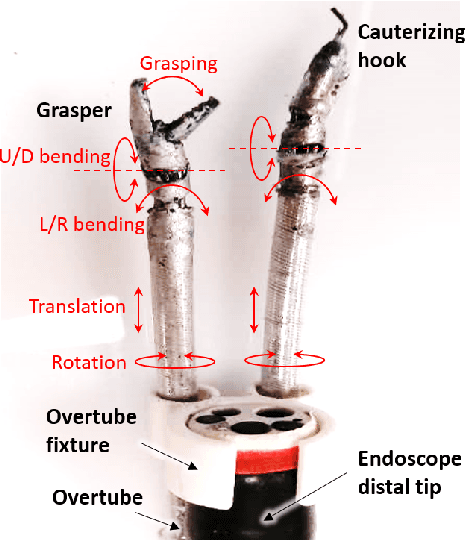

A Three-limb Teleoperated Robotic System with Foot Control for Flexible Endoscopic Surgery

Jul 12, 2020

Abstract: Flexible endoscopy requires high skills to manipulate both the endoscope and associated instruments. In most robotic flexible endoscopic systems, the endoscope and instruments are controlled separately by two operators, which may result in communication errors and inefficient operation. We present a novel teleoperation robotic endoscopic system that can be commanded by a surgeon alone. This 13 degrees-of-freedom (DoF) system integrates a foot-controlled robotic flexible endoscope and two hand-controlled robotic endoscopic instruments (a robotic grasper and a robotic cauterizing hook). A foot-controlled human-machine interface maps the natural foot gestures to the 4-DoF movements of the endoscope, and two hand-controlled interfaces map the movements of the two hands to the two instruments individually. The proposed robotic system was validated in an ex-vivo experiment carried out by six subjects, where foot control was also compared with a sequential clutch-based hand control scheme. The participants could successfully teleoperate the endoscope and the two instruments to cut the tissues at scattered target areas in a porcine stomach. Foot control yielded 43.7% faster task completion and required less mental effort as compared to the clutch-based hand control scheme. The system introduced in this paper is intuitive for three-limb manipulation even for operators without experience of handling the endoscope and robotic instruments. This three-limb teleoperated robotic system enables one surgeon to intuitively control three endoscopic tools which normally require two operators, leading to reduced manpower, fewer communication errors, and improved efficiency.

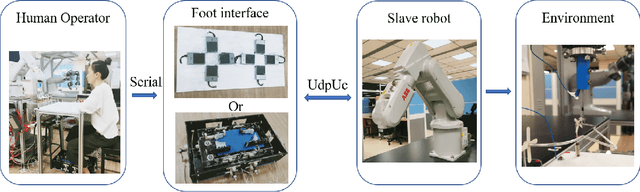

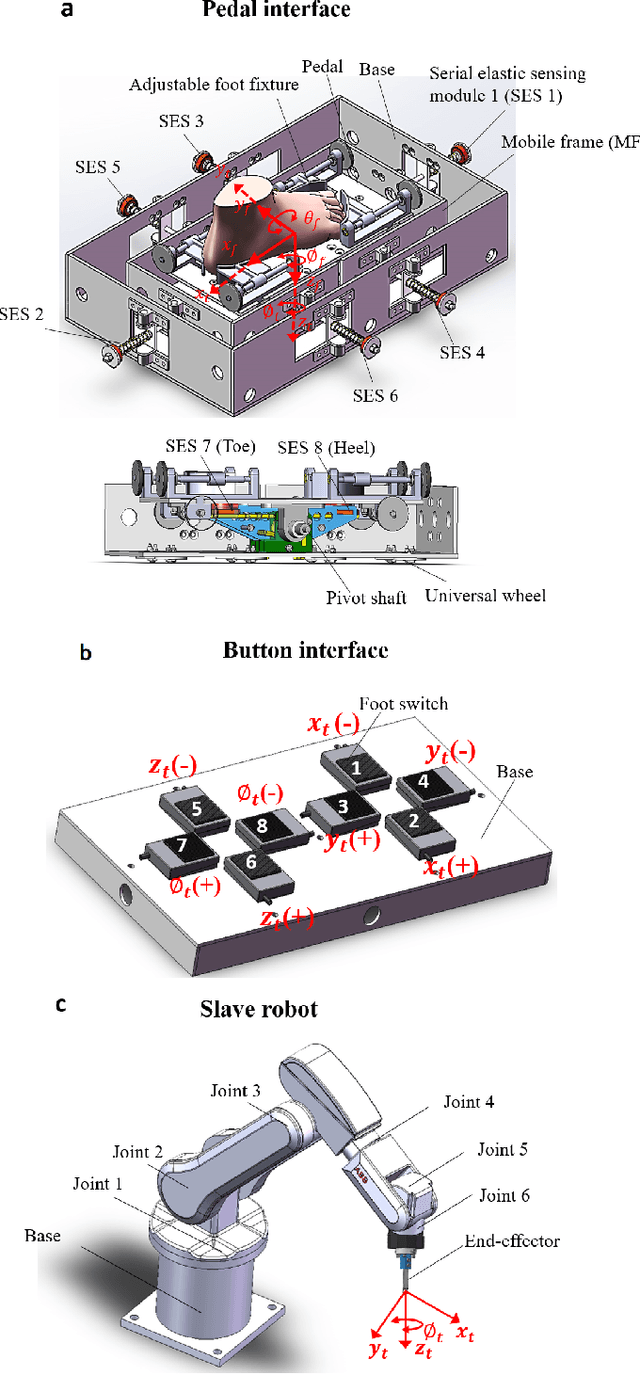

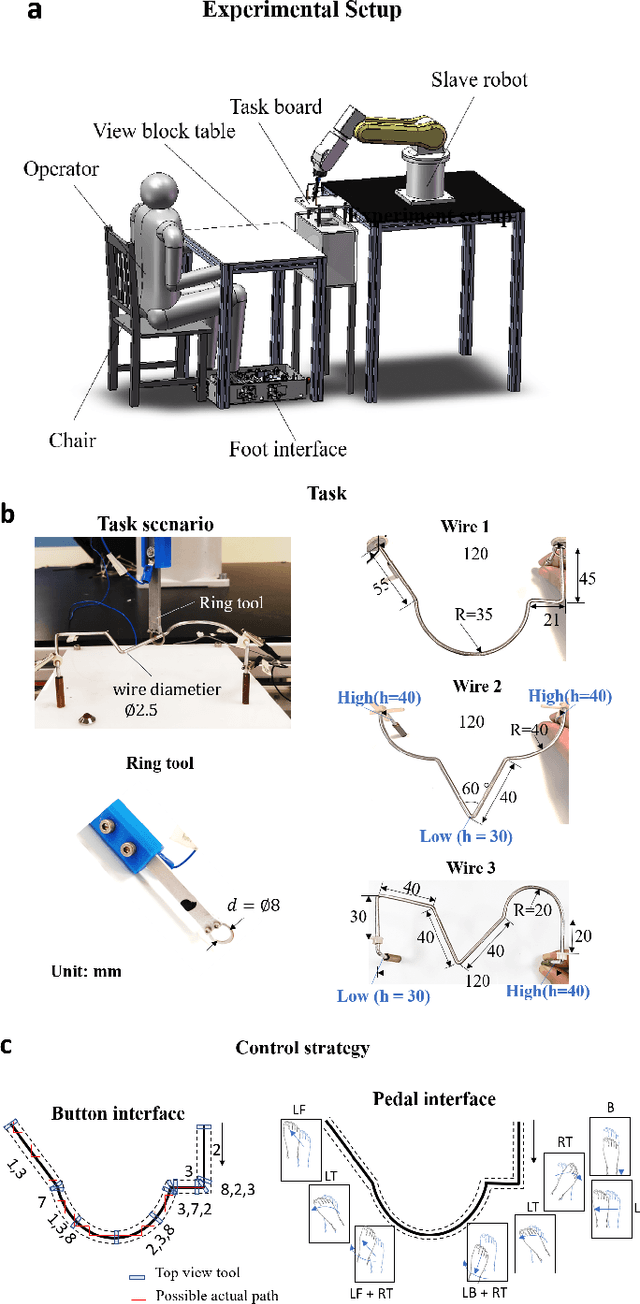

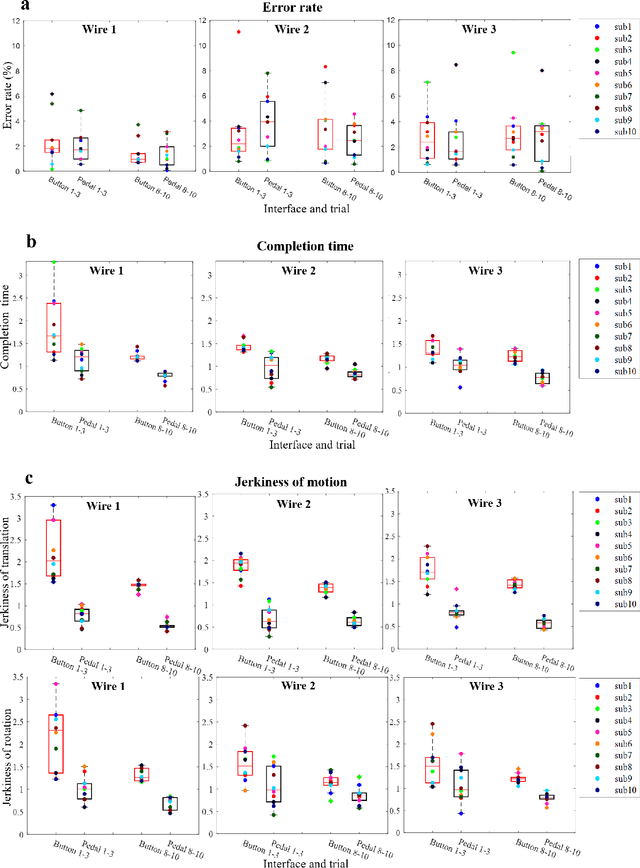

Performance evaluation of a foot-controlled human-robot interface

Mar 08, 2019

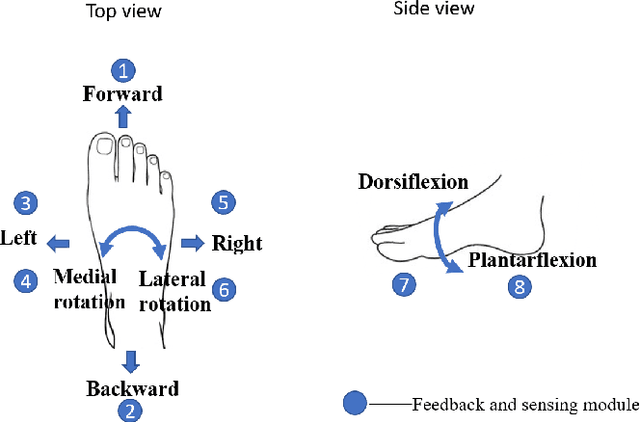

Abstract: Robotic minimally invasive interventions typically require using more than two instruments. We thus developed a foot pedal interface which allows the user to control a robotic arm (while working with the hands) with four degrees of freedom in continuous directions and speeds. This paper evaluates and compares the performance of ten naive operators in using this new pedal interface and a traditional button interface in completing tasks. These tasks are geometrically complex path-following tasks similar to those in laparoscopic training, and the traditional button interface allows axis-by-axis control with constant speeds. Precision, time, and smoothness of the subjects' control movements for these tasks are analysed. The results demonstrate that the pedal interface can be used to control a robot for complex motion tasks. The subjects kept the average error rate at a low level of around 2.6% with both interfaces, but the pedal interface resulted in about 30% faster operation speed and 60% smoother movement, which indicates improved efficiency and user experience as compared with the button interface. The results of a questionnaire show that the operators found controlling the robot with the pedal interface more intuitive, more comfortable, and less tiring than using the button interface.

A Subject-Specific Four-Degree-of-Freedom Foot Interface to Control a Robot Arm

Feb 13, 2019

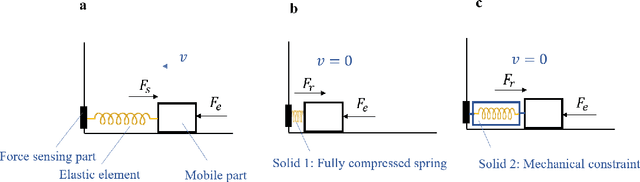

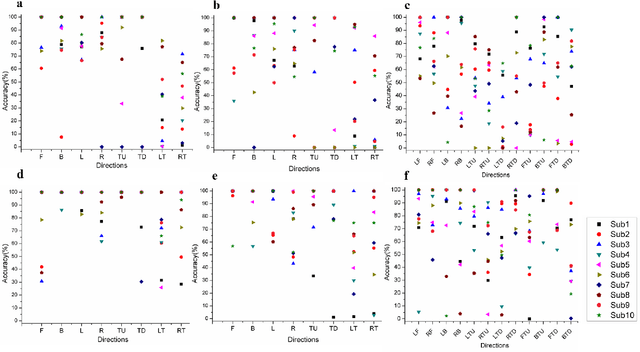

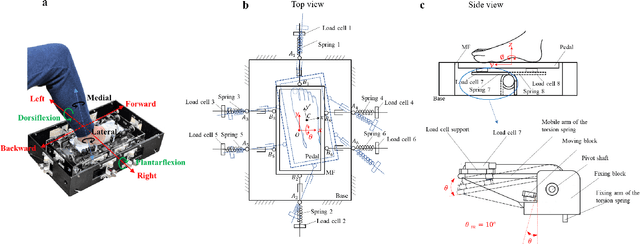

Abstract: In robotic surgery, the surgeon controls robotic instruments using dedicated interfaces. One critical limitation of current interfaces is that they are designed to be operated by only the hands. This means that the surgeon can control at most two robotic instruments at one time, while many interventions require three instruments. This paper introduces a novel four-degree-of-freedom foot-machine interface which allows the surgeon to control a third robotic instrument using the foot, giving the surgeon a "third hand". This interface is essentially a parallel-serial hybrid mechanism with springs and force sensors. Unlike existing switch-based interfaces that can only un-intuitively generate motion in discrete directions, this interface allows intuitive control of a slave robotic arm in continuous directions and speeds, naturally matching the foot movements with dynamic force and position feedback. An experiment with ten naive subjects was conducted to test the system. In view of the significant variance of motion patterns between subjects, a subject-specific mapping from foot movements to command outputs was developed using Independent Component Analysis (ICA). Results showed that the ICA method could accurately identify subjects' foot motion patterns and significantly improve the prediction accuracy of motion directions from 68% to 88% as compared with the forward kinematics-based approach. This foot-machine interface can be applied for the teleoperation of industrial/surgical robots independently or in coordination with hands in the future.
