Haolin Fei

Dynamic Hand Gesture-Featured Human Motor Adaptation in Tool Delivery using Voice Recognition

Sep 20, 2023

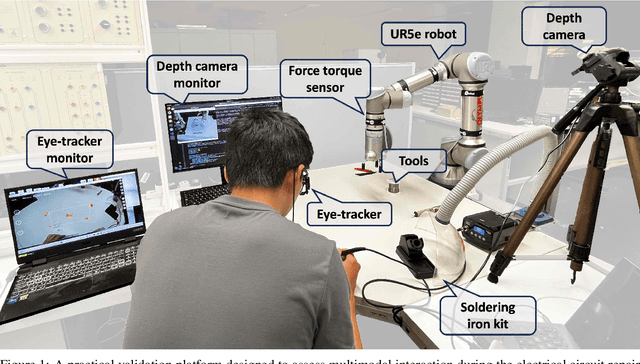

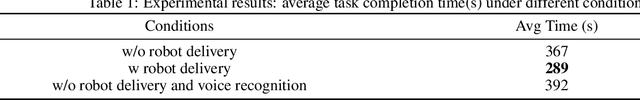

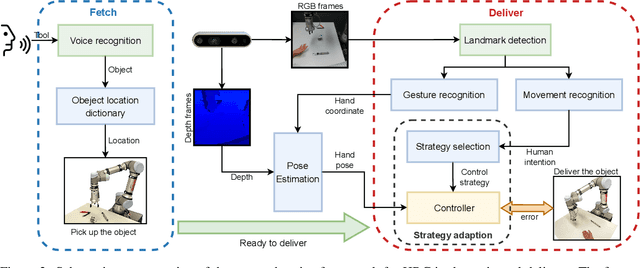

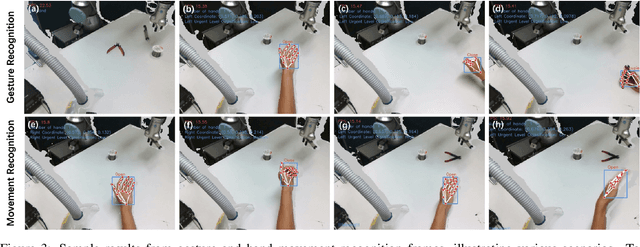

Abstract: Human-robot collaboration has improved user efficiency in interactive tasks. Nevertheless, most collaborative schemes rely on complicated human-machine interfaces, which can be less intuitive than natural limb control. It is also desirable to infer human intent from little training data. In response to these challenges, this paper introduces a human-robot collaborative framework that integrates hand gesture and dynamic movement recognition, voice recognition, and a switchable control adaptation strategy. Together, these modules provide a user-friendly way for the robot to deliver tools as the user needs them, especially when the user is working with both hands. Users can therefore focus on executing their task without additional training in the use of human-machine interfaces, while the robot interprets their intuitive gestures. The proposed multimodal interaction framework is implemented on a UR5e robot platform equipped with a RealSense D435i camera, and its effectiveness is assessed through a circuit board soldering task. In the experiments, the static hand gesture recognition module achieves an accuracy of 94.3%, and the dynamic motion recognition module reaches 97.6%. Compared with solo human manipulation, the proposed approach delivers tools more efficiently without significantly distracting users from their intended task.

A Model-driven and Data-driven Fusion Framework for Accurate Air Quality Prediction

Dec 06, 2019

Abstract: Air quality is closely related to public health. Health issues such as cardiovascular and respiratory diseases may be connected to long-term exposure to highly polluted environments. Accurate air quality forecasts are therefore extremely important to vulnerable people. To estimate variations in the concentrations of several air pollutants, previous researchers have used various approaches, such as the Community Multiscale Air Quality (CMAQ) model or neural networks. Although the CMAQ model covers historical air pollution data and meteorological variables, extra bias is introduced by additional adjustment. In this paper, we propose a combination of a model-based strategy and a data-driven method, the physical-temporal collection (PTC) model, which aims to correct the systematic error of traditional models. In the data-driven part, the first components are the temporal pattern and the weather pattern, which measure the important features that contribute to prediction performance. Less relevant input variables are removed to eliminate negative weights in network training. We then use a long short-term memory (LSTM) network to obtain preliminary results, which are further corrected by a neural network (NN) that takes the meteorological index and other pollutant concentrations as inputs. The dataset used for forecasting covers January 1, 2016 to December 31, 2016. According to the results, PTC achieves excellent performance compared with the baseline models (CMAQ prediction, GRU, DNN, etc.). This joint model-based and data-driven method for air quality prediction can be easily deployed at stations without extra adjustment, providing high-time-resolution results that help vulnerable people take precautions ahead of heavy air pollution.
