Etienne Burdet

Model selection and real-time skill assessment for suturing in robotic surgery

Jan 17, 2026

Abstract:Automated feedback systems have the potential to provide objective skill assessment for training and evaluation in robot-assisted surgery. In this study, we examine methods to achieve real-time prediction of surgical skill level based on Objective Structured Assessment of Technical Skills (OSATS) scores. Using data acquired from the da Vinci Surgical System, we carry out three main analyses, focusing on model design, real-time performance, and skill-level-based cross-validation training. For the model design, we evaluate the effectiveness of multimodal deep learning models for predicting surgical skill levels using synchronized kinematic and vision data. Our models include separate unimodal baselines and fusion architectures that integrate features from both modalities. They are evaluated using mean Spearman's correlation coefficients, demonstrating that the fusion model consistently outperforms unimodal models for real-time predictions. For the real-time performance, we observe the prediction's trend over time and highlight its correlation with the surgeon's gestures. For the skill-level-based cross-validation, we separately trained models on surgeons with different skill levels, which showed that models trained on high-skill demonstrations perform better than those trained on low-skill ones and generalize well to similarly skilled participants. Our findings show that multimodal learning allows more stable fine-grained evaluation of surgical performance and highlight the value of expert-level training data for model generalization.
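
The abstract above names mean Spearman's correlation as its evaluation metric. As a rough illustration only, the sketch below computes Spearman's coefficient per trial and averages it across trials. The function names and the per-trial data layout are assumptions made for this example and are not taken from the paper.

```python
from statistics import mean


def average_ranks(values):
    """Return 1-based ranks; tied values share the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with the one at position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        tied_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = tied_rank
        i = j + 1
    return ranks


def pearson(x, y):
    """Pearson correlation; undefined (division by zero) for constant input."""
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    var_x = sum((a - mx) ** 2 for a in x)
    var_y = sum((b - my) ** 2 for b in y)
    return cov / (var_x * var_y) ** 0.5


def spearman(predicted, reference):
    """Spearman's rho: Pearson correlation of the rank-transformed data."""
    return pearson(average_ranks(predicted), average_ranks(reference))


def mean_spearman(trials):
    """Average rho over (predicted, reference) pairs, one pair per trial.

    The per-trial pairing is a hypothetical layout chosen for this sketch.
    """
    return mean(spearman(pred, ref) for pred, ref in trials)
```

Because the coefficient depends only on rank order, a model is rewarded for ordering performances correctly even when its predicted scores are on a different scale from the reference scores.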

Confidence-based Intent Prediction for Teleoperation in Bimanual Robotic Suturing

Apr 29, 2025

Abstract:Robotic-assisted procedures offer enhanced precision, but while fully autonomous systems are limited in task knowledge, in modeling unstructured environments, and in generalisation ability, fully manual teleoperated systems also face challenges such as delay, stability, and reduced sensory information. To address these, we developed an interactive control strategy that assists the human operator by predicting their motion plan at both high and low levels. At the high level, a surgeme recognition system is employed through a Transformer-based real-time gesture classification model to dynamically adapt to the operator's actions, while at the low level, a Confidence-based Intention Assimilation Controller adjusts robot actions based on user intent and shared control paradigms. The system is built around a robotic suturing task, supported by sensors that capture the kinematics of the robot and task dynamics. Experiments across users with varying skill levels demonstrated the effectiveness of the proposed approach, showing statistically significant improvements in task completion time and user satisfaction compared to traditional teleoperation.

Switch-based Independent Antagonist Actuation with a Single Motor for a Soft Exosuit

Feb 07, 2025

Abstract:The use of a cable-driven soft exosuit poses challenges with regard to the mechanical design of the actuation system, particularly when used for actuation along multiple degrees of freedom (DoF). The simplest general solution requires two actuators to induce movement along one DoF. However, this solution is not practical for the development of multi-joint exosuits. Reducing the number of actuators is a critical need in multi-DoF exosuits. We propose a switch-based mechanism to control an antagonist pair of cables such that it can actuate along any cable path geometry. The results showed that 298.24 ms was needed for switching between cables. While this latency is relatively large, it can be reduced in the future by a better choice of the motor used for actuation.

Predictive Visuo-Tactile Interactive Perception Framework for Object Properties Inference

Nov 13, 2024

Abstract:Interactive exploration of the unknown physical properties of objects such as stiffness, mass, center of mass, friction coefficient, and shape is crucial for autonomous robotic systems operating continuously in unstructured environments. Precise identification of these properties is essential to manipulate objects in a stable and controlled way, and is also required to anticipate the outcomes of (prehensile or non-prehensile) manipulation actions such as pushing, pulling, lifting, etc. Our study focuses on autonomously inferring the physical properties of a diverse set of homogeneous, heterogeneous, and articulated objects utilizing a robotic system equipped with vision and tactile sensors. We propose a novel predictive perception framework for identifying the properties of these objects by leveraging versatile exploratory actions: non-prehensile pushing and prehensile pulling. As part of the framework, we propose a novel active shape perception to seamlessly initiate exploration. Our innovative dual differentiable filtering with Graph Neural Networks learns the object-robot interaction and performs consistent inference of indirectly observable time-invariant object properties. In addition, we formulate an $N$-step information gain approach to actively select the most informative actions for efficient learning and inference. Extensive real-robot experiments with planar objects show that our predictive perception framework results in better performance than the state-of-the-art baseline. We also demonstrate our framework in three major applications: i) object tracking, ii) a goal-driven task, and iii) detection of changes in the environment.

Interacting humans and robots can improve sensory prediction by adapting their viscoelasticity

Oct 17, 2024

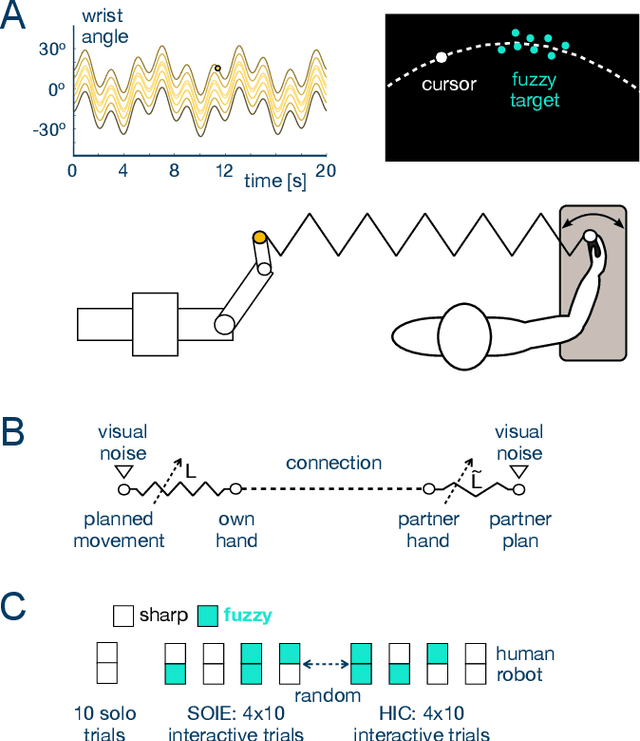

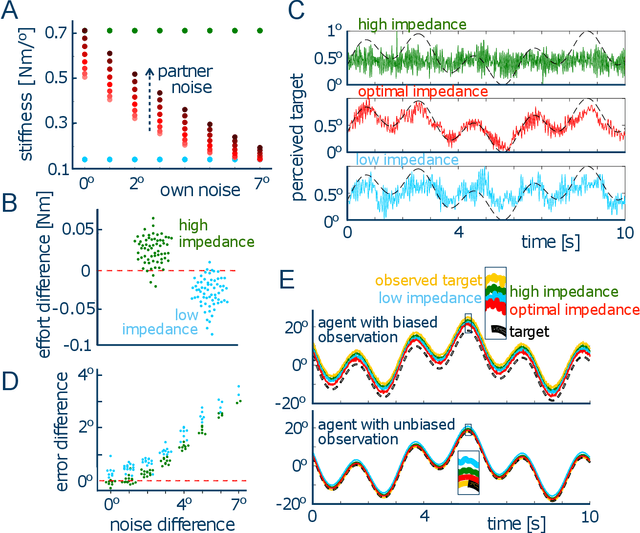

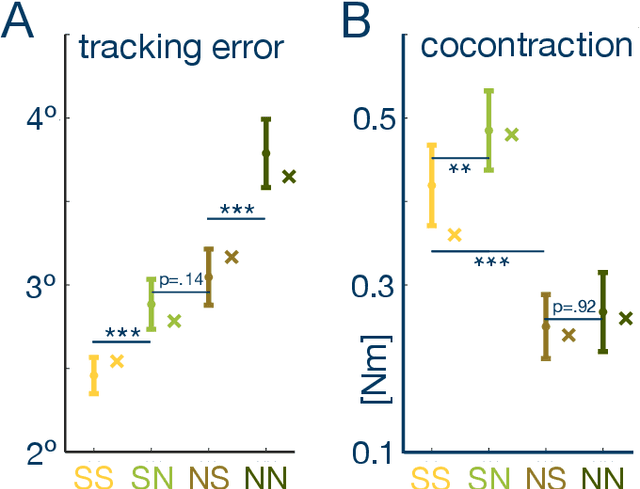

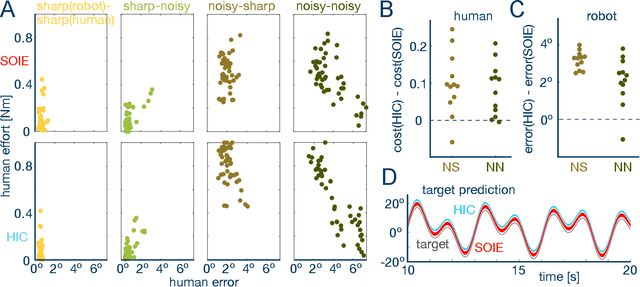

Abstract:To manipulate objects or dance together, humans and robots exchange energy and haptic information. While the exchange of energy in human-robot interaction has been extensively investigated, the underlying exchange of haptic information is not well understood. Here, we develop a computational model of the mechanical and sensory interactions between agents that can tune their viscoelasticity while considering their sensory and motor noise. The resulting stochastic-optimal-information-and-effort (SOIE) controller predicts how the exchange of haptic information and the performance can be improved by adjusting viscoelasticity. This controller was first implemented on a robot-robot experiment with a tracking task which showed its superior performance when compared to either stiff or compliant control. Importantly, the optimal controller also predicts how connected humans alter their muscle activation to improve haptic communication, with differentiated viscoelasticity adjustment to their own sensing noise and haptic perturbations. A human-robot experiment then illustrated the applicability of this optimal control strategy for robots, yielding improved tracking performance and effective haptic communication as the robot adjusted its viscoelasticity according to its own and the user's noise characteristics. The proposed SOIE controller may thus be used to improve haptic communication and collaboration of humans and robots.

Haptic feedback of front car motion can improve driving control

Jul 29, 2024

Abstract:This study investigates the role of haptic feedback in a car-following scenario, where information about the motion of the front vehicle is provided through a virtual elastic connection with it. Using a robotic interface in a simulated driving environment, we examined the impact of varying levels of such haptic feedback on the driver's ability to follow the road while avoiding obstacles. The results of an experiment with 15 subjects indicate that haptic feedback from the front car's motion can significantly improve driving control (i.e., reduce motion jerk and deviation from the road) and reduce mental load (evaluated via questionnaire). This suggests that haptic communication, as observed between physically interacting humans, can be leveraged to improve safety and efficiency in automated driving systems, warranting further testing in real driving scenarios.
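
The "virtual elastic connection" in the abstract above can be pictured as a spring between the driver's interface and the front vehicle. The following is a minimal sketch under that assumption only: a linear spring on a single position axis, with hypothetical names, units, and stiffness values. The abstract does not specify the actual coupling law or the feedback levels used in the experiment.

```python
def elastic_feedback_force(front_position, own_position, stiffness):
    """Force of a linear virtual spring pulling toward the front vehicle.

    All three arguments are assumptions for illustration: positions in
    metres along one axis, stiffness in N/m.
    """
    return stiffness * (front_position - own_position)


# Hypothetical "levels" of haptic feedback, modelled here as stiffness gains.
feedback_levels = {"none": 0.0, "low": 50.0, "high": 200.0}

# Force felt at each level when the front car is 0.1 m to one side.
forces = {
    name: elastic_feedback_force(0.1, 0.0, gain)
    for name, gain in feedback_levels.items()
}
```

With zero stiffness the driver feels nothing, and higher stiffness conveys the front car's motion more strongly at the cost of a larger guiding force.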

Visuo-Tactile based Predictive Cross Modal Perception for Object Exploration in Robotics

May 23, 2024

Abstract:Autonomously exploring the unknown physical properties of novel objects such as stiffness, mass, center of mass, friction coefficient, and shape is crucial for autonomous robotic systems operating continuously in unstructured environments. We introduce a novel visuo-tactile based predictive cross-modal perception framework where initial visual observations (shape) aid in obtaining an initial prior over the object properties (mass). The initial prior improves the efficiency of the object property estimation, which is autonomously inferred via interactive non-prehensile pushing and using a dual filtering approach. The inferred properties are then used to enhance the predictive capability of the cross-modal function efficiently by using a human-inspired 'surprise' formulation. We evaluated our proposed framework in a real-robot scenario, demonstrating superior performance.

Space Physiology and Technology: Musculoskeletal Adaptations, Countermeasures, and the Opportunity for Wearable Robotics

Apr 04, 2024

Abstract:Space poses significant challenges for human physiology, leading to physiological adaptations in response to an environment vastly different from Earth. While these adaptations can be beneficial, they may not fully counteract the adverse impact of space-related stressors. A comprehensive understanding of these physiological adaptations is needed to devise effective countermeasures to support human life in space. This review focuses on the impact of the environment in space on the musculoskeletal system. It highlights the complex interplay between bone and muscle adaptation, the underlying physiological mechanisms, and their implications on astronaut health. Furthermore, the review delves into the deployed and current advances in countermeasures and proposes, as a perspective for future developments, wearable sensing and robotic technologies, such as exoskeletons, as a fitting alternative.

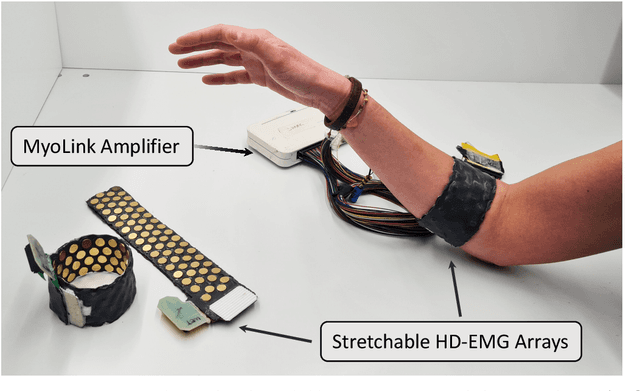

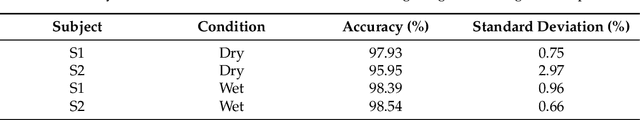

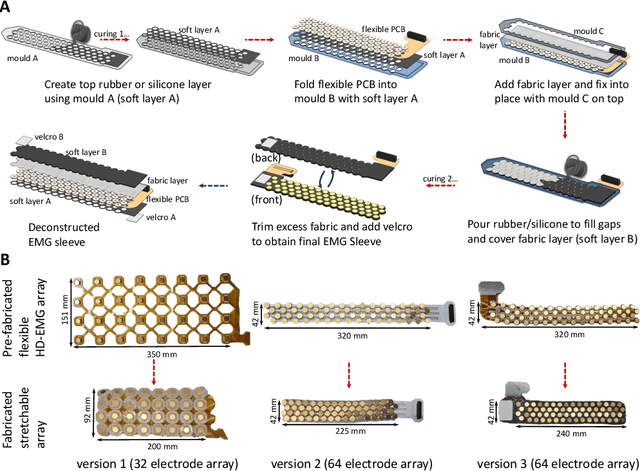

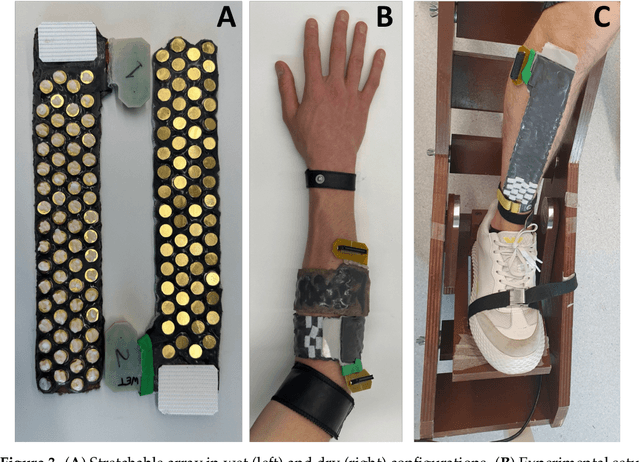

Design, Fabrication and Evaluation of a Stretchable High-Density Electromyography Array

Mar 29, 2024

Abstract:The adoption of high-density electrode systems for human-machine interfaces in real-life applications has been impeded by practical and technical challenges, including noise interference, motion artifacts and the lack of compact electrode interfaces. To overcome some of these challenges, we introduce a wearable and stretchable electromyography (EMG) array, and present its design, fabrication methodology, characterisation, and comprehensive evaluation. Our proposed solution comprises dry-electrodes on flexible printed circuit board (PCB) substrates, eliminating the need for time-consuming skin preparation. The proposed fabrication method allows the manufacturing of stretchable sleeves, with consistent and standardised coverage across subjects. We thoroughly tested our developed prototype, evaluating its potential for application in both research and real-world environments. The results of our study showed that the developed stretchable array matches or outperforms traditional EMG grids and holds promise in furthering the real-world translation of high-density EMG for human-machine interfaces.

* This is the author's version of the manuscript published in the MDPI Sensors journal (https://www.mdpi.com/1424-8220/24/6/1810). The manuscript is in IEEE format: 8 pages, 5 figures, 1 table.

Design and Preliminary Evaluation of a Torso Stabiliser for Individuals with Spinal Cord Injury

Mar 26, 2024

Abstract:Spinal cord injuries (SCIs) generally result in sensory and mobility impairments, with torso instability being particularly debilitating. Existing torso stabilisers are often rigid and restrictive. This paper presents an early investigation into a non-restrictive 1 degree-of-freedom (DoF) mechanical torso stabiliser inspired by devices such as centrifugal clutches and seat-belt mechanisms. Firstly, the paper presents a motion-capture (MoCap) and OpenSim-based kinematic analysis of the cable-based system to understand requisite device characteristics. The simulated evaluation indicated that the cable-based device requires 55-60 cm of unrestricted travel and should lock at a threshold cable velocity of 80-100 cm/s. Next, the developed 1-DoF device is introduced. The proposed mechanical device is transparent during activities of daily living, and transitions to compliant blocking when an incipient fall is detected. Prototype behaviour was then validated using a MoCap-based kinematic analysis to verify non-restrictive movement, reliable transition to blocking, and compliance of the blocking.
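
The velocity-threshold behaviour described above can be checked against a recorded cable-length trace. The sketch below is an illustrative assumption, not the paper's analysis: it estimates cable pay-out velocity by finite differences and reports the first sample at which an 80 cm/s threshold (the lower end of the range quoted in the abstract) would be reached. The function name, the sampling scheme, and the choice to consider only pay-out are all hypothetical.

```python
def first_lock_index(cable_length_cm, dt_s, threshold_cm_s=80.0):
    """Return the first sample index where pay-out velocity meets the threshold.

    cable_length_cm: cable length samples in cm (hypothetical MoCap-derived trace).
    dt_s: sampling interval in seconds (assumed constant).
    threshold_cm_s: lock threshold; 80 cm/s is the lower bound from the abstract.
    Returns None if the threshold is never reached.
    """
    for i in range(1, len(cable_length_cm)):
        # Finite-difference estimate of cable velocity between samples.
        velocity = (cable_length_cm[i] - cable_length_cm[i - 1]) / dt_s
        if velocity >= threshold_cm_s:
            return i
    return None


# Slow reaching motion followed by a sudden forward pitch (made-up numbers).
trace = [0.0, 0.5, 1.0, 3.0]
lock_at = first_lock_index(trace, dt_s=0.01)
```

A real analysis would likely need to filter the trace before differentiating, since finite differences amplify measurement noise.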
