Ekaterina Ivanova

HISTAI: An Open-Source, Large-Scale Whole Slide Image Dataset for Computational Pathology

May 17, 2025

Abstract:Recent advancements in Digital Pathology (DP), particularly through artificial intelligence and Foundation Models, have underscored the importance of large-scale, diverse, and richly annotated datasets. Despite their critical role, publicly available Whole Slide Image (WSI) datasets often lack sufficient scale, tissue diversity, and comprehensive clinical metadata, limiting the robustness and generalizability of AI models. In response, we introduce the HISTAI dataset, a large, multimodal, open-access WSI collection comprising over 60,000 slides from various tissue types. Each case in the HISTAI dataset is accompanied by extensive clinical metadata, including diagnosis, demographic information, detailed pathological annotations, and standardized diagnostic coding. The dataset aims to fill gaps identified in existing resources, promoting innovation, reproducibility, and the development of clinically relevant computational pathology solutions. The dataset can be accessed at https://github.com/HistAI/HISTAI.

SPIDER: A Comprehensive Multi-Organ Supervised Pathology Dataset and Baseline Models

Mar 04, 2025

Abstract:Advancing AI in computational pathology requires large, high-quality, and diverse datasets, yet existing public datasets are often limited in organ diversity, class coverage, or annotation quality. To bridge this gap, we introduce SPIDER (Supervised Pathology Image-DEscription Repository), the largest publicly available patch-level dataset covering multiple organ types, including Skin, Colorectal, and Thorax, with comprehensive class coverage for each organ. SPIDER provides high-quality annotations verified by expert pathologists and includes surrounding context patches, which enhance classification performance by providing spatial context. Alongside the dataset, we present baseline models trained on SPIDER using the Hibou-L foundation model as a feature extractor combined with an attention-based classification head. The models achieve state-of-the-art performance across multiple tissue categories and serve as strong benchmarks for future digital pathology research. Beyond patch classification, the model enables rapid identification of significant areas and quantitative tissue metrics, and establishes a foundation for multimodal approaches. Both the dataset and trained models are publicly available to advance research, reproducibility, and AI-driven pathology development. Access them at: https://github.com/HistAI/SPIDER

Hibou: A Family of Foundational Vision Transformers for Pathology

Jun 07, 2024

Abstract:Pathology, the microscopic examination of diseased tissue, is critical for diagnosing various medical conditions, particularly cancers. Traditional methods are labor-intensive and prone to human error. Digital pathology, which converts glass slides into high-resolution digital images for analysis by computer algorithms, revolutionizes the field by enhancing diagnostic accuracy, consistency, and efficiency through automated image analysis and large-scale data processing. Foundational transformer pretraining is crucial for developing robust, generalizable models as it enables learning from vast amounts of unannotated data. This paper introduces the Hibou family of foundational vision transformers for pathology, leveraging the DINOv2 framework to pretrain two model variants, Hibou-B and Hibou-L, on a proprietary dataset of over 1 million whole slide images (WSIs) representing diverse tissue types and staining techniques. Our pretrained models demonstrate superior performance on both patch-level and slide-level benchmarks, surpassing existing state-of-the-art methods. Notably, Hibou-L achieves the highest average accuracy across multiple benchmark datasets. To support further research and application in the field, we have open-sourced the Hibou-B model, which can be accessed at https://github.com/HistAI/hibou

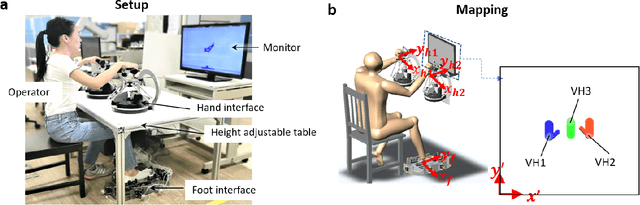

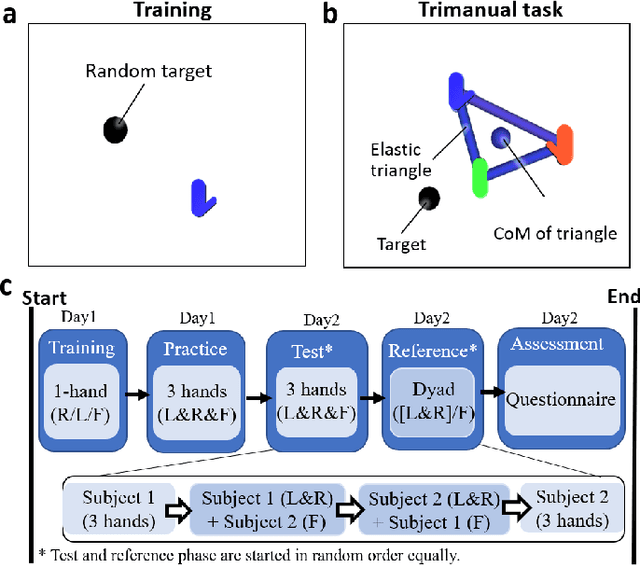

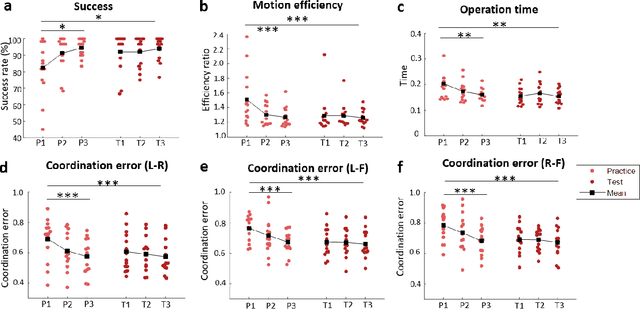

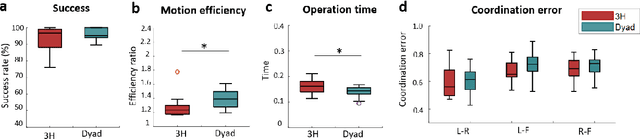

Trimanipulation: Evaluation of human performance in a 3-handed coordination task

Apr 13, 2021

Abstract:Many teleoperation tasks require three or more tools working together, which requires the cooperation of multiple operators. The effectiveness of such schemes may be limited by communication. Trimanipulation by a single operator using an artificial third arm controlled together with their natural arms is a promising solution to this issue. Foot-controlled interfaces have previously shown the capability to be used for the continuous control of robot arms. However, the use of such interfaces for controlling supernumerary robotic limbs (SRLs) in coordination with the natural limbs is not well understood. In this paper, a teleoperation task imitating physically coupled hands in a virtual reality scene was conducted with 14 subjects to evaluate human performance during tri-manipulation. The participants were required to move three limbs together in a coordinated way mimicking three arms holding a shared physical object. It was found that after a short practice session, three-hand tri-manipulation using a single subject's hands and foot was still slower than dyad operation; however, it showed a similar success rate and higher motion efficiency than two-person cooperation.
