Denny Oetomo

Exploring Interference between Concurrent Skin Stretches

Mar 26, 2025

Abstract:Proprioception is essential for coordinating human movements and enhancing the performance of assistive robotic devices. Skin stretch feedback, which closely aligns with natural proprioception mechanisms, presents a promising method for conveying proprioceptive information. To better understand the impact of interference on skin stretch perception, we conducted a user study with 30 participants that evaluated the effect of two simultaneous skin stretches on user perception. We observed that when participants experience simultaneous skin stretch stimuli, a masking effect occurs which deteriorates perception performance in the collocated skin stretch configurations. However, the perceived workload stays the same. These findings show that interference can affect the perception of skin stretch such that multi-channel skin stretch feedback designs should avoid locating modules in close proximity.

TT-MPD: Test Time Model Pruning and Distillation

Dec 10, 2024

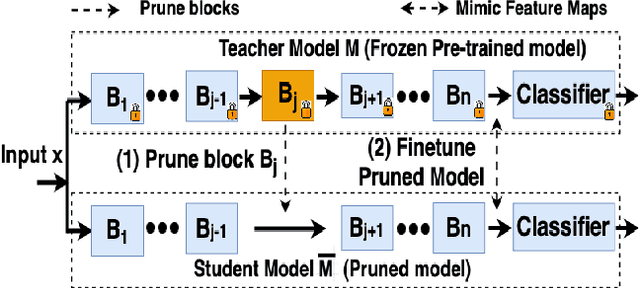

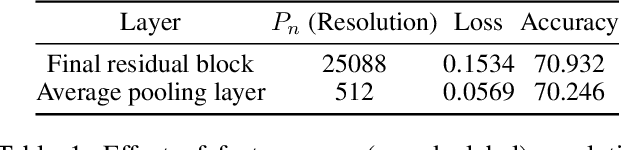

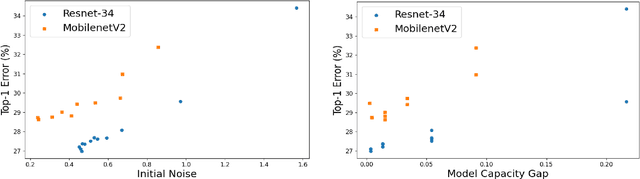

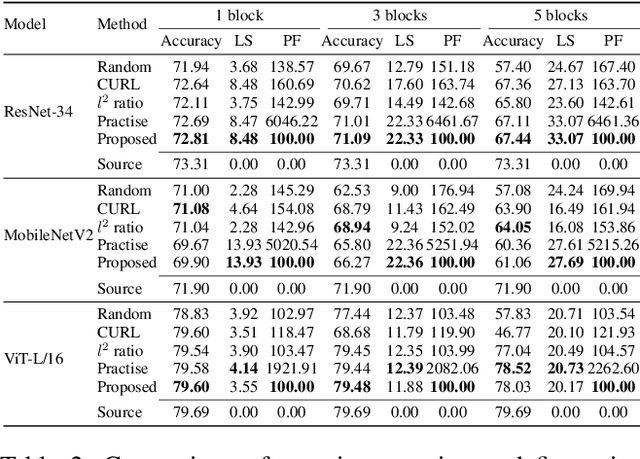

Abstract:Pruning can be an effective method of compressing large pre-trained models for inference speed acceleration. Previous pruning approaches rely on access to the original training dataset for both pruning and subsequent fine-tuning. However, access to the training data can be limited due to concerns such as data privacy and commercial confidentiality. Furthermore, with covariate shift (disparities between test and training data distributions), pruning and fine-tuning with training datasets can hinder the generalization of the pruned model to test data. To address these issues, pruning and fine-tuning the model with test-time samples becomes essential. However, test-time model pruning and fine-tuning incur additional computation costs and slow down the model's prediction speed, thus posing efficiency issues. Existing pruning methods are not efficient enough for the test-time model pruning setting, since fine-tuning the pruned model is needed to evaluate the importance of removable components. To address this, we propose two variables to approximate the fine-tuned accuracy. We then introduce an efficient pruning method that considers the approximated fine-tuned accuracy and potential inference latency saving. To enhance fine-tuning efficiency, we propose an efficient knowledge distillation method that only needs to generate pseudo labels for a small set of fine-tuning samples one time, thereby reducing the expensive pseudo-label generation cost. Experimental results demonstrate that our method achieves a comparable or superior tradeoff between test accuracy and inference latency, with a 32% relative reduction in pruning and fine-tuning time compared to the best existing method.

Using Fitts' Law to Benchmark Assisted Human-Robot Performance

Dec 06, 2024

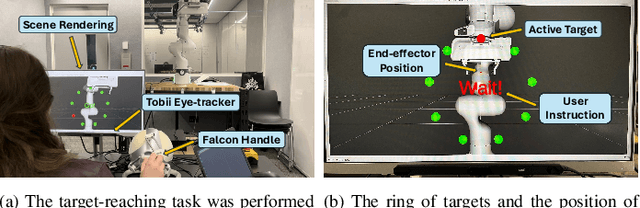

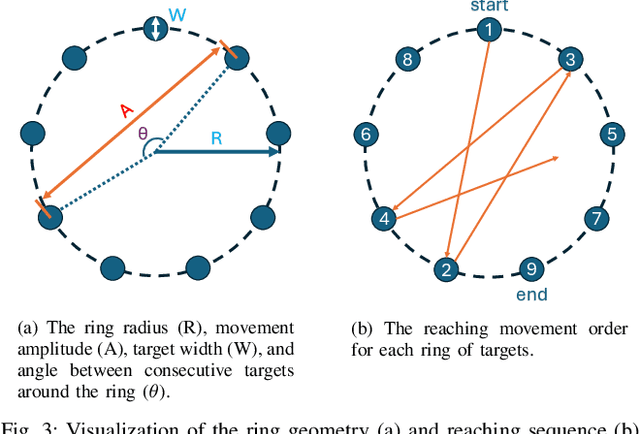

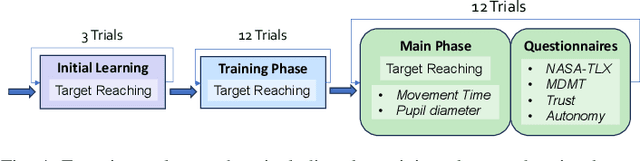

Abstract:Shared control systems aim to combine human and robot abilities to improve task performance. However, achieving optimal performance requires that the robot's level of assistance adjusts the operator's cognitive workload in response to the task difficulty. Understanding and dynamically adjusting this balance is crucial to maximizing efficiency and user satisfaction. In this paper, we propose a novel benchmarking method for shared control systems based on Fitts' Law to formally parameterize the difficulty level of a target-reaching task. With this, we systematically quantify and model the effect of task difficulty (i.e., size and distance of target) and robot autonomy on task performance and on operators' cognitive load and trust levels. Our empirical results (N=24) not only show that both task difficulty and robot autonomy influence task performance, but also that the performance can be modelled using these parameters, which may allow for the generalization of this relationship across more diverse setups. We also found that the users' perceived cognitive load and trust were influenced by these factors. Given the challenges in directly measuring cognitive load in real time, our adapted Fitts' model presents a potential alternative approach to estimate cognitive load through determining the difficulty level of the task, with the assumption that greater task difficulty results in higher cognitive load levels. We hope that these insights and our proposed framework inspire future works to further investigate the generalizability of the method, ultimately enabling the benchmarking and systematic assessment of shared control quality and user impact, which will aid in the development of more effective and adaptable systems.
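For readers unfamiliar with Fitts' Law, the following is a minimal sketch of the classical (Shannon) formulation, in which task difficulty is parameterized by target distance and width. It is not the adapted model from the paper, whose exact form is not given in the abstract; the function names and the regression coefficients `a` and `b` are hypothetical and chosen only for illustration.

```python
import math


def index_of_difficulty(distance: float, width: float) -> float:
    """Classical Shannon formulation: ID = log2(D / W + 1), in bits."""
    if distance < 0 or width <= 0:
        raise ValueError("distance must be >= 0 and width must be > 0")
    return math.log2(distance / width + 1.0)


def predicted_movement_time(distance: float, width: float,
                            a: float, b: float) -> float:
    """Linear Fitts model MT = a + b * ID.

    a (intercept, seconds) and b (slope, seconds per bit) are normally
    fitted to measured data; the values used below are made up.
    """
    return a + b * index_of_difficulty(distance, width)


if __name__ == "__main__":
    # A far, small target is harder (higher ID) than a near, large one.
    print(index_of_difficulty(distance=7.0, width=1.0))
    print(index_of_difficulty(distance=1.0, width=1.0))
    print(predicted_movement_time(7.0, 1.0, a=0.1, b=0.2))
```

In a benchmarking setting, one would typically fit `a` and `b` per condition (for example, per autonomy level) and compare the fitted slopes; that comparison is an assumption about usage rather than a description of the paper's procedure.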

Exploring the Effects of Shared Autonomy on Cognitive Load and Trust in Human-Robot Interaction

Feb 05, 2024

Abstract:Teleoperation is increasingly recognized as a viable solution for deploying robots in hazardous environments. Controlling a robot to perform a complex or demanding task may overload operators, resulting in poor performance. To design a robot controller to assist the human in executing such challenging tasks, a comprehensive understanding of the interplay between the robot's autonomous behavior and the operator's internal state is essential. In this paper, we investigate the relationships between robot autonomy and both the human user's cognitive load and trust levels, and the potential existence of three-way interactions in the robot-assisted execution of the task. Our user study (N=24) results indicate that while autonomy level influences the teleoperator's perceived cognitive load and trust, there is no clear interaction between these factors. Instead, these elements appear to operate independently, thus highlighting the need to consider both cognitive load and trust as distinct but interrelated factors in varying the robot autonomy level in shared-control settings. This insight is crucial for the development of more effective and adaptable assistive robotic systems.

When To Grow? A Fitting Risk-Aware Policy for Layer Growing in Deep Neural Networks

Jan 06, 2024

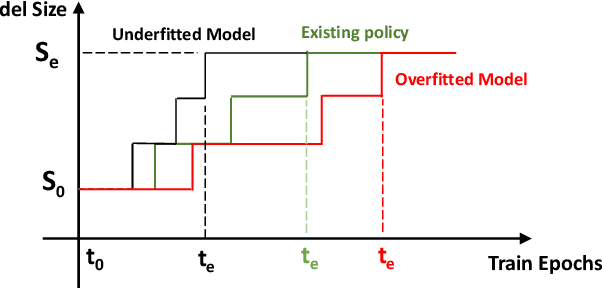

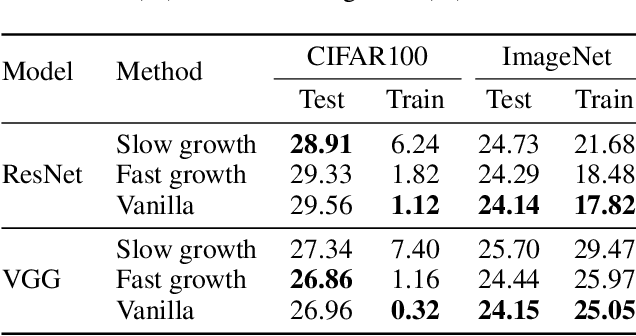

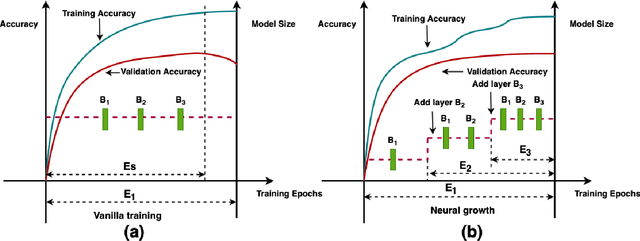

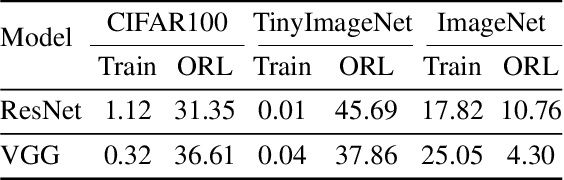

Abstract:Neural growth is the process of growing a small neural network to a large network and has been utilized to accelerate the training of deep neural networks. One crucial aspect of neural growth is determining the optimal growth timing. However, few studies investigate this systematically. Our study reveals that neural growth inherently exhibits a regularization effect, whose intensity is influenced by the chosen policy for growth timing. While this regularization effect may mitigate the overfitting risk of the model, it may lead to a notable accuracy drop when the model underfits. Yet, current approaches have not addressed this issue due to their lack of consideration of the regularization effect from neural growth. Motivated by these findings, we propose an under/over fitting risk-aware growth timing policy, which automatically adjusts the growth timing informed by the level of potential under/overfitting risks to address both risks. Comprehensive experiments conducted using CIFAR-10/100 and ImageNet datasets show that the proposed policy achieves accuracy improvements of up to 1.3% in models prone to underfitting while achieving similar accuracies in models suffering from overfitting compared to the existing methods.

Data-Driven Goal Recognition in Transhumeral Prostheses Using Process Mining Techniques

Sep 15, 2023

Abstract:A transhumeral prosthesis restores missing anatomical segments below the shoulder, including the hand. Active prostheses utilize real-valued, continuous sensor data to recognize patient target poses, or goals, and proactively move the artificial limb. Previous studies have examined how well the data collected in stationary poses, without considering the time steps, can help discriminate the goals. In this case study paper, we focus on using time series data from surface electromyography electrodes and kinematic sensors to sequentially recognize patients' goals. Our approach involves transforming the data into discrete events and training an existing process mining-based goal recognition system. Results from data collected in a virtual reality setting with ten subjects demonstrate the effectiveness of our proposed goal recognition approach, which achieves significantly better precision and recall than the state-of-the-art machine learning techniques and is less confident when wrong, which is beneficial when approximating smoother movements of prostheses.

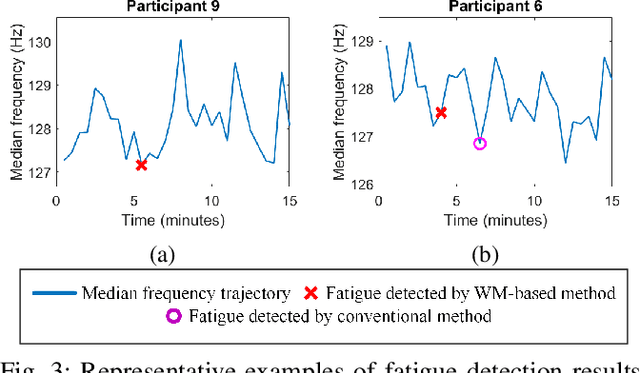

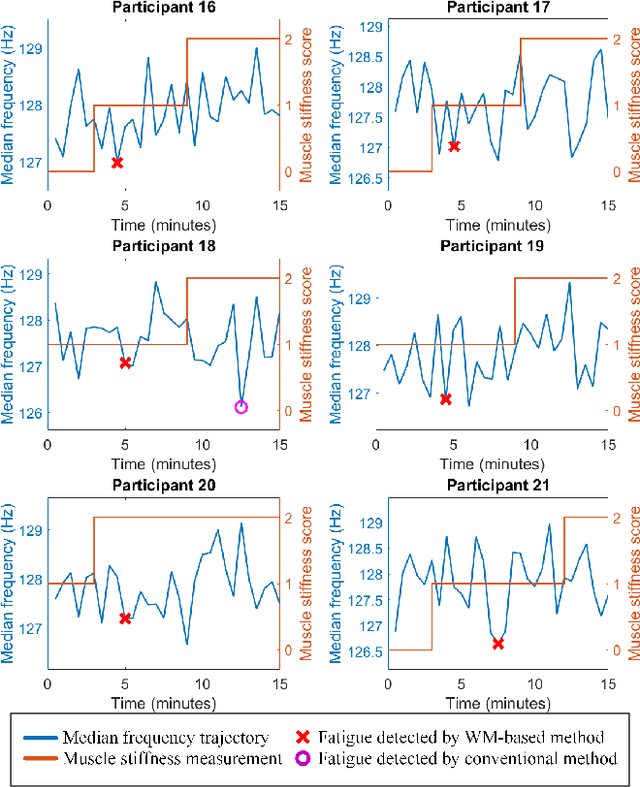

A Weak Monotonicity Based Muscle Fatigue Detection Algorithm for a Short-Duration Poor Posture Using sEMG Measurements

Jun 18, 2021

Abstract:Muscle fatigue is usually defined as a decrease in the ability to produce force. Surface electromyography (sEMG) signals have been widely used to provide information about muscle activity, including detecting muscle fatigue with various data-driven techniques such as machine learning and statistical approaches. However, it is well known that sEMG signals are weak (low-amplitude) signals with a low signal-to-noise ratio, and data-driven techniques do not work well when the quality of the data is poor. In particular, the existing methods are unable to detect muscle fatigue arising from static poses. This work exploits the concept of weak monotonicity, which has been observed in the process of fatigue, to robustly detect muscle fatigue in the presence of measurement noise and human variations. Such a population trend methodology has shown its potential in muscle fatigue detection, as demonstrated by an experiment with a static pose.

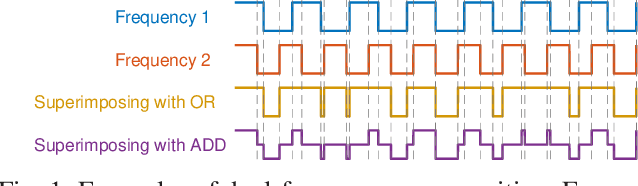

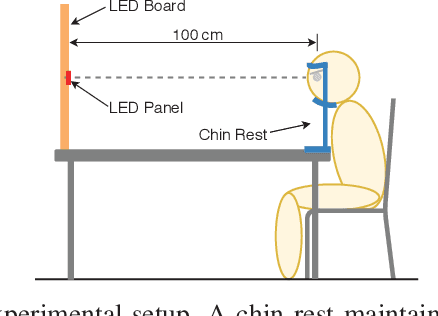

Frequency Superposition -- A Multi-Frequency Stimulation Method in SSVEP-based BCIs

Apr 25, 2021

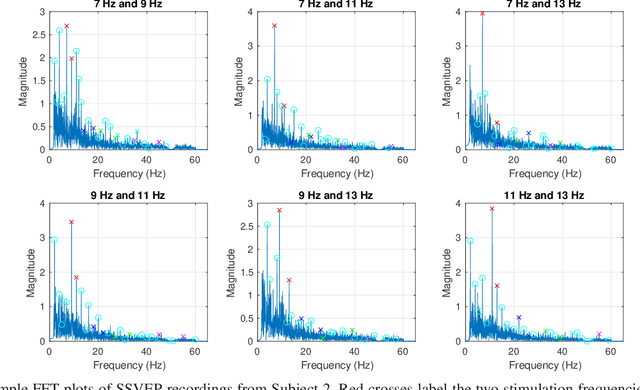

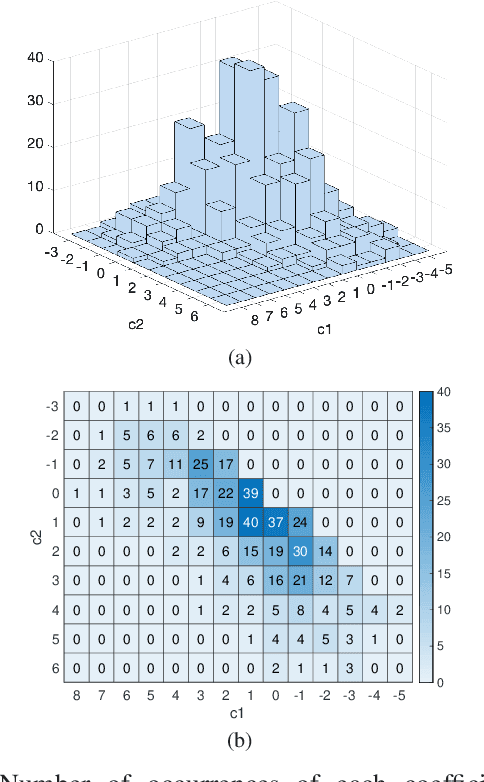

Abstract:The steady-state visual evoked potential (SSVEP) is one of the most widely used modalities in brain-computer interfaces (BCIs) due to its many advantages. However, the existence of harmonics and the limited range of responsive frequencies in SSVEP make it challenging to further expand the number of targets without sacrificing other aspects of the interface or putting additional constraints on the system. This paper introduces a novel multi-frequency stimulation method for SSVEP and investigates its potential to effectively and efficiently increase the number of targets presented. The proposed stimulation method, obtained by the superposition of the stimulation signals at different frequencies, is size-efficient, allows single-step target identification, puts no strict constraints on the usable frequency range, can be suited to self-paced BCIs, and does not require specific light sources. In addition to the stimulus frequencies and their harmonics, the evoked SSVEP waveforms include frequencies that are integer linear combinations of the stimulus frequencies. Results of decoding SSVEPs collected from nine subjects, using canonical correlation analysis (CCA) with only the frequencies and harmonics as reference, also demonstrate the potential of using such a stimulation paradigm in SSVEP-based BCIs.
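To make the phrase "integer linear combinations of the stimulus frequencies" concrete, here is a small sketch that enumerates the candidate components a*f1 + b*f2 for two stimulus frequencies. The function name, the `max_order` cap on the integer coefficients, and the band limits are assumptions made for illustration; the abstract does not state which orders or frequency bands were analysed.

```python
from itertools import product


def combination_frequencies(f1, f2, max_order=2, f_min=0.0, f_max=40.0):
    """List positive frequencies a*f1 + b*f2 with integer |a|, |b| <= max_order.

    The result includes the stimulus frequencies themselves (a or b = 1,
    the other 0), their harmonics (one coefficient 0), and the mixed
    terms where both coefficients are non-zero.
    """
    freqs = set()
    coeffs = range(-max_order, max_order + 1)
    for a, b in product(coeffs, repeat=2):
        if a == 0 and b == 0:
            continue  # skip the trivial 0 Hz component
        f = a * f1 + b * f2
        if f_min < f <= f_max:
            # Round to suppress floating-point duplicates such as 15.999999
            freqs.add(round(f, 6))
    return sorted(freqs)


if __name__ == "__main__":
    # Hypothetical stimulus pair of 7 Hz and 9 Hz, coefficients up to 2.
    print(combination_frequencies(7, 9, max_order=2, f_max=40.0))
```

For the hypothetical pair 7 Hz and 9 Hz with coefficients up to 2, the list contains the harmonics 14 Hz and 18 Hz alongside mixed terms such as 2 Hz (9 - 7) and 16 Hz (9 + 7).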

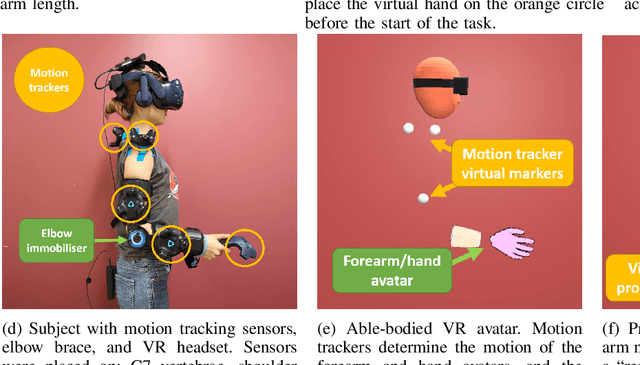

The Use of Implicit Human Motor Behaviour in the Online Personalisation of Prosthetic Interfaces

Mar 02, 2020

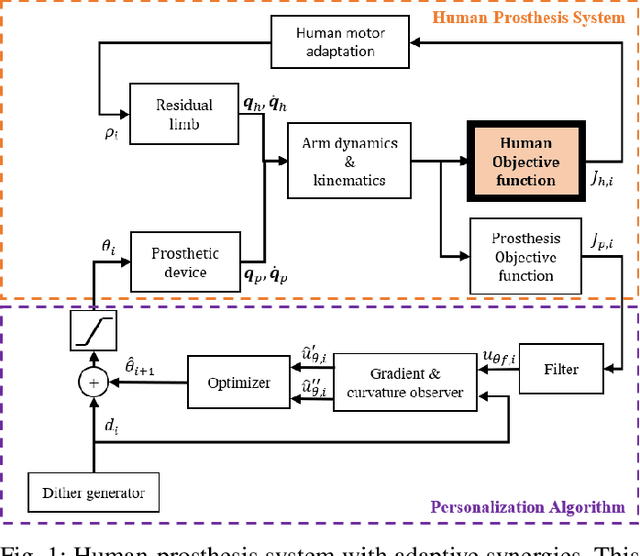

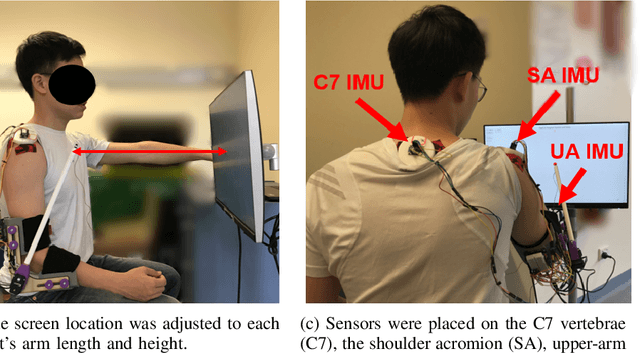

Abstract:In previous work, the authors proposed a data-driven optimisation algorithm for the personalisation of human-prosthetic interfaces, demonstrating the possibility of adapting prosthesis behaviour to its user while the user performs tasks with it. This method requires that the human and the prosthesis personalisation algorithm have the same pre-defined objective function. This was previously ensured by providing the human with explicit feedback on what the objective function is. However, constantly displaying this information to the prosthesis user is impractical. Moreover, the method utilised task information in the objective function, which may not be available from the wearable sensors typically used in prosthetic applications. In this work, the previous approach is extended to use a prosthesis objective function based on implicit human motor behaviour, which represents able-bodied human motor control and is measurable using wearable sensors. The approach is tested in a hardware implementation of the personalisation algorithm on a prosthetic elbow, where the prosthetic objective function is a function of upper-body compensation and is measured using wearable IMUs. Experimental results on able-bodied subjects using a supernumerary prosthetic elbow mounted on an elbow orthosis suggest that it is possible to use a prosthesis objective function which is implicit in human behaviour to achieve collaboration without providing explicit feedback to the human, motivating further studies.

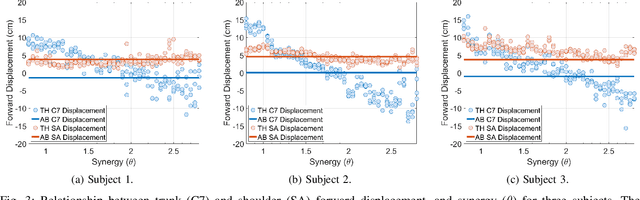

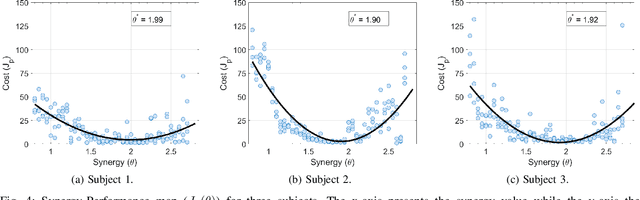

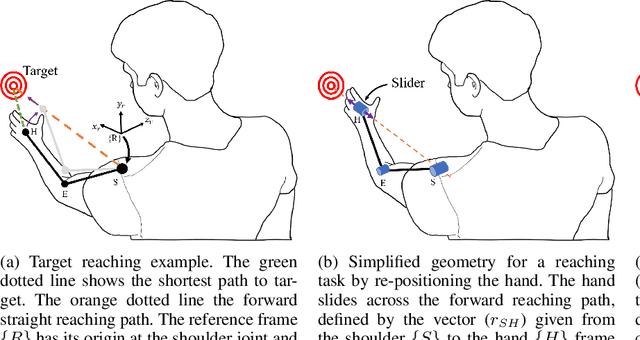

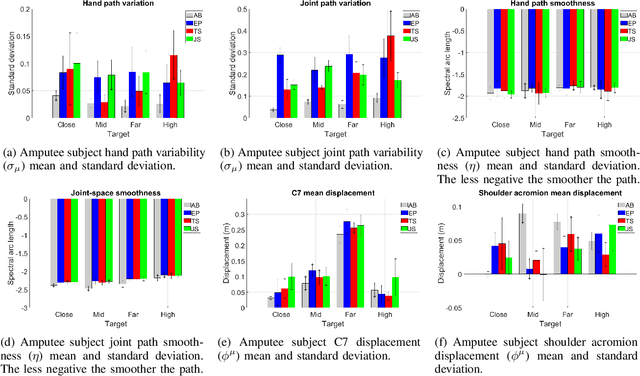

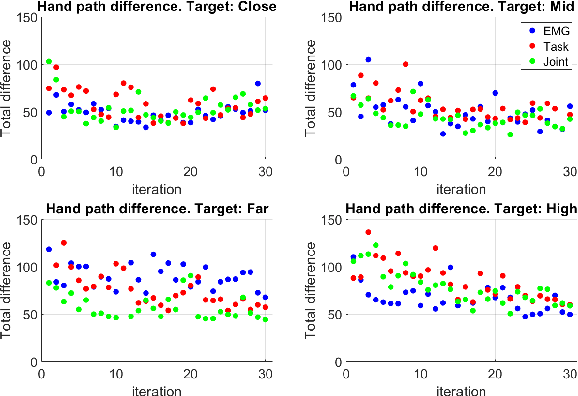

Task-space Synergies for Reaching using Upper-limb Prostheses

Feb 19, 2020

Abstract:Synergistic prostheses enable the coordinated movement of the human-prosthetic arm, as required by activities of daily living. This is achieved by coupling the motion of the prosthesis to the human command, such as residual limb movement in motion-based interfaces. Previous studies demonstrated that developing human-prosthetic synergies in joint-space must consider individual motor behaviour and the intended task to be performed, requiring personalisation and task calibration. In this work, an alternative synergy-based strategy, utilising a synergistic relationship expressed in task-space, is proposed. This task-space synergy has the potential to replace the need for personalisation and task calibration with a model-based approach requiring knowledge of the individual user's arm kinematics, the anticipated hand motion during the task, and voluntary information from the prosthetic user. The proposed method is compared with surface electromyography-based and joint-space synergy-based prosthetic interfaces in a study of motor behaviour and task performance on able-bodied subjects using a VR-based transhumeral prosthesis. Experimental results showed that, for a set of forward reaching tasks, the proposed task-space synergy achieves performance comparable to joint-space synergies and superior to conventional surface electromyography. Case study results with an amputee subject motivate the further development of the proposed task-space synergy method.
