Peter Choong

The Use of Implicit Human Motor Behaviour in the Online Personalisation of Prosthetic Interfaces

Mar 02, 2020

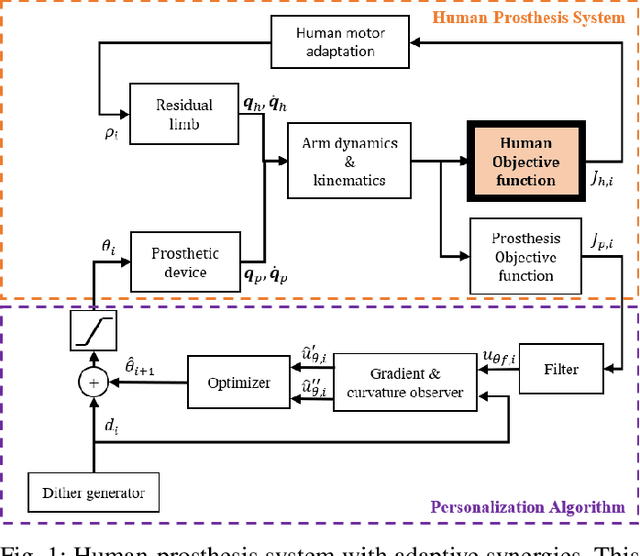

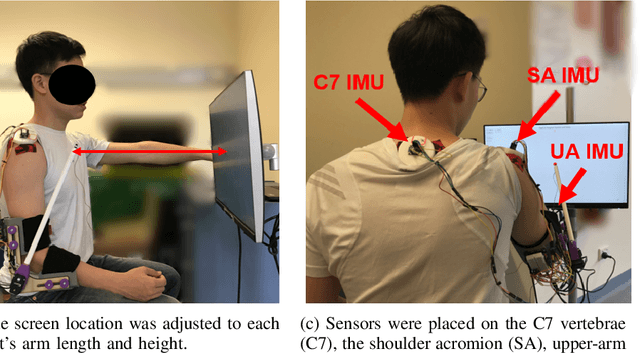

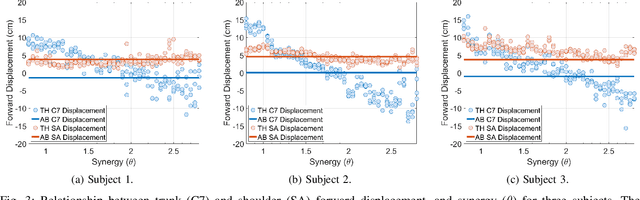

Abstract: In previous work, the authors proposed a data-driven optimisation algorithm for the personalisation of human-prosthetic interfaces, demonstrating the possibility of adapting prosthesis behaviour to its user while the user performs tasks with it. This method requires that the human and the prosthesis personalisation algorithm have the same pre-defined objective function. This was previously ensured by providing the human with explicit feedback on what the objective function is. However, constantly displaying this information to the prosthesis user is impractical. Moreover, the method utilised task information in the objective function that may not be available from the wearable sensors typically used in prosthetic applications. In this work, the previous approach is extended to use a prosthesis objective function based on implicit human motor behaviour, which represents able-bodied human motor control and is measurable using wearable sensors. The approach is tested in a hardware implementation of the personalisation algorithm on a prosthetic elbow, where the prosthetic objective function is a function of upper-body compensation and is measured using wearable IMUs. Experimental results on able-bodied subjects using a supernumerary prosthetic elbow mounted on an elbow orthosis suggest that it is possible to use a prosthesis objective function that is implicit in human behaviour to achieve collaboration without providing explicit feedback to the human, motivating further studies.

Task-space Synergies for Reaching using Upper-limb Prostheses

Feb 19, 2020

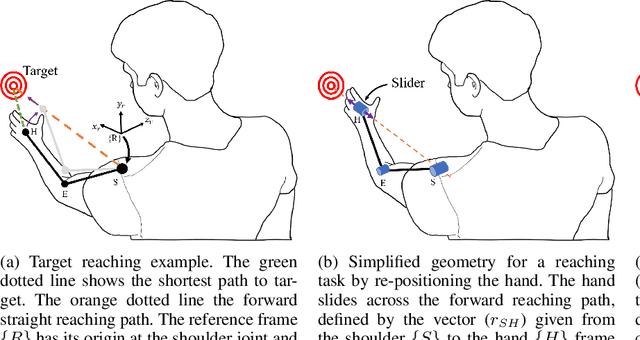

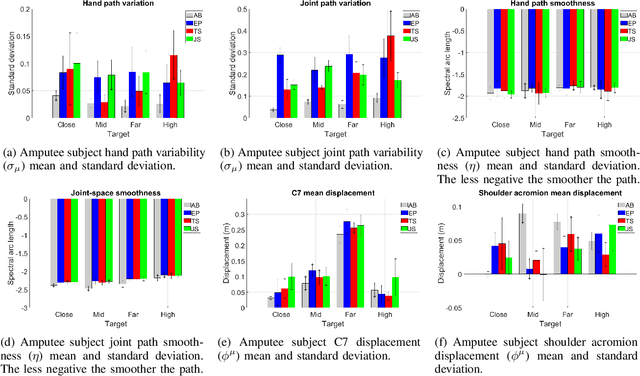

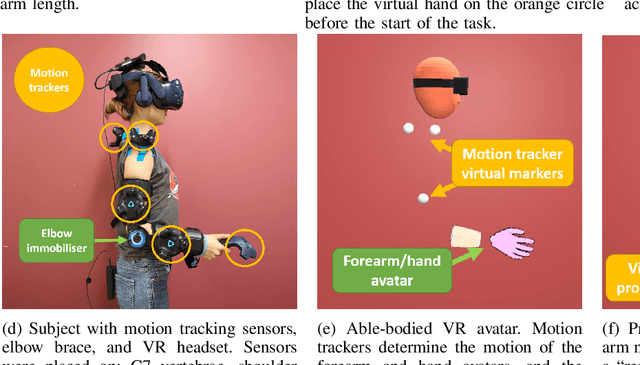

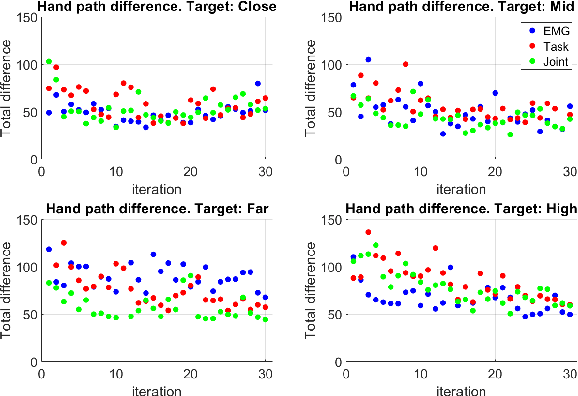

Abstract: Synergistic prostheses enable the coordinated movement of the human-prosthetic arm, as required by activities of daily living. This is achieved by coupling the motion of the prosthesis to the human command, such as residual limb movement in motion-based interfaces. Previous studies demonstrated that developing human-prosthetic synergies in joint-space must consider individual motor behaviour and the intended task to be performed, requiring personalisation and task calibration. In this work, an alternative synergy-based strategy, utilising a synergistic relationship expressed in task-space, is proposed. This task-space synergy has the potential to replace the need for personalisation and task calibration with a model-based approach requiring knowledge of the individual user's arm kinematics, the anticipated hand motion during the task and voluntary information from the prosthetic user. The proposed method is compared with surface electromyography-based and joint-space synergy-based prosthetic interfaces in a study of motor behaviour and task performance on able-bodied subjects using a VR-based transhumeral prosthesis. Experimental results showed that, for a set of forward reaching tasks, the proposed task-space synergy achieves performance comparable to joint-space synergies and superior to conventional surface electromyography. Case study results with an amputee subject motivate the further development of the proposed task-space synergy method.

Control Barrier Functions for Mechanical Systems: Theory and Application to Robotic Grasping

Mar 23, 2019

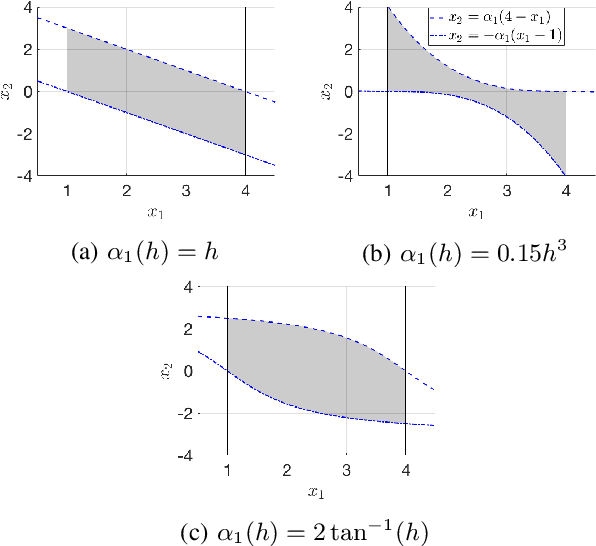

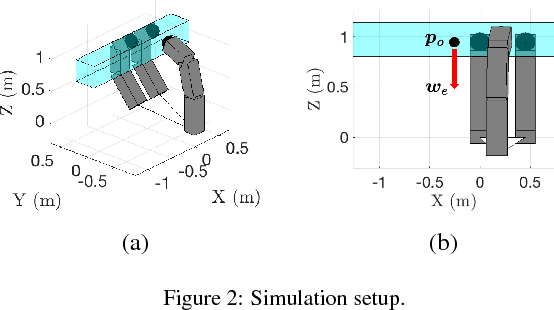

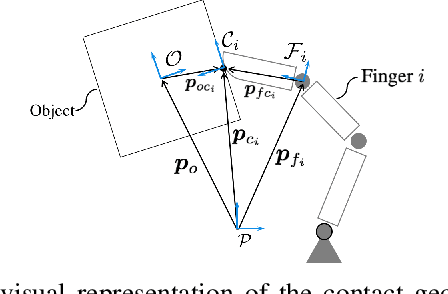

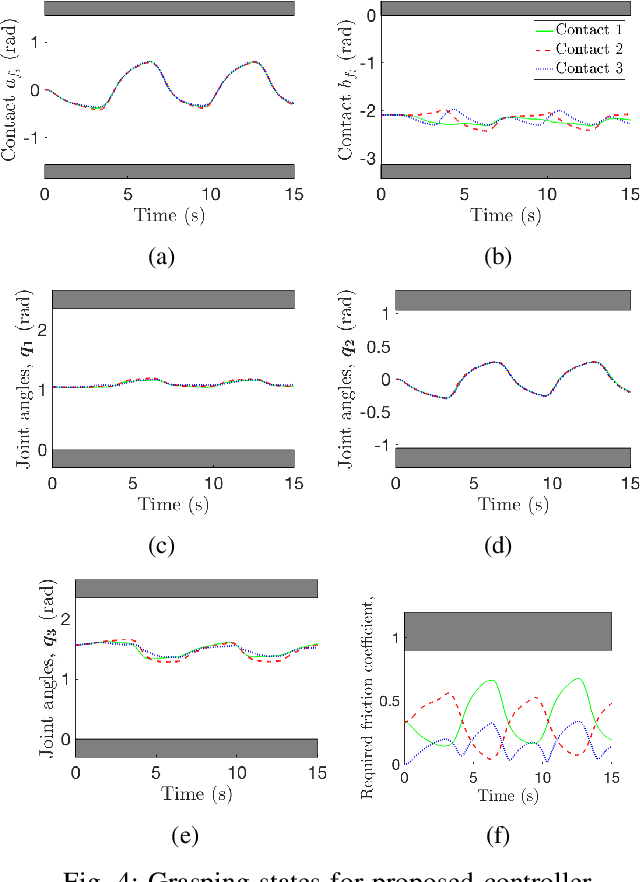

Abstract: Control barrier functions have been demonstrated to be a useful method of ensuring constraint satisfaction for a wide class of controllers; however, existing results are mostly restricted to continuous-time systems of relative degree one. Mechanical systems, including robots, are typically second-order systems in which the control occurs at the force/torque level. These systems have velocity and position constraints (i.e. relative degree two) that are vital for safety and/or task execution. Additionally, mechanical systems are typically controlled digitally as sampled-data systems. The contribution of this work is two-fold. The first is the development of novel, robust control barrier functions that ensure constraint satisfaction for relative degree two, sampled-data systems in the presence of model uncertainty. The second is the application of the proposed method to the challenging problem of robotic grasping, in which a robotic hand must ensure an object remains inside the grasp while manipulating it to a desired reference trajectory. A grasp constraint satisfying controller is proposed that can admit existing nominal manipulation controllers from the literature, while simultaneously ensuring no slip, no over-extension (e.g. singular configurations), and no rolling off of the fingertips. Simulation and experimental results validate the proposed control for the robotic hand application.

Personalized On-line Adaptation of Kinematic Synergies for Human-Prosthesis Interfaces

Feb 19, 2019

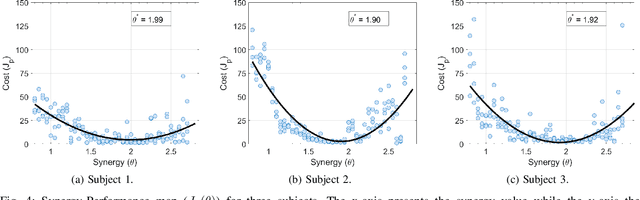

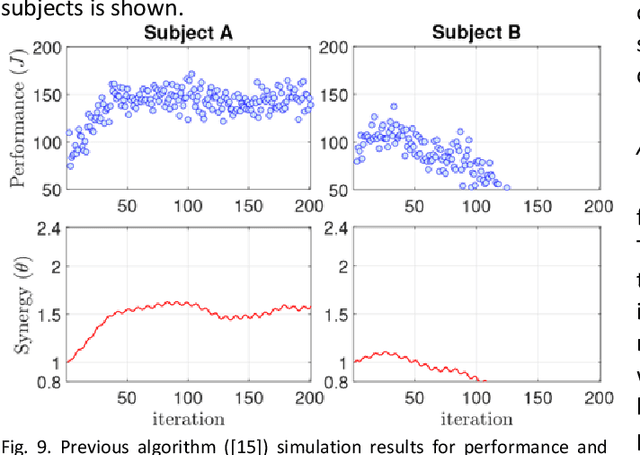

Abstract: Synergies have been adopted in prosthetic limb applications to reduce complexity of design, but typically involve a single synergy setting for a population and ignore individual preference or adaptation capacity. In this paper, a systematic design of kinematic synergies for human-prosthesis interfaces using on-line measurements from each individual is proposed. The task of reaching using the upper-limb is described by an objective function and the interface is parameterized by a kinematic synergy. Consequently, personalizing the interface for a given individual can be formulated as finding an optimal personalized parameter. A structure to model the observed motor behavior that allows for the personalized traits of motor preference and motor learning is proposed, and subsequently used in an on-line optimization scheme to identify the synergies for an individual. The knowledge of the common features contained in the model enables on-line adaptation of the human-prosthesis interface to happen concurrently with human motor adaptation, without the need to re-tune the parameters of the on-line algorithm for each individual. Human-in-the-loop experimental results with able-bodied subjects, performed in a virtual reality environment to emulate amputation and prosthesis use, show that the proposed personalization algorithm was effective in obtaining optimal synergies with a fast uniform convergence speed across a group of individuals.

Robust Object Manipulation for Tactile-based Blind Grasping

Feb 07, 2019

Abstract: Tactile-based blind grasping addresses realistic robotic grasping in which the hand only has access to proprioceptive and tactile sensors. The robotic hand has no prior knowledge of the object/grasp properties, such as object weight, inertia, and shape. There exists no manipulation controller that rigorously guarantees object manipulation in such a setting. Here, a robust control law is proposed for object manipulation in tactile-based blind grasping. The analysis ensures semi-global asymptotic and exponential stability in the presence of model uncertainties and external disturbances that are neglected in related work. Simulation and experimental results validate the effectiveness of the proposed approach.

Grasp Constraint Satisfaction for Object Manipulation using Robotic Hands

Nov 27, 2018

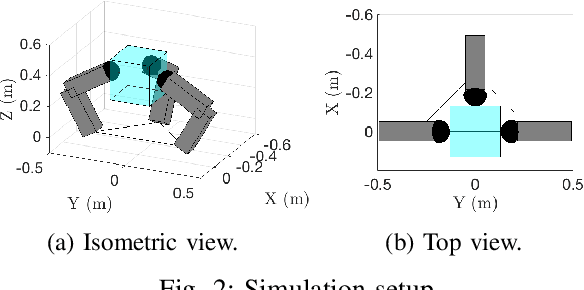

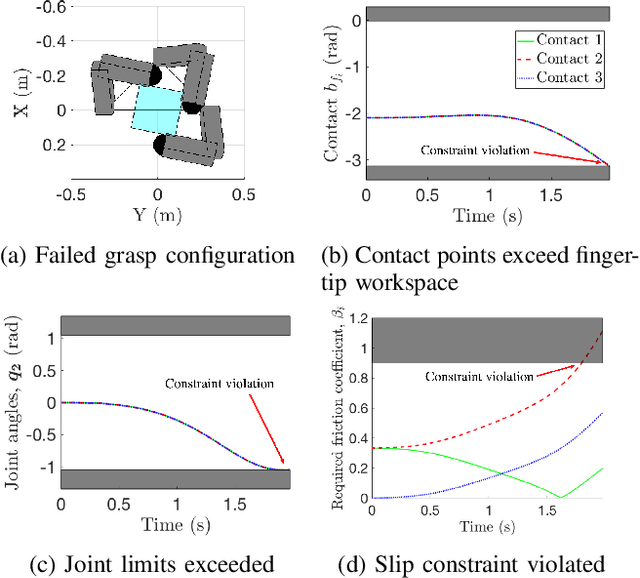

Abstract: For successful object manipulation with robotic hands, it is important to ensure that the object remains in the grasp at all times. In addition to grasp constraints associated with slipping and singular hand configurations, excessive rolling is an important grasp concern, where the contact points roll off the fingertip surface. Related literature focuses only on a subset of grasp constraints, or assumes grasp constraint satisfaction without providing guarantees of such a claim. In this paper, we propose a control approach that systematically handles all grasp constraints. The proposed controller ensures that the object does not slip, joints do not exceed joint angle constraints (e.g. by reaching singular configurations), and the contact points remain in the fingertip workspace. The proposed controller accepts a nominal manipulation control, and ensures the grasping constraints are satisfied to support the assumptions made in the literature. Simulation results validate the proposed approach.
