Muhammad Bilal

NOS-Gate: Queue-Aware Streaming IDS for Consumer Gateways under Timing-Controlled Evasion

Jan 01, 2026

Abstract:Timing and burst patterns can leak through encryption, and an adaptive adversary can exploit them. This undermines metadata-only detection in a stand-alone consumer gateway. Therefore, consumer gateways need streaming intrusion detection on encrypted traffic using metadata only, under tight CPU and latency budgets. We present a streaming IDS for stand-alone gateways that instantiates a lightweight two-state unit derived from Network-Optimised Spiking (NOS) dynamics per flow, named NOS-Gate. NOS-Gate scores fixed-length windows of metadata features and, under a $K$-of-$M$ persistence rule, triggers a reversible mitigation that temporarily reduces the flow's weight under weighted fair queueing (WFQ). We evaluate NOS-Gate under timing-controlled evasion using an executable 'worlds' benchmark that specifies benign device processes, auditable attacker budgets, contention structure, and packet-level WFQ replay to quantify queue impact. All methods are calibrated label-free via burn-in quantile thresholding. Across multiple reproducible worlds and malicious episodes, at an achieved $0.1\%$ false-positive operating point, NOS-Gate attains 0.952 incident recall versus 0.857 for the best baseline in these runs. Under gating, it reduces p99.9 queueing delay and p99.9 collateral delay with a mean scoring cost of ~2.09 μs per flow-window on CPU.
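The calibration and persistence steps named in the abstract can be illustrated with a minimal sketch. It is not the authors' implementation: the function and class names, the `factor` weight multiplier, and the use of a plain empirical quantile are all assumptions made for illustration, and the NOS scoring unit itself is not modelled (scores are taken as given).

```python
from collections import deque


def burn_in_threshold(scores, fpr=0.001):
    """Label-free threshold: the (1 - fpr) empirical quantile of burn-in scores.

    Assumes the burn-in period is overwhelmingly benign (an assumption of this sketch).
    """
    ordered = sorted(scores)
    idx = min(len(ordered) - 1, int((1.0 - fpr) * len(ordered)))
    return ordered[idx]


class KofMGate:
    """Fires when at least k of the last m window scores exceed the threshold."""

    def __init__(self, threshold, k, m):
        self.threshold = threshold
        self.k = k
        self.recent = deque(maxlen=m)  # sliding record of the last m exceedances

    def update(self, score):
        self.recent.append(score > self.threshold)
        return sum(self.recent) >= self.k


def mitigated_weight(base_weight, gated, factor=0.25):
    """Reversible mitigation: scale the WFQ weight down only while the gate is active.

    `factor` is a hypothetical value, not one taken from the paper.
    """
    return base_weight * factor if gated else base_weight


# Example: with k=2, m=3 a single high score does not trigger mitigation.
gate = KofMGate(threshold=1.0, k=2, m=3)
decisions = [gate.update(s) for s in [0.5, 2.0, 0.5, 2.0, 0.5, 0.5, 0.5]]
```

Because the weight is recomputed from the gate state on every window, the flow returns to its base weight as soon as fewer than `k` of the last `m` windows exceed the threshold, which is one simple way to make the mitigation reversible.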

Learning to Undo: Rollback-Augmented Reinforcement Learning with Reversibility Signals

Oct 16, 2025

Abstract:This paper proposes a reversible learning framework to improve the robustness and efficiency of value-based reinforcement learning agents, addressing vulnerability to value overestimation and instability in partially irreversible environments. The framework has two complementary core mechanisms: an empirically derived transition reversibility measure, $\Phi(s, a)$, and a selective state rollback operation. We introduce an online per-state-action estimator of $\Phi$ that quantifies the likelihood of returning to a prior state within a fixed horizon $K$. This measure is used to dynamically adjust the penalty term during temporal-difference updates, integrating reversibility awareness directly into the value function. The system also includes a selective rollback operator. When an action yields an expected return markedly lower than its instantaneous estimated value and violates a predefined threshold, the agent is penalized and returns to the preceding state rather than progressing. This interrupts suboptimal, high-risk trajectories and avoids catastrophic steps. By combining reversibility-aware evaluation with targeted rollback, the method improves safety, performance, and stability. In the CliffWalking-v0 domain, the framework reduced catastrophic falls by over 99.8% and yielded a 55% increase in mean episode return. In the Taxi-v3 domain, it suppressed illegal actions by at least 99.9% and achieved a 65.7% improvement in cumulative reward, while also sharply reducing reward variance in both environments. Ablation studies confirm that the rollback mechanism is the critical component underlying these safety and performance gains, marking a robust step toward safe and reliable sequential decision making.
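One way to read the reversibility measure Phi is as an empirical frequency: of the times an action was taken in a state, how often the agent was back in that state within K steps. The sketch below follows that reading. The class name, the counting scheme, the default value for unseen pairs, and the form of the penalty in `td_target` are assumptions for illustration; the abstract does not specify them, and the rollback operator is not modelled.

```python
from collections import defaultdict


class ReversibilityEstimator:
    """Online estimate of Phi(s, a): fraction of (s, a) visits followed by a return to s
    within `horizon` steps. Unresolved visits count as not-yet-returned."""

    def __init__(self, horizon):
        self.horizon = horizon
        self.visits = defaultdict(int)
        self.returns = defaultdict(int)
        self.pending = []  # (state, action, steps_left) still waiting for a return

    def observe(self, state, action, next_state):
        self.visits[(state, action)] += 1
        self.pending.append((state, action, self.horizon))
        remaining = []
        for s, a, left in self.pending:
            if next_state == s:
                self.returns[(s, a)] += 1              # came back within the horizon
            elif left > 1:
                remaining.append((s, a, left - 1))     # keep waiting
            # otherwise the horizon expired without a return
        self.pending = remaining

    def phi(self, state, action):
        n = self.visits.get((state, action), 0)
        # Unseen pairs default to 1.0 (optimistic); this default is an assumption.
        return self.returns.get((state, action), 0) / n if n else 1.0


def td_target(reward, next_value, phi, gamma=0.99, penalty=1.0):
    """Hypothetical reversibility-aware TD target: less reversible transitions
    (low phi) receive a larger penalty."""
    return reward - penalty * (1.0 - phi) + gamma * next_value


# Example trajectory with horizon K = 2.
est = ReversibilityEstimator(horizon=2)
for s, a, s_next in [("A", "right", "B"), ("B", "left", "A"), ("A", "right", "B"),
                     ("B", "jump", "C"), ("C", "stay", "D")]:
    est.observe(s, a, s_next)
```

In this trajectory the first `("A", "right")` visit is followed by a return to `A` within two steps and the second is not, so the estimate for that pair sits between 0 and 1, while `("B", "jump")` never returns and is scored as irreversible.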

Crop Pest Classification Using Deep Learning Techniques: A Review

Jul 02, 2025

Abstract:Insect pests continue to pose a serious threat to crop yields around the world, and traditional methods for monitoring them are often slow, manual, and difficult to scale. In recent years, deep learning has emerged as a powerful solution, with techniques like convolutional neural networks (CNNs), vision transformers (ViTs), and hybrid models gaining popularity for automating pest detection. This review looks at 37 carefully selected studies published between 2018 and 2025, all focused on AI-based pest classification. The selected research is organized by crop type, pest species, model architecture, dataset usage, and key technical challenges. Early studies relied heavily on CNNs, but recent work is shifting toward hybrid and transformer-based models that deliver higher accuracy and better contextual understanding. Still, challenges like imbalanced datasets, difficulty in detecting small pests, limited generalizability, and deployment on edge devices remain significant hurdles. Overall, this review offers a structured overview of the field, highlights useful datasets, and outlines the key challenges and future directions for AI-based pest monitoring systems.

TEMSET-24K: Densely Annotated Dataset for Indexing Multipart Endoscopic Videos using Surgical Timeline Segmentation

Feb 10, 2025

Abstract:Indexing endoscopic surgical videos is vital in surgical data science, forming the basis for systematic retrospective analysis and clinical performance evaluation. Despite its significance, current video analytics rely on manual indexing, a time-consuming process. Advances in computer vision, particularly deep learning, offer automation potential, yet progress is limited by the lack of publicly available, densely annotated surgical datasets. To address this, we present TEMSET-24K, an open-source dataset comprising 24,306 trans-anal endoscopic microsurgery (TEMS) video micro-clips. Each clip is meticulously annotated by clinical experts using a novel hierarchical labeling taxonomy encompassing phase, task, and action triplets, capturing intricate surgical workflows. To validate this dataset, we benchmarked deep learning models, including transformer-based architectures. Our in silico evaluation demonstrates high accuracy (up to 0.99) and F1 scores (up to 0.99) for key phases like Setup and Suturing. The STALNet model, tested with ConvNeXt, ViT, and SWIN V2 encoders, consistently segmented well-represented phases. TEMSET-24K provides a critical benchmark, propelling state-of-the-art solutions in surgical data science.

Open Foundation Models in Healthcare: Challenges, Paradoxes, and Opportunities with GenAI Driven Personalized Prescription

Feb 04, 2025

Abstract:In response to the success of proprietary Large Language Models (LLMs) such as OpenAI's GPT-4, there is a growing interest in developing open, non-proprietary LLMs and AI foundation models (AIFMs) for transparent use in academic, scientific, and non-commercial applications. Despite their inability to match the refined functionalities of their proprietary counterparts, open models hold immense potential to revolutionize healthcare applications. In this paper, we examine the prospects of open-source LLMs and AIFMs for developing healthcare applications and make two key contributions. Firstly, we present a comprehensive survey of the current state-of-the-art open-source healthcare LLMs and AIFMs and introduce a taxonomy of these open AIFMs, categorizing their utility across various healthcare tasks. Secondly, to evaluate the general-purpose applications of open LLMs in healthcare, we present a case study on personalized prescriptions. This task is particularly significant due to its critical role in delivering tailored, patient-specific medications that can greatly improve treatment outcomes. In addition, we compare the performance of open-source models with proprietary models in settings with and without Retrieval-Augmented Generation (RAG). Our findings suggest that, although less refined, open LLMs can achieve performance comparable to proprietary models when paired with grounding techniques such as RAG. Furthermore, to highlight the clinical significance of LLM-empowered personalized prescriptions, we perform a subjective assessment by an expert clinician. We also elaborate on ethical considerations and potential risks associated with the misuse of powerful LLMs and AIFMs, highlighting the need for a cautious and responsible implementation in healthcare.

Temporal Graph MLP Mixer for Spatio-Temporal Forecasting

Jan 17, 2025

Abstract:Spatiotemporal forecasting is critical in applications such as traffic prediction, climate modeling, and environmental monitoring. However, the prevalence of missing data in real-world sensor networks significantly complicates this task. In this paper, we introduce the Temporal Graph MLP-Mixer (T-GMM), a novel architecture designed to address these challenges. The model combines node-level processing with patch-level subgraph encoding to capture localized spatial dependencies while leveraging a three-dimensional MLP-Mixer to handle temporal, spatial, and feature-based dependencies. Experiments on the AQI, ENGRAD, PV-US and METR-LA datasets demonstrate the model's ability to effectively forecast even in the presence of significant missing data. While not surpassing state-of-the-art models in all scenarios, the T-GMM exhibits strong learning capabilities, particularly in capturing long-range dependencies. These results highlight its potential for robust, scalable spatiotemporal forecasting.

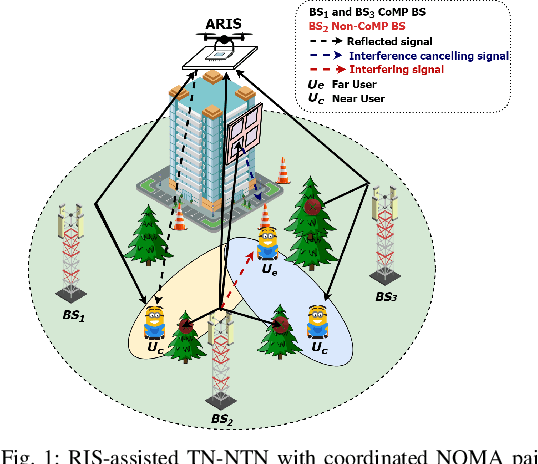

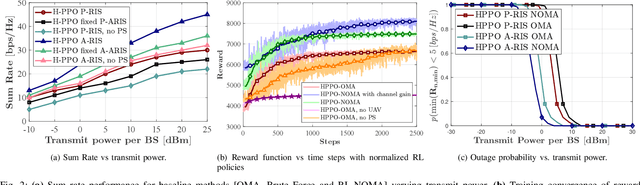

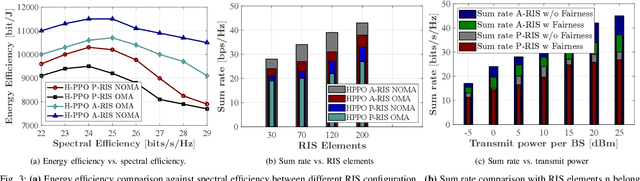

Deep Reinforcement Learning Optimized Intelligent Resource Allocation in Active RIS-Integrated TN-NTN Networks

Jan 11, 2025

Abstract:This work explores the deployment of active reconfigurable intelligent surfaces (A-RIS) in integrated terrestrial and non-terrestrial networks (TN-NTN) while utilizing coordinated multipoint non-orthogonal multiple access (CoMP-NOMA). Our system model incorporates a UAV-assisted RIS in coordination with a terrestrial RIS which aims for signal enhancement. We aim to maximize the sum rate for all users in the network using a custom hybrid proximal policy optimization (H-PPO) algorithm by optimizing the UAV trajectory, base station (BS) power allocation factors, active RIS amplification factor, and phase shift matrix. We integrate edge users into NOMA pairs to achieve diversity gain, further enhancing the overall experience for edge users. Exhaustive comparisons are made with passive RIS-assisted networks to demonstrate the superior efficacy of active RIS in terms of energy efficiency, outage probability, and network sum rate.

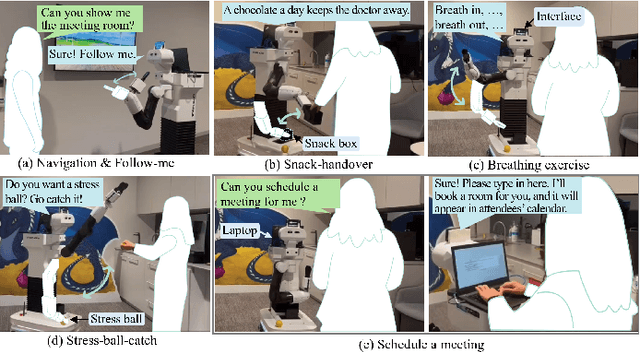

OfficeMate: Pilot Evaluation of an Office Assistant Robot

Jan 09, 2025

Abstract:Office Assistant Robots (OARs) offer a promising solution to proactively provide in-situ support to enhance employee well-being and productivity in office spaces. We introduce OfficeMate, a social OAR designed to assist with practical tasks, foster social interaction, and promote health and well-being. Through a pilot evaluation with seven participants in an office environment, we found that users see potential in OARs for reducing stress and promoting healthy habits and value the robot's ability to provide companionship and physical activity reminders in the office space. However, concerns regarding privacy, communication, and the robot's interaction timing were also raised. The feedback highlights the need to carefully consider the robot's appearance and behaviour to ensure it enhances user experience and aligns with office social norms. We believe these insights will better inform the development of adaptive, intelligent OAR systems for future office space integration.

REFOL: Resource-Efficient Federated Online Learning for Traffic Flow Forecasting

Nov 21, 2024

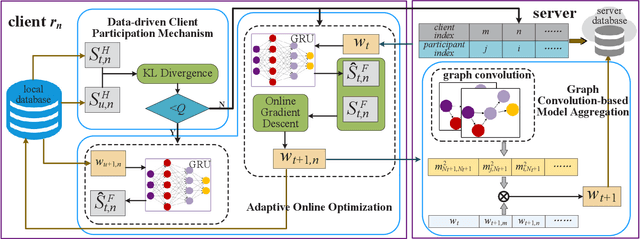

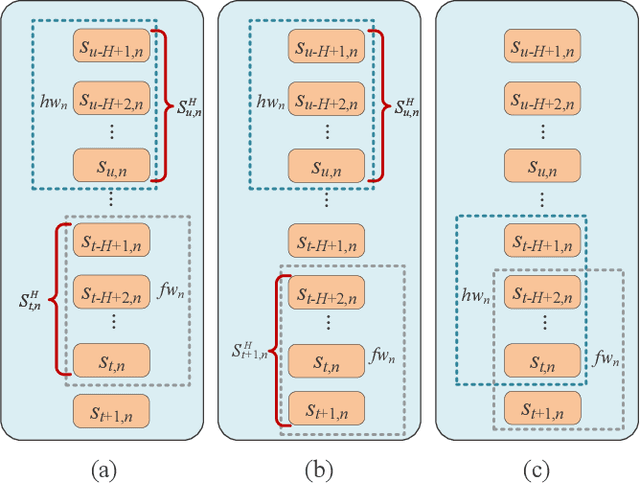

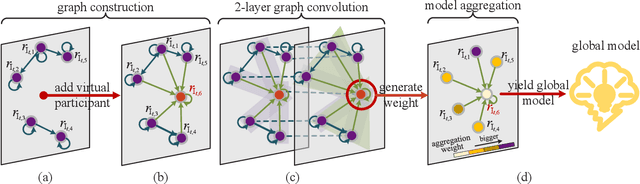

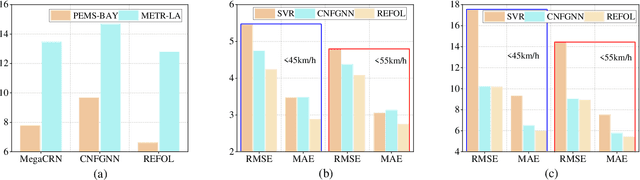

Abstract:Multiple federated learning (FL) methods have been proposed for traffic flow forecasting (TFF) to avoid the heavy transmission and privacy leakage that result from disclosing raw data in centralized methods. However, these FL methods adopt offline learning, which may yield subpar performance when concept drift occurs, i.e., when the distributions of historical and future data differ. Online learning can detect concept drift during model training and is thus more applicable to TFF. Nevertheless, the existing federated online learning method for TFF fails to solve the concept drift problem efficiently and causes tremendous computing and communication overhead. Therefore, we propose a novel method named Resource-Efficient Federated Online Learning (REFOL) for TFF, which guarantees prediction performance in a communication-lightweight and computation-efficient way. Specifically, we design a data-driven client participation mechanism to detect the occurrence of concept drift and determine whether each client needs to participate. Subsequently, we propose an adaptive online optimization strategy, which guarantees prediction performance while avoiding meaningless model updates. Then, a graph convolution-based model aggregation mechanism is designed to assess participants' contributions based on spatial correlation without incurring extra communication and computing costs on clients. Finally, we conduct extensive experiments on real-world datasets to demonstrate the superiority of REFOL in terms of prediction improvement and resource savings.

Real-time and Downtime-tolerant Fault Diagnosis for Railway Turnout Machines (RTMs) Empowered with Cloud-Edge Pipeline Parallelism

Nov 04, 2024

Abstract:Railway Turnout Machines (RTMs) are mission-critical components of the railway transportation infrastructure, responsible for directing trains onto desired tracks. For safety assurance applications, especially in early-warning scenarios, RTM faults are expected to be detected as early as possible on a continuous 24/7 basis. However, limited emphasis has been placed on distributed model inference frameworks that can meet the inference latency and reliability requirements of such mission-critical fault diagnosis systems. In this paper, an edge-cloud collaborative early-warning system is proposed to enable real-time and downtime-tolerant fault diagnosis of RTMs, providing a new paradigm for the deployment of models in safety-critical scenarios. Firstly, a modular fault diagnosis model is designed specifically for distributed deployment, which utilizes a hierarchical architecture consisting of the prior knowledge module, subordinate classifiers, and a fusion layer for enhanced accuracy and parallelism. Then, a cloud-edge collaborative framework leveraging pipeline parallelism, namely CEC-PA, is developed to minimize the overhead resulting from distributed task execution and context exchange by strategically partitioning and offloading model components across cloud and edge. Additionally, an election consensus mechanism is implemented within CEC-PA to ensure system robustness during coordinator node downtime. Comparative experiments and ablation studies are conducted to validate the effectiveness of the proposed distributed fault diagnosis approach. Our ensemble-based fault diagnosis model achieves a remarkable 97.4% accuracy on a real-world dataset collected by Nanjing Metro in Jiangsu Province, China. Meanwhile, CEC-PA demonstrates superior recovery proficiency during node disruptions and speed-up ranging from 1.98x to 7.93x in total inference time compared to its counterparts.
