Muhammad Ahmed Mohsin

Continuous-Utility Direct Preference Optimization

Jan 31, 2026

Abstract:Large language model reasoning is often treated as a monolithic capability, relying on binary preference supervision that fails to capture partial progress or fine-grained reasoning quality. We introduce Continuous Utility Direct Preference Optimization (CU-DPO), a framework that aligns models to a portfolio of prompt-based cognitive strategies by replacing binary labels with continuous scores that capture fine-grained reasoning quality. We prove that learning with K strategies yields a Theta(K log K) improvement in sample complexity over binary preferences, and that DPO converges to the entropy-regularized utility-maximizing policy. To exploit this signal, we propose a two-stage training pipeline: (i) strategy selection, which optimizes the model to choose the best strategy for a given problem via best-vs-all comparisons, and (ii) execution refinement, which trains the model to correctly execute the selected strategy using margin-stratified pairs. On mathematical reasoning benchmarks, CU-DPO improves strategy selection accuracy from 35-46 percent to 68-78 percent across seven base models, yielding consistent downstream reasoning gains of up to 6.6 points on in-distribution datasets with effective transfer to out-of-distribution tasks.
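The abstract does not state the CU-DPO objective, so the following is only an illustrative sketch of the general idea of swapping a binary preference label for a continuous score. `binary_dpo_loss` is the standard DPO loss for a single pair; `soft_label_dpo_loss`, the `temperature` parameter, and the mapping from utility gap to soft target are assumptions made here for illustration and should not be read as the paper's method.

```python
import math


def sigmoid(z):
    """Logistic function."""
    return 1.0 / (1.0 + math.exp(-z))


def dpo_logit(logp_a, logp_b, ref_logp_a, ref_logp_b, beta=0.1):
    """Scaled gap between the policy-vs-reference log-ratios of responses a and b."""
    return beta * ((logp_a - ref_logp_a) - (logp_b - ref_logp_b))


def binary_dpo_loss(logit):
    """Standard DPO loss when response a carries the binary 'preferred' label."""
    return -math.log(sigmoid(logit))


def soft_label_dpo_loss(logit, utility_a, utility_b, temperature=1.0):
    """Hypothetical continuous-score variant (an assumption, not the paper's loss).

    The utility gap is squashed into a soft target in [0, 1], and the loss is
    the cross-entropy between that target and the model's implied preference.
    """
    target = sigmoid((utility_a - utility_b) / temperature)
    p = sigmoid(logit)
    return -(target * math.log(p) + (1.0 - target) * math.log(1.0 - p))


if __name__ == "__main__":
    # Made-up log-probabilities for one pair of responses.
    logit = dpo_logit(-12.0, -15.0, -13.0, -14.0, beta=0.1)
    print("binary loss:", binary_dpo_loss(logit))
    # A small utility gap gives a target near 0.5, a large gap a target near 1.
    print("soft loss, small gap:", soft_label_dpo_loss(logit, 0.55, 0.50))
    print("soft loss, large gap:", soft_label_dpo_loss(logit, 0.95, 0.10, temperature=0.1))
```

In this sketch a very large utility gap drives the soft target to 1, at which point the soft-label loss coincides with the binary DPO loss; smaller gaps penalize overconfident preferences.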

On the Fundamental Limits of LLMs at Scale

Nov 17, 2025

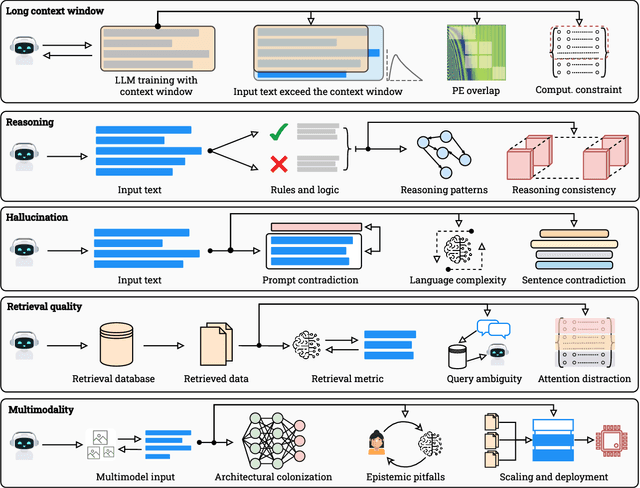

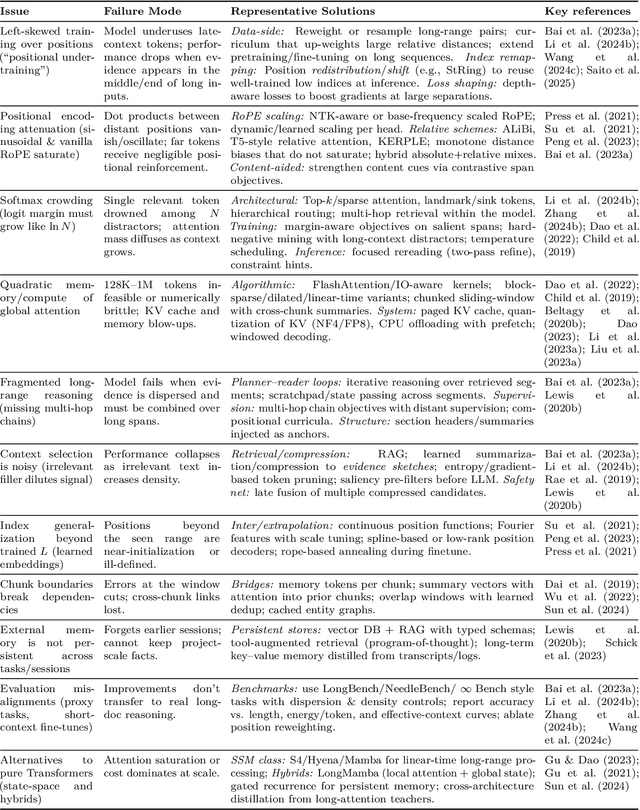

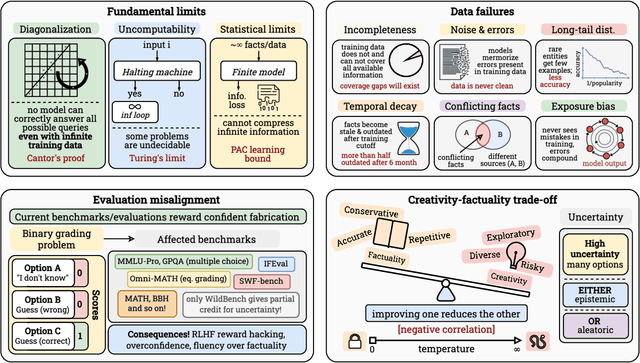

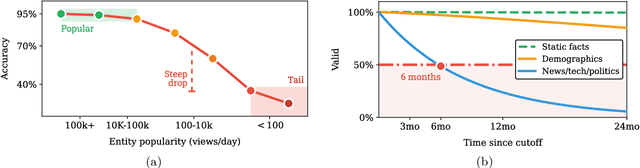

Abstract:Large Language Models (LLMs) have benefited enormously from scaling, yet these gains are bounded by five fundamental limitations: (1) hallucination, (2) context compression, (3) reasoning degradation, (4) retrieval fragility, and (5) multimodal misalignment. While existing surveys describe these phenomena empirically, they lack a rigorous theoretical synthesis connecting them to the foundational limits of computation, information, and learning. This work closes that gap by presenting a unified, proof-informed framework that formalizes the innate theoretical ceilings of LLM scaling. First, computability and uncomputability imply an irreducible residue of error: for any computably enumerable model family, diagonalization guarantees inputs on which some model must fail, and undecidable queries (e.g., halting-style tasks) induce infinite failure sets for all computable predictors. Second, information-theoretic and statistical constraints bound attainable accuracy even on decidable tasks, finite description length enforces compression error, and long-tail factual knowledge requires prohibitive sample complexity. Third, geometric and computational effects compress long contexts far below their nominal size due to positional under-training, encoding attenuation, and softmax crowding. We further show how likelihood-based training favors pattern completion over inference, how retrieval under token limits suffers from semantic drift and coupling noise, and how multimodal scaling inherits shallow cross-modal alignment. Across sections, we pair theorems and empirical evidence to outline where scaling helps, where it saturates, and where it cannot progress, providing both theoretical foundations and practical mitigation paths like bounded-oracle retrieval, positional curricula, and sparse or hierarchical attention.

Towards 6G Intelligence: The Role of Generative AI in Future Wireless Networks

Aug 27, 2025

Abstract:Ambient intelligence (AmI) is a computing paradigm in which physical environments are embedded with sensing, computation, and communication so they can perceive people and context, decide appropriate actions, and respond autonomously. Realizing AmI at global scale requires sixth-generation (6G) wireless networks with capabilities for real-time perception, reasoning, and action aligned with human behavior and mobility patterns. We argue that Generative Artificial Intelligence (GenAI) is the creative core of such environments. Unlike traditional AI, GenAI learns data distributions and can generate realistic samples, making it well suited to close key AmI gaps, including generating synthetic sensor and channel data in under-observed areas, translating user intent into compact, semantic messages, predicting future network conditions for proactive control, and updating digital twins without compromising privacy. This chapter reviews foundational GenAI models (GANs, VAEs, diffusion models, and generative transformers) and connects them to practical AmI use cases, including spectrum sharing, ultra-reliable low-latency communication, intelligent security, and context-aware digital twins. We also examine how 6G enablers, such as edge and fog computing, IoT device swarms, intelligent reflecting surfaces (IRS), and non-terrestrial networks, can host or accelerate distributed GenAI. Finally, we outline open challenges in energy-efficient on-device training, trustworthy synthetic data, federated generative learning, and AmI-specific standardization. We show that GenAI is not a peripheral addition, but a foundational element for transforming 6G from a faster network into an ambient intelligent ecosystem.

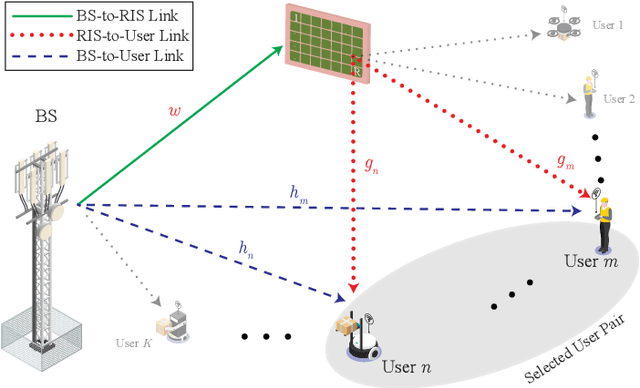

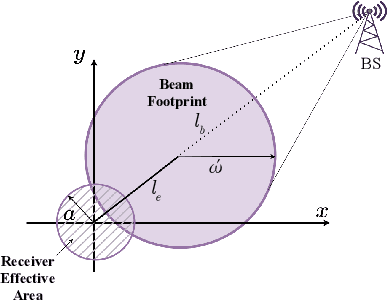

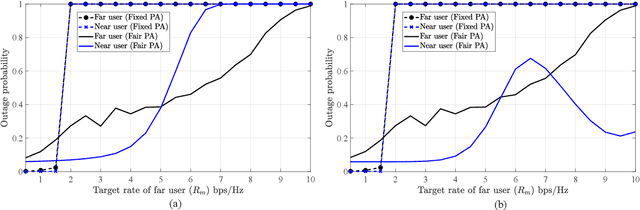

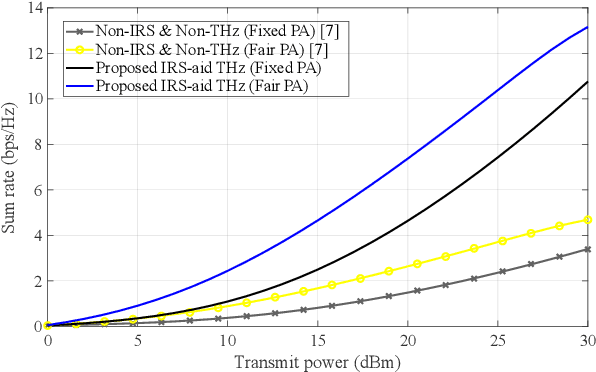

TeraRIS NOMA-MIMO Communications for 6G and Beyond Industrial Networks

Aug 07, 2025

Abstract:This paper presents a joint framework that integrates reconfigurable intelligent surfaces (RISs) with Terahertz (THz) communications and non-orthogonal multiple access (NOMA) to enhance smart industrial communications. The proposed system leverages the advantages of RIS and THz bands to improve spectral efficiency, coverage, and reliability, which are key requirements for industrial automation and real-time communications in future 6G networks and beyond. Within this framework, two power allocation strategies are investigated: the first optimally distributes power between near and far industrial nodes, and the second prioritizes network demands to enhance system performance further. A performance evaluation is conducted to compare the sum rate and outage probability against a fixed power allocation scheme. Our scheme achieves up to a 23% sum rate gain over fixed PA at 30 dBm. Simulation results validate the theoretical analysis, demonstrating the effectiveness and robustness of the RIS-assisted NOMA MIMO framework for THz-enabled industrial communications.
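To make the near/far power split concrete, here is a minimal sketch of the textbook two-user downlink power-domain NOMA rate expressions with successive interference cancellation at the near user. It is not the paper's RIS/THz system model or its allocation algorithm: the channel gains, noise power, far-user rate floor, grid search, and the fixed 0.8 split used for comparison are all illustrative assumptions.

```python
import math


def noma_two_user_rates(p_total, alpha_far, gain_near, gain_far, noise):
    """Return (near_rate, far_rate) in bit/s/Hz for two-user downlink NOMA.

    alpha_far is the fraction of transmit power given to the far user;
    the near user receives the remaining 1 - alpha_far.
    """
    alpha_near = 1.0 - alpha_far
    # Far user decodes its own signal and treats the near user's as interference.
    sinr_far = (alpha_far * p_total * gain_far) / (alpha_near * p_total * gain_far + noise)
    # Near user cancels the far user's signal (SIC), then decodes interference-free.
    snr_near = (alpha_near * p_total * gain_near) / noise
    return math.log2(1.0 + snr_near), math.log2(1.0 + sinr_far)


def best_split(p_total, gain_near, gain_far, noise, min_far_rate, steps=1000):
    """Grid-search the far-user power fraction that maximizes the sum rate
    while keeping the far user's rate at or above min_far_rate (an assumed
    constraint). Returns (alpha_far, sum_rate), or None if infeasible."""
    best = None
    for i in range(steps + 1):
        alpha_far = i / steps
        r_near, r_far = noma_two_user_rates(p_total, alpha_far, gain_near, gain_far, noise)
        if r_far >= min_far_rate and (best is None or r_near + r_far > best[1]):
            best = (alpha_far, r_near + r_far)
    return best


if __name__ == "__main__":
    p_watts = 10 ** ((30 - 30) / 10)  # 30 dBm converted to watts
    # Illustrative, made-up channel gains and noise power.
    fixed = sum(noma_two_user_rates(p_watts, 0.8, 1.0, 0.1, 0.01))
    searched = best_split(p_watts, 1.0, 0.1, 0.01, min_far_rate=1.0)
    print("fixed split sum rate:", fixed)
    print("searched split (alpha_far, sum rate):", searched)
```

Without the far-user rate floor, maximizing the sum rate alone would hand all power to the near user, which is why the sketch includes a constraint.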

Meta-Thinking in LLMs via Multi-Agent Reinforcement Learning: A Survey

Apr 20, 2025

Abstract:This survey explores the development of meta-thinking capabilities in Large Language Models (LLMs) from a Multi-Agent Reinforcement Learning (MARL) perspective. Meta-thinking, which comprises self-reflection, assessment, and control of thinking processes, is an important next step in enhancing LLM reliability, flexibility, and performance, particularly for complex or high-stakes tasks. The survey begins by analyzing current LLM limitations, such as hallucinations and the lack of internal self-assessment mechanisms. It then discusses newer methods, including RL from human feedback (RLHF), self-distillation, and chain-of-thought prompting, along with the limitations of each. The core of the survey examines how multi-agent architectures, namely supervisor-agent hierarchies, agent debates, and theory of mind frameworks, can emulate human-like introspective behavior and enhance LLM robustness. By exploring reward mechanisms, self-play, and continuous learning methods in MARL, this survey gives a comprehensive roadmap to building introspective, adaptive, and trustworthy LLMs. Evaluation metrics, datasets, and future research avenues, including neuroscience-inspired architectures and hybrid symbolic reasoning, are also discussed.

Resource Allocation for RIS-Assisted CoMP-NOMA Networks using Reinforcement Learning

Apr 01, 2025

Abstract:This thesis delves into the forefront of wireless communication by exploring the synergistic integration of three transformative technologies: STAR-RIS, CoMP, and NOMA. Driven by the ever-increasing demand for higher data rates, improved spectral efficiency, and expanded coverage in the evolving landscape of 6G development, this research investigates the potential of these technologies to revolutionize future wireless networks. The thesis analyzes the performance gains achievable through strategic deployment of STAR-RIS, focusing on mitigating inter-cell interference, enhancing signal strength, and extending coverage to cell-edge users. Resource sharing strategies for STAR-RIS elements are explored, optimizing both transmission and reflection functionalities. Analytical frameworks are developed to quantify the benefits of STAR-RIS assisted CoMP-NOMA networks under realistic channel conditions, deriving key performance metrics such as ergodic rates and outage probabilities. Additionally, the research delves into energy-efficient design approaches for CoMP-NOMA networks incorporating RIS, proposing novel RIS configurations and optimization algorithms to achieve a balance between performance and energy consumption. Furthermore, the application of Deep Reinforcement Learning (DRL) techniques for intelligent and adaptive optimization in aerial RIS-assisted CoMP-NOMA networks is explored, aiming to maximize network sum rate while meeting user quality of service requirements. Through a comprehensive investigation of these technologies and their synergistic potential, this thesis contributes valuable insights into the future of wireless communication, paving the way for the development of more efficient, reliable, and sustainable networks capable of meeting the demands of our increasingly connected world.

Retrieval Augmented Generation with Multi-Modal LLM Framework for Wireless Environments

Mar 09, 2025

Abstract:Future wireless networks aim to deliver high data rates and lower power consumption while ensuring seamless connectivity, necessitating robust optimization. Large language models (LLMs) have been deployed for generalized optimization scenarios. To take advantage of generative AI (GAI) models, we propose retrieval augmented generation (RAG) for multi-sensor wireless environment perception. Utilizing domain-specific prompt engineering, we apply RAG to efficiently harness multimodal data inputs from sensors in a wireless environment. Key pre-processing pipelines including image-to-text conversion, object detection, and distance calculations for multimodal RAG input from multi-sensor data are proposed to obtain a unified vector database crucial for optimizing LLMs in global wireless tasks. Our evaluation, conducted with OpenAI's GPT and Google's Gemini models, demonstrates an 8%, 8%, 10%, 7%, and 12% improvement in relevancy, faithfulness, completeness, similarity, and accuracy, respectively, compared to conventional LLM-based designs. Furthermore, our RAG-based LLM framework with vectorized databases is computationally efficient, providing real-time convergence under latency constraints.

Intelligent Spectrum Sharing in Integrated TN-NTNs: A Hierarchical Deep Reinforcement Learning Approach

Mar 09, 2025

Abstract:Integrating non-terrestrial networks (NTNs) with terrestrial networks (TNs) is key to enhancing coverage, capacity, and reliability in future wireless communications. However, the multi-tier, heterogeneous architecture of these integrated TN-NTNs introduces complex challenges in spectrum sharing and interference management. Conventional optimization approaches struggle to handle the high-dimensional decision space and dynamic nature of these networks. This paper proposes a novel hierarchical deep reinforcement learning (HDRL) framework to address these challenges and enable intelligent spectrum sharing. The proposed framework leverages the inherent hierarchy of the network, with separate policies for each tier, to learn and optimize spectrum allocation decisions at different timescales and levels of abstraction. By decomposing the complex spectrum sharing problem into manageable sub-tasks and allowing for efficient coordination among the tiers, the HDRL approach offers a scalable and adaptive solution for spectrum management in future TN-NTNs. Simulation results demonstrate the superior performance of the proposed framework compared to traditional approaches, highlighting its potential to enhance spectral efficiency and network capacity in dynamic, multi-tier environments.

Computation Offloading Strategies in Integrated Terrestrial and Non-Terrestrial Networks

Feb 21, 2025

Abstract:The rapid growth of computation-intensive applications like augmented reality, autonomous driving, remote healthcare, and smart cities has exposed the limitations of traditional terrestrial networks, particularly in terms of inadequate coverage, limited capacity, and high latency in remote areas. This chapter explores how integrated terrestrial and non-terrestrial networks (IT-NTNs) can address these challenges and enable efficient computation offloading. We examine mobile edge computing (MEC) and its evolution toward multiple-access edge computing, highlighting the critical role computation offloading plays for resource-constrained devices. We then discuss the architecture of IT-NTNs, focusing on how terrestrial base stations, unmanned aerial vehicles (UAVs), high-altitude platforms (HAPs), and LEO satellites work together to deliver ubiquitous connectivity. Furthermore, we analyze various computation offloading strategies, including edge, cloud, and hybrid offloading, outlining their strengths and weaknesses. Key enabling technologies such as NOMA, mmWave/THz communication, and reconfigurable intelligent surfaces (RIS) are also explored as essential components of existing algorithms for resource allocation, task offloading decisions, and mobility management. Finally, we conclude by highlighting the transformative impact of computation offloading in IT-NTNs across diverse application areas and discuss key challenges and future research directions, emphasizing the potential of these networks to revolutionize communication and computation paradigms.

Successive Interference Cancellation-aided Diffusion Models for Joint Channel Estimation and Data Detection in Low Rank Channel Scenarios

Jan 20, 2025

Abstract:This paper proposes a novel joint channel-estimation and source-detection algorithm using successive interference cancellation (SIC)-aided generative score-based diffusion models. Prior work in this area focuses on massive MIMO scenarios, which are typically characterized by full-rank channels, and fails in low-rank channel scenarios. The proposed algorithm outperforms existing methods in joint source-channel estimation, especially in low-rank scenarios where the number of users exceeds the number of antennas at the access point (AP). The proposed score-based iterative diffusion process estimates the gradient of the prior distribution on partial channels, and recursively updates the estimated channel parts as well as the source. Extensive simulation results show that the proposed method outperforms the baseline methods in terms of normalized mean squared error (NMSE) and symbol error rate (SER) in both full-rank and low-rank channel scenarios, while having a more dominant effect in the latter, at various signal-to-noise ratios (SNR).
