Ramtin Tabatabaei

Gazing at Failure: Investigating Human Gaze in Response to Robot Failure in Collaborative Tasks

Feb 24, 2025

Abstract: Robots are prone to making errors, which can negatively impact their credibility as teammates during collaborative tasks with human users. Detecting and recovering from these failures is crucial for maintaining an effective level of trust from users. However, robots may fail without being aware of it. One way to detect such failures could be by analysing humans' non-verbal behaviours and reactions to failures. This study investigates how human gaze dynamics can signal a robot's failure and examines how different types of failures affect people's perception of the robot. We conducted a user study with 27 participants collaborating with a robotic mobile manipulator to solve tangram puzzles. The robot was programmed to experience two types of failures (executional and decisional), occurring either at the beginning or end of the task, with or without acknowledgement of the failure. Our findings reveal that the type and timing of the robot's failure significantly affect participants' gaze behaviour and perception of the robot. Specifically, executional failures led to more gaze shifts and increased focus on the robot, while decisional failures resulted in lower entropy in gaze transitions among areas of interest, particularly when the failure occurred at the end of the task. These results highlight that gaze can serve as a reliable indicator of robot failures and their types, and could also be used to predict the appropriate recovery actions.
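The abstract does not say how entropy in gaze transitions was computed. As a rough illustration only, the sketch below uses one common definition: the conditional Shannon entropy of first-order transitions between areas of interest (AOIs), weighted by how often each AOI is the source of a transition. The function name `gaze_transition_entropy` and the AOI labels are hypothetical and are not taken from the paper.

```python
import math
from collections import Counter


def gaze_transition_entropy(aoi_sequence):
    """Conditional entropy (in bits) of AOI-to-AOI transitions.

    Assumption: a first-order Markov view of gaze, where each source AOI
    is weighted by its empirical share of all observed transitions.
    This may differ from the measure used in the study.
    """
    # Pair each fixation's AOI with the AOI of the next fixation.
    transitions = list(zip(aoi_sequence, aoi_sequence[1:]))
    if not transitions:
        return 0.0

    pair_counts = Counter(transitions)
    source_counts = Counter(src for src, _ in transitions)
    total = len(transitions)

    entropy = 0.0
    for (src, _dst), count in pair_counts.items():
        p_src = source_counts[src] / total           # share of transitions leaving src
        p_dst_given_src = count / source_counts[src]  # P(next AOI | current AOI)
        entropy -= p_src * p_dst_given_src * math.log2(p_dst_given_src)
    return entropy


# Hypothetical AOI labels, for illustration only.
predictable = ["robot", "puzzle", "robot", "puzzle", "robot"]
varied = ["robot", "puzzle", "robot", "face", "robot",
          "puzzle", "robot", "face", "robot"]

print(gaze_transition_entropy(predictable))
print(gaze_transition_entropy(varied))
```

Under this definition, a lower value means the next gaze target is more predictable from the current one, which is one way to read the "lower entropy" reported for decisional failures.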

ROSAnnotator: A Web Application for ROSBag Data Analysis in Human-Robot Interaction

Jan 13, 2025

Abstract: Human-robot interaction (HRI) is an interdisciplinary field that utilises both quantitative and qualitative methods. While ROSBags, a file format within the Robot Operating System (ROS), offer an efficient means of collecting temporally synchronised multimodal data in empirical studies with real robots, there is a lack of tools specifically designed to integrate qualitative coding and analysis functions with ROSBags. To address this gap, we developed ROSAnnotator, a web-based application that incorporates a multimodal Large Language Model (LLM) to support both manual and automated annotation of ROSBag data. ROSAnnotator currently facilitates video, audio, and transcription annotations and provides an open interface for custom ROS messages and tools. By using ROSAnnotator, researchers can streamline the qualitative analysis process, create a more cohesive analysis pipeline, and quickly access statistical summaries of annotations, thereby enhancing the overall efficiency of HRI data analysis. https://github.com/CHRI-Lab/ROSAnnotator

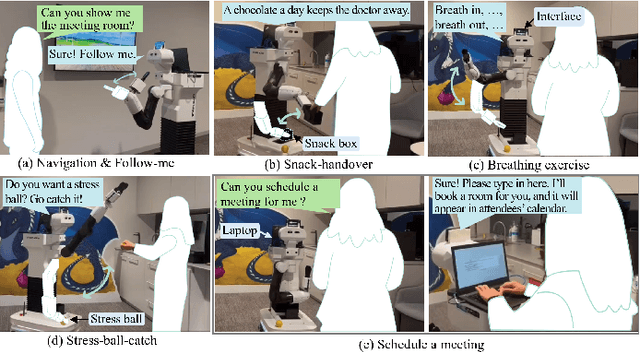

OfficeMate: Pilot Evaluation of an Office Assistant Robot

Jan 09, 2025

Abstract: Office Assistant Robots (OARs) offer a promising solution to proactively provide in-situ support to enhance employee well-being and productivity in office spaces. We introduce OfficeMate, a social OAR designed to assist with practical tasks, foster social interaction, and promote health and well-being. Through a pilot evaluation with seven participants in an office environment, we found that users see potential in OARs for reducing stress and promoting healthy habits, and value the robot's ability to provide companionship and physical activity reminders in the office space. However, concerns regarding privacy, communication, and the robot's interaction timing were also raised. The feedback highlights the need to carefully consider the robot's appearance and behaviour to ensure it enhances user experience and aligns with office social norms. We believe these insights will better inform the development of adaptive, intelligent OAR systems for future office space integration.
